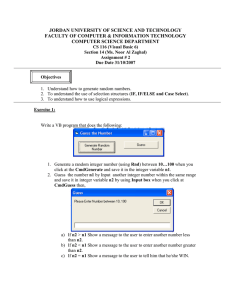

CUAHSI Community Observations Data Model (ODM) - CUAHSI-HIS

advertisement