Active Documents and their Applicability in Distributed Environments

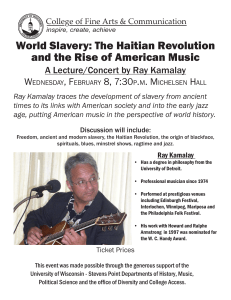
