IHSN ADP Evaluation - Final report

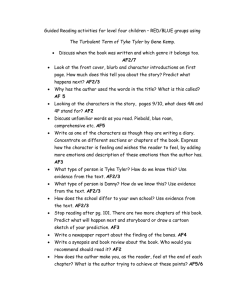
