Signal Processing 104 (2014) 319–324

Contents lists available at ScienceDirect

Signal Processing

journal homepage: www.elsevier.com/locate/sigpro

Fast communication

New blind source separation method of independent/

dependent sources

A. Keziou a,n, H. Fenniri b, A. Ghazdali c, E. Moreau d,e

a

Laboratoire de Mathématiques de Reims EA 4535, Université de Reims Champagne-Ardenne, France

CReSTIC, Université de Reims Champagne-Ardenne, France

LAMAI, FST, Université Cadi-Ayyad, Morocco

d

ENSAM, LSIS, UMR CNRS 7296, Université du Sud-Toulon-Var, France

e

Aix Marseille Université, France

b

c

a r t i c l e i n f o

abstract

Article history:

Received 24 January 2014

Received in revised form

4 April 2014

Accepted 19 April 2014

Available online 30 April 2014

We introduce a new blind source separation approach, based on modified Kullback–

Leibler divergence between copula densities, for both independent and dependent source

component signals. In the classical case of independent source components, the proposed

method generalizes the mutual information (between probability densities) procedure.

Moreover, it has the great advantage to be naturally extensible to separate mixtures of

dependent source components. Simulation results are presented showing the convergence and the efficiency of the proposed algorithms.

& 2014 Elsevier B.V. All rights reserved.

Keywords:

Blind source separation

Modified Kullback–Leibler divergence

between copulas

Mutual information

1. Introduction

Blind source separation (BSS) is an instrumental problem in signal processing which has been addressed in the

last three decades. We consider an instantaneous linear

mixture described by

xðtÞ ≔AsðtÞ þnðtÞ A Rp ;

ð1Þ

where A A Rpp is an unknown non-singular mixing

matrix, sðtÞ ≔ðs1 ðtÞ; …; sp ðtÞÞ > is the unknown vector of

source signals to be estimated from xðtÞ ≔ðx1 ðtÞ; …; xp ðtÞÞ > ,

the vector of observed signals. The number of sources and

the number of observations, for the present work, are

assumed to be equal. The presence of additive noise nðtÞ

within the mixing model complicates significantly the BSS

problem. It is reduced by applying some form of

n

Corresponding author.

E-mail addresses: amor.keziou@univ-reims.fr (A. Keziou),

hassan.fenniri@univ-reims.fr (H. Fenniri),

a.ghazdali@gmail.com (A. Ghazdali), moreau@univ-tln.fr (E. Moreau).

http://dx.doi.org/10.1016/j.sigpro.2014.04.017

0165-1684/& 2014 Elsevier B.V. All rights reserved.

preprocessing such as denoising the observed signals

through regularization approach, see e.g. [15]. The goal is

to estimate the vector source signals sðtÞ using only the

observed signals xðtÞ. The estimate yðtÞ of the source

signals sðtÞ can be written as

yðtÞ ¼ BxðtÞ;

ð2Þ

pp

where B A R

is the de-mixing matrix. The question is

b which has to be

how to obtain the de-mixing matrix B

close to the ideal solution A 1 , using only the observed

signals xðtÞ? It is well known, by Darmois theorem, that if

the source components are mutually independent and at

b

most one component is Gaussian, a consistent estimate B

of A 1 (up to scale and permutation indeterminacies of

rows) is the one that makes the components of the vector

yðtÞ independent; see e.g. [6]. The corresponding signals

b

b ðtÞ ≔BxðtÞ

y

are the estimate of the source signals sðtÞ.

Under the above hypotheses, many procedures have been

proposed in the literature. Some of these procedures use

second or higher order statistics, see e.g. [13,2] and the

references therein, others consist of optimizing (on the

320

A. Keziou et al. / Signal Processing 104 (2014) 319–324

de-mixing matrix space) an estimate of some measure of

dependency structure of the components of the vector yðtÞ.

As measures of dependence used in BSS, we find in the

literature the criterion of mutual information (MI) [14,7],

the criteria of α, β and Renyi's-divergences [5,19], and the

criteria of ϕ-divergences [15]. The procedures based on

minimizing estimates of MI are considered as the most

efficient, since this criterion can be estimated efficiently,

other procedures using divergences lead to robust method

for appropriate choice of divergence criterion [15]. In this

paper, we will focus on the criterion of MI (called also

modified Kullback–Leibler divergence), viewed as measure

of difference between copula densities, and we will use

it to propose a new BSS approach that applies in both cases

of independent or dependent source components. In the

following, we will show that the mutual information of

a random vector Y ≔ðY 1 ; …; Y p Þ > can be written as the

modified Kullback–Leibler divergence (KLm-divergence)

between the copula of independence and the copula of

the vector. Then, we propose a separation procedure based

on minimizing an appropriate estimate of KLm-divergence

between the copula density of independence and the

copula density of the vector. This approach applies in

the standard case, and we will show that the proposed

criterion can be naturally extended to separate mixture of

dependent source components. The proposed approach

can be adapted also to separate complex-valued signals. In

all the sequel, we assume that at most one source is

Gaussian, and we will treat separately the case of independent source components, and then the case of dependent source components. Chen et al. [3] proposed a BSS

algorithm (for independent source components) based

on minimizing a distance between the parameter of

the copula of the estimated source and the value of the

parameter corresponding to copula of independence. Ma

and Sun [9] proposed a different criterion combining the

MI between probability densities and Shannon entropy of

semiparametric models of copulas.

2. Brief recalls on copulas

Consider a random vector Y ≔ðY 1 ; …; Y p Þ > A Rp , p Z1,

with joint distribution function (d.f.) FY ðÞ: y A Rp ↦FY ðyÞ ≔

FY ðy1 ; …; yp Þ ≔PðY 1 ry1 ; …; Y p ryp Þ, and continuous marginal d.f.'s F Y j ðÞ: yj A R↦F Y j ðyj Þ ≔PðY j r yj Þ, 8 j ¼ 1; …; p. The

characterization theorem of Sklar [17] shows that there

exists a unique p-variate function CY ðÞ: ½0; 1p ↦½0; 1, such

that, FY ðyÞ ¼ CY ðF Y 1 ðy1 Þ; …; F Y p ðyp ÞÞ, 8 y ≔ðy1 ; …; yp Þ > A Rp .

The function CY ðÞ is called a copula and it is in itself a joint

d.f. on ½0; 1p with uniform marginals. We have for all

u ≔ðu1 ; …; up Þ > A ½0; 1p , CY ðuÞ ¼ PðF Y 1 ðY 1 Þ r u1 ; …; F Y p ðY p Þ

r up Þ. Conversely, for any marginal d.f.'s F 1 ðÞ; …; F p ðÞ,

and any copula function CðÞ, the function CðF 1 ðy1 Þ; …;

F p ðyp ÞÞ is a multivariate d.f. on Rp . On the other hand, since

the marginal d.f.'s F Y j ðÞ, j ¼ 1; …; p, are assumed to be

continuous, then the random variables F Y 1 ðY 1 Þ; …; F Y p ðY p Þ

are uniformly distributed on the interval [0, 1]. So, if the

components Y 1 ; …; Y p are statistically independent, then

the corresponding copula writes C0 ðuÞ ≔∏pj¼ 1 uj ; 8 u A

½0; 1p . It is called the copula of independence. Define,

when it exists, the copula density (of the random vector Y)

cY ðuÞ ≔ð∂p =∂u1 ⋯∂up ÞCY ðuÞ; 8 u A ½0; 1p . Hence, the copula

density of independence c0 ðÞ is the function taking the

value 1 on ½0; 1p and zero otherwise, namely,

c0 ðuÞ ≔1½0;1p ðuÞ;

8 u A ½0; 1p :

ð3Þ

Let f Y ðÞ, if it exists, be the probability density on Rp

of the random vector Y ≔ðY 1 ; …; Y p Þ > , and, respectively,

f Y 1 ðÞ; …; f Y p ðÞ, the marginal probability densities of the

random variables Y 1 ; …; Y p . Then, a straightforward computation shows that, for all y A Rp , we have

p

f Y ðyÞ ¼ ∏ f Y j ðyj ÞcY ðuÞ;

ð4Þ

j¼1

where u ≔ðu1 ; …; up Þ > ≔ðF Y 1 ðy1 Þ; …; F Y p ðyp ÞÞ > . In the

monographs by [11,8], the reader may find detailed

ingredients of the modeling theory as well as surveys of

the commonly used semiparametric copulas.

3. Mutual information and copulas

The MI of a random vector Y ≔ðY 1 ; …; Y p Þ > A Rp is

defined by

Z

∏pj¼ 1 f Y j ðyj Þ

f Y ðyÞ dy1 ⋯dyp :

MI ðY Þ ≔

log

ð5Þ

f Y ðyÞ

Rp

It is called also the modified Kullback–Leibler divergence

(KLm-divergence) between the product of the marginal

densities and the joint density of the vector. Note also that

MIðYÞ≕KLm ð∏pj¼ 1 f Y j ; f Y Þ is nonnegative and achieves its

minimum value zero if and only if (iff) f Y ðÞ ¼ ∏pj¼ 1 f Y j ðÞ,

i.e., iff the components of the random vector Y are statistically independent. An equivalent formula of (5) is

!

∏pj¼ 1 f Y j ðY j Þ

MI ðY Þ ≔E log

;

ð6Þ

f Y ðYÞ

where EðÞ is the mathematical expectation. Using the

relation (4), and applying the change variable formula for

multiple integrals, we can show that MIðYÞ can be written

as

Z

1

cY ðuÞ du≕KLm ðc0 ; cY Þ

log

MI ðY Þ ¼

cY ðuÞ

½0;1p

¼ Eðlog cY ðF Y 1 ðY 1 Þ; …; F Y p ðY p ÞÞÞ≕ HðcY Þ;

R

where HðcY Þ ≔ ½0;1p logðcY ðuÞÞcY ðuÞ du is the Shannon

entropy of the copula density cY ðÞ. The relation above

means that the MI of the random vector Y can be seen as

the KLm-divergence between the copula density of independent c0 ðÞ, see (3), and the copula density cY ðÞ of the

random vector Y. We summarize the above results in the

following proposition.

Proposition 1. Let Y A Rp be any random vector with

continuous marginal distribution functions. Then, the MI of

Y can be written as the KLm-divergence between the copula

density c0 of independence and the copula density of the

vector Y:

Z

1

MI ðY Þ ¼

cY ðuÞ du≕KLm ðc0 ; cY Þ

log

cY ðuÞ

½0;1p

¼ Eðlog cY ðF Y 1 ðY 1 Þ; …; F Y p ðY p ÞÞÞ:

ð7Þ

A. Keziou et al. / Signal Processing 104 (2014) 319–324

Moreover, KLm ðc0 ; cY Þ is nonnegative and takes the value zero

iff cY ðuÞ ¼ c0 ðuÞ≕1½0;1p ðuÞ, 8 u A ½0; 1p , i.e., iff the components of the vector Y are statistically independent.

4. A separation procedure for mixtures of independent

sources through copulas

In this section, we describe our approach based on

minimizing a nonparametric estimate of the KLm-divergence KLm ðc0 ; cY Þ, assuming that the source components

are independent. Denote by S ≔ðS1 ; …; Sp Þ > the random

source vector, X ≔AS the observed random vector and

Y ≔BX the estimated random source vector. The discrete

(noise free) version of the mixture model (1) writes xðnÞ ≔

AsðnÞ; n ¼ 1; …; N. The source signals sðnÞ; n ¼ 1; …; N, will

be considered as N i.i.d. copies of the random source vector

S, and then xðnÞ; yðnÞ ≔BxðnÞ; n ¼ 1; …; N, are, respectively,

N i.i.d. copies of the random vectors X and Y ≔BX. When

the source signal data sðnÞ; n ¼ 1; …; N, are not i.i.d., such

as cyclo-stationary sources, see e.g. [10], we can return to

the above i.i.d. case, by developing the observed signals

xðnÞ; n ¼ 1; …; N, following some harmonic or trigonometric basis, and then separate the error vector components ϵ ≔ðϵ1 ; …; ϵp Þ > from the data ϵðnÞ; n ¼ 1; …; N, which

can be assumed i.i.d. copies of ϵ. Note that, by the linearity

of the problem, the separating matrix will be the same for

the original observed signals xðÞ or the errors signals ϵðÞ.

The same methodology can be used for Section 5 below. In

view of Proposition 1, the function B↦KLm ðc0 ; cY Þ is non-

density. A more appropriate choice of the kernel kðÞ, for

estimating the copula density, can be done according to

[12], which copes with the boundary effect. The bandwidth parameters H 1 ; …; H p in (9) and h1 ; …; hp in (10) will

be chosen according to Silverman's rule of thumb, see e.g.

[16], i.e., for all j ¼ 1; …; p, we take

1=ðp þ 4Þ

1=5

4

b j N 1=ðp þ 4Þ and hj ¼ 4

b j N 1=5 ;

Hj ¼

s

Σ

p þ2

3

b j and s

b j are, respectively, the empirical standard

where Σ

deviation of the data Fb Y ðy ð1ÞÞ; …; Fb Y ðy ðNÞÞ and

j

1 N

d

KL

∑ log b

c Y Fb Y 1 y1 ðnÞ ; …; Fb Y p yp ðnÞ ;

m ðc0 ; cY Þ ≔

Nn¼1

ð8Þ

j

j

from the proper definitions of the estimates as follows:

d

b

b b

d

dKL

1 N dB c Y F Y 1 y1 ðnÞ ; …; F Y p yp ðnÞ

m ðc0 ; cY Þ

¼

∑

;

dB

Nn¼1

b

c YðFb Y 1 ðy1 ðnÞÞ; …; Fb Y p ðyp ðnÞÞÞ

ð11Þ

where d=dB ≔ð∂=∂Bij Þij , i; j ¼ 1; …; p, and

∂b

c Y ðFb Y 1 ðy1 ðnÞÞ; …; Fb Y p ðyp ðnÞÞÞ

∂Bij

¼

N

1

∑

NH 1 ⋯H p m ¼ 1

0

negative and achieves its minimum value zero iff B ¼ A

(up to scale and permutation indeterminacies). In other

b

b ðnÞ ¼ BxðnÞ;

mated source signals y

n ¼ 1; …; N. Based on

the equality (7), we propose to estimate the KLm-divergence KLm ðc0 ; cY Þ by a “plug-in” type procedure. We obtain

then the estimate

j

b the estimate of the

yj ð1Þ; …; yj ðNÞ. In order to compute B,

de-mixing matrix, we can use a gradient descent algorithm taking as initial matrix B0 ¼ I p , the p p identity

d

matrix. The gradient in B of KL

m ðc0 ; cY Þ can be explicated

1

words, we have A 1 ¼ arg inf B KLm ðc0 ; cY Þ. Hence, to

achieve separation, the idea is to minimize on B some

d

statistical estimate KL

m ðc0 ; cY Þ, of KLm ðc0 ; cY Þ, constructed

from the data yð1Þ; …; yðnÞ. The separation matrix is then

d

b ≔arg inf B KL

estimated by B

m ðc0 ; cY Þ, leading to the esti-

321

k

p

∏

j ¼ 1;j a i

k

!

Fb Y j ðyj ðmÞÞ Fb Y j ðyj ðnÞÞ

Hj

1

!

b

b

Fb Y i ðyi ðmÞÞ Fb Y i ðyi ðnÞÞ 1 ∂ F Y i ðyi ðmÞÞ F Y i ðyi ðnÞÞ A

;

∂Bij

Hi

Hi

with

∂Fb Y i ðyi ðmÞÞ

1 N

y ðℓÞ yi ðmÞ ¼

∑ k i

xj ðℓÞ xj ðmÞ

∂Bij

Nhi ℓ ¼ 1

hi

and

∂Fb Y i ðyi ðnÞÞ

1 N

y ðℓÞ yi ðnÞ ¼

∑ k i

xj ðℓÞ xj ðnÞ :

∂Bij

Nhi ℓ ¼ 1

hi

5. A solution to the BSS for dependent source

components

where

!

p

N

Fb Y j ðyj ðmÞÞ uj

1

b

c Y ð uÞ ≔

∑ ∏ k

;

NH 1 ⋯H p m ¼ 1 j ¼ 1

Hj

ð9Þ

for all u A ½0; 1p , is the kernel estimate of the copula density cY ðÞ, and Fb Y j ðxÞ, 8 j ¼ 1; …; p, is the smoothed estimate

of the marginal distribution function F Y j ðxÞ of the random

variable Yj, at any real value x A R, defined by

yj ðℓÞ x

1 N

Fb Y j ðxÞ ≔ ∑ K

;

ð10Þ

Nℓ¼1

hj

where KðÞ is the primitive of a kernel kðÞ, a symmetric

centered probability density. In our forthcoming simulation study, we will take as kernel kðÞ a standard Gaussian

We give in the following subsections a solution to

the BSS problem for mixtures of dependent component

sources. The method depends on the knowledge about the

dependency structure of the source components.

5.1. When both the model and the parameter are known

Assume that we dispose of some prior information

about the density copula of the random source vector S.

Note that this is possible for many practical problems, it

can be done, from realizations of S, by a model selection

procedure in semiparametric copula density models

fcθ ðÞ; θ A Θ Rd g, typically indexed by a multivariate parameter θ, see e.g. [4]. The parameter θ can be estimated

322

A. Keziou et al. / Signal Processing 104 (2014) 319–324

using maximum semiparametric likelihood, see [18,1].

Denote by θ0 the obtained value of θ and cθ0 ðÞ the copula

density modeling the dependency structure of the source

components. Obviously, since the source components are

assumed to be dependent, cθ0 ðÞ is different from the

density copula of independence c0 ðÞ. Hence, we naturally

replace, in the above criterion function B↦KLm ðc0 ; cY Þ, c0

by cθ0 . Moreover, we can show that the criterion function

B↦KLm ðcθ0 ; cY Þ is nonnegative and achieves its minimum

value zero iff B ¼ A 1 (up to scale and permutation

indeterminacies), i.e., A 1 ¼ arg inf B KLm ðcθ0 ; cY Þ, provided

that the copula density cθ0 ðÞ of S satisfies the following

assumption (C.A): for any regular matrix M, if the copula

density of MS is equal to cθ0 ðÞ, then M ¼ DP, where D is

diagonal and P is a permutation. Note also that the

criterion KLm ðcθ0 ; cY Þ can be written as

!!

cY ðF Y 1 ðY 1 Þ; …; F Y p ðY p ÞÞ

KLm cθ0 ; cY ¼ E log

:

cθ0 ðF Y 1 ðY 1 Þ; …; F Y p ðY p ÞÞ

So as before, we propose to estimate the de-mixing matrix

d

b ≔arg inf B KL

by B

m ðcθ0 ; cY Þ, where

bY ðFb Y 1 ðy1 ðnÞÞ; …; Fb Y p ðyp ðnÞÞÞ

1 N

c

d

KL

∑ log

:

m cθ0 ; cY ≔

Nn ¼ 1

cθ0 ðFb Y 1 ðy1 ðnÞÞ; …; Fb Y p ðyp ðnÞÞÞ

The estimates of copula density and the marginal distribub can be

tion functions are defined as before. The solution B

computed by a descent gradient algorithm. The gradient in

B can be explicitly computed in a similar way as in (11)

b

b ðnÞ ¼ BxðnÞ;

Section 4. The estimated source signals are y

indeterminacies), provided that the copula density model

fcθ ðÞ; θA Θ Rd g of S satisfies the following identifiability

assumption (C.B): for any regular matrix M, if the copula

density of MS A fcθ ðÞ; θ A Θ Rd g, then M ¼ DP, where D is

diagonal and P is a permutation.

5.3. When both the model and the parameter are unknown

In this case, we can use the following methodology. We

consider a class of L models of copula densities of the

source components, denoted M1 ≔fc1θ1 ðÞ; θ1 A Θ1 g; …; M L ≔

fcLθL ðÞ; θL A ΘL g. Here, the goal is to separate the source

signal using the true unknown model of dependency of

the sources. Assume that each model Mi, i¼1,…,L, satisfies

the identifiability condition (C.B) in the above subsection.

Then, the criterion function B↦inf ℓ ¼ 1;…;L inf θℓ A Θℓ KLm

ðcℓθℓ ; cY Þ is nonnegative and achieves its minimum value

zero iff B ¼ A 1 (up to scale and permutation indeterminacies). Hence, we propose to apply the method described

in the above subsection for each model, and then

choose the solution that minimizes the criterion over all

considered models, i.e.,

ℓn

b ≔arg inf inf KL

d

B

m ðcθ ; cY Þ

B

θ A Θ ℓn

where

ℓ

d

ℓn ≔arg min inf inf KL

m ðcθ ; cY Þ:

ℓ ¼ 1;…;L B

θ A Θℓ

6. Simulation results

n ¼ 1; …; N. We obtain then the following algorithm.

Algorithm 1. A copula based BSS algorithm for dependent

component sources.

Data : the observed signals xðnÞ; n ¼ 1; …; N

b ðnÞ; n ¼ 1; …; N

Result : the estimated sources y

Initialization : y0 ðnÞ ¼ B0 xðnÞ, B0 ¼ I p . Given ε 4 0 and μ 40

suitably choosen

Do

Update B and y:

cm ðcθ ;cY Þ

dKL

0

Bk þ 1 ¼ Bk μ

dB

B ¼ Bk

yk þ 1 ðnÞ ¼ Bk þ 1 xðnÞ; n ¼ 1; …; N

Until

J Bk þ 1 Bk J o ε

b ðnÞ ¼ yk þ 1 ðnÞ; n ¼ 1; …; N

y

5.2. When the model is known and the parameter is

unknown

Denote by fcθ ðÞ; θ A Θ Rd g the semiparametric copula

density model of the source components. Since the parameter θ is unknown, we propose to adapt the above

criterion and to estimate the demixing matrix by

b ≔arg inf B inf θ A Θ KL

d

B

m ðcθ ; cY Þ, which can be computed

using the gradient (on both B and θ) of the criterion

d

function ðB; θÞ↦KL

m ðcθ ; cY Þ. Note that the criterion function

B↦inf θ A Θ KLm ðcθ ; cY Þ is nonnegative and achieves its mini-

mum value zero iff B ¼ A 1 (up to scale and permutation

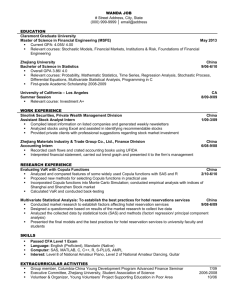

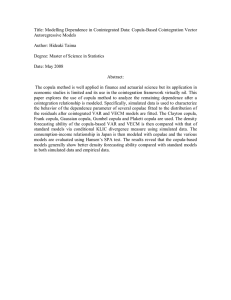

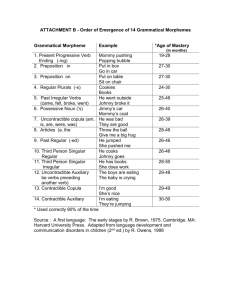

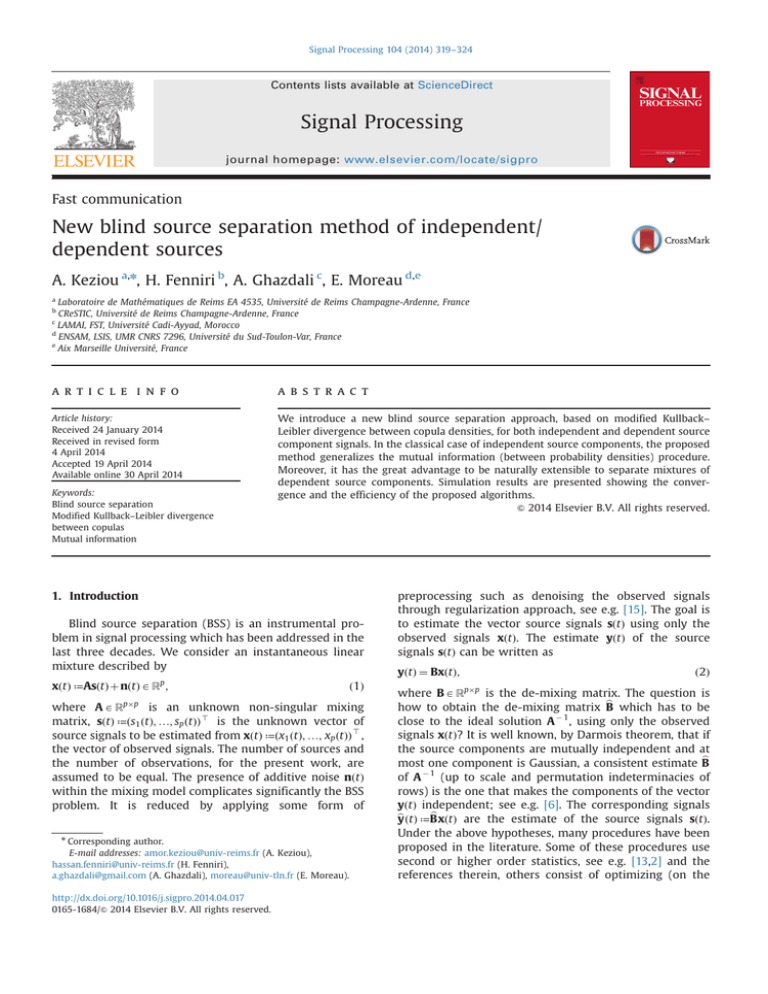

In this section, we give simulation results for the proposed method. We dealt with instantaneous mixtures

(2 mixtures of 2 sources) of five kinds of sample sources:

uniform i.i.d. with independent components (Fig. 1a); i.i.d.

sources with independent components where the marginals are drawn from the 4-ASK (Amplitude Shift Keying)

alphabets ( 3, 1,1,3 with equal probabilities 0.25) at

which was added a centered Gaussian random variable

with variance equal to 0.25 (Fig. 1b); i.i.d. (with uniform

marginals) vector sources with dependent components

generated from Fairlie–Gumbel–Morgenstern (FGM)copula with θ0 ¼ 0:8 (Fig. 2); i.i.d. (with uniform marginals

in Fig. 3a and binary phase-shift keying (BPSK)-marginals

in Fig. 3b) vector sources with dependent components

generated from Ali–Mikhail–Haq (AMH)-copula with

θ0 ¼ 0:6. The accuracy of source estimation is evaluated

through the signal-noise-ratio (SNR), defined by SNRi ≔

2

2

N

b

10 log10 ∑N

In the

n ¼ 1 si ðnÞ =∑n ¼ 1 ðy i ðnÞ si ðnÞÞ ; i ¼ 1; 2.

standard case of independent source components, the

proposed method described in Section 4 is compared with

the MI one proposed by [14], under the same conditions,

see Fig. 1. The used mixing matrix is A ≔½1 0:5; 0:5 1. The

number of samples is N ¼2000, and all simulations are

repeated 100 times. The gradient descent parameter is

taken μ ¼0.1 in all cases. We observe from Fig. 1 that the

proposed method gives good results for the standard case

of independent component sources. Moreover, we see,

from Figs. 2 and 3, that our proposed method is able to

separate, with good performance, mixtures of dependent

source components.

A. Keziou et al. / Signal Processing 104 (2014) 319–324

55

50

50

45

45

40

35

35

SNR (dB)

SNR (dB)

40

30

25

30

25

20

20

15

15

0

10

20

30

40

50

KLm_C

MI

10

KLm_C

MI

10

5

323

60

5

70

0

10

20

Iterations

30

40

50

Iterations

Fig. 1. SNRs vs iterations with independent sources. (a) Uniform sources. (b) ASK sources.

50

0.7

45

0.6

40

0.5

criterion value

SNR (dB)

35

30

25

0.4

0.3

20

0.2

15

10

5

0.1

SNR_y1

SNR_y2

0

10

20

30

40

50

0

60

0

10

20

Iterations

30

40

50

60

Iterations

55

55

50

50

45

45

40

40

35

35

SNR (dB)

SNR (dB)

Fig. 2. Separation of dependent sources from FGM copula. (a) SNRs vs iterations. (b) Criterion vs iterations.

30

25

30

25

20

20

15

15

SNR_y1

SNR_y2

10

5

0

50

100

Iterations

150

SNR_y1

SNR_y2

10

200

5

0

50

100

Iterations

Fig. 3. SNRs vs iterations with dependent sources from AMH copula. (a) Uniform sources. (b) BPSK sources.

150

200

324

A. Keziou et al. / Signal Processing 104 (2014) 319–324

7. Conclusion

We have proposed a new BSS approach by minimizing

the empirical KLm-divergence between copula densities.

The approach is able to separate instantaneous linear

mixtures of both independent and dependent source

components. In Section 6, the accuracy and the consistency of the obtained algorithms are illustrated by simulation, for 2 2 mixture-source. Other simulation examples

give similar results for the case of 3 3 mixture-source.

It should be mentioned that our proposed algorithms

based on copula densities, rather than the classical ones

based on probability densities, are more time consuming,

since we estimate both copulas density of the vector and

the marginal distribution function of each component. The

present approach can be extended to deal with convolutive mixtures, and it will be interesting also to investigate a

theoretical study of the identifiability assumptions as well

as the convergence of the proposed algorithms. These

developments are beyond the scope of the present paper,

and will be addressed in future communications.

Acknowledgments

Thanks to Egide PHC-Volubilis program for funding the

project 27093ND - MA/12/270. The authors wish to thank

the reviewers for their constructive comments and criticisms leading to improvement of this paper.

References

[1] S. Bouzebda, A. Keziou, New estimates and tests of independence in

some copula models via ϕ-divergences, Kybernetika 46 (1) (2010)

178–201.

[2] M. Castella, S. Rhioui, E. Moreau, J.-C. Pesquet, Quadratic higher order

criteria for iterative blind separation of a MIMO convolutive mixture of

sources, IEEE Trans. Signal Process. 55 (1) (2007) 218–232.

[3] R.B. Chen, M. Guo, W. Hardle, S. Huang, Independent component

analysis via copula techniques, SFB 649 Discussion Paper 2008-004,

2008.

[4] X. Chen, Y. Fan, Pseudo-likelihood ratio tests for semiparametric

multivariate copula model selection, Can. J. Stat. 33 (3) (2005)

389–414.

[5] A. Cichocki, S.-i. Amari, Families of alpha- beta- and gammadivergences: flexible and robust measures of similarities, Entropy

12 (6) (2010) 1532–1568.

[6] P. Comon, Independent component analysis, a new concept? Signal

Process. 36 (3) (1994) 287–314.

[7] M. El Rhabi, G. Gelle, H. Fenniri, G. Delaunay, A penalized mutual

information criterion for blind separation of convolutive mixtures,

Signal Process. 84 (10) (2004) 1979–1984.

[8] H. Joe, Multivariate Models and Dependence Concepts, Monographs

on Statistics and Applied Probability, vol. 73, Chapman & Hall,

London, 1997.

[9] J. Ma, J. Sun, Copula component analysis, in: ICA 2007, 2007, pp. 73–80.

[10] W. Naanaa, J.-M. Nuzillard, Blind source separation of positive and

partially correlated data, Signal Process. 85 (9) (2005) 1711–1722.

[11] R.B. Nelsen, An Introduction to Copulas, Springer Series in Statistics,

second ed., Springer, New York, 2006.

[12] M. Omelka, I. Gijbels, N. Veraverbeke, Improved kernel estimation of

copulas: weak convergence and goodness-of-fit testing, Ann. Stat.

37 (5B) (2009) 3023–3058.

[13] J.-C. Pesquet, E. Moreau, Cumulant-based independence measures

for linear mixtures, IEEE Trans. Inf. Theory 47 (5) (2001) 1947–1956.

[14] D.-T. Pham, Mutual information approach to blind separation of

stationary sources, IEEE Trans. Inf. Theory 48 (7) (2002).

[15] M.E. Rhabi, H. Fenniri, A. Keziou, E. Moreau, A robust algorithm for

convolutive blind source separation in presence of noise, Signal

Process. 93 (4) (2013) 818–827.

[16] B.W. Silverman, Density Estimation for Statistics and Data Analysis,

Monographs on Statistics and Applied Probability, Chapman & Hall,

London, 1986.

[17] A. Sklar, Fonctions de répartition à n dimensions et leurs marges,

Publ. Inst. Stat. Univ. Paris 8 (1959) 229–231.

[18] H. Tsukahara, Semiparametric estimation in copula models, Can.

J. Stat. 33 (3) (2005) 357–375.

[19] F. Vrins, D.-T. Pham, M. Verleysen, Is the general form of Renyi's

entropy a contrast for source separation? in: Lecture Notes in

Computer Science, vol. 4666/2007, Springer, Berlin, 2007.