OUTCOMES BASED ASSESSMENT OF THE ENGINEERING

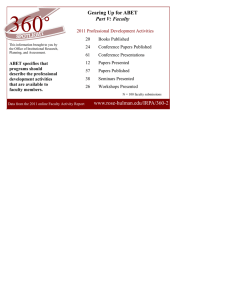

advertisement

OUTCOMES BASED ASSESSMENT OF THE ENGINEERING PROGRAMS AT QASSIM UNIVERSITY FOR ABET ACCREDITATION

Sulaiman A. AlYahya, Al-Badrawy A. Abo El-Nasr
Dept. of Mechanical Engineering, College of Engineering, Qassim University, Buraydah, Saudi Arabia
syhya@qec.edu.sa, albadarwye@qec.edu.sa

Abstract—In this paper, we describe a continuous improvement process that was developed and implemented in the engineering programs of the College of Engineering, Qassim University, Saudi Arabia. The continuous improvement plan was based on an integrated set of strategies aimed at establishing and implementing a structured process that translates educational objectives into measurable outcomes and specifies feedback tracks for corrective actions. By setting up direct and indirect measures, the programs can assess and evaluate the level of achievement of the program outcomes during the educational process. These outcomes relate to the skills, knowledge, and behaviors that students acquire as they progress through the program. The documentation of the continuous improvement process prepared by our engineering programs describes two interacting assessment loops: slow and fast. The slow loop refers to the process by which the program examines its program outcomes. The fast loop refers to the process by which we assess the degree to which our students and graduates achieve the educational program objectives developed in the slow loop. The stages of developing and implementing the objectives, outcomes, and assessment plan for the engineering programs are presented, together with preliminary results from pilot implementations. Improvements are already evident in teaching effectiveness, assessment of student learning, and involvement of all constituencies.
Based on these procedures, the Accreditation Board for Engineering and Technology (ABET) visit to our engineering programs was highly successful: all of the programs were fully accredited in August 2010, and the next visit is scheduled for 2016.

Keywords: Accreditation; Self Assessment; PEOs; POs; Continuous Improvement; Soft skills

I. INTRODUCTION

Engineering education is pivotal in the development of modern societies. One way of ensuring and demonstrating the high quality of engineering education is through evaluation by accreditation bodies such as ABET. To improve and maintain the quality of the engineering education offered by its programs, the College of Engineering at Qassim University initiated external evaluations conducted by ABET. This process was initially planned to ensure that our programs are equivalent to their peer programs worldwide and that they satisfy the needs of graduates and society. Accreditation is a voluntary certification mechanism for engineering programs, administered by recognized professional bodies [1]. It is also a positive move towards securing professional legitimacy, granting accredited engineering programs the recognition and esteem they deserve through continued professional development. Internationally, ABET is considered one of the most highly respected bodies leading this process. In a major shift influenced by pressure from industry and global competition, ABET introduced the Engineering Criteria 2000 (EC 2000) [2], which address the effectiveness of engineering education programs by focusing on an assessment and evaluation process that assures the achievement of a set of educational objectives and outcomes. An important element of these criteria is the establishment of a continuous improvement process through outcomes assessment.
Since then, the old system of counting course credits has largely been abandoned and replaced by an outcomes-based process. Outcomes-based assessment focuses on what students have learned, or what they can actually do, at the time of graduation. In its accreditation criteria, ABET reaffirmed a set of "hard" engineering skills while introducing a second, equally important set of six "professional" or "soft" skills: communication; teamwork; understanding of ethics and professionalism; understanding of engineering within a global and societal context; lifelong learning; and knowledge of contemporary issues. Engineering schools must present evidence that their graduates achieve reasonable levels of these skills, in addition to technical, discipline-specific knowledge [3]. Measuring student outcomes and understanding the learning experience are critical both for continuous improvement and for satisfying accreditation agencies. Furthermore, the design and implementation of the educational programs should be harmonized to achieve the departments' missions and the programs' educational objectives and outcomes. In turn, each educational program promotes continuous improvement in order to enhance its ability to satisfy its constituencies by meeting their needs, and to enhance the students' achievement of the program objectives and outcomes [4]. In fulfilling the ABET criteria, our engineering programs faced many hurdles; the most difficult was establishing the Program Educational Objectives (PEOs) and Program Outcomes (POs). This went through a number of trials and modifications by the Program Committees (PCs) that were formed to prepare for accreditation.
Although the ABET definitions [2] of objectives as "broad statements that describe the career and professional accomplishment ---" and of outcomes as "narrower statements that describe what students are expected to know and be able to do ---" seem simple and straightforward, the PCs, together with the main program constituencies and in consultation with the Professional Advisory Boards (PABs), kept revising and rephrasing the objectives and outcomes whenever they found that the two needed further elaboration or were being confused with each other [5]. Input from industry professionals and program alumni was the main tool for assessing both the PEOs and the POs, while faculty and graduating (exit) seniors assessed only the POs. Assessment of the PEOs and POs normally reveals the strengths and weaknesses of the whole educational process. Continuous improvement strategies were then set to build on the strengths and overcome the weaknesses. The improvement process should also describe the documentation processes commonly used in making decisions about program improvement. These strategies were implemented by revising the curriculum, teaching methods, laboratory facilities, etc. [5]. In this paper, the development of the PEOs and POs and the continuous improvement process are presented, and the difficulties encountered during this process, together with the results of continuous improvement in the mechanical engineering (ME) program, are discussed. In what follows, we focus only on the ME program as an example of the undergraduate engineering programs of our college.

II. ME PROGRAM EDUCATIONAL OBJECTIVES (PEOS)

In 2005, Qassim University adopted a new system called the Preparatory Year Program (PYP), in which new university students enroll for one complete academic year studying mathematics, physics, English language, and other general courses before they are eligible for admission to the scientific colleges.
On this basis, the College of Engineering decided to modify its academic programs to align them with the new system and with job market requirements, taking into consideration the recommendations of the engineering education conference held by the College of Engineering, Qassim University, in 2006. In 2006, the faculty of the ME department revised the PEOs of the undergraduate program and finalized them; they are published in the student handbook [6], on the college website [7], and on the student notice boards in the department building. This process took place through a series of faculty meetings with the available program constituencies, including expected employers of our graduates, the faculty of the ME department, the Professional Advisory Boards, and the University Council, and through the work of several committees and subcommittees on program development. In addition, pioneering engineers in Saudi Arabia were asked for their input on the proposed objectives. The PEOs were developed in accordance with the ABET accreditation criteria and to be consistent with the mission of the College of Engineering, Qassim University: "The College of Engineering seeks to meet the needs of the Saudi society and the region with outstanding engineering programs in education, research, and community service" [5,7]. The ME Program Committee finally formulated the following objectives for the ME program. The ME graduates should:
1. Possess the skills and knowledge necessary for the work environment, including design, installation, operation, maintenance, and inspection of mechanical and energy systems.
2. Utilize information technology, data analysis, and mathematical, computational, and modern experimental techniques in solving practical problems.
3. Fit in various working environments through effective communication skills and the ability to work in multidisciplinary teams.
4. Engage in life-long learning and gain skills that promote professional development and career planning based on social and professional ethics.

The needs and feedback of the program's various constituencies regarding the program objectives are to be collected and documented periodically, as outlined in Section V. Based on these needs and feedback, the PEOs are evaluated to check that the established objectives adequately reflect the constituencies' needs.

III. ME PROGRAM OUTCOMES (POS)

The ME program outcomes describe the knowledge, skills, and abilities of students by the time they graduate from the ME department; they relate to the skills, knowledge, and behaviors that students acquire as they progress through the program. The POs of the ME program support the PEOs and the College mission. As mentioned in the preceding section, in 2006 the College of Engineering took the opportunity to adapt its academic programs to align them with the new system implemented in the university. At that time, the educational outcomes for the ME program were established by the faculty of the ME department in consultation with the available constituencies. The ME department council then formulated the POs to be closely aligned with the ABET Criterion 3 (a) through (k) outcomes [2], and one extra technical outcome was added to the eleven ABET outcomes. The POs were presented to various constituent groups for comment and review. The outcomes of the ME program are published on the college website [7] and listed in the student handbook [6]. They are as follows. At the time of graduation, our students will have:
a. An ability to apply knowledge of mathematics, science, and engineering;
b. An ability to design and conduct experiments, as well as to analyze and interpret data;
c. An ability to design a system, component, or process to meet desired needs within realistic constraints;
d. An ability to function on teams;
e. An ability to identify, formulate, and solve engineering problems;
f. An understanding of professional and ethical responsibility;
g. An ability to communicate effectively;
h. The broad education necessary to understand the impact of engineering solutions in a global, economic, environmental, and societal context;
i. A recognition of the need for, and an ability to engage in, life-long learning;
j. A knowledge of contemporary issues;
k. An ability to use the techniques, skills, and modern engineering tools necessary for engineering practice; and
l. The ability to work professionally in both thermal and mechanical systems areas, including the design and realization of such systems.

Program outcomes (a) to (k) are fully consistent with the ABET Criterion 3 outcomes [2], while program outcome (l) adopts the ME program-specific criteria. The POs are to be evaluated periodically and revised if needed, to continuously improve their compatibility with the program objectives and their achievability. The process described in Section IV shows the methodology for periodically evaluating, revising, and improving the program outcomes.

A. Relationship of Program Outcomes to Program Educational Objectives

A summary of the relationship between the established outcomes and the objectives of the ME program is shown in Table A.1 in Appendix A. To quantify this relationship, three correlation levels are used: a solid square indicates the strongest correlation between a specific outcome and a given educational objective, a half-filled square represents a lower level of correlation, and an open square indicates no correlation. As shown in the table, every PEO is strongly correlated with at least one program outcome.

B. Relationship of Courses in the Curriculum to the Program Outcomes

A summary of the relationship between the curriculum courses and the established outcomes of the ME program is shown in Table A.2 in Appendix A. The data in this table indicate the level of contribution of the courses in the ME curriculum to outcomes (a)–(k) and to the ME-specific outcome (l). The course syllabi in the self-study reports [5,7] show that each course outcome fulfills part of the requirements of the program outcomes.

IV. THE CONTINUOUS IMPROVEMENT PROCESS (CIP)

A. Development and Implementation

Outcomes assessment here means "the systematic collection, review, and use of information about educational programs undertaken for the purpose of improving student learning and development" [4]. In our programs, the continuous improvement process was based on an integrated set of strategies aimed at establishing and implementing a structured process that translates educational objectives into measurable outcomes and performance criteria, and then specifies feedback tracks for corrective actions (Figure 1) [8,9]. On this basis, the ME department provides the necessary training on the assessment plan, creates assessment tools, and identifies and reviews key institutional practices to ensure that they are aligned with the assessment process. The conceptual model of the assessment plan used in the department is shown in Figure 2. The process is essentially an application of the "Plan-Do-Check-Act" (PDCA) methodology. The plan describes two interacting assessment loops, often referred to as the "slow loop" and the "fast loop" [10]. The slow loop refers to the process by which the department examines its program outcomes. During the first few years of the assessment process (2006-2007), the ME department reviewed its program outcomes and course outcomes each year.
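Each slow-loop review of the outcomes is a natural point to re-check the three-level correlation scheme of Section III.A (solid, half-filled, and open squares). The Python sketch below encodes the three levels as an ordered enum and flags any PEO that lacks a strongly correlated program outcome, which is the property claimed for Table A.1. It is illustrative only: the names `Corr` and `peos_without_strong_outcome` are hypothetical, and the example entries are invented, not the actual cells of Table A.1.

```python
from enum import IntEnum

OUTCOMES = "abcdefghijkl"  # ME program outcomes (a)-(l)


class Corr(IntEnum):
    """Three correlation levels used in the mapping tables."""
    NONE = 0    # open square
    SOME = 1    # half-filled square
    STRONG = 2  # solid square


def peos_without_strong_outcome(matrix: dict[str, dict[str, Corr]]) -> list[str]:
    """Return the PEOs that have no strongly correlated program outcome.

    `matrix` maps a PEO label to {outcome letter: Corr}; outcomes missing
    from a row are treated as Corr.NONE (open square).
    """
    return [
        peo
        for peo, row in matrix.items()
        if all(row.get(po, Corr.NONE) < Corr.STRONG for po in OUTCOMES)
    ]


# Illustrative entries only, not the actual cells of Table A.1.
example = {
    "PEO 1": {"a": Corr.STRONG, "e": Corr.STRONG, "l": Corr.SOME},
    "PEO 2": {"k": Corr.STRONG, "b": Corr.SOME},
    "PEO 3": {"d": Corr.SOME, "g": Corr.SOME},  # no solid square, so flagged
    "PEO 4": {"i": Corr.STRONG, "f": Corr.SOME},
}

print(peos_without_strong_outcome(example))  # ['PEO 3']
```

The same function can be reused for Table A.2 by using course codes as row labels, in which case it would flag courses that do not strongly support any outcome.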
After the first few years, the program and course outcomes became an accurate reflection of the current program objectives, and from then on they were reviewed and updated only every five years to reflect changes in the curriculum. The PEOs and POs were reviewed by the ME Program Committee in spring 2006.

The fast loop refers to the process by which we assess the degree to which our students and graduates achieve the educational program objectives developed in the slow loop. Data are gathered at least once a year. For each round of the fast loop, the data are summarized by the Analysis Committee in a general assessment report. The assessment results are then reviewed by the ME Subject Committees, and their conclusions and recommendations are added to the report. These results and conclusions are then presented to the ME Program Committee and subsequently to the ME faculty in a special meeting held for this purpose. The implementation of changes in courses is discussed by the Subject Committee members, who are the course coordinators of the required ME courses. Each recommended change is then presented by the course coordinator to the course caucus and discussed in a caucus meeting, after which the coordinator discusses the implementation of the course changes with that year's instructors of the course. Some recommendations arising from the assessment report may change the curriculum.

Figure 1. Transferring the educational objectives to outcomes and then to performance criteria. Example:
Program educational objective: The ME graduates should fit in various working environments through effective communication skills and the ability to work in multidisciplinary teams.
Learning outcome: An ability to function on teams.
Performance criteria: research and gather information; fulfill duties of team roles; share in the work of the team; listen to other teammates.
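The chain of Figure 1 (objective, then outcome, then performance criteria) is essentially a small tree. The Python sketch below is a hypothetical representation, not a tool described by the department: it stores the Figure 1 example with dataclasses and flattens it into (outcome, performance criterion) pairs, the level at which the fast loop would collect data. The class and method names are assumptions made for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Outcome:
    statement: str
    performance_criteria: list[str] = field(default_factory=list)


@dataclass
class Objective:
    statement: str
    outcomes: list[Outcome] = field(default_factory=list)

    def criteria(self) -> list[tuple[str, str]]:
        """Flatten the tree into (outcome, performance criterion) pairs."""
        return [(o.statement, c) for o in self.outcomes for c in o.performance_criteria]


# The example of Figure 1 (objective 3 of the ME program, outcome d).
peo3 = Objective(
    "Fit in various working environments through effective communication "
    "skills and the ability to work in multidisciplinary teams.",
    [
        Outcome(
            "An ability to function on teams",
            [
                "Research and gather information",
                "Fulfill duties of team roles",
                "Share in the work of the team",
                "Listen to other teammates",
            ],
        )
    ],
)

for outcome, criterion in peo3.criteria():
    print(f"{outcome}: {criterion}")
```

Keeping the criteria attached to their outcome means that a weak result on, say, "Fulfill duties of team roles" can be traced back to outcome (d) and then to the objective it supports.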
Curriculum changes are discussed by the Subject Committees, and any specific curriculum change proposal is written up and submitted to the Program Committee for review before being presented to the ME faculty for endorsement. In the assessment plan, the fast loop has been simplified and the terminology reduced to the essential phrases so that all faculty and constituencies can easily understand the process. The level of achievement of the program outcomes is to be evaluated periodically, and appropriate actions taken towards continuous improvement. This process normally has the following steps:
a) Setting performance criteria,
b) Collecting data and measuring the program outcomes,
c) Reviewing, analyzing, and identifying improvement actions,
d) Implementing the improvement actions, and
e) Evaluating the effectiveness of the improvement actions and changes.

B. Achievement of Program Outcomes

1) Assessment Process

Over every academic year, substantial data are generated through our assessment processes using the available assessment tools. The methods used to measure the achievement of the program outcomes fall into two categories, and each program outcome must be assessed by at least one direct and one indirect measure from the following [5]:
a) Direct performance measures, which include:
i. Student performance evaluations (exams, quizzes, assignments, reports, etc.),
ii. Instructor evaluation reports,
iii. Evaluation of the Graduation Project,
iv. Evaluation of the summer/co-op training, and
v. The Outcomes Achievement Exam (a comprehensive exam of at least 150 questions covering all the courses in the curriculum, which students take by the time they graduate).
b) Indirect performance measures, which include:
i. Surveys: student surveys, exit student surveys, and training surveys,
ii. Reports from the Professional Advisory Board,
iii. Reports from the External Visitor, and
iv. Reports from the Student Advisory Board.

2) Level of Achievement of the Program Outcomes (Assessment Criteria)

In the ME program, the level of achievement of each program outcome, (a) to (l), is assessed and then evaluated against the following criteria [5]:
a) For the group of selected courses specified in Table 1 (courses highly correlated with the given outcome), the percentage of students passing each course is not less than 60% and the average final grade is not less than 60%.
b) The mean rating* of the responses from the student surveys regarding each outcome is not less than 3.5 out of 5 for the courses specified in item (a).
c) The mean rating* of the responses from the senior exit surveys regarding each outcome is not less than 3.7 out of 5.
d) No negative comments are reported regarding each outcome by the course instructors and exam committees.
e) No negative comments are reported regarding each outcome in the reports of the Student Advisory Board, Professional Advisory Board, and External Visitor.
f) The average grade of the students in the Outcomes Achievement Exam on the questions related to each outcome is not less than 2.4 out of 4 (60%). This criterion is not applicable to outcomes (d) and (g).
g) The feedback from rubric analysis of the graduation project and summer training of the graduated students regarding each outcome is not less than 2.5 out of 4.
h) For the most recent batch of graduates, the average rate** calculated using rubric analysis of the courses specified in Table 1 is not less than 2.5 out of 4.

* Rating scale: 5: Strongly Agree, 4: Agree, 3: Uncertain, 2: Disagree, 1: Strongly Disagree.
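Because criteria (a) to (h) are numeric thresholds, the evaluation of one outcome can be written as a set of pass/fail checks. The Python sketch below illustrates this under stated assumptions and is not the department's actual procedure: all function names, field names, and measure labels are hypothetical; the Likert weights come from the rating footnote; the credit-hour weighting is an assumed reading of the "average rate" footnote; and the direct/indirect check mirrors the rule that every outcome needs at least one measure of each kind.

```python
from dataclasses import dataclass

# 5-point scale from the rating footnote.
LIKERT = {"strongly agree": 5, "agree": 4, "uncertain": 3,
          "disagree": 2, "strongly disagree": 1}

# Hypothetical labels for the direct and indirect measures listed above.
DIRECT = {"course work", "instructor report", "graduation project",
          "training evaluation", "outcomes achievement exam"}
INDIRECT = {"surveys", "professional advisory board", "external visitor",
            "student advisory board"}


@dataclass
class CourseResult:
    code: str
    pass_pct: float   # percentage of students passing the course
    avg_grade: float  # average final grade, in percent


def mean_rating(responses: list[str]) -> float:
    """Mean Likert rating on the 1-5 scale."""
    return sum(LIKERT[r.lower()] for r in responses) / len(responses)


def credit_weighted_rate(courses: list[tuple[int, float]]) -> float:
    """Average rate out of 4, weighting each (credit hours, rate) pair by
    its credit hours (assumed interpretation of the '**' footnote)."""
    hours = sum(h for h, _ in courses)
    return sum(h * rate for h, rate in courses) / hours


def has_direct_and_indirect(measures: set[str]) -> bool:
    """True if at least one direct and one indirect measure are used."""
    return bool(measures & DIRECT) and bool(measures & INDIRECT)


def evaluate_outcome(courses, survey, exit_survey, instructor_negatives,
                     board_negatives, exam_avg, rubric_avg, batch_rate):
    """Return {criterion: met?} for one outcome. Pass exam_avg=None when
    criterion (f) does not apply (outcomes d and g)."""
    return {
        "a": all(c.pass_pct >= 60 and c.avg_grade >= 60 for c in courses),
        "b": mean_rating(survey) >= 3.5,
        "c": mean_rating(exit_survey) >= 3.7,
        "d": instructor_negatives == 0,
        "e": board_negatives == 0,
        "f": exam_avg is None or exam_avg >= 2.4,
        "g": rubric_avg >= 2.5,
        "h": batch_rate >= 2.5,
    }


# Made-up numbers, for illustration only.
result = evaluate_outcome(
    courses=[CourseResult("ME 352", 85.0, 72.0), CourseResult("ME 251", 55.0, 58.0)],
    survey=["agree", "strongly agree", "uncertain"],      # mean 4.0
    exit_survey=["agree", "strongly agree"],              # mean 4.5
    instructor_negatives=0,
    board_negatives=0,
    exam_avg=2.9,
    rubric_avg=2.93,
    batch_rate=credit_weighted_rate([(3, 3.0), (4, 2.5)]),  # 19/7, about 2.71
)
print({k: v for k, v in result.items() if not v})  # {'a': False}
print(has_direct_and_indirect({"outcomes achievement exam", "surveys"}))  # True
```

Returning one flag per criterion, rather than a single overall verdict, keeps the detail a program committee would need when deciding whether to act on a course immediately or only to monitor it.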
** This rate is based on the credit hours and grade of each course.

Figure 2. Flow chart of the continuous improvement process (elements: mission; program educational objectives; program outcomes; measurable performance criteria; educational practices/strategies; assessment, i.e., data collection and analysis, of students and graduates through direct and indirect measures; evaluation, i.e., interpretation of evidence; feedback for continuous improvement; program constituencies).

TABLE 1. THE HIGHLY CORRELATED COURSES* CORRESPONDING TO EACH PROGRAM OUTCOME

| PO | Highly correlated courses | Specified courses for the recent graduate batch |
|---|---|---|
| a | GE 104, GE 202, ME 241, ME 251, ME 463, GE 105 | ME 251, ME 361, ME 372, ME 341 |
| b | ME 383, ME 384, ME 352, ME 363 | ME 352, ME 361, ME 464, ME 383 |
| c | ME 341, ME 441, ME 472, ME 473, ME 330, ME 372 | ME 341, ME 361, ME 441, ME 461 |
| d | ME 384, ME 464, ME 352, ME 363 | ME 341, ME 352, ME 361, ME 382 |
| e | ME 362, ME 373, ME 481, ME 372 | ME 251, ME 341, ME 361, ME 371 |
| f | ME 352, ME 371, ME 381, ME 380 | ME 352, ME 371, ME 382, ME 383 |
| g | ME 383, ME 384, ME 352, ME 363 | ME 241, ME 352, ME 464, ME 362 |
| h | ME 372, ME 471, ME 330, ME 360 | ME 251, ME 361, ME 471, ME 331 |
| i | ME 371, ME 382, ME 473, ME 351 | ME 371, ME 382, ME 498, PHYS 104 |
| j | GE 104, GE 401, ME 380 | ME 371, ME 382, ME 498, GE 401 |
| k | ME 461, ME 464, GE 105, ME 330, ME 380 | ME 241, ME 361, ME 461, ME 464 |
| l | ME 461, ME 464, ME 471, ME 473, ME 481 | ME 241, ME 341, ME 361, ME 372 |

* Full details of the ME course codes and titles are given in Table A.2.

3) Results of the Assessment Process

To measure the achievement of the program outcomes, the preceding assessment criteria were applied to the collected data. The following results indicate significant achievement of the program outcomes [5]:
a) As shown in Table A.3, the percentage of students passing and the average final grade exceed 60% for all the courses except ME 251, ME 362, and ME 472. With these exceptions, the results satisfy the first assessment criterion.
b) As shown in Figure 3, the mean ratings of the responses from the senior exit surveys regarding each outcome are at least 3.9 out of 5 for the courses specified in Table 1.
c) No negative comments were reported regarding the program outcomes by the course instructors and the graduation project exam committees.
d) No negative comments were reported regarding the program outcomes in the Student Advisory Board and Professional Advisory Board reports. The External Visitor, however, made some comments regarding the written project reports.
e) The rubric analysis applied to the Outcomes Achievement Exam resulted in an average of 2.9 out of 4 across the program outcomes (Figure 4).
f) The feedback from rubric analysis of the graduation project and summer training of the graduated students resulted in an average of 2.93 out of 4 across the program outcomes (Figure 5).
g) For the most recent batch of graduates, the average rate calculated using rubric analysis of the courses specified in Table 1 is 2.83 out of 4 (Figure 6).

Figure 3. The mean rating of the responses from the student surveys for each program outcome (outcomes a to l; rating axis 0 to 5).
Figure 4. The average rate of the rubric analysis applied to the Outcomes Achievement Exam.
Figure 5. Feedback from rubric analysis of the graduation project and summer training for the most recent batch of graduated students.
Figure 6. The average rate of the specified courses in Table 1 for the most recent batch of graduated students.

4) Closing the Assessment Loop and Final Conclusion

The ME Program Committee evaluated the preceding assessments and found that most of the outcome assessment results met or exceeded the stated criteria.
This is summarized as follows:
a) Percentage of students passing the course and average final grade: as can be seen from the tables under each outcome, the percentage of students passing and the average final grades are mostly higher than the 60% criterion. The exceptions were ME 251, ME 363, and ME 472. These courses will be a focus of the next assessments, to determine whether this is a persistent pattern or an isolated case. It is worth mentioning that the final result of each course is an accurate and direct judgment of the achievement of the course outcomes, since each course involves accumulated assessment steps, including quizzes, reports, oral presentations, homework, and midterm and final exams. These ensure that only students who have achieved the course outcomes pass the course.
b) Mean rating of the responses from the student surveys: the results of the student surveys for the selected courses regarding each outcome are not less than 3.5 out of 5, except for ME 341, ME 372, ME 351, and ME 471, for which the planned criterion was not achieved. The ME Program Committee decided not to take immediate action but to monitor these courses in the coming semesters. In general, this shows that the students were mostly satisfied with their achievement of the program outcomes.
c) Mean rating of the responses from the senior exit surveys: the results of the senior exit surveys shown under each outcome indicate mean ratings higher than 3.7, showing that the graduating students were fully satisfied with their achievement of the program outcomes.
d) Feedback from instructors and exam committees: the ME Subject Committees reviewed the feedback and reports from the course instructors. This review found no substantial comments regarding any of the planned outcomes; only some minor changes were suggested, aimed at improving course delivery and management.
e) Reports of the Student Advisory Board, Professional Advisory Board, and External Visitor: the ME Program Committee thoroughly reviewed these reports and concluded that the boards were mostly satisfied with the program outcomes and their achievement. However, the External Visitor raised a comment regarding written reporting (outcome g). This issue had already been observed by the ME faculty, and two new engineering design courses, GE 211 and GE 213, had been adopted to improve the students' oral and written communication. More recently, all faculty members, especially graduation project supervisors, were directed to pay more attention to written reporting.
f) Average grade in the students' Outcomes Achievement Exam: senior students attended the exam on an optional basis. The rubric analysis used to assess the exam resulted in average values of at least 2.4, meaning that, on average, the students who sat the exam correctly answered at least 60% of the questions, which measure the ME program outcomes holistically.
g) Graduation project and summer training of the graduated students: the Analysis Committee assessed the feedback from the faculty members involved in the graduation project and summer training regarding the achievement of the program outcomes by each graduated student. On the basis of rubric analysis, the committee obtained the results shown in Figure 5. As shown in the figure, the minimum average of the graduation project and summer training for each outcome was not less than 2.5 out of 4, indicating that our graduates have achieved the ME program outcomes.
i) Specified courses for the recent graduated batch The first batch from the ME program was graduated at the end of Fall 2008 (February 2009). For this batch, specified courses (shown in Table 1) have been analyzed using rubric analysis. The analysis results are shown in Figure 5 which indicates that the outcome f has an average rate less than the required criteria (2.4 out of 4). This result is also confirmed by the previous action of offering the two new engineering design courses, GE 211 and GE 213, and Coop training in our new curriculum. This action is supposed to improve the achievement of outcome f. V. ABET VISIT The programs committees prepared the self study reports (SSRs) of their programs and then submitted them to ABET on July 2009. The SSRs of our engineering programs showed significant achievements of the programs outcomes for students and graduates. In December 2009, ABET team visited the college and evaluated all the programs and inspected the documents and facilities of the college and the university. In their exit statement, the ABET team stated that no observed weaknesses were found and just three concerns were reported in the criteria: 1. Program Educational Objectives, 2. Curriculum, and 3. Facilities Corrective actions were made to fix the raised concerns during the next semesters, right after the ABET visit [11]. As result of these processes, all the programs of the college of engineering has been successfully accredited by ABET after receiving a formal notification in August 2010. While College of Engineering, Qassim University, is not the first to embark on the accreditation path for its engineering programs, the realization that it is a new one and it was started since seven year indicates how much is the effort that paid to reach this accomplishment. VI. 
SUMMARY AND RECOMMENDATIONS This paper presented the experiences gained in developing and implementing a strategic plan that include objectives, outcomes and continuous improvement process that based on outcome-based assessment for the engineering programs at the College of Engineering, Qassim University. The results of the application of both direct and indirect measurements have provided significant evidences of the improved teaching and learning. The above detailed assessments gave good impression regarding the achievement of the program outcomes. The implementation of this outcome-based assessment process has effected a definite change in curricular content, facilities activities and assessment practices that the students are experiencing. Corrective actions have identified to address key issues such as program objectives, facilities and increasing the math and basic sciences in the curriculum. The application of these strategies has achieved a significant success as evident by a successful ABET site visit. ACKNOWLEDGMENT The authors would like to thank all the faculty members and staff of the College of Engineering at Qassim University, Saudi Arabia, for their valuable assistance in collecting and preparing the assessment data and for the discussion of these data. REFERENCES [1] D. DiBiasio and N.A. Mello, "Multilevel Assessment of Program Outcomes: Assessing a Nontraditional Study Abroad Program in the Engineering Disciplines", the Interdisciplinary Journal of Study Abroad, Vol. 10, Fall 2004, pp. 237-252. [2] Engineering Accreditation Commission, Engineering Criteria 2000, Third Edition, Accreditation Board for Engineering and Technology, Inc., Baltimore, MD, (1997). [3] L.J. Shuman, M. Besterfield-Sacre, J. McGourty, "The ABET "Professional Skills" – Can They be Taught? Can They Be Assessed?", J. Engineering Education, Jan. 2005, pp.41-55. [4] B. Abu-Jdayil, H. Al-Attar, M. 
Al-Marzouqi, "Outcomes Assessment in Chemical and Petroleum Engineering Programs," in Proc. 3rd Int. Symposium for Engineering Education, University College Cork, Ireland, 2010.

[5] "Self-Study Report," Mechanical Engineering Department, College of Engineering, Qassim University, Saudi Arabia, Jul. 1, 2009.

[6] Student Handbook, College of Engineering, Qassim University, Buraidah, Saudi Arabia, 2009.

[7] College of Engineering, Qassim University, website. [Online]. Available: http://www.qec.edu.sa/english/

[8] J. McGourty, "Strategies for Developing, Implementing, and Institutionalizing a Comprehensive Assessment Process for Engineering Education," in Proc. 28th Frontiers in Education Conference, 1998, pp. 117–121.

[9] O. Rompelman, "Assessment of Student Learning: Evolution of Objectives in Engineering Education and the Consequences for Assessment," European Journal of Engineering Education, vol. 25, no. 4, pp. 339–350, 2000.

[10] G. M. Rogers, "Assessment of Student Learning Outcomes," 2007. [Online]. Available: http://www.abet.org/assessment.shtml#Assessment of student learning outcomes

[11] "Response to the Draft Statement of Engineering Accreditation Commission: Action Taken to Waive Weaknesses Documented in the Draft Statement," Mechanical Engineering Department, College of Engineering, Qassim University, Saudi Arabia, Mar. 2010.

APPENDIX A

TABLE A.1 MAPPING BETWEEN THE PEOS AND POS FOR THE ME PROGRAM

Each program educational objective (PEO 1 to PEO 4) is mapped against ME program outcomes a to l using the legend: strongly correlated, somewhat correlated, non-correlated.

TABLE A.2 MAPPING OF MECHANICAL ENGINEERING COURSES TO THE PROGRAM OUTCOMES: THE TABLE INCLUDES THE REQUIRED AND ELECTIVE COURSES.
Each required and elective course in the major is mapped against ME program outcomes a to l using the legend: strongly correlated, somewhat correlated, non-correlated.

| Course code | Course name |
|---|---|
| GE 104 | Basics of Engineering Drawing |
| GE 105 | Basics of Engineering Technology |
| GE 201 | Statics |
| GE 202 | Dynamics |
| ME 241 | Mechanical Drawing |
| ME 251 | Materials Engineering |
| ME 330 | Manufacturing Processes |
| ME 340 | Mechanical Design-1 |
| ME 343 | Measurements and Instrumentation |
| ME 351 | Mechanics of Materials |
| ME 352 | Mechanics of Materials Lab |
| ME 360 | Mechanics of Machinery |
| ME 363 | Mechanics of Machinery Lab |
| ME 371 | Thermodynamics-1 |
| ME 372 | Thermodynamics-2 |
| ME 374 | Heat and Mass Transfer |
| ME 380 | Fluid Mechanics |
| ME 383 | Thermo-fluid Laboratory-1 |
| ME 384 | Thermo-fluid Laboratory-2 |
| ME 400 | Senior Design Project |
| ME 467 | System Dynamics and Automatic Control |
| ME 468 | System Dynamics and Automatic Control Lab |
| ME 423 | Renewable Energy |
| ME 425 | Solar Energy |
| ME 431 | Tool Manufacturing |
| ME 441 | Mechanical Design-2 |
| ME 453 | Modern Engineering Materials |
| ME 455 | Corrosion Engineering |
| ME 462 | Mechatronics |
| ME 463 | Mechanical Vibrations |
| ME 466 | Robotics |
| ME 470 | Thermal Power Plants |
| ME 474 | Refrigeration Engineering |
| ME 475 | Air Conditioning |
| ME 480 | Turbo Machinery |
| ME 482 | Compressible Fluids |
| ME 483 | Pumping Machinery |
| ME 490 | Selected Topics in Mechanical Engineering |

For ME 490, the relation depends on the selected topics; at a minimum, the course must be strongly correlated with outcomes j and l. Course codes are identical to those in the ME curriculum.

TABLE A.3 THE AVERAGE FINAL GRADES, PERCENTAGE OF STUDENTS PASSING THE COURSE AND MEAN RATING FROM STUDENT SURVEYS OF THE SELECTED COURSES.

| Course No. | No. of students studying the course | Average final grade | Students passing the course (%) | Mean rating from student surveys |
|---|---|---|---|---|
| GE 104 | 144 | 86 | 95.8 | 4.39 |
| GE 105 | 76 | 73.7 | 98.6 | 4.06 |
| GE 202 | 34 | 77.7 | 97.1 | 4.25 |
| ME 241 | 52 | 81.6 | 97.2 | 4.12 |
| ME 251 | 31 | 53.8 | 67.7 | 3.75 |
| ME 351 | 35 | 72 | 94.5 | N/A |
| ME 352 | 32 | 64.1 | 84.3 | 3.71 |
| ME 330 | 13 | 80.3 | 100 | 3.69 |
| ME 341 | 17 | 70 | 100 | 3.40 |
| ME 360 | 18 | 83.8 | 100 | N/A |
| ME 362 | 6 | 56.6 | 83.3 | 3.75 |
| ME 363 | 11 | 81 | 100 | 4.27 |
| ME 371 | 24 | 70.13 | 75 | N/A |
| ME 372 | 7 | 70 | 85.7 | 3.14 |
| ME 373 | 8 | 74.1 | 100 | 3.87 |
| ME 380 | 14 | 74.1 | 92.8 | 4.29 |
| ME 381 | 7 | 74.86 | 85 | 4.67 |
| ME 384 | 6 | 72.1 | 100 | 4 |
| ME 441 | 12 | 75 | 100 | 3.67 |
| ME 461 | 14 | 71.6 | 92.8 | 4.57 |
| ME 462 | 5 | 75 | 100 | 4.80 |
| ME 463 | 11 | 81 | 100 | 4.4 |
| ME 464 | 14 | 78.5 | 100 | 4.36 |
| ME 471 | 6 | 65.6 | 66.6 | N/A |
| ME 472 | 9 | 51.8 | 55.5 | 4.17 |
| ME 473 | 10 | 80.1 | 100 | 3.87 |
| ME 481 | 9 | 83.8 | 100 | 4 |
| GE 401 | 15 | 72.7 | 93.3 | 4.50 |
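Table A.3 reports three course-level indicators: the average final grade, the pass rate, and the mean student-survey rating. The Python snippet below is a minimal sketch of how such indicators might be screened to shortlist courses for review. It transcribes the rows of Table A.3 and flags courses whose pass rate or survey rating falls below a cut-off. The cut-offs (80% passing, 3.5 mean rating), the `CourseRecord` type and the `flag_courses` function are hypothetical illustrations. The paper does not state that the programs applied these thresholds or this procedure.

```python
from typing import List, NamedTuple, Optional, Tuple


class CourseRecord(NamedTuple):
    """One row of Table A.3 (hypothetical container type)."""
    course: str
    students: int
    avg_grade: float         # average final grade
    pass_pct: float          # percentage of students passing the course
    survey: Optional[float]  # mean student-survey rating; None where Table A.3 shows N/A


# Rows transcribed from Table A.3.
TABLE_A3: List[CourseRecord] = [
    CourseRecord("GE 104", 144, 86.0, 95.8, 4.39),
    CourseRecord("GE 105", 76, 73.7, 98.6, 4.06),
    CourseRecord("GE 202", 34, 77.7, 97.1, 4.25),
    CourseRecord("ME 241", 52, 81.6, 97.2, 4.12),
    CourseRecord("ME 251", 31, 53.8, 67.7, 3.75),
    CourseRecord("ME 351", 35, 72.0, 94.5, None),
    CourseRecord("ME 352", 32, 64.1, 84.3, 3.71),
    CourseRecord("ME 330", 13, 80.3, 100.0, 3.69),
    CourseRecord("ME 341", 17, 70.0, 100.0, 3.40),
    CourseRecord("ME 360", 18, 83.8, 100.0, None),
    CourseRecord("ME 362", 6, 56.6, 83.3, 3.75),
    CourseRecord("ME 363", 11, 81.0, 100.0, 4.27),
    CourseRecord("ME 371", 24, 70.13, 75.0, None),
    CourseRecord("ME 372", 7, 70.0, 85.7, 3.14),
    CourseRecord("ME 373", 8, 74.1, 100.0, 3.87),
    CourseRecord("ME 380", 14, 74.1, 92.8, 4.29),
    CourseRecord("ME 381", 7, 74.86, 85.0, 4.67),
    CourseRecord("ME 384", 6, 72.1, 100.0, 4.0),
    CourseRecord("ME 441", 12, 75.0, 100.0, 3.67),
    CourseRecord("ME 461", 14, 71.6, 92.8, 4.57),
    CourseRecord("ME 462", 5, 75.0, 100.0, 4.80),
    CourseRecord("ME 463", 11, 81.0, 100.0, 4.4),
    CourseRecord("ME 464", 14, 78.5, 100.0, 4.36),
    CourseRecord("ME 471", 6, 65.6, 66.6, None),
    CourseRecord("ME 472", 9, 51.8, 55.5, 4.17),
    CourseRecord("ME 473", 10, 80.1, 100.0, 3.87),
    CourseRecord("ME 481", 9, 83.8, 100.0, 4.0),
    CourseRecord("GE 401", 15, 72.7, 93.3, 4.50),
]


def flag_courses(
    records: List[CourseRecord],
    min_pass_pct: float = 80.0,  # hypothetical threshold, not from the paper
    min_survey: float = 3.5,     # hypothetical threshold, not from the paper
) -> List[Tuple[str, List[str]]]:
    """Return (course, reasons) for every course below either threshold.

    Courses without a survey rating (N/A) are screened on pass rate only.
    """
    flagged = []
    for r in records:
        reasons = []
        if r.pass_pct < min_pass_pct:
            reasons.append(f"pass rate {r.pass_pct}%")
        if r.survey is not None and r.survey < min_survey:
            reasons.append(f"survey rating {r.survey}")
        if reasons:
            flagged.append((r.course, reasons))
    return flagged


if __name__ == "__main__":
    for course, reasons in flag_courses(TABLE_A3):
        print(f"{course}: {'; '.join(reasons)}")
```

With the assumed defaults, the sketch flags ME 251, ME 341, ME 371, ME 372, ME 471 and ME 472. The thresholds are plain keyword arguments, so a program committee could substitute its own targets without changing the screening logic.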