Influential Observations and Inference in Accounting Research

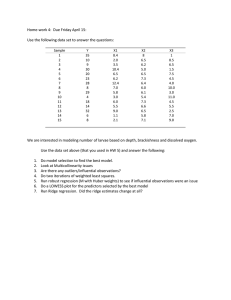
