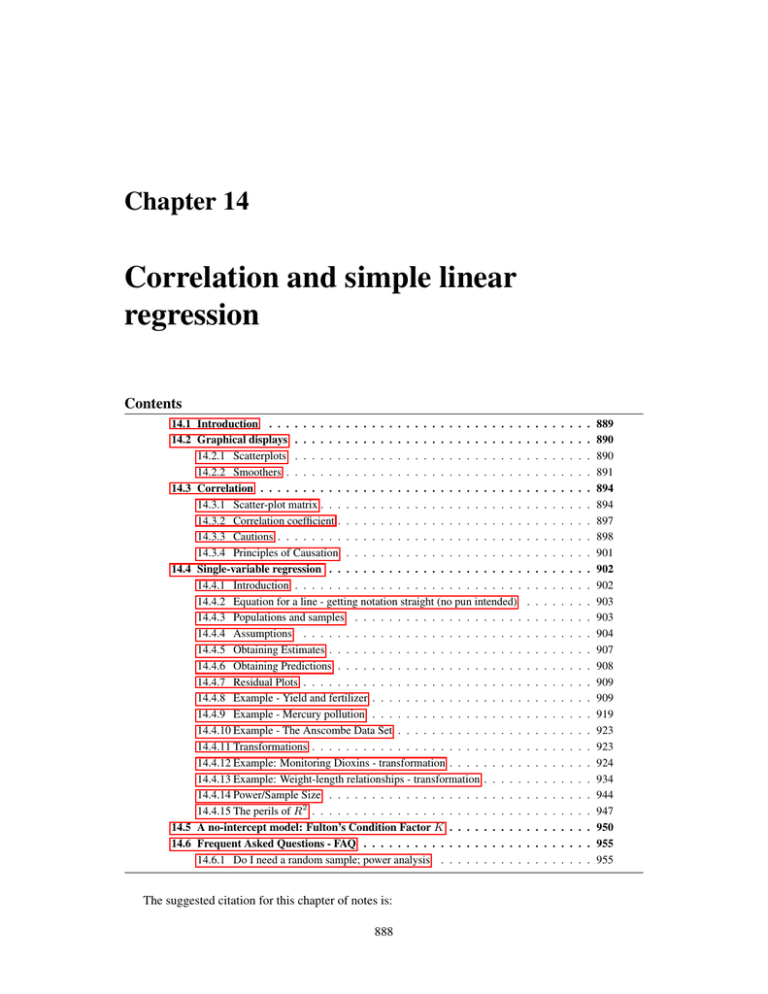

Correlation and simple linear regression
