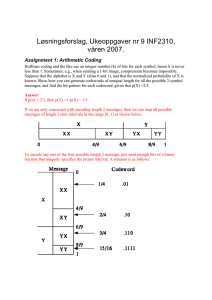

Huffman Code for “Fun” Image


ECEN 1200, Telecommunications 1, Fall 2006, P. Mathys, 10-16-06


The “Fun” image shown below is a bitmap graphic with 32 × 16 = 512 pixels using 6 different colors: white, yellow, magenta, blue, black, and red.

If the different pixel colors are encoded using a fixed-length binary representation, such as the one shown in the following table,

| Color   | Code |
|---------|------|
| White   | 000  |
| Yellow  | 001  |
| Magenta | 010  |
| Blue    | 011  |
| Black   | 100  |
| Red     | 101  |

then 3 bits are needed for each pixel (since $2^2 = 4 < 6 \le 8 = 2^3$). This results in a total size of 32 × 16 × 3 = 1536 bits when no data compression is used.
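As a minimal sketch of this fixed-length calculation (Python; the variable names are illustrative and not from the original notes), the number of bits per pixel is the smallest $n$ with $2^n \ge 6$, and the codewords are simply the $n$-bit binary numbers assigned in table order:

```python
WIDTH, HEIGHT = 32, 16  # size of the "Fun" image in pixels
COLORS = ["white", "yellow", "magenta", "blue", "black", "red"]

# Smallest n with 2**n >= number of colors; (M - 1).bit_length() gives it exactly.
bits_per_pixel = (len(COLORS) - 1).bit_length()

# Assign the n-bit binary numbers 0, 1, 2, ... to the colors in table order.
fixed_code = {c: format(i, f"0{bits_per_pixel}b") for i, c in enumerate(COLORS)}
total_bits = WIDTH * HEIGHT * bits_per_pixel

print(bits_per_pixel, total_bits)  # 3 1536
print(fixed_code["red"])           # 101
```

Using integer `bit_length` instead of a floating-point `log2` avoids any rounding surprises when the number of colors is an exact power of 2.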

One data compression strategy to reduce the number of bits needed to represent the image is to use short strings to encode colors that occur frequently and longer strings for colors that occur less frequently. Counting the number of pixels for each color in the “Fun” image yields:


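| Color   | # of Pixels | Probability     |
|---------|-------------|-----------------|
| White   | 394         | 394/512 = 0.770 |
| Yellow  | 42          | 42/512 = 0.082  |
| Magenta | 30          | 30/512 = 0.058  |
| Blue    | 22          | 22/512 = 0.043  |
| Black   | 18          | 18/512 = 0.035  |
| Red     | 6           | 6/512 = 0.012   |
| Sum     | 512         | 1.000           |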

Huffman coding is a recursive procedure that builds, from the above table, a prefix-free variable-length code with the shortest possible average codeword length. It works as follows. Start by writing down all the colors, together with their probabilities or the number of times they occur, in increasing numerical order, e.g., from right to left as shown below.

[Figure: the six leaves 394 (white), 42 (yellow), 30 (magenta), 22 (blue), 18 (black), and 6 (red), listed from left to right.]

Huffman’s algorithm regards each of the quantities listed above as a leaf of an (upside-down) tree. In the first step, the two leaves that occur least frequently are joined by branches to an intermediate (or parent) node, which is labeled with the sum of the weights of the joined leaves, as shown next.

[Figure: the leaves black (18) and red (6) joined into an intermediate node labeled 24.]

Now the procedure is repeated, working with the remaining leaves and the newly created intermediate node. Note that the problem size has now been reduced by one, i.e., from creating a code for 6 quantities to creating a code for 5 quantities in this example. These two features, the reduction of the problem size in each step and the repetition of the same procedure on the smaller problem, are what characterize a recursive algorithm. The next step of the Huffman coding algorithm, again combining the two quantities with the lowest weights into an intermediate node, is shown in the figure below.
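The recursive reduction can be traced with a short Python sketch that only tracks the weights (no codewords yet). `merge_trace` is a hypothetical helper name; it re-sorts the weights at every step, and ties between equal weights would be broken arbitrarily, although none occur with these counts.

```python
def merge_trace(weights):
    """Return the (smaller, larger, sum) triples from repeatedly joining the two smallest weights."""
    if len(weights) <= 1:
        return []  # a single node remains: the root of the tree
    w = sorted(weights)
    a, b = w[0], w[1]
    # Replace the two smallest weights by one parent node: the problem shrinks by one.
    return [(a, b, a + b)] + merge_trace(w[2:] + [a + b])


for a, b, s in merge_trace([394, 42, 30, 22, 18, 6]):
    print(f"{a} + {b} = {s}")
# 6 + 18 = 24
# 22 + 24 = 46
# 30 + 42 = 72
# 46 + 72 = 118
# 118 + 394 = 512
```

Each call handles one quantity fewer than its caller, which is exactly the "smaller problem, same procedure" structure described above.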

[Figure: blue (22) and the node labeled 24 joined into an intermediate node labeled 46.]

The following three figures show the next steps of building the tree for the Huffman code.

[Figures: magenta (30) and yellow (42) joined into a node labeled 72; the nodes labeled 46 and 72 joined into a node labeled 118; white (394) and the node labeled 118 joined into the root, labeled 512.]

Once the tree is completed, label the two branches emanating from each parent node with different binary symbols, e.g., using 0 for all left branches and 1 for all right branches, as shown in the next figure.

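[Figure: completed tree with branch labels. Root (512): 0 to white (394), 1 to node 118. Node 118: 0 to node 72, 1 to node 46. Node 72: 0 to yellow (42), 1 to magenta (30). Node 46: 0 to blue (22), 1 to node 24. Node 24: 0 to black (18), 1 to red (6).]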

The code for each leaf in the tree is now obtained by starting at the root (the node at the top of the upside-down tree) and writing down the branch labels while travelling to the leaf. The result is shown in the figure below.
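The complete procedure (repeated merging, then reading the branch labels from the root down to each leaf) can be sketched in Python, using a priority queue from `heapq` instead of re-sorting by hand. The function name `huffman_code` is hypothetical, and so is the rule for deciding which child is the "left" (0) branch: we assume, as in the figures, that the subtree containing the leftmost leaf of the original left-to-right layout goes on the left. With that assumption the sketch reproduces the codewords in the table below; any other consistent 0/1 assignment gives different codewords with the same lengths.

```python
import heapq
from itertools import count

# Pixel counts, in the left-to-right order used in the figures.
COUNTS = {"white": 394, "yellow": 42, "magenta": 30,
          "blue": 22, "black": 18, "red": 6}


def huffman_code(counts):
    """Return {symbol: codeword} for a binary Huffman code built from the counts."""
    # Assumed layout: position of each leaf from left to right (dict order).
    pos = {sym: i for i, sym in enumerate(counts)}
    tie = count()  # unique tie-breaker so heapq never compares the subtrees themselves
    # Heap entries: (weight, tie-breaker, leftmost leaf position, subtree).
    # A subtree is either a symbol (leaf) or a (left, right) pair (parent node).
    heap = [(w, next(tie), pos[s], s) for s, w in counts.items()]
    heapq.heapify(heap)
    while len(heap) > 1:
        # Join the two lowest-weight nodes into a parent labeled with their sum.
        w1, _, p1, t1 = heapq.heappop(heap)
        w2, _, p2, t2 = heapq.heappop(heap)
        # The subtree whose leftmost leaf lies further left becomes the 0 branch.
        left, right = (t1, t2) if p1 < p2 else (t2, t1)
        heapq.heappush(heap, (w1 + w2, next(tie), min(p1, p2), (left, right)))

    codes = {}

    def walk(node, prefix):
        # Append one branch label per step on the way from the root to a leaf.
        if isinstance(node, tuple):
            walk(node[0], prefix + "0")
            walk(node[1], prefix + "1")
        else:
            codes[node] = prefix or "0"  # a one-symbol source still needs 1 bit

    walk(heap[0][3], "")
    return codes


for color, word in huffman_code(COUNTS).items():
    print(f"{color:8s} {word}")
```

The tie-breaking counter matters only when two nodes have equal weight. It keeps `heapq` from ever comparing the subtree objects.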


Note that, because all codewords are assigned to quantities that are tree leaves (and not intermediate nodes), the code is automatically prefix-free, i.e., no codeword is a prefix of another codeword. This is important because it guarantees that the variable-length code is uniquely decodable. The codes for each color are shown again in the following table:

| Color   | # of Pixels | Code |
|---------|-------------|------|
| White   | 394         | 0    |
| Yellow  | 42          | 100  |
| Magenta | 30          | 101  |
| Blue    | 22          | 110  |
| Black   | 18          | 1110 |
| Red     | 6           | 1111 |
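To see why the prefix-free property matters in practice, here is a hedged Python sketch that checks the property for the codewords in the table above and decodes a bit string greedily, emitting a color as soon as the bits read so far form a codeword. The helper names (`is_prefix_free`, `encode`, `decode`) and the short pixel row are made up for illustration. The row is not taken from the image.

```python
# Codewords from the table above.
CODE = {"white": "0", "yellow": "100", "magenta": "101",
        "blue": "110", "black": "1110", "red": "1111"}


def is_prefix_free(code):
    """True if no codeword is a prefix of a different codeword."""
    words = list(code.values())
    return not any(i != j and words[j].startswith(words[i])
                   for i in range(len(words)) for j in range(len(words)))


def encode(pixels, code):
    """Concatenate the codewords of a sequence of colors."""
    return "".join(code[p] for p in pixels)


def decode(bits, code):
    """Read bits left to right; emit a color as soon as the buffer equals a codeword."""
    inverse = {word: color for color, word in code.items()}
    colors, buf = [], ""
    for b in bits:
        buf += b
        if buf in inverse:  # prefix-free: no other codeword starts with buf
            colors.append(inverse[buf])
            buf = ""
    if buf:
        raise ValueError("bit string ends in the middle of a codeword")
    return colors


row = ["white", "white", "red", "yellow", "white", "blue"]  # made-up example row
bits = encode(row, CODE)
print(bits)                       # 0011111000110
print(decode(bits, CODE) == row)  # True
print(is_prefix_free(CODE))       # True
```

No separators between codewords are needed. With a code that is not prefix-free, such as {0, 01}, the greedy decoder could not tell after reading a 0 whether a codeword had ended.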

Thus, the code for white (which occurs most frequently) has the shortest length, and the codes for black and red (which occur least frequently) have the longest lengths. The total number of bits required to represent the image using this Huffman code is computed by multiplying the number of occurrences of each color by the number of bits in the corresponding codeword and adding over all colors:

$$394 \times 1 + 42 \times 3 + 30 \times 3 + 22 \times 3 + 18 \times 4 + 6 \times 4 = 772 \text{ bits}.$$

Dividing the uncompressed size (1536 bits) by the compressed size (772 bits) yields 1536/772 ≈ 1.99, so the compression ratio is 1.99 : 1, almost a factor of 2, without losing any information. Another way to characterize the coding scheme is to compute the average number of bits needed per pixel, in this case 772/512 ≈ 1.51 bits/pixel, down from 3 bits/pixel without compression.
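The arithmetic of the last two paragraphs can be reproduced in a few lines of Python. This is a sketch, and the dictionary names are our own:

```python
COUNTS = {"white": 394, "yellow": 42, "magenta": 30,
          "blue": 22, "black": 18, "red": 6}
CODE = {"white": "0", "yellow": "100", "magenta": "101",
        "blue": "110", "black": "1110", "red": "1111"}

pixels = sum(COUNTS.values())                                     # 512 pixels
huffman_bits = sum(n * len(CODE[c]) for c, n in COUNTS.items())  # occurrences x codeword length
fixed_bits = pixels * 3                                           # 3-bit fixed-length code

print(huffman_bits)                         # 772
print(round(fixed_bits / huffman_bits, 2))  # 1.99  (compression ratio)
print(round(huffman_bits / pixels, 2))      # 1.51  (bits/pixel)
```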

An interesting question to ask is: “What is the smallest number of bits per pixel needed for lossless coding?” The answer to this question was found in 1948 by Claude E. Shannon. He introduced the notion of the entropy of a source as a measure of the amount of uncertainty about the output that the source generates. If that uncertainty is large, then the output of the source contains a lot of information (in an information-theoretic sense); otherwise its output is (partially) predictable and contains less information. Shannon proved that the minimum average number of bits per symbol necessary for a faithful (lossless) representation of the source output is equal to the entropy (in bits per symbol) of the source.

The entropy of a source is computed from its probabilistic characterization. In the simplest case, the output symbols from the source, denoted by the random variable $X$, are i.i.d. (independent and identically distributed) with probability mass function $p_X(x)$. In this case the entropy $H(X)$ of a source that produces $M$-ary symbols $0, 1, \ldots, M-1$ is computed as

$$H(X) = -\sum_{x=0}^{M-1} p_X(x) \log_2 p_X(x) \quad \text{[bits/symbol]}.$$

Note that the logarithm to the base 2 of some number $n$ can be computed as $\log_2(n) = \log_{10}(n)/\log_{10}(2)$ or as $\log_2(n) = \ln(n)/\ln(2)$.
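A direct transcription of the entropy formula into Python might look as follows. This is a sketch, and `entropy` is our own helper name. Terms with $p_X(x) = 0$ are skipped, following the usual convention $0 \log_2 0 = 0$. Python's `math.log2` computes the base-2 logarithm directly. The two conversion formulas above give the same value up to floating-point rounding.

```python
import math


def entropy(pmf):
    """H(X) = -sum_x p(x) log2 p(x) in bits/symbol; terms with p(x) = 0 contribute 0."""
    return sum(-p * math.log2(p) for p in pmf if p > 0)


# For M equally likely symbols the entropy equals log2(M).
M = 8
print(entropy([1 / M] * M))  # 3.0

# log2 via base-10 or natural logarithms agrees with math.log2 up to rounding.
n = 0.77
print(math.log2(n), math.log10(n) / math.log10(2), math.log(n) / math.log(2))
```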


Binary entropy function. The entropy function of a binary random variable $X \in \{0, 1\}$ with probability mass function $p_X(1) = 1 - p_X(0)$ is computed as

$$H(X) = -p_X(0) \log_2 p_X(0) - p_X(1) \log_2 p_X(1) \quad \text{[bits/bit]}.$$

The graph of this function is shown below.

From the graph it is easy to see that the entropy (or uncertainty) is maximized when $p_X(0) = p_X(1) = 1/2$, with a maximum value of 1 bit per source symbol (which is also a bit in this case). This would correspond, for example, to the uncertainty that one has about the outcome (heads or tails) of the flip of an unbiased coin. On the other hand, as $p_X(0)$ approaches either 0 or 1 (and thus $p_X(1)$ approaches either 1 or 0), the entropy (or uncertainty) goes to zero. Using the example of flipping a coin, this would correspond to flipping a heavily biased coin (or even one that has heads or tails on both sides).
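The properties read off the graph can also be checked numerically with a small sketch of the binary entropy function. `binary_entropy` is a hypothetical name, and `p0` stands for $p_X(0)$.

```python
import math


def binary_entropy(p0):
    """Entropy in bits/bit of X in {0, 1} with P(X=0) = p0 and P(X=1) = 1 - p0."""
    # Terms with probability 0 are skipped (0 * log2 0 is taken as 0).
    return sum(-p * math.log2(p) for p in (p0, 1 - p0) if p > 0)


for p0 in (0.0, 0.01, 0.1, 0.3, 0.5, 0.7, 0.9, 0.99, 1.0):
    print(f"p0 = {p0:4.2f}   H = {binary_entropy(p0):.4f}")
```

The printed values are symmetric about $p_0 = 1/2$. They peak at exactly 1 bit there and fall to 0 at $p_0 = 0$ and $p_0 = 1$.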

For the “Fun” image, the entropy (based on the i.i.d. assumption and single pixels) is computed as

$$\begin{aligned} H(X) = {}& -0.77 \log_2 0.77 - 0.082 \log_2 0.082 - 0.058 \log_2 0.058 - 0.043 \log_2 0.043 \\ & - 0.035 \log_2 0.035 - 0.012 \log_2 0.012 = 1.2655 \ \text{[bits/pixel]}. \end{aligned}$$
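The same value can be obtained in Python from the rounded probabilities used above, or directly from the exact pixel counts. The small difference between the two comes only from rounding the probabilities to three decimals. This is a sketch with our own variable names.

```python
import math

COUNTS = {"white": 394, "yellow": 42, "magenta": 30,
          "blue": 22, "black": 18, "red": 6}
total = sum(COUNTS.values())  # 512 pixels


def entropy(pmf):
    """H(X) = -sum p log2 p in bits/symbol (zero-probability terms skipped)."""
    return sum(-p * math.log2(p) for p in pmf if p > 0)


H_exact = entropy([n / total for n in COUNTS.values()])         # exact probabilities
H_rounded = entropy([0.77, 0.082, 0.058, 0.043, 0.035, 0.012])  # values used above
huffman_avg = 772 / total                                        # Huffman code, bits/pixel

print(f"{H_exact:.4f} {H_rounded:.4f} {huffman_avg:.4f}")  # 1.2667 1.2655 1.5078
```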

Thus, between the 1.51 bits/pixel achieved by the Huffman code and the theoretical minimum given by the entropy $H(X) \approx 1.27$ bits/pixel, there is still some room for improvement (about 0.24 bits/pixel), but not a very large amount. To get closer to $H(X)$, one would have to use a larger (Huffman) coding scheme that combines several pixels (e.g., pairs or triplets of pixels) into each coded binary representation.
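As a hedged illustration of coding pairs of pixels, the sketch below applies Huffman's procedure to the 36 possible pixel pairs. It assumes, as in the entropy calculation, that pixels are i.i.d., so that a pair has probability $p_X(x_1)\,p_X(x_2)$. The actual pair statistics of the image are not given here and would likely differ, since neighboring pixels tend to share the same color. The hypothetical helper `huffman_avg_length` computes the average codeword length as the sum of the weights of all merged (intermediate) nodes. For the single-pixel code this sum is (24 + 46 + 72 + 118 + 512)/512 = 772/512. The trick avoids building the codewords explicitly.

```python
import heapq
import math
from itertools import product

COUNTS = [394, 42, 30, 22, 18, 6]
p = [n / sum(COUNTS) for n in COUNTS]


def huffman_avg_length(probs):
    """Average Huffman codeword length = sum of the weights of all merged nodes."""
    heap = list(probs)
    heapq.heapify(heap)
    avg = 0.0
    while len(heap) > 1:
        a = heapq.heappop(heap)
        b = heapq.heappop(heap)
        avg += a + b  # every leaf below this new node gets one more bit
        heapq.heappush(heap, a + b)
    return avg


H = sum(-q * math.log2(q) for q in p)
avg_single = huffman_avg_length(p)                                     # bits/pixel
avg_pairs = huffman_avg_length([a * b for a, b in product(p, p)]) / 2  # bits/pixel
print(f"H = {H:.4f}, single = {avg_single:.4f}, pairs = {avg_pairs:.4f} bits/pixel")
```

Under the i.i.d. model, the per-pixel length of the pair code can be no worse than the single-pixel code. Concatenating two single-pixel codewords is already a valid pair code, and Huffman's code is optimal. The per-pixel length also stays within 1/2 bit of $H(X)$.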

© 1996–2006, P. Mathys. Last revised: 10-17-06, PM.
