Loss aversion and learning to bid

advertisement

Loss aversion and learning to bid∗

Dennis A.V. Dittricha, Werner Güthb,

Martin G. Kocherc, Paul Pezanis-Christoud

February, 2005

Abstract

Bidding challenges learning theories since experiences with the same bid vary stochastically: the same choice can result in either a gain or a loss. In such an environment the

question arises how the nearly universally documented phenomenon of loss aversion affects the adaptive dynamics. We analyze the impact of loss aversion in a simple auction

for different learning theories. Our experimental results suggest that a version of reinforcement learning which accounts for loss aversion fares as well as more sophisticated

alternatives.

Keywords: loss aversion, bidding, auction, experiment, learning

JEL-Classification: C91, D44, D83

∗ We are grateful for the assistance of Torsten Weiland and Ben Greiner who helped in programming the experiment.

a Max Planck Institute for Research into Economic Systems, Jena; email: dittrich@mpiew-jena.mpg.de

b Max Planck Institute for Research into Economic Systems, Jena; email: gueth@mpiew-jena.mpg.de

c University of Innsbruck; email: Martin.Kocher@uibk.ac.at

d BETA-Theme, Université Louis Pasteur, Strasbourg; email: ppc@cournot.u-strasbg.fr

1. Introduction

There are very rare situations where bidding games, usually referred to as auctions, are dominance solvable, like in the random price mechanism (see Becker, de Groot, and Marshak, 1964)

or in second-price auctions (see Gandenberger, 1961, and Vickrey, 1961). Apart from such special setups bidding poses quite a challenge for learning to bid. According to the usual first-price

rule (the winner is the bidder with the highest bid, and she pays her bid to buy the object), one

has to underbid one’s own value (bid shading) to guarantee a positive profit in case of buying.

Such underbidding may cause feelings of loss when the object has not been bought, although

its price was lower than one’s own value. In a similar vein, even when winning the auction,

feelings of loss may be evoked to think one could also have won by a lower bid and could thus

have achieved a larger profit. Given that the values of the interacting bidders are stochastic,

whether one experiences a loss or a gain is highly stochastic as well.

In this paper we investigate two important issues. First, we are going to reassess the issue

of learning in auctions. Although bidding experiments are numerous in economics (see Kagel,

1995, for an early survey), and quite a few of them provide ample opportunities for learning

(e. g., Garvin and Kagel, 1994; Selten and Buchta, 1998; Armantier, 2002, and Güth et al.,

2003), we believe that, so far, the number of repetitions in these studies has been too low to

fully account for possible learning dynamics. In stochastic environments, however, learning

is typically slow, and the number of repetitions or past bidding experiences are important

dimensions.

Thus, we experimentally implement a rather simple one-bidder contest (Bazerman and

Samuelson, 1983, and Ball, Bazerman, and Carroll, 1991) that allows for a considerable number of repetitions. To directly identify the path dependence of bidding behavior (i. e., why and

how bidding behavior adjusts in the light of past results) without any confounding factors, we

deliberately choose a bidding task that lacks strategic interaction.

Second, we experimentally test for the impact of loss aversion (Kahneman and Tversky,

2

1979; Tversky and Kahneman, 1992) on the learning dynamics in this bidding task.1 Loss aversion is referred to as the behavioral tendency of individuals to weigh losses more heavily than

gains. Obviously, in a first-price auction all possible choices can prove to be a success (yield a

gain) or a failure (imply a loss), at least in retrospect. Therefore, the usual and robust finding

of loss aversion should be reflected in the adaptation dynamics. More specifically, our main

hypothesis on the impact of loss aversion claims that, in absolute terms, an actual loss will

change bidding dispositions more than an equally large gain. To the best of our knowledge,

loss aversion has so far not been considered when specifying learning dynamics in situations

comparable to ours.

In the basic bidding task (originally studied experimentally by Samuelson and Bazerman,

1985, and more recently by Selten, Abbink, and Cox, 2002), to which subjects are exposed

in our experiment, the only bidder and the seller have perfectly correlated values. Put differently, the seller’s valuation is always the same constant and proper share of the bidder’s value.

Whereas the seller knows her value, the bidder only knows how it is randomly generated.

The bid of the potential buyer determines the price if the asset is sold. In our experiment

(like in earlier studies) the seller is captured by a robot strategy accepting only bids exceeding

her evaluation. Thus, the bidder has to anticipate that, whenever her bid is accepted, it must

have exceeded the seller’s valuation. Neglecting this fact may cause a winner’s curse as in standard common-value auctions involving more than one bidder. If the seller’s share or quota in

the bidder’s value is high, such a situation turns out to be a social dilemma: According to the

solution under risk neutrality, the bidder abstains from bidding in spite of the positive but –

due to the high quota – relatively low surplus for all possible values. However, if the quota is

low, the surplus is fully exploited: the optimal bid exceeds the highest valuation of the seller

and thereby guarantees trade. Earlier studies have only focused on the former possibility. In

our experiment each participant, as bidder, repeatedly experiences both low and high quotas.

1 There is ample evidence for loss aversion in both risky and risk-free environments (see Starmer, 2000, for a recent

overview).

3

In a setting where bids can vary continuously it is rather tricky to explore how bidding

behavior is adjusted in the light of past results. Traditional learning models like reinforcement learning, also referred to as stimulus-response dynamics or law of effect (see Bush and

Mosteller, 1955), cannot readily be applied. Therefore, we deviated from earlier studies by offering participants only a binary choice, namely to abstain from or engage in bidding. In order

to observe directly the inclination to abstain or engage, respectively, we allowed participants

to explicitly randomize their choice among the two possible strategies (for earlier experiments

offering explicitly mixed strategies, see Ochs, 1995; Albers and Vogt, 1997; Anderhub, Engelmann, and Güth, 2002).

More specifically, each participant played 500 rounds of the same high- and low-quota

game where a low quota suggests an efficient benchmark solution and a quota an inefficient

one. As mentioned earlier, we consider such extensive experience necessary since learning in

stochastic settings is typically slow. We also wanted sufficiently many loss and gain experiences

for each participant when trying to explore the path dependence of bidding behavior and how

adaptation is influenced by loss aversion.2

The paper proceeds as follows: The next section introduces the basic model in its continuous and its binary forms. Section three derives our hypothesis on the impact of loss aversion on reinforcement learning and on experience-weighted attraction learning, respectively.

In Section four we discuss the details of the experimental design and the laboratory protocol. Section five presents the results for the estimation of the learning models, and Section six

concludes.

2. The model

Before introducing the binary (’mini’-)bidding task, let us briefly discuss its continuous version. Let v ∈ [0, 1] denote the value of the object for the sole bidder who will be the only de2 It is straightforward that the bidding tasks participants are exposed to is an ideal environment to study learning

since other, possibly confounding behavioral determinants like social preferences cannot occur.

4

cision maker in this game. The seller’s evaluation is linearly and perfectly correlated to v, i. e.,

qv with q ∈ (0, 1) and q 6= 1/2. We assume that quota q is commonly known. Let b ∈ [0, 1]

denote the bid. It is assumed that v ∈ [0, 1] is realized according to the uniform density on

[0, 1], that the seller knows v, whereas the bidder only knows how v is randomly generated and

that the object is sold whenever b > qv.

Since the risk neutral buyer earns v − b in case of b > qv and 0 otherwise, her payoff

expectation is

E(v − b) =

2 R

b

1

(v − b) dv = q 2q − 1 for 0 6 b 6 q

b>qv

R1

(v − b) dv = 1/2 − b for q < b < 1.

(1)

0

Thus, any positive bid b for b 6 q < 1/2 implies a positive expected profit, and the optimal

bid b∗ depends on q via

b∗ = b∗ (q) =

q for 0 < q < 1/2

(2)

0 for 1/2 < q < 1

so that E(v − b∗ ) = 0 for 1/2 < q < 1 and E(v − b∗ ) = 1/2 − q for 0 < q < 1/2, since q is the

highest possible evaluation by the seller. In case of q > 1/2, the positive expected surplus of

Z1

(1 − q)v dv =

1−q

2

(3)

0

is lost, whereas in case of q < 1/2 it is fully exploited.

In the mini-version, used for the experimental implementation, the assumption b ∈ [0, 1]

is substituted for b ∈ {0, B} with 0 < B < q. More specifically, in the experiment all participants will repeatedly encounter two bidding tasks, namely the case of q = q with 0 < q < 1/2

for which b∗ = B and the case of q = q with 1/2 < q < 1 for which b∗ = 0. Actually, it will be

5

assumed that the same B < q(1 + q)−1 < q applies regardless whether q = q or q = q. Thus,

the two bidding alternatives do not vary with q, and even in case of q = q, bidding b = B

does not guarantee trade and thereby efficiency.

Let us finally investigate the stochastic payoff effects for bidding b = 0 or b = B. In case

of b = 0, the bidder never buys. However, since she will be informed about v, she should in

retrospect understand that, compared to b = B, her past bid b = 0 is justified retrospectively

if B > v, which happens with probability P0+ = B. If, on the other hand, v > B > qv, which

happens with probability P0− = (B/q) − B, having bid b = 0 counts as a loss of v − B in

retrospect. Finally, none of the two applies if there is no trade even if b = B, i. e. if qv > B

which occurs with probability P0n = 1 − (B/q).

Similarly, bidding b = B implies a success of v − B if v > B > qv, what happens with

+

−

probability PB

= B/q − B, and a loss of v − B if B > v, which occurs with probability PB

= B.

n

In case qv > B, which happens with probability PB

= 1 − (B/q), trade is excluded. The

−

+

, P0− = PB

and

assumption 0 < B < q(1 + q)−1 guarantees that all probabilities P0+ = PB

n

P0n = PB

= Pn are positive. Thus, regardless whether q = q or q = q applies, bidding b = 0

or b = B implies positive, negative as well as neutral experiences (at least in retrospect).

3. Prospect theory, learning, and loss aversion

The above-described environment, simple as it may seem at first sight, poses quite a challenge

for learning theories. Obviously, whatever the bidder chooses, she can in retrospect experience

both an encouragement and regret with positive probability. As already mentioned, we are

interested in two specific research questions: (i) Will regret be more decisive than positive

encouragement as suggested by (myopic) loss aversion (see Benartzi and Thaler, 1995, as well

as Gneezy and Potters, 1997)? (ii) Will learning bring about an adjustment to bidding b = 0

when q = q and b = B when q = q?

6

3.1. Prospect theory

For a rigorous investigation of those questions, it is necessary to specify in detail how (myopic) loss aversion should be accounted for in learning theories where, of course, the fact of

repeatedly confronting the same or a similar task might weaken or even question the idea of

loss aversion. Let us, however, first derive the benchmark solution based on prospect theory

claiming risk aversion in the gain region and risk seeking in the loss region (Kahneman and

Tversky, 1979, and Tversky and Kahneman, 1992). To limit the number of parameters, assume

V(m) =

mα for a gain m > 0

(4)

−K(−m)α for a loss m < 0

where K(> 1) captures the stronger concern for losses than for gains and α ∈ [0, 1] the bidder’s

constant relative risk attitude.

For the continuous version of our bidding task this yields

Z

Z

α

(b − v)α dv.

(v − b) dv − K

V=

v>b>qv

(5)

b>qv

b>v

Solving the integrals, one obtains

V=

(1 − q)α+1 − qα+1 K α+1

b .

(α + 1)qα+1

(6)

Thus, the optimal bid b+ is

b+ =

0 if K > (1 − q)α+1 q−(α+1)

(7)

q otherwise.

In experimental economics one usually observes a K-parameter close to K = 2 (see Langer

7

and Weber, forthcoming, for a discussion).3 For such a value and the experimental specifications of q that we will use, the condition K > (1 − q)α+1 q−(α+1) always applies. Prospect

theory can therefore account for abstaining from bidding independently of the experimentally

used q-values, but not for general bidding activity.

3.2. Reinforcement learning

In general, reinforcement learning relies on the cognitive assumption that what has been good

in the past, will also be good in the future. In such a context, one does not have to be aware of

the decision environment except for an implicit stationarity assumption justifying that earlier

experiences are a reasonable indicator of future success.

Our decision environment implies straightforwardly that subject’s behavior is not only

influenced by actual gains and losses but also by retrospective gains and losses. These can be

derived unambiguously when, after each round, the bidder learns about the value v ∈ [0, 1],

regardless whether the object has been bought or not.

For t = 1, 2, . . . let Ct (0) denote the accumulated retrospective gains from bidding b = 0

by

Ct (0) = δ0t (B − vt ) − ρ0t k(vt − B) + dCt−1 (0)

(8)

where d with 0 < d < 1 discounts earlier experiences and k > 1 captures the idea that

retrospective losses (if vt > B) of bidding b = 0 matter more than retrospective gains (if

B > vt ). Here, of course, δ0t = 1 if B > vt and δ0t = 0 otherwise, and ρ0t = 1 if vt > B > qvt

and ρ0t = 0 otherwise. Similarly,

B

Ct (B) = δB

t (vt − B) − ρt k(B − vt ) + dCt−1 (B)

(9)

3 Benartzi and Thaler (1995) show that for a K-parameter close to K = 2, the empirically observed equity premium

would be consistent with an annual evaluation of investments.

8

is the accumulated realized gains from bidding b = B, where δB

t = 1 if vt > B > qvt and

B

B

δB

t = 0 otherwise, as well as ρt = 1 if B > vt and ρt = 0 otherwise.

In the tradition of reinforcement learning, these accumulated gains are assumed to determine the probabilistic choice behavior (see Bush and Mosteller, 1955; Roth and Erev, 1996;

Camerer and Ho, 1999, and Ho, Camerer, and Chong, 2002). If Pt denotes the probability of

selecting bt = B in round t and bt = 0 is chosen with probability 1 − Pt , we postulate

Pt =

exp(Γt )

1 + exp(Γt )

(10)

with

and

Γt = β0 + β1 I(q, q)Ct + β2 I(q, q)Ct

Ct = Ct (B) + αCt (0),

where I(x, y) is an indicator function that equals zero if x 6= y and 1 if x = y. For all βi as

well as for α and the actual choice sequence before round t, i. e. for {(bτ , vτ )}τ=1,...,t−1 for all

rounds t = 1, 2, . . ., the condition Pt ∈ [0, 1] is always satisfied. Thus the parameters to be

estimated are βi , α, d(∈ [0, 1]), and k(> 1).

3.3. Functional experience-weighted attraction learning and loss aversion

Ho, Camerer, and Chong (2002) proposed a one-parameter theory of learning in games that

appears to be especially suitable for our environment: Functional experience-weighted attraction (fEWA) is supposed to predict the path dependence of individual behavior in any normalform game and takes into account both past experience and expectations. Ho, Camerer, and

Chong (2002) claim themselves that it can be easily extended to extensive form games and

games with incomplete information. In the following, we describe how we adapted their model

to fit it to our experimental setting and to incorporate loss aversion.

9

Denote a bidder’s jth choice by sj , her round t choice st , and her payoff by π(sj ). Let

I(x, y) be an indicator function that equals zero if x 6= y and 1 if x = y. Using the notation

of Ho, Camerer, and Chong (2002), a bidder’s attraction Ajt for choice j at time t can then be

defined as

Ajt

φNt−1 Ajt−1 + [δ + (1 − δ)I(sj , st )]πjt (sj )

=

Nt−1 φ(1 − κt ) + 1

(11)

where Nt = Nt−1 φ(1 − κt ) + 1. Here κ represents an exploitation parameter that is defined as a normalized Gini coefficient on the choice frequencies, which is introduced to control

the growth rate of attractions. For the case at hand we will either set it equal to 1 or compute it

for each q-regime separately. The parameter φ reflects profit discounting or, equivalently, the

decay of previous attractions due to forgetting. It is analogous to the d parameter of equation

(9) of our reinforcement learning model and will be freely estimated, as in the original EWA

model of Camerer and Ho (1999). The δ parameter is the weight placed on retrospective gains

and losses (foregone payoffs) and we assume that it is either equal to 1 or to φ. Being fully responsive to foregone payoffs, i.e., δ = 1, implies that the decision maker’s behavior is not only

reinforced but rather driven by her payoff expectations, which suggests a thorough cognitive

retrospective analysis of bidding behavior. By tying it to the decay of previous attractions, we

are going to relax this assumption in the heuristic way proposed by Ho, Camerer, and Chong

(2002).

To augment the model in equation (11) by loss aversion, we introduce a loss weight l,

and we define by vt the realized losses or non-realized gains at time t, depending on whether

bidding B generated a loss. The bidder’s attraction AB

t for the choice b = B at time t then

becomes

10

AB

t =

B

B

B

φNt−1 AB

t−1 + [δ + (1 − δ)I(s , st )]πt + l[δ + (1 − δ)I(s , st )]vt

.

Nt−1 φ(1 − κt ) + 1

(12)

With such a specification, a loss weight of l = 0 indicates that losses influence the probability of choosing b = B to the same extent as gains. The probabilistic choice behavior is then

assumed to follow

Pt =

exp(Γt )

1 + exp(Γt )

(13)

with

B

Γt = β0 + β1 I(q, q)AB

t + β2 I(q, q)At .

Thus, the parameters to be estimated are βi , φ(∈ [0, 1]), and l(> 0).

Notice that, in contrast to the reinforcement learning model presented in the previous

section, the fEWA model accounts for retrospective gains and losses from having bid B but

cannot account for those from having bid 0, i. e., abstaining.

4. Laboratory protocol

Participants in the experiments were instructed that there were two roles to be assigned in

the experiment. They themselves would act as buyers, and the computer would follow a fixed

robot strategy as a seller. The instructions informed participants about parameter values, the

distribution of the values, and the rule followed by the robot seller.4 We chose B = 25 and a

4 A transcript of the German instructions can be found in the Appendix.

11

uniformly distributed value v ∈ [0, 100] as well as q = 0.6 and q = 0.4. For such parameters,

it is optimal to always bid b = B if q = 0.4 and to bid b = 0 if q = 0.6.

To observe propensities Pt for bidding b = B, we directly elicited mixed strategies by

asking participants to allocate x0 of 10 balls to the b = 0-box and the remaining 10 − x0

balls in the b = B-box, where, of course, 0 6 x0 6 10 must hold. The advantage is that

one avoids the randomness of the actual choice between bt = 0 and bt = B when testing

for each individual participant how well the adaption dynamics postulated in (10) capture her

idiosyncratic learning behavior.

In spite of the 500 rounds of play, the whole experiment took less than two hours to complete, which included the time needed to read the instructions. As already mentioned, we allowed for a sufficiently large number of repetitions to be able to test for learning effects since

the assessment of loss aversion in a stochastic environment obviously requires many repetitions. At the end of each round, participants were informed about the value v, whether trade

occurred, and about earnings for both possible choices, i. e., for b = B and b = 0. The seller’s

quota, q = 0.4 or q = 0.6, was displayed on each decision screen and changed every ten

rounds.

The conversion rate from experimental points to euros (1:200) was announced in the instructions. Participants received an initial endowment of 1500 experimental points (¤ 7.50)

to avoid bankruptcy during the experiment. As a matter of fact, such a case never occured.

Overall, 42 subjects participated in the two sessions that we conducted. The experiment was

computerized using z-tree (Fischbacher, 1999) and was conducted with students from various

faculties of the University of Jena.

5. Experimental results

We start by presenting some descriptive results on subjects’ behavior. We then discuss the

econometric estimations of the loss aversion parameter within our reinforcement learning

12

framework and within the more elaborate experience-weighted attraction model à la Ho, Camerer,

and Chong (2002).

5.1. Descriptive results

O 1

Only 4 (out of 42) subjects always played the optimal pure strategy when q =

0.4, and only 2 subjects always played the optimal pure strategy of bidding 0 when q = 0.6. No

single participant chose the optimal strategy in any round. Subjects performed significantly better

when q = 0.4 than when q = 0.6.

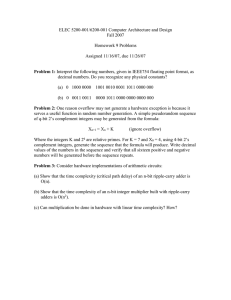

Table 1 plots the 500 decisions of each participant together with the individual average

deviations from optimal choices. Black dots represent actual choices, grey bars indicate deviations from the normative solution. Mean deviations from the normative solution in columns

three and four of Table 1 are computed for each block of ten rounds. The table allows discerning straightforwardly how subjects fare in the experiment in comparison to the theoretical

prediction and to what extent deviations from the normative solution decline over time, i. e.,

to what extent learning occurres. As can be seen rather easily, a few participants play optimally

almost always when q = 0.6 (i. e., subjects 2, 19, 26, and 39), and a few more do so when

q = 0.4 (i. e., subjects 2, 9, 19, 26, 27, 28, and 39).

5

In general, mean deviations from the

normative solution are significantly larger for the q-regime than for the q-regime for most of

the subjects, which indicates a behavioral tendency to bid even if it is not optimal. This bias toward bidding resembles the well-documented phenomenon of the winner’s curse in standard

first-price auctions. Another obvious regularity of the bidding data – that will have to be taken

into account in the estimation of our learning models – is a considerable heterogeneity across

subjects.

In order to give an impression of the strategies played by our subjects, Figure 1 shows the

relative frequency of probability choices (0,0.1,0.2,0.3,0.4,0.5,0.6,0.7,0.8,0.9,1) contingent on

5 Obviously, those observations will have to be dropped when estimating learning dynamics. Note that subject 19

did not manage to play the last 100 rounds in the given time for the experiment.

13

0.6

0.4

0.2

0.0

Relative Frequency

q = 0.4

q = 0.6

0

10

20

30

40

50

60

70

80

90 100

Probability of Bidding

Figure 1: Relative frequency of probability choices

Table 1: Choices and Deviations from the Normative Prediction

Subject

ID

Choices

1

Round

Mean deviation

500

1

2

3

4

5

6

7

8

9

10

11

12

14

q

q

Table 1: Choices and Deviations from the Normative Prediction

Subject

ID

Choices

1

Round

Mean deviation

500

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

15

q

q

Table 1: Choices and Deviations from the Normative Prediction

Subject

ID

Choices

1

Round

Mean deviation

500

q

q

35

36

37

38

39

40

41

42

Note:

Black dots represent actual choices, grey bars are deviations from the normative solution. Mean deviations from the normative solution in columns

three and four are computed for each block of ten rounds.

q. When q = 0.4, the optimal pure strategy of bidding B represents 58 % of all probability

choices, whereas the zero bid option represents only 3 % of them. When q = 0.6, the optimal

zero bid strategy represents 18 % of all probability choices. The fact that in this treatment 34 %

of all probability choices are “Bidding B for sure” either indicates that participants did not

perceive the expected revenue implications of such a choice, or that they were extremely risk

seeking.

To evaluate the degree of normative optimality of a bidder’s choices from a behavioral

point of view, we denote by fqa the frequency of probability choices a = 0, 0.1, 0.2, . . . , 1 in

regime q. We then measure her performance by the percentage sq of optimal choices in 250

bidding rounds for each q-regime, that is:

16

1 X 0.4

=

f

·a

25000 a=0 100a

1

s0.4

1 X 0.6

=1−

f

·a

25000 a=0 100a

1

and s0.6

(14)

0.4

s0.4 takes its minimum value (0) when f0.4

0 = 250 and its maximum value (1) when f1 =

250. Likewise, s0.6 takes its minimum value (0) when f0.6

1 = 0 and its maximum value (1) when

f0.6

0 = 250. Finally, we define a bidder’s overall performance index S ∈ [0; 1] as

1

1

S= +

2 50000

1

X

f0.4

100a · a −

a=0

1

X

!

f0.6

100a · a .

(15)

a=0

Figure 2 reports the distributions of s0.4 , s0.6 , and S. The average of s0.4 is 0.79 (s.d.: 0.19)

whereas s0.6 averages at 0.41 (s.d.: 0.28). The difference is significant at α < .001 according to a

one-tailed Wilcoxon Rank Sum test, confirming the visual expression from Table 1 that choices

are closer to the theoretical prediction in case of q = 0.4. This difference in performance is

also reflected in the average earnings under the two regimes: they are equal to 825.76 points

when q = 0.4 (s.d.: 223.96; Min: 269; Max: 1274) and to -213.66 points when q = 0.6 (s.d.:

127.985; Min: -547; Max: 20).

O 2

On average, subjects performed significantly better in the last rather than in

the first 100 rounds of the experiment. This holds for both q-regimes. In the last 100 rounds, four

bidders always used the optimal pure strategy in each q-regime.

Figures 3 and 4 report the same data as 1 but only for the first and last 100 rounds of

play (each of these 100 rounds are made up of 50 rounds with q = 0.4 and 50 rounds with

q = 0.6). In the last 100 rounds, 24 % of participants (ten bidders) always played the optimal

strategy when q = 0.4, and 10 % of participants (four bidders) did so when q = 0.6. The

latter outcome is in line with the 7 % reported by Ball, Bazerman, and Carroll (1991) for a

game that corresponds to our q = 0.6-regime which spanned only 20 rounds and for which

17

1.0

0.8

0.6

0.4

0.2

0.0

Cumulative Density

Performance for q = 0.4

Performance for q = 0.6

Performance for all q

0.2

0.4

0.6

0.8

1.0

Performance Score

Figure 2: Cumulative density of performance indices

no significant change in behavior was found. The performance indices sq are also significantly

greater in the last 100 rounds in both q-regimes (p < 0.001, according to Wilcoxon Rank

Sum tests). In fact, the relative frequency of "bidding B for sure" increases by 16.25 percentage

points between the first and last 100 rounds when q = 0.4, whereas those of "bidding 0 for

sure" when q = 0.6 increase by a smaller degree, i. e., 1.6 percentage points.

The average overall performance index S is equal to 0.597 for the first 100 rounds and

increases significantly (p = 0.009) to 0.648 for the last 100 rounds so that in the aggregate,

behavior gets closer to the optimum as the experiment proceeds. Nevertheless, as only four

bidders always use the optimal strategy in both q-regimes, the well-established pattern of the

winner’s curse still remains predominant.

18

0.6

0.4

0.2

0.0

Relative Frequency

First 100 Periods

Last 100 Periods

0

10

20

30

40

50

60

70

80

90 100

Probability of Bidding

0.6

0.2

0.4

First 100 Periods

Last 100 Periods

0.0

Relative Frequency

Figure 3: Relative frequency of probability choices during first and last 100 periods for q = 0.4

0

10

20

30

40

50

60

70

80

90 100

Probability of Bidding

Figure 4: Relative frequency of probability choices during first and last 100 periods for q = 0.6

5.2. Estimation of the learning functions and loss aversion parameter

As learning patterns are obviously rather heterogeneous in our population of 42 bidders, and

as we have data on individual behavior for a sufficiently large number of rounds, we estimate learning models for each participant separately. Clearly, estimating a random coefficients

model would also be possible, but this would (i) require specific assumptions on the distribution of each random coefficient and (ii) would not enable us to identify and assess individual

learning behavior and loss aversion.

19

Estimation is done by minimizing residual deviance.

2

Xh

i

Pt (log Pt − log P̂t ) + (1 − Pt )(log(1 − Pt ) − log(1 − P̂t ))

(16)

t

where Pt denotes the observed probability of bidding b = B and P̂t the expected probability

of bidding b = B from either (10) or (13).6

5.2.1. Reinforcement learning with retrospective loss aversion

In a first model, there is no loss aversion and no retrospective evaluation of bidding 0 so that

in (10), k = 1 and α = 0. We then reestimate (10) with no restriction on α. Finally, we allow

for loss aversion by estimating (10) with no restriction on both k and α. The estimated three

models (Model 1, 2, and 3, respectively) are reported in Tables 7, 8, and 9 in the Appendix.

Table 2 summarizes the explanatory power of retrospective gains and losses from "bid 0" by

comparing the outcomes of Model 2 to those of Model 1. For this comparison we discarded the

data of ten individuals for whom the estimation procedure could not converge due to a lack of

variation (i.e., individuals who did not learn anything during the experiment). A joint test on

the comparisons of the 32 remaining individuals indicates that including retrospective gains

and losses, as defined by equation 8, improves the model’s explanatory power significantly.

Including a loss aversion parameter in the reinforcement learning model yields a significant

improvement in terms of the model’s goodness-of-fit, which is confirmed by the joint test

comparing Models 2 and 3.

Table 3 reports some summary statistics for the main parameters. They were generated

by taking into account only those individuals for whom the model likelihood ratio test was

significant at the 1 % level, i. e. for 21 subjects (see Table 9). As can be easily discerned in

Table 3, the estimate of the profit discounting factor d is slightly smaller than 1, meaning that

6 The R procedures (see R Development Core Team, 2004) that were used for the estimation can be obtained from

the authors upon request.

20

Table 2: Reinforcement Learning Model Comparison

Model 1 vs. 2

α = 0 vs. α 6= 0

ID ∆ Residual deviance p-value

1

3

4

5

6

7

10

11

12

13

14

15

16

17

18

20

21

22

24

25

29

30

31

32

33

34

35

36

38

40

41

42

Joint test

Model 2 vs. 3

k = 1 vs. k 6= 1

∆ Residual deviance p-value

0.1544

0.2946

0.2704

0.0726

0.1058

4.9449

0.5342

0.2902

18.4784

0.0579

0.8995

1.6431

8.8800

6.0536

0.7973

0.1099

6.1838

0.0972

8.1094

21.7328

0.4391

1.1104

16.0136

7.0901

2.3987

6.9365

3.9780

3.2591

15.9563

0.8099

0.7655

2.5330

0.6944

0.5873

0.6030

0.7876

0.7449

0.0262

0.4649

0.5901

<0.0001

0.8098

0.3429

0.1999

0.0029

0.0139

0.3719

0.7403

0.0129

0.7553

0.0044

<0.0001

0.5075

0.2920

0.0001

0.0078

0.1214

0.0084

0.0461

0.0710

0.0001

0.3682

0.3816

0.1115

0.0002

0.2025

0.4491

0.6274

1.8389

15.9013

3.7280

0.2431

6.1161

0.3114

0.0019

0.0111

0.1401

5.7064

7.4571

0.0506

1.4109

0.0057

4.3111

0.1576

5.0221

0.0331

23.5405

7.2050

15.959

0.1089

1.8963

1.2497

2.6395

15.9504

10.9593

0.7135

0.9898

0.6527

0.5028

0.4283

0.1751

0.0001

0.0535

0.6220

0.0134

0.5768

0.9649

0.9159

0.7082

0.0169

0.0063

0.8220

0.2349

0.9398

0.0379

0.6914

0.0250

0.8555

<0.0001

0.0073

0.0001

0.7414

0.1685

0.2636

0.1042

0.0001

0.0009

0.3983

140.9999

<0.0001

133.9478

<0.0001

21

5

Table 3: Summary Statistics for the Final Reinforcement Learning Model (Model 3)

3

2

1

0.995

0.823

0.894

0.954

0

Median

Mean

Trimmed mean (90%)

Trimmed mean (80%)

Density

4

d

−0.2

1.073

61.348

0.973

0.868

0.2

0.1

Density

k

0

2

4

6

0.0 0.1 0.2 0.3 0.4

−2

Median

Mean

Trimmed mean (90%)

Trimmed mean (80%)

1.0

0.0

0.071

0.897

0.082

0.124

Density

Median

Mean

Trimmed mean (90%)

Trimmed mean (80%)

0.6

0.3

α

0.2

−2

0

2

4

6

8

participants tend to discount only moderately, i. e., they account for the entire history when

making a decision. This is quite reasonable since the experimental environment has been rather

stable (except for the q-switches).

The parameter α is usually positive suggesting that retrospective gains and losses from “bid

0” in the previous round actually affect learning. However, given the spread of the estimates,

there is a lot of heterogeneity among subjects. Finally, we find no strong evidence of a loss

aversion parameter k > 1 as its median value is greater but almost equal to 1 and its trimmed

mean is smaller than 1. The considerable discrepancy between the median and the mean values

of k suggests again an important heterogeneity in behavior.

5.2.2. Experience-weighted attraction models with(out) loss aversion

We now analyze loss aversion within the experience-weighted attraction framework. To this

end, we estimate intermediate models with various restrictions on the parameters to assess

22

their separate impact on learning. In the first model, the loss aversion parameter l is restricted

to l = 0, δ to δ = 1 and κ to κ = 1. This reduces the EWA model to a reinforcement learning

model with profit discounting and equal weights of actual and foregone payoffs. We will refer

to this model as Model 1’. We then relax the restriction on the loss aversion parameter (Model

2’). In Models 3’ and 4’, we estimate a version of the fEWA model proposed by Ho, Camerer,

and Chong (2002) that uses the functional form of κ (a Gini coefficient on choice frequencies)

and, given the stable strategic environment of our individual decision-making experiment,

with the additional restriction that δ = φ. The loss aversion parameter l is restricted to l = 0

in Model 3’ and is estimated freely in Model 4’. Estimation results are reported in Tables 10 to

13 in the Appendix. A summary of the explanatory power of loss aversion for learning to bid

is reported in Table 4.

After discarding the data of ten individuals for whom the EWA estimation procedures did

not converge, we find that including loss aversion in an EWA-type of reinforcement learning

model significantly increases the model’s goodness of fit (Model 1’ vs Model 2’). A similar conlusion can be reached when the growth rate of the attraction of bidding B is controlled by κ

(Model 3’ vs Model 4’). The statistics on the estimated parameters are reported in Tables 5 and

6 and confirm our previous finding that participants tend to discount past profits only moderately. Interestingly, we now find that the loss aversion parameter l is mostly positive, thereby

indicating that individuals tend to react more strongly to losses than to gains. Compared to the

more mitigated outcome of our previous model (where the loss aversion parameter accounted

for actual and retrospective losses from both bidding and not bidding), we conclude that although the inclusion of a loss aversion parameter significantly improves the goodness of fit in

both types of models, it is aversion to actual losses from bidding B that matters most in this

bidding game.

Note that Model 4’ uses more data than Model 2’ (i. e., it includes retrospective gains and

losses), although it has the same number of freely estimated parameters. Surprisingly, a pair-

23

Table 4: fEWA Model Comparison

Model 1’ vs. 2’

l = 0 vs. l 6= 0

ID ∆ Residual deviance p-value

1

3

4

5

6

7

10

11

12

13

14

15

16

17

18

20

21

22

24

25

29

30

31

32

33

34

35

36

38

40

41

42

Joint test

Model 3’ vs. 4’

l = 0 vs. l 6= 0

∆ Residual deviance p-value

1.2283

1.4229

4.6326

3.5452

4.3095

0.0096

15.8465

0.3823

9.4439

5.6123

0.0263

0.0096

0.0686

28.3936

3.7105

0.1298

24.5608

0.0000

11.8696

11.1497

17.2196

1.9858

1.0861

10.3973

22.8017

6.2305

1.4409

5.4314

33.6736

2.9117

12.4242

1.1212

0.2677

0.2329

0.0314

0.0597

0.0379

0.9219

0.0001

0.5364

0.0021

0.0178

0.8711

0.9221

0.7934

<0.0001

0.0541

0.7187

<0.0001

0.9999

0.0006

0.0008

<0.0001

0.1588

0.2973

0.0013

<0.0001

0.0126

0.2300

0.0198

<0.0001

0.0879

0.0004

0.2897

0.0986

0.4269

2.8800

3.0013

4.8293

0.1759

11.4128

0.3521

9.2802

0.6737

0.0147

11.1774

2.0021

28.5713

3.3562

0.0817

24.2655

0.0635

10.7218

18.1861

18.3205

2.2000

1.3126

10.9121

26.2552

4.3010

1.3047

5.475

33.3827

4.945

13.2883

1.0561

0.7535

0.5135

0.0897

0.0832

0.0280

0.6749

0.0007

0.5529

0.0023

0.4118

0.9034

0.0008

0.1571

<0.0001

0.0670

0.7751

<0.0001

0.8010

0.0011

<0.0001

<0.0001

0.1380

0.2519

0.0010

<0.0001

0.0381

0.2534

0.0193

<0.0001

0.0262

0.0003

0.3041

243.0757

<0.0001

254.3245

<0.0001

24

4

Table 5: Summary Statistics for the Final fEWA Model 2’

2

1

0.993

0.795

0.858

0.930

0

Median

Mean

Trimmed mean (90%)

Trimmed mean (80%)

Density

3

φ

0.0

1.2

0.2

0.0

0.289

-0.381

0.288

0.316

Density

Median

Mean

Trimmed mean (90%)

Trimmed mean (80%)

0.8

0.4

l

0.4

−2

0

1

2

3

Median

Mean

Trimmed mean (90%)

Trimmed mean (80%)

0.992

0.834

0.893

0.953

Density

φ

0 1 2 3 4 5 6 7

Table 6: Summary Statistics for the Final fEWA Model 4’

Median

Mean

Trimmed mean (90%)

Trimmed mean (80%)

0.285

0.115

0.321

0.312

Density

l

0.4

0.8

1.2

0.00 0.10 0.20 0.30

0.0

−2

0

1

2

3

wise comparison of the residual deviances reveals no improvement over Model 2’ (t-test, onesided, p = 0.211). Thus, the simpler reinforcement-type learning models capture the learning

dynamics as effectively as the more sophisticated fEWA versions do.

6. Conclusion

One of the most robust findings of auction experiments is the winner’s curse in common

value auctions. However, all reasonable adaptation or learning dynamics suggest that ’winners’

25

should learn to update their profit expectations more properly. In the simple bilateral trade

situation at hand, the winner’s curse would mean that the buyer neglects that the price of B

will be accepted only if it exceeds the seller’s evaluation of the asset (qv). We designed our

experiment so that the buyer’s optimal strategy, in terms of expected revenues, is to abstain

from bidding B when the seller’s quota (q) is high, and to bid B with probability one when it

is low.

Although we have provided ample opportunity for learning (250 rounds for each q-regime),

bidding B with a positive probability when the seller’s quota is high remains a strong pattern

throughout the experiment and weakens only slightly over time. In contrast, learning to bid B

with probability one when the seller’s quota is low was much faster. This could be partly due to

an efficiency motive. Efficiency always requires trade if the seller were real. For the real bidder,

the bid B generates for any possible positive v a larger surplus ((1−q)v) when the seller’s quota

is low than when it is high. As a consequence, bidding B is more profitable for lower quotas

than for higher ones, and is therefore more strongly reinforced.

In general, the bidding task seems a far greater challenge for learning models. There is

considerable individual heterogeneity of learning dynamics. Moreover, there is a small fraction

of people who almost instantaneously converge to optimal bidding. Lastly, learning to avoid

the winner’s curse is, if it exists at all, very weak. Either the participants understand sooner or

later that bidding is dangerous when q is high, or they never recognize it clearly and are only

slightly discouraged by (the more frequent) loss experiences.

Altogether, the important conclusions concerning learning are that loss aversion significantly influences the learning dynamics, both in a simple reinforcement learning model and in

a more sophisticated fEWA model, and that individual parameter estimates are mostly in line

with the loss aversion hypothesis when the model tested accounts for actual and retrospective

losses, as is the case of fEWA. However, in terms of goodness of fit, this model does not explain the behavioral adjustments in the bidding task better than reinforcement learning does.

26

Finally, retrospective gains and losses have a limited influence on learning to bid.

References

Albers W. and B. Vogt, 1997, The selection of mixed strategies in 2x2 bimatrix games, Working

Paper No. 286, Institute of Mathematical Economics, Bielefeld University.

Anderhub V., D. Engelmann and W. Güth , 2002, An experimental study of the repeated

trust game with incomplete information, Journal of Economic Behavior and Organization,

vol. 48, 197-216.

Armantier O., 2004, Does observation influence learning? Games and Economic Behavior,

vol. 46, 221-239.

Avrahami J., W. Güth and Y. Kareev, 2001, Predating predators: An experimental study,

Homo Oeconomicus, vol. 18, 337-352.

Becker G.M., M.H. de Groot and J. Marshak, 1964, Measuring utility by a single-response

sequential method, Behavioral Science, vol. 9, 226-232.

Ball S.B., M.H. Bazerman and J.S. Carroll , 1991, An evaluation of learning in the bilateral

winner’s curse, Organizational Behavior and Human Decision Processes, vol. 48, 1-22.

Bazerman M.H. and W.F. Samuelson, 1983, I won the auction but don’t want the prize,

Journal of Conflict Resolution, vol. 27, 618-634.

Benartzi S. and R.H. Thaler, 1995, Myopic loss aversion and the equity premium puzzle,

Quarterly Journal of Economics, vol. 110, 73-92.

Bush R. and F. Mosteller, 1955, Stochastic models for learning, New York: Wiley.

Camerer C. and T.-K. Ho, 1999, Experience-weighted attraction (EWA) learning in normalform games, Econometrica, vol. 67, 827-873.

Fischbacher U., 1999, z-Tree – Zurich Toolbox for readymade economic experiments –

Experimenter’s manual, WP 21, Institute for Empirical Research in Economics, University of

Zurich.

27

Gandenberger O., 1961, Die Ausschreibung, Heidelberg: Quelle und Meyer.

Garvin S. and J. Kagel, 1994, Learning in common value auctions: Some initial observations, Journal of Economic Organization and Behavior, vol. 25, 351-372.

Gneezy U. and J. Potters, 1997, An experiment on risk taking and evaluation periods,

Quarterly Journal of Economics, vol. 112, 631-645.

Güth W., R. Ivanova-Stenzel, M. Königstein and M. Strobel, 2003, Learning to bid – An

experimental study of bid function adjustments in auctions and fair division games, Economic

Journal, vol. 103, 477-494.

Ho T.-K., C. Camerer and J.-K. Chong, 2002, Functional EWA: A one-parameter theory of

learning in games, mimeo, California Institute of Technology.

Kagel J., 1995, Auctions: A survey of experimental research; in J. Kagel and A. Roth (eds.),

The Handbook of Experimental Economics, Cambridge, MA: MIT Press.

Kahneman D. and A. Tversky, 1979, Prospect theory: An analysis of decision under risk,

Econometrica, vol. 47, 263-91.

Langer T. and M. Weber, (forthcoming), Myopic prospect theory vs myopic loss aversion,

Journal of Economic Behavior and Organization.

Ochs J., 1995, Games with unique, mixed strategy equilibria: An experimental study, Games

and Economic Behavior, vol. 10, 202-217.

R Development Core Team, 2004, R: A language and environment for statistical computing, Vienna: R Foundation for Statistical Computing.

Roth A.E. and I. Erev, 1998, Predicting how people play games: Reinforcement learning in

experimental games with unique, mixed strategy equilibria, American Economic Review, vol.

88, 848-880.

Samuelson W.F. and M.H. Bazerman , 1985, The winner’s curse in bilateral negotiations; in

V.L. Smith (ed.): Research in Experimental Economics, Greenwich: JAI Press, vol. 3, 105-137.

28

Starmer C., 2000, Developments in non-expected utility theory: The hunt for a descriptive

theory of choice under risk, Journal of Economic Literature, vol. 38, 332-382.

Selten R., K. Abbink and R. Cox, 2002, Learning direction theory and the winner’s curse,

Discussion Paper 10/2001, Department of Economics, University of Bonn.

Selten R. and J. Buchta, 1998, Experimental sealed bid first price auctions with directly

observed bid functions; in O.D. Budescu, I. Erev and R. Zwick (eds.), Games and Human

Behavior – Essays in Honor of Amon Rapoport, Mahwah (N.J.): Lawrence Erlbaum Ass.

Tversky A. and D. Kahneman, 1992, Advances in prospect theory: Cumulative representation of uncertainty. Journal of Risk and Uncertainty, vol. 5, 297-323.

Vickrey W., 1961, Counterspeculation, auctions, and competitive sealed tenders, Journal

of Finance, vol. 16, 8-37.

A. Appendix

A.1. Instructions (originally in German; not necessarily for publication)

Welcome to the experiment!

Please do not speak with other participants from now on.

Instructions

This experiment is designed to study decision making. You can earn ‘real’ money, which

will be paid to you in cash at the end of the experiment. The ‘experimental currency unit’ will

be ‘experimental points (EP)’. EP will be converted to euros according to the exchange rate

in the instructions. During the experiment you and other participants will be asked to take

decisions. Your decisions will determine your earnings according to the instructions. All participants receive identical instructions. If you have any questions after reading the instructions,

raise your hands. One of the experimenters will come to you and answer them privately. The

experiment will last for 2 hours maximum.

Roles

29

You are in the role of the buyer; the role of the seller is captured by a robot strategy of the

computer. The computer follows an easy strategy that will be fully described below.

Stages

At the beginning of each round you can decide whether you want to ‘bid’ for a good or

abstain. For this you can apply a special procedure that we will introduce in a moment. Your

bid B is always 25 EP. The value of the good v will be determined independently and by chance

move in every round. v is always between (and including) 0 EP and 100 EP. The chosen values

are equally distributed, which means that every integer number between (and including) 0 EP

and 100 EP is the value of the good with equal probability. The computer as the seller only sells

the good when the bid is higher than the value multiplied by a factor q.

Mathematically, the computer only sells if B > qv.

The factor q takes on the values 0.4 or 0.6. At the beginning of each round you will be

informed about the value of q in that round. Every 10 rounds q changes. Keep in mind that

you are the only potential buyer of the good. Therefore, this is a very special case of an auction.

Special procedure to choose to bid or to abstain

The choice between bidding or abstaining is made by assigning balls to the two possibilities. You have 10 balls that have to be assigned to the two possibilities. The number of assigned

balls corresponds with the probability that a possibility is chosen. Thus, each ball corresponds

with a probability of 10 percent. All possible distributions of the 10 balls to the two possibilities

are allowed.

Information at the end of each round

At the end of each round you receive information on the value v, whether the good was

sold by the computer (or whether the good would have been sold in case you had bid). Additionally, you see your round profit.

Profit

• If you did not bid, your round profit is 0.

30

• If the bid was smaller than the value of the good multiplied by the factor (B < qv), the

computer does not sell. No transaction takes place and your round profit is 0.

• If you bid and B > qv, then your round profit depends on the value of the good. In case

of a value of the good that is higher than the bid v > B, you make a profit. In case of

a value of the good that is lower than the bid, you make a loss in that round. Losses in

single rounds can, of course, be balanced by profits from other rounds.

At the beginning of the experiment you receive an endowment of 1500 EP. At the end of the

experiment, experimental points will be exchanged at a rate of 1:200. That means 200 EP = 1

¤.

Rounds

There are 500 rounds in the experiment. In each round you have to take the same decision

(assign the balls to the two possibilities of bidding or abstaining) as explained above. Only

factor q will be changed every 10 rounds.

Means of help

You find a calculator on your screen. You are, of course, allowed to write notes on the

instruction sheets.

Thank you for participating!

31

32

-0.1817

(-0.850)

0.2736

(1.008)

1.0019

(791.170)

0.8081

(6.930)

1.0073

(371.731)

0.9316

(46.595)

0.4184

(2.715)

0.9915

(56.611)

0.6792

(4.820)

-0.2689

(-0.266)

0.9833

(126.366)

0.7943

(6.698)

1

15

14

13

12

11

10

7

6

5

4

3

d

ID

1.7662

(13.536)

0.7217

(7.307)

1.3759

(5.597)

1.7516

(10.157)

2.0418

(9.510)

0.3437

(2.314)

0.6313

(6.146)

0.1007

(0.631)

0.8513

(7.132)

-0.3000

(-3.278)

0.4041

(2.020)

1.2297

(10.002)

Constant

A.2. Estimation Results (not for publication)

0.0508

(2.099)

0.0600

(2.791)

0.0173

(4.581)

0.0144

(1.077)

0.0123

(5.302)

-0.0291

(-3.206)

-0.0417

(-1.825)

0.0047

(1.067)

0.0156

(0.976)

0.0150

(0.667)

-0.0036

(-0.889)

-0.0101

(-0.693)

β1

0.0348

(2.299)

-0.0001

(-0.007)

0.0035

(3.277)

-0.0243

(-2.445)

0.0002

(1.109)

0.0085

(2.564)

-0.0411

(-3.546)

0.0014

(0.744)

-0.0203

(-2.211)

-0.0026

(-0.182)

0.0083

(2.603)

-0.0154

(-1.707)

β2

864.35

954.69

1276.36

657.81

1362.63

671.58

1079.36

733.68

790.48

1005.43

1025.99

643.83

Null

Deviance

858.96

924.04

1275.86

651.39

1351.28

649.67

1058.17

662.15

777.22

764.37

1017.38

633.60

Residual

Deviance

Table 7: Estimation results for Model 1

0.4412

0.0016

0.9686

0.3608

0.1284

0.0120

0.0141

0.0000

0.0848

0.0000

0.2306

0.1636

0.0054

0.0305

0.0005

0.0064

0.0113

0.0220

0.0211

0.0715

0.0132

0.2333

0.0086

0.0102

Estrella

P(> χ )

R2

2

33

-0.1598

(-1.063)

0.9702

(88.896)

-0.0229

(-0.095)

0.9951

(246.349)

0.8207

(10.513)

0.9995

(246.236)

0.9402

(24.589)

-0.3207

(-0.951)

0.9971

(232.382)

0.4263

(1.803)

0.9836

(323.234)

0.9816

(114.093)

0.6136

(2.983)

16

33

32

31

30

29

25

24

22

21

20

18

17

d

ID

1.4953

(12.371)

0.7869

(5.740)

1.4109

(12.066)

0.6018

(2.536)

-0.2688

(-2.691)

-0.0846

(-0.422)

0.8997

(6.768)

0.9011

(8.935)

0.5825

(4.308)

2.4052

(13.609)

-1.4311

(-6.417)

0.6442

(1.928)

1.3517

(11.785)

Constant

β2

0.0410

0.0513

(1.784) (3.569)

0.0258 -0.0006

(2.634) (-0.226)

0.0391

0.0356

(1.848) (2.516)

0.0069

0.0076

(2.436) (3.144)

-0.0049

0.0180

(-0.329) (2.485)

-0.0015

0.0049

(-0.641) (2.591)

0.0172

0.0002

(1.725) (0.080)

0.0566

0.0052

(2.788) (0.508)

-0.0046

0.0056

(-1.873) (2.632)

0.0649

0.0525

(2.364) (2.645)

-0.0138

0.0336

(-1.947) (6.632)

0.0096

0.0089

(1.099) (1.556)

-0.0334

0.0013

(-1.591) (0.130)

β1

595.38

643.98

783.69

392.98

913.47

612.69

1156.32

1109.81

1214.59

994.84

766.89

1202.12

512.72

Null

Deviance

591.82

575.48

486.45

377.89

889.16

603.66

1151.95

1033.66

1203.06

844.88

756.23

1192.46

493.62

Residual

Deviance

Table 7: Estimation results for Model 1

0.6194

0.0000

0.0000

0.0563

0.0069

0.2110

0.5345

0.0000

0.1237

0.0000

0.1492

0.1846

0.0228

P(> χ2 )

0.0036

0.0695

0.3038

0.0152

0.0243

0.0091

0.0044

0.0751

0.0115

0.1468

0.0107

0.0096

0.0193

Estrella

R2

34

0.9924

(199.553)

0.9968

(107.95)

0.9992

(386.720)

1.0022

(146.288)

0.5158

(2.337)

0.987

(290.577)

0.5802

(0.976)

34

42

41

40

38

36

35

d

ID

β1

0.2481

0.0001

(0.820) (0.023)

2.1328

0.0086

(7.187) (1.347)

1.2656

0.0089

(5.109) (3.506)

2.2389 -0.0074

(10.202) (-1.689)

1.0945 -0.0415

(9.918) (-1.852)

-0.3127

0.0133

(-1.604) (1.630)

1.3141

0.0031

(10.920) (0.192)

Constant

0.0052

(2.667)

0.0023

(1.368)

0.0011

(1.643)

0.0018

(1.616)

0.0063

(0.725)

0.0194

(4.520)

0.0118

(0.840)

β2

895.63

942.39

778.32

352.90

990.60

498.87

894.65

Null

Deviance

894.39

724.17

773.22

339.72

867.97

423.61

824.34

Residual

Deviance

Table 7: Estimation results for Model 1

0.8919

0.0000

0.4662

0.0862

0.0000

0.0000

0.0000

P(> χ2 )

0.0012

0.2134

0.0051

0.0133

0.1207

0.0775

0.0699

Estrella

R2

35

-0.2002

(-0.953)

0.2121

(0.773)

1.0054

(243.536)

0.8157

(7.507)

1.0026

(118.500)

0.9399

(34.705)

0.4574

(3.123)

0.9914

(45.434)

0.1093

(0.780)

-0.0900

(-0.088)

0.9804

(133.639)

0.924

(13.366)

-0.0601

(-0.557)

1

16

15

14

13

12

11

10

7

6

5

4

3

d

ID

1.7692

(13.522)

0.7322

(7.277)

1.4413

(5.984)

1.7407

(10.146)

1.9838

(8.436)

0.6518

(2.411)

0.6352

(6.117)

0.0946

(0.512)

0.7687

(7.622)

-0.3065

(-3.199)

0.0744

(0.186)

1.4256

(7.401)

1.5139

(12.140)

Constant

-0.4527

(-0.551)

-0.3594

(-0.750)

0.3320

(0.802)

0.2154

(0.381)

-0.8311

(-0.619)

1.7568

(1.046)

-0.3399

(-0.982)

-0.8252

(-0.278)

17.7032

(0.666)

0.5845

(0.289)

-1.1882

(-1.313)

-5.2126

(-0.689)

-3.0953

(-2.556)

α

0.0502

(2.077)

0.0607

(2.822)

0.0163

(4.799)

0.0138

(1.058)

0.0135

(4.665)

-0.0219

(-1.351)

-0.0451

(-2.112)

0.0028

(0.394)

-0.008

(-0.678)

0.0123

(0.450)

-0.0051

(-1.270)

0.0032

(0.635)

0.0369

(2.207)

β1

0.0349

(2.346)

-0.0008

(-0.076)

0.0031

(3.013)

-0.0243

(-2.578)

0.0004

(0.889)

0.0027

(0.426)

-0.0375

(-3.036)

0.0008

(0.325)

-0.0062

(-0.656)

-0.0051

(-0.431)

0.0091

(2.753)

-0.0036

(-0.601)

0.0536

(3.850)

β2

512.72

864.35

954.69

1276.36

657.81

1362.63

671.58

1079.36

733.68

790.48

1005.43

1025.99

643.83

Null

Deviance

Table 8: Estimation results for Model 2

475.86

855.68

922.25

1275.74

614.44

1350.70

648.60

1048.28

661.94

777.08

763.83

1016.79

633.30

Residual

Deviance

0.0010

0.3623

0.0027

0.9891

0.0002

0.2016

0.0216

0.0037

0.0000

0.1526

0.0000

0.3312

0.2609

P(> χ2 )

0.0374

0.0087

0.0323

0.0006

0.0438

0.0119

0.0231

0.0309

0.0717

0.0134

0.2338

0.0092

0.0105

Estrella

R2

36

1.0065

(169.029)

0.1534

(0.584)

0.9938

(206.353)

0.9170

(29.875)

1.0008

(233.816)

0.9495

(12.783)

0.2182

(1.998)

0.9979

(259.432)

0.3247

(1.350)

1.0030

(453.062)

0.9812

(154.361)

0.5449

(2.317)

0.9867

(242.560)

17

34

33

32

31

30

29

25

24

22

21

20

18

d

ID

0.3715

(3.247)

1.3936

(11.536)

0.5413

(2.022)

-0.0383

(-0.282)

-0.1570

(-0.631)

0.9166

(7.954)

0.9133

(8.645)

0.4892

(2.919)

2.4432

(13.761)

0.5807

(2.369)

1.1760

(4.216)

1.4158

(12.217)

-0.1709

(-0.808)

Constant

1.2755

(11.534)

-0.8383

(-1.071)

-0.2151

(-0.426)

1.3142

(2.960)

-0.7012

(-0.428)

-642.2003

(-34.980)

-10.4398

(-0.774)

-0.533

(-0.831)

-1.0994

(-1.478)

3.3416

(3.449)

-1.4824

(-2.067)

3.5832

(0.855)

1.4371

(4.844)

α

β2

0.0136

0.0029

(2.052) (2.452)

0.0319

0.0362

(1.354) (2.647)

0.0063

0.0081

(1.901) (3.076)

-0.0081

0.0139

(-1.160) (2.922)

-0.0011

0.0047

(-0.706) (2.592)

0.0000

0.0000

(0.814) (0.561)

0.0151

0.0125

(0.710) (0.905)

-0.0040

0.0055

(-1.775) (2.797)

0.0722

0.0507

(2.911) (2.555)

-0.0038

0.0059

(-2.702) (3.316)

0.0138

0.0046

(2.658) (1.870)

-0.0180 -0.0098

(-0.837) (-1.283)

0.0117

0.0138

(2.031) (4.193)

β1

894.65

595.38

643.98

783.69

392.98

913.47

612.69

1156.32

1109.81

1214.59

994.84

766.89

1202.12

Null

Deviance

Table 8: Estimation results for Model 2

810.46

587.02

561.30

454.42

375.66

888.28

560.19

1135.73

1033.47

1190.70

844.66

754.63

1180.36

Residual

Deviance

0.0000

0.3824

0.0000

0.0000

0.0702

0.0134

0.0000

0.0357

0.0000

0.0177

0.0000

0.1899

0.0279

P(> χ2 )

0.0836

0.0084

0.0841

0.3375

0.0175

0.0251

0.0534

0.0205

0.0753

0.0238

0.1470

0.0122

0.0217

Estrella

R2

37

0.9909

(177.051)

1.0001

(421.061)

0.9830

(103.276)

0.5280

(2.961)

0.9862

(262.811)

0.9423

(24.538)

35

42

41

40

38

36

d

ID

α

β1

1.3930

2.1080

0.0237

(3.559)

(4.629) (4.042)

1.3305

2.4618

0.0075

(5.629)

(2.187) (3.400)

2.793

-7.6377 -0.0146

(9.610)

(-2.927) (-1.687)

1.0695

-1.0336 -0.0411

(9.352)

(-1.049) (-1.947)

-0.1858

-0.4673

0.0115

(-0.892)

(-0.923) (1.671)

1.4983 3162.4744

0.0000

(10.854)

(3.043) (-2.273)

Constant

0.0064

(2.176)

0.0017

(1.406)

0.0085

(2.641)

0.0050

(0.636)

0.0186

(4.468)

0.0000

(2.840)

β2

895.63

942.39

778.32

352.90

990.60

498.87

Null

Deviance

Table 8: Estimation results for Model 2

889.32

722.63

771.60

307.81

861.45

415.65

Residual

Deviance

0.5326

0.0000

0.4994

0.0002

0.0000

0.0000

P(> χ2 )

0.0063

0.2149

0.0067

0.0463

0.1270

0.0860

Estrella

R2

38

16

15

14

13

12

11

10

7

6

5

4

3

1

ID

k

-0.2008

0.9848

(-0.948)

(1.182)

0.2423

2.0438

(0.891)

(0.629)

1.0016

1.3453

(235.795)

(3.433)

0.8015

1.5633

(7.100)

(2.474)

1.0047

6.1333

(358.70)

(1.265)

0.9760

-0.4556

(120.647)

(-1.371)

0.5768

4.7332

(4.580)

(2.438)

0.9962

0.1575

(113.585)

(0.507)

0.1167

0.1868

(1.092)

(1.391)

-0.2464 1123.6293

(-0.362)

(2.296)

0.9809

1.0369

(95.173)

(1.763)

0.9309

0.9029

(11.148)

(1.530)

-0.0409

1.2548

(-0.371)

(2.358)

d

1.7681

(12.347)

0.7482

(7.189)

1.6335

(4.664)

1.6714

(8.656)

2.4142

(8.622)

2.8197

(5.277)

0.4159

(2.888)

0.1124

(0.574)

0.9177

(7.678)

-0.2946

(-3.007)

0.0750

(0.188)

1.4302

(7.663)

1.5343

(11.692)

Constant

β1

-0.4573

0.0508

(-0.535) (1.289)

-0.4439

0.0337

(-0.609) (0.665)

0.0706

0.013

(0.134) (4.075)

0.0332

0.0085

(0.083) (0.850)

-1.3038

0.001

(-1.168) (1.387)

2.7452 -0.0121

(1.742) (-1.758)

0.0517 -0.0080

(0.449) (-1.569)

-5.1509

0.0005

(-0.890) (0.637)

26.9258 -0.0086

(0.602) (-0.602)

-0.2802

0.0000

(-0.208) (6.296)

-1.2201 -0.0048

(-1.199) (-0.810)

-6.8641

0.0026

(-0.325) (0.311)

-3.3013

0.0331

(-2.431) (2.019)

α

0.0352

(1.774)

0.0023

(0.251)

0.0051

(2.304)

-0.0246

(-2.827)

0.0003

(0.435)

-0.0052

(-2.299)

-0.0275

(-2.474)

0.0011

(0.522)

-0.0071

(-0.599)

0.0000

(0.435)

0.0089

(2.103)

-0.0030

(-0.333)

0.0478

(2.890)

β2

512.72

864.35

954.69

1276.36

657.81

1362.63

671.58

1079.36

733.68

790.48

1005.43

1025.99

643.83

Null

Deviance

Table 9: Estimation results for Model 3

475.58

855.66

922.24

1275.12

602.20

1350.21

641.14

1016.48

658.26

775.82

762.93

1016.39

633.30

Residual

Deviance

0.0023

0.5004

0.0062

0.9870

0.0000

0.2863

0.0095

0.0000

0.0000

0.1974

0.0000

0.4408

0.3840

P(> χ2 )

0.0377

0.0087

0.0323

0.0012

0.0564

0.0124

0.0307

0.0622

0.0754

0.0146

0.2347

0.0096

0.0105

Estrella

R2

39

34

33

32

31

30

29

25

24

22

21

20

18

17

ID

k

1.0005

-0.3975

(246.846)

(-0.298)

0.9928 796.6397

(464.660)

(23.563)

0.9945

1.2129

(203.067)

(1.808)

0.9068

1.4012

(26.515)

(4.219)

1.0012

1.0727

(180.817)

(1.613)

1.0035

8.2077

(422.765)

(3.149)

0.2021

1.3001

(1.956)

(2.237)

0.9952

0.3826

(151.811)

(2.555)

0.3545

1.1699

(1.302)

(1.633)

0.9968

-23.2483

(71.116)

(-0.377)

0.8546

-1.0078

(23.379)

(-2.794)

0.7353 1290.2667

(12.731)

(2.718)

0.9908

1.1653

(141.01)

(5.045)

d

-0.2491

(-1.033)

1.7032

(12.387)

0.5609

(1.998)

-0.0373

(-0.310)

-0.1605

(-0.659)

0.4854

(2.591)

0.9102

(8.462)

0.3559

(1.324)

2.4713

(11.747)

1.3334

(0.711)

-0.0669

(-0.241)

0.6749

(3.865)

0.0679

(0.152)

Constant

1.3106

(0.767)

-1.0300

(-8.770)

-0.2210

(-0.376)

0.8399

(2.201)

-0.6839

(-0.402)

-7.1516

(-4.816)

-8.6853

(-1.316)

-0.5476

(-2.591)

-1.1412

(-1.343)

1.6332

(0.279)

-0.4118

(-1.674)

0.466

(1.902)

1.3499

(6.712)

α

0.0024

(1.268)

-0.0000

(-4.575)

0.0049

(1.018)

-0.0077

(-0.957)

-0.0011

(-0.687)

0.0010

(2.332)

0.0180

(1.210)

-0.0202

(-1.747)

0.0636

(1.624)

-0.0006

(-1.514)

0.0584

(3.268)

-0.0000

(-2.840)

0.0136

(2.289)

β1

0.0008

(1.403)

0.0000

(7.645)

0.0091

(2.124)

0.0151

(3.180)

0.0048

(2.406)

0.0000

(2.684)

0.0128

(1.546)

0.0037

(1.323)

0.0461

(1.815)

0.0002

(0.223)

0.0379

(4.112)

-0.0001

(-3.521)

0.012

(2.958)

β2

894.65

595.38

643.98

783.69

392.98

913.47

612.69

1156.32

1109.81

1214.59

994.84

766.89

1202.12

Null

Deviance

Table 9: Estimation results for Model 3

810.24

555.10

546.89

407.34

375.60

878.24

559.88

1127.11

1033.45

1187.87

844.56

739.72

1168.94

Residual

Deviance

0.0000

0.0012

0.0000

0.0000

0.1220

0.0035

0.0001

0.0122

0.0000

0.0202

0.0000

0.0185

0.0053

P(> χ2 )

0.0838

0.0406

0.0991

0.3874

0.0175

0.0351

0.0537

0.0290

0.0753

0.0266

0.1471

0.0272

0.0329

Estrella

R2

40

1.0046

(128.696)

1.0047

(124.280)

0.9964

(142.649)

1.0108

(91.242)

0.8785

(21.224)

0.9423

(26.620)

35

42

41

40

38

36

d

ID

1.6008

(4.153)

0.4024

(4.443)

1.9930

(3.081)

0.7179

(3.756)

-1.3977

(-1.668)

0.4975

(1.224)

k

α

β1

1.3851

4.7046

0.0123

(4.149)

(2.806) (2.301)

1.2825

0.2218

0.0477

(5.266)

(0.411) (1.572)

1.9640

-5.4936 -0.0095

(4.372)

(-1.830) (-1.671)

1.0065

0.7123

0.0022

(4.645)

(1.824) (0.551)

-0.3146

0.5150 -0.0106

(-0.655)

(0.637) (-0.865)

1.4983 3162.4744 -0.0000

(11.239)

(1.419) (-4.126)

Constant

0.0041

(2.106)

0.0004

(0.701)

0.0064

(2.210)

0.0025

(1.061)

0.0499

(2.237)

0.0000

(0.304)

β2

895.63

942.39

778.32

352.90

990.60

498.87

Null

Deviance

Table 9: Estimation results for Model 3

887.89

700.72

739.70

302.53

858.95

411.86

Residual

Deviance

0.5688

0.0000

0.0017

0.0001

0.0000

0.0000

P(> χ2 )

0.0077

0.2358

0.0386

0.0519

0.1294

0.0900

Estrella

R2

41

-0.2204

(-1.049)

0.1461

(0.520)

0.9944

(275.804)

0.8545

(4.725)

1.0018

(291.874)

0.9357

(31.562)

0.5356

(4.043)

0.9919

(64.471)

0.1221

(0.413)

0.6189

(0.684)

0.9816

(144.29)

0.8099

(8.883)

-0.1085

(-0.899)

1

16

15

14

13

12

11

10

7

6

5

4

3

φ

ID

β2

0.0326

0.0450

(2.342) (2.030)

-0.0014

0.0467

(-0.156) (2.769)

0.0054

0.0113

(3.629) (3.172)

-0.0142

0.0150

(-1.119) (1.383)

0.0004

0.0134

(1.200) (4.544)

0.0059 -0.0118

(1.963) (-2.028)

-0.0252 -0.0371

(-2.855) (-2.416)

0.0007

0.0024

(0.702) (1.054)

0.0091

0.0605

(0.614) (3.563)

0.0020

0.0031

(0.286) (0.249)

0.0088 -0.0054

(2.881) (-1.321)

-0.0168

0.0024

(-2.328) (0.211)

0.0616

0.0518

(4.345) (2.513)

β1

1.7686

(13.532)

0.7360

(7.286)

0.8665

(3.650)

1.7838

(7.652)

1.9897

(9.063)

0.4734

(2.668)

0.6261

(6.003)

0.0895

(0.470)

0.7597

(7.027)

-0.3112

(-2.977)

0.0742

(0.204)

1.3317

(9.253)

1.5110

(12.182)

Constant

512.72