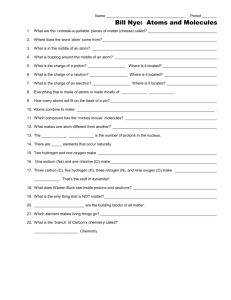

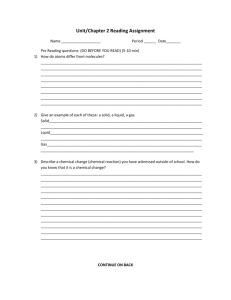

Atoms, Molecules and Matter: The Stuff of Chemistry
