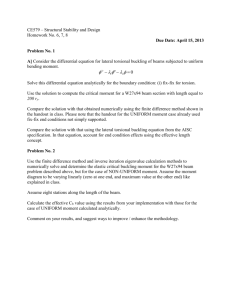

Journal Article pdf - STFC Numerical Analysis Group