4654 Plomp Ch-19 - Stanford University


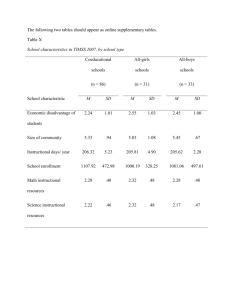

4654 Plomp Ch-19 2/2/06 12:56 PM Page 291

19 Towards a Science Achievement Framework: The Case of TIMSS 1999

Min Li, Maria Araceli Ruiz-Primo and Richard J. Shavelson

Introduction

Testing has increasingly become an essential element of educational reform. It is used to gauge both students’ and teachers’ performance and to improve students’ learning (for example, Baker et al., 2002; Linn, 2000; Pellegrino et al., 2001). While the debates about testing and related issues have been heated and political, it is recognized that testing is far too powerful an instrument to be used without very careful scrutiny of what the tests measure. Motivated by this belief, we present a secondary analysis that addresses the validity of TIMSS 1999 test score interpretation as a measure of students’ science achievement.

In this chapter, we describe and implement a framework for assessing science achievement. The framework conceptualizes science achievement as four types of knowledge that are characteristic of competency or achievement in science. We apply this framework to the TIMSS 1999 science items and scores by logically analyzing test items and statistically modeling the underlying patterns of item scores. We use a coding system to analyze test items and map them onto the type(s) of knowledge that they most likely measure. We also report results from factor analyses to determine whether the knowledge-factor model accounts for the underlying pattern of item scores better than alternative models. Lastly, we examine the mosaic of instructional factors that influence students’ performance in terms of knowledge types.
Theoretical Framework

Science Achievement

Defining Science Achievement

Parallel to the old definition of intelligence as what intelligence tests measure, science achievement today is conventionally treated as what multiple-choice tests measure, owing to the pervasive and accepted use of multiple-choice technology. Such a narrow perspective on measuring science achievement not only provides limited and even inaccurate information about students’ performance but also undermines desirable outcomes of students’ learning (for example, Kohn, 2000; Shavelson et al., 1990). In contrast, our knowledge-based conception is intended to broaden the notion of science achievement.

In a series of papers (for example, Shavelson and Ruiz-Primo, 1999; Ruiz-Primo, 2002; Ruiz-Primo et al., 2002; Li et al., in press), we have developed and described a framework for science achievement that draws upon a body of related research (for example, Alexander and Judy, 1988; Bybee, 1996, 1997; Chi, Feltovich and Glaser, 1981; Chi, Glaser and Farr, 1988; Bennett and Ward, 1993; de Jong and Ferguson-Hessler, 1996; OECD/PISA, 2001; Pellegrino et al., 2001; Sadler, 1998; White, 1999). We believe that such a framework should fully address the connections between standards, science curriculum, student learning, instruction, and educational measurement. Specifically, we conceptualize science achievement as four interdependent types of knowledge:¹

1. Declarative knowledge: knowing that. Such knowledge includes scientific terms, definitions, facts, or statements, such as ‘CO2 is carbon dioxide’ or ‘green plants manufacture glucose from carbon dioxide and water’. Declarative knowledge can be learned and held in memory as words, pictures, or sensory representations. Indeed, representing concepts in a variety of forms is considered essential to the learning of science (for example, National Research Council [NRC], 1996).

2. Procedural knowledge: knowing how. Procedural knowledge takes the form of if-then production rules or a sequence of steps, such as applying algorithms to balance chemical equations, reading data tables, or designing experiments to identify the components of a mixture. With practice, individuals can convert their procedural knowledge into automatic responses. Such automaticity is one of the characteristics of expertise (for example, Anderson, 1983; Dreyfus and Dreyfus, 1986; Dutton and Jackson, 1987; Randel and Pugh, 1996; Rasmussen, 1986).

3. Schematic knowledge: knowing why. Schematic knowledge typically entails the application of scientific principles or explanatory models, such as explaining why the moon generally rises about 50 minutes later each day or how a particular virus functions. Schematic knowledge can also be used to guide actions, to troubleshoot systems, and to predict the effect that changes in some phenomenon will have on others (De Kleer and Brown, 1983; Gentner and Stevens, 1983).

4. Strategic knowledge: knowing when, where, and how to apply knowledge. This knowledge includes domain-specific strategies, such as ways to represent a problem or strategies to deal with certain types of tasks. It also entails general strategies for monitoring performance or planning, such as dividing a task into subtasks or integrating the three other types of knowledge in an efficient manner. Experts, for example, are reported to interpret problems on the basis of relatively abstract principles, whereas novices simply group problems by superficial features such as springs or inclined planes (for example, Chi et al., 1981; Zeitz, 1997).

Linking Assessments to the Knowledge Framework

This achievement framework can be used to specify important aspects of an achievement test.
By synthesizing research in cognitive psychology and assessment (for example, Glaser et al., 1992; Hamilton, Nussbaum et al., 1997; Li et al., in press; Martinez, 1999; Messick, 1993; Shavelson et al., 2002; Shavelson and Ruiz-Primo, 1999; Snow, 1989; Sugrue, 1993, 1995; White, 1999), we argue that some types of science knowledge may be well tapped by certain assessment items because of the particular affordances and constraints those items provide. Accordingly, to assess science achievement accurately and adequately, assessors should employ tests that vary in their methods for eliciting students’ performance across knowledge types.

To this end, we go one step further and map assessment items to these knowledge types. This mapping is not a straightforward task. Martinez (1999), for example, has demonstrated that testing method alone does not determine what type of knowledge an item measures. Instead, test items vary in many other characteristics, including the nature of the task demands placed on test takers and the degree of task openness. Therefore, all these item characteristics should, if possible, be taken into account when mapping assessment items to science knowledge.

Research Questions

Having sketched our framework for defining and measuring science achievement, in what follows we report a study that uses the TIMSS 1999 items and scores of the USA sample to examine the viability of this framework. For the sake of simplicity, we report only the results for the TIMSS 1999 Booklet 8 items, since similar findings were observed in the analysis of the other seven booklets.² This study aims to validate the framework, which allows us to measure students’ science achievement and interpret scores in a meaningful way rather than relying on total scores. We ask two research questions.

First, can the science achievement measured by the TIMSS 1999 items be decomposed into four types of knowledge?
Research has shown that science achievement measured by large-scale tests can be decomposed into different aspects (for example, Hamilton, Nussbaum et al., 1997). In the logical analysis, two coders independently coded the items and classified them into types of knowledge. Then, a set of confirmatory factor analyses (CFA) using the Booklet 8 item scores was performed to ascertain whether the items measured the types of knowledge as hypothesized and to compare the knowledge model against competing models of science achievement.

Second, do students’ instructional experiences affect their science achievement? Instruction is conceived of as a vital factor that contributes to the variation in students’ learning outcomes, such as test scores (NRC, 1996). Structural equation modeling was conducted, controlling for other related variables, to examine how the instructional variables are associated with students’ use of science knowledge.

Students’ Science Achievement Measured by TIMSS 1999 Items

In this section, we apply the achievement framework to examine the TIMSS 1999 science items and scores. We first present the logical analysis, in which we used a coding system to identify item characteristics and classify the items into knowledge types. Following this, we report a set of CFA that were executed to verify the item classifications.

Coding System and Example Items

We relied on our own research and that of other researchers to develop a coding system for analyzing item characteristics (Ayala et al., 2000; Baxter and Glaser, 1998; Li, 2001; Quellmalz, 2002). We identified four major categories, listed from most important to least important: task demands; cognitive demands; item openness; and complexity (examples of those coding decisions are presented in Table 19.1).
Task and cognitive demands are both critical cues for establishing the item-knowledge link, whereas the latter two categories provide additional information for modifying or revising the link. By generating and weighting the item characteristics, we were able to map the TIMSS 1999 test items onto types of knowledge. Below we present several TIMSS 1999 items to illustrate how the coding system is used to classify test items.

Item D05 was classified as an item tapping declarative knowledge (Figure 19.1). It asks students to identify the organ that carries sensory information. The coding led us to conclude that it mainly taps declarative knowledge. First, the response is expected to be in the form of terms (for example, names of human organs). The item asks a specific content question (that is, a specific fact), leaving students little opportunity to provide relations between concepts or to apply principles. Second, the cognition evoked is likely to be the recall of facts. The cognitive process needed to answer the item is to retrieve information directly or to do a minimum of scientific reasoning to sort out the relevant information. Third, in terms of item openness, Item D05 is restricted because of the multiple-choice format and the unfinished stem, which forces students to select among options instead of providing responses before reading the options. Being restricted in turn reinforces the task and cognitive demands placed on students. Finally, the coding for complexity supports the declarative link as well. For example, the item is similar to school-type problems. Consequently, when answering the question, students may recall exactly what they have been taught. Therefore, weighing the four categories, we classified Item D05 as a declarative-knowledge item.

Item P01 was coded as testing procedural knowledge (Figure 19.2).
Table 19.1. Coding System.

| Coding category | Description | Selected examples of coding sub-categories | Possible links with knowledge typeᵃ |
| --- | --- | --- | --- |
| Task demands | Task(s) that students are asked to perform | Defining/using terms | D |
| | | Providing explanations | D/Sc |
| | | Designing an experiment | P |
| | | Mathematical calculation | P |
| Cognitive demands | Inferred cognitive processes students likely act upon to provide responses | Recalling facts | D |
| | | Reading figures | P |
| | | Reasoning with models | Sc |
| | | Comparing and selecting strategies | St |
| Item openness | Degree of freedom students are allowed to have in shaping responses to an item | Selecting vs. generating responses | Selecting good for D |
| | | Stem presented as a complete question vs. an incomplete stem | Incomplete stem good for D, but not good for Sc/P/St |
| | | One vs. multiple correct answers | One good for D vs. multiple good for Sc/St |
| | | Recipe directions | Not good for St |
| Complexity | Domain-general factors that influence the item difficulty | Linguistic challenges | Not good for any type |
| | | Textbook vs. novel tasks | Textbook good for D vs. novel good for St |
| | | Inclusion of irrelevant information | Good for St |
| | | Use of everyday knowledge | Not good for any type |

Notes. ᵃ D = declarative knowledge, P = procedural knowledge, Sc = schematic knowledge, and St = strategic knowledge. These abbreviations are applied hereafter.

Figure 19.1. Example of a declarative-knowledge item.

Figure 19.2. Example of a procedural-knowledge item.

Item P01 provides students with a graph showing the distance that a car has traveled over 75 minutes and asks them to select the speed of that car. First, the item requires students to respond by using an algorithm to calculate speed and/or by interpreting the diagram directly, both of which draw on procedural knowledge as we have defined it.
Second, the cognitive process students probably undertake can be either applying a calculation algorithm for speed, dividing distance by time, or locating the point corresponding to 60 minutes (that is, an hour) on the line in the graph and simply reading the distance on the vertical axis. However, the item’s lack of openness limits to some degree students’ application of procedural knowledge. Although this item allows students to generate their own responses before reading the options, students can arrive at the correct answer by simply working backwards from the options or even by merely guessing. Finally, the analysis of complexity does not provide additional information for modifying the coding decision. Considering all the codes, Item P01 was classified as a procedural item, though it is not a perfect candidate.

In contrast to Items D05 and P01, our logical analysis revealed that Item P02 tended to test students’ schematic knowledge. This short-answer item asks students to explain why the same, or a smaller, amount of light shines on the wall from two meters away (Figure 19.3). The four item characteristics support students’ use of schematic knowledge. First, the item is intended to assess students’ knowledge about the factors that determine the amount of light. It invites students to make a prediction and provide conceptual explanations by using the phrase ‘explain your answer’ in the stem. The knowledge that students use can be models or theories about light, expressed in words or pictures. Second, the dominant cognitive process is reasoning with theories. Very likely students have to develop responses by explaining the key features of light and its movement. Third, Item P02 is moderately open, requiring students to reason and organize their responses.
Finally, the low complexity (for example, no heavy reading or irrelevant information) strengthens the posited link to schematic knowledge by reducing construct-irrelevant variance.

Figure 19.3. Example of a schematic-knowledge item.

Lastly, and unfortunately, no example items for strategic knowledge can be selected from TIMSS 1999. Strategic items are expected to have certain characteristics: a complex, novel task that requires students to interpret the problem and select domain-specific strategies; an open item structure that supports the use of strategic knowledge; and a scoring scheme that directly captures the differences in students’ use of strategic knowledge (for example, types of strategies, efficiency, patterns of monitoring). No TIMSS 1999 items satisfied these criteria.

Logical Analysis

Two coders independently reviewed the TIMSS-R science items and classified them into knowledge types, following the procedures illustrated in the previous section. The inter-coder agreement was 89 percent.³ A final item classification was achieved as follows. For those items on which the two coders agreed, the agreed-upon classification is reported. For the items on which they disagreed, we consulted the findings of Pine and colleagues (AIR, 2000) and resolved the difference on the basis of their rating. In cases where an item might tap a second knowledge type, coders were instructed to provide a secondary code for the item. This was expected, as the TIMSS-R items were designed to tap more than one performance expectation domain. Because some items were ambiguous or drew on everyday experience, we found that the knowledge codes were not applicable to a few items (those items were labeled NA; see Table 19.2). This difficulty was not unique. In a recent study analyzing the same items, Pine and his colleagues (AIR, 2000) experienced similar difficulty.
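The inter-coder agreement reported above can be illustrated with a short sketch. It assumes that the figure is simple percent agreement on the primary code, which the text does not state explicitly; the function name `percent_agreement` and the eight example codes are hypothetical and are not the TIMSS codes.

```python
from typing import Sequence


def percent_agreement(coder_a: Sequence[str], coder_b: Sequence[str]) -> float:
    """Percentage of items that both coders assigned to the same knowledge type."""
    if len(coder_a) != len(coder_b) or not coder_a:
        raise ValueError("both coders must rate the same non-empty set of items")
    matches = sum(a == b for a, b in zip(coder_a, coder_b))
    return 100.0 * matches / len(coder_a)


# Hypothetical primary codes for eight items (D, P, Sc as in Table 19.1).
coder_a = ["D", "D", "P", "Sc", "D", "P", "Sc", "D"]
coder_b = ["D", "D", "P", "Sc", "D", "Sc", "Sc", "D"]

agreement = percent_agreement(coder_a, coder_b)
print(f"{agreement:.1f} percent")  # 7 of 8 codes match: 87.5 percent
```

Percent agreement does not correct for chance agreement; a chance-corrected index would be a different statistic from the one sketched here.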
Item classifications are presented in Table 19.2. Given that the Booklet 8 science items varied a great deal in their item characteristics, we anticipated that those items would require the use of different knowledge types. Consistent with this prediction, we observed that the booklet measured three of the four proposed types of knowledge, while, as stated before, none of the items was found to assess strategic knowledge. The Booklet 8 science test is heavily loaded on declarative knowledge (approximately 50 percent), with the balance split about equally between procedural and schematic knowledge items. The finding that declarative knowledge is overwhelmingly tested is also reported in other studies that examined large-scale tests at various grade levels (Britton et al., 1996; Lomax et al., 1995).

As expected, three items (A08, P03 and R05) were double coded because they were ambiguous to the extent that students could use either of two types of knowledge. We also classified Item Q16 as NA because students could use many different ways, even general reasoning, to figure out the correct answer. The item itself did not have sufficient affordances to trigger a certain type of knowledge. Rather, the knowledge used is determined more by an individual student’s preference and other factors.

We also examined the item format and the item difficulty, indexed by the p-values in the table. There was little association between item format and the type of knowledge that items are intended to test (adjusted χ² = 0.68, p = 0.71). This is not surprising. As we explained, the knowledge that a student brings to bear on an item is elicited by a complicated interaction between the student’s cognition and a set of item characteristics, including but not limited to format. Further, a comparison of item difficulties revealed that relatively easier items were used to measure declarative knowledge than to measure the other types of knowledge.
On average, about 15 percent more students were able to provide correct answers to declarative items than to other items. Putting those pieces together, one disturbing conclusion is that the Booklet 8 test largely measured students’ declarative knowledge with less challenging items.

Collectively, we found that raters could apply the coding system to classify the TIMSS 1999 items consistently into types of knowledge. The coding showed that the Booklet 8 test was heavily loaded on declarative knowledge, while procedural and schematic knowledge were measured by relatively fewer items.

Table 19.2. Classifications of TIMSS-R Booklet 8 Science Items with the USA Booklet 8 Sample.

| Item label | Description | Formatᵃ | p-valueᵇ | Classificationᶜ (marks under D, P, Sc, St or NA) |
| --- | --- | --- | --- | --- |
| A07 | Organ not in abdomen | MC | 0.73 | ✓ |
| A08 | Stored energy in two springs | MC | 0.80 | ✓ ✓ |
| A09 | Fanning a wood fire | MC | 0.78 | ✓ |
| A10 | Seeing person in a dark room | MC | 0.76 | ✓ |
| A11 | Overgrazing by livestock | MC | 0.62 | ✓ |
| A12 | Changes in river shape/speed | MC | 0.52 | ✓ |
| B01 | Layers in earth | MC | 0.91 | ✓ |
| B02 | Energy released from car engine | MC | 0.64 | ✓ |
| B03 | Greatest density from mass/volume table | MC | 0.18 | ✓ |
| B04 | Pulse/breathing rate after exercise | MC | 0.92 | ✓ |
| B05 | Elevation diagram of wind/temperature | MC | 0.49 | ✓ |
| B06 | Color reflecting most light | MC | 0.82 | ✓ |
| P01 | Determination of speed from graph | MC | 0.47 | ✓ |
| P02 | Amount of light on wall and ceiling | SA | 0.29 | ✓ |
| P03 | Tree growth | SA | 0.41 | ✓ ✓ |
| P04 | Hibernating animal | MC | 0.44 | ✓ |
| P05 | Two reasons for famine | SA* | 0.32 | ✓ |
| P06 | Digestion in stomach | SA | 0.42 | ✓ |
| P07 | Replication of measurement | MC | 0.37 | ✓ |
| Q11 | Earth year | MC | 0.63 | ✓ |
| Q12 | Water level in U-tube | SA | 0.26 | ✓ |
| Q13 | Alcohol/glass expansion in thermometer | MC | 0.44 | ✓ |
| Q14 | Sugar dissolving in water | MC | 0.35 | ✓ |
| Q15 | NOT a chemical change | MC | 0.39 | ✓ |
| Q16 | Light from sun and moon | SA | 0.59 | ✓ |
| Q17 | Function of chlorophyll | MC | 0.53 | ✓ |
| Q18 | Mass of freezing water | SA | 0.30 | ✓ |
| R01 | Bacteria/mold experience | MC | 0.46 | ✓ |
| R02 | Appearance of red dress in green light | MC | 0.45 | ✓ |
| R03 | Two outcomes of introducing new species | SA* | 0.39 | ✓ |
| R04 | Atmospheric conditions in jets | SA | 0.15 | ✓ |
| R05 | Small pieces of wood burn faster | SA | 0.16 | ✓ ✓ |
| R06 | Results of global warming | MC | 0.28 | ✓ |

Notes. ᵃ MC = multiple-choice, SA = short-answer worth 1 point, and SA* = short-answer worth 2 points. ᵇ The p-value reported is defined as the proportion of students who got completely correct answers out of those who took the Booklet 8 test. ᶜ NA indicates that the items cannot be classified into certain knowledge type(s) since the items are too ambiguous or do not deal with science knowledge.

Factor Analysis

The link between construct and assessment does not stop with the logical analysis. A variety of analyses can be performed to verify the posited links, such as examining students’ responses to the test items, collecting and analyzing students’ think-aloud verbalizations while they respond to the test items, or statistically modeling the underlying patterns. In this chapter we empirically evaluate the posited link by examining how item scores covary with each other.

We performed the CFA using the Mplus software (Muthén and Muthén, 1999) to ascertain whether the knowledge model could account for the underlying covariance pattern. We built a statistical model to test the links between the items and the coded knowledge types; the links correspond exactly to the item classifications resulting from the logical analysis in Table 19.2, after removing Item Q16. Mplus outputs a χ² statistic to indicate the goodness of fit of the data to the hypothesized model. The statistics of our model (χ² = 325.49, df = 294, p = 0.10) and the RMSEA (0.01) indicate that the fit of the item scores to our model was adequate and within the bounds of statistical acceptability.
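As a rough check on how the reported fit indices relate to one another, the sketch below recomputes the tail probability and the RMSEA from χ² = 325.49 and df = 294. It rests on assumptions that are not in the source: the statistic is treated as an ordinary chi-square, the RMSEA is taken in its common form sqrt(max(χ² − df, 0) / (df · (N − 1))), and the sample size `N_ASSUMED = 990` is a hypothetical value chosen near the n reported for the questionnaire items. The helper names are illustrative only.

```python
import math


def chi2_sf_even_df(chi2: float, df: int) -> float:
    """Upper-tail probability of a chi-square variate (closed form, even df only)."""
    if df <= 0 or df % 2:
        raise ValueError("this closed form needs a positive, even df")
    half = chi2 / 2.0
    term = math.exp(-half)  # k = 0 term of the finite sum
    total = term
    for k in range(1, df // 2):
        term *= half / k  # builds (chi2/2)**k / k! incrementally
        total += term
    return total


def rmsea(chi2: float, df: int, n: int) -> float:
    """Root mean square error of approximation in one common formulation."""
    return math.sqrt(max(chi2 - df, 0.0) / (df * (n - 1)))


N_ASSUMED = 990  # hypothetical sample size; not stated for the CFA in the text

p_value = chi2_sf_even_df(325.49, 294)
fit_rmsea = rmsea(325.49, 294, N_ASSUMED)
print(f"p = {p_value:.3f}, RMSEA = {fit_rmsea:.4f}")
```

Under these assumptions both quantities should land close to the reported p = 0.10 and RMSEA = 0.01. Estimators for categorical item scores can adjust the test statistic, so the software's own output remains the authoritative source.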
The CFA thus fully confirmed the item classifications from the logical analysis: 17 items as declarative knowledge, eight items as schematic knowledge, and seven as procedural knowledge. Most factor loadings were statistically significant and generally high, which was also true for at least one loading of the double-coded items. The fit and the factor loadings support the feasibility of using our framework of science achievement to analyze the TIMSS 1999 science items.

Further, the relation between each pair of the three hypothesized knowledge factors was also significant, as indicated by estimate-to-standard-error ratios ranging from 3.73 to 11.04. We speculate that the strong associations among the knowledge types may be due to several reasons: (1) Different types of knowledge are, by nature, interdependent aspects of the science understanding that one can achieve; therefore knowledge types should be moderately correlated. (2) Inevitably, an individual’s general ability more or less affects her performance on each item and, subsequently, on the knowledge types. (3) Items are unevenly distributed across format and content; for example, 70 percent of the items are in multiple-choice format and more than half are in life science and physics. If the analysis were replicated with appropriately sampled items, we would expect to observe diluted correlations once the influences of format or content were reduced.

Another part of our CFA work was to compare the knowledge model with competing models. We built alternative models based on different theoretical claims, such as one factor as general ability, two factors as format (multiple-choice and short-answer), or three factors using the performance expectation framework of TIMSS 1999⁴ (Table 19.3). Using the fit measure and other information, we found that the knowledge model had a much better fit than those alternative models. All the models fit the item scores well. Yet only the knowledge model was significantly superior to the one-factor model, whereas the other models improved the fit only slightly. The results suggest that a knowledge model reflects the underlying pattern among item scores better than using the total score as general science achievement or using alternative structures. Overall, regardless of the domain, format, or thinking processes of the TIMSS 1999 items, they clustered into three knowledge factors.

Table 19.3. Comparison Between the Knowledge-type Model and Alternative Models.

| Tested model | Description of factors | Fit of model χ² | df | Probability |
| --- | --- | --- | --- | --- |
| One factor model | General reasoning ability | 343.16 | 297 | 0.03 |
| Knowledge model | Declarative, procedural, and schematic | 325.49 | 294 | 0.10 |
| Format model | Multiple-choice, and short-answer | 339.20 | 296 | 0.04 |
| Content model | Life science, physics, environmental issues, chemistry, earth science, and nature of science | 333.89 | 290 | 0.04 |
| TIMSS-R performance expectation model | Simple information, complex information, applying, theorizing, and solving problems | 341.79 | 296 | 0.04 |

Linking Instructional Experiences to Students’ Achievement

To provide additional evidence bearing on construct validity, we turn to the relationship between students’ achievement and their instructional experiences. Ample research has addressed the relation between learning outcomes and instructional practice on the premise that students’ learning is influenced by classroom experiences (for example, Hamilton et al., 2003; Wenglinsky, 2002). One challenge that such studies must face is the question of what learning outcomes are measured (Glass, 1990; Haertel, 1986; Linn, 2000). This relates directly to the validity concerns that we pointed out at the beginning of this chapter.
The use of invalid measures of science achievement not only results in misleading interpretations and decisions but eventually undermines teaching and learning practice. With this concern in mind, we continue validating our achievement framework by relating students’ classroom experiences to their learning, defined as distinct types of knowledge. We expect that the associations will vary in strength depending on the type of knowledge, which allows appropriate interpretations of students’ achievement. In contrast to using total scores, this partition approach provides more accurate, diagnostic information for tracing the effects of instructional variables. A similar approach that conceptualizes and decomposes science achievement, but on the basis of reasoning dimensions, has been reported to be more valuable than using total scores in a series of studies on the National Educational Longitudinal Study of 1988 achievement test (for example, Hamilton, Nussbaum et al., 1997; Kupermintz and Snow, 1997).

In summary, this section continues to apply the proposed knowledge framework to examine how instructional variables were associated with the different types of knowledge demonstrated by the students. We anticipate that the findings will differ meaningfully enough to justify the use of the knowledge framework rather than total scores.

Measures of instructional experiences

TIMSS 1999 administered questionnaires to both students and their teachers to collect information about the classroom instructional experiences to which students have been exposed.
We limit the scope of our analysis by examining: (1) the instructional variables available in the student questionnaires (Martin et al., 2000); (2) the literature on the impact of instruction (for example, De Jong and van Joolingen, 1998; Duit and Treagust, 1998; Hamilton et al., 2003; Mayer, Mullens and Moore, 2000; Monfils et al., 2000; National Center for Education Statistics, 2001; Papanastasiou et al., 2004; Ruiz-Primo et al., 2002; Smerdon et al., 1999; Tytler, 2002; Von Secker, 2002; Wenglinsky, 2002); and (3) the instructional methods recommended to teachers in science standards documents (for example, NRC, 1996; New Standards, 1997).

Specifically, we chose to focus our analysis on eight instructional methods, grouped as textbook-based and hands-on. Textbook-based methods require students to copy notes from the board, to take quizzes and tests, or to work from worksheets or textbook questions. In contrast, hands-on methods are those recommended by national and state standards for teaching science through authentic activities and inquiry experience. While the textbook-based methods tend to concentrate on rote learning of the materials from the texts, the hands-on methods attempt to help students make sense of what is learned.

Table 19.4 summarizes the means of the instructional variables self-reported by the TIMSS 1999 USA Booklet 8 sample. Generally, students were often exposed to all those instructional methods, as indicated by the range of these variables. The textbook-based methods seemed to be a more frequent experience than the hands-on methods, among which the use of everyday life was the least frequently reported; nevertheless, the difference was negligible in terms of effect size.
Compared with the finding reported by some studies in the last decade that textbook-based teaching was quite dominant relative to hands-on teaching in many American classrooms (Kober, 1993), this shows a gradual shift in teachers’ instructional practice, but a fundamental change in response to the national and state science standards has not yet been fully achieved.

Table 19.4. Means of Instructional Methods Reported by the TIMSS-R USA Booklet 8 Sample.

| Instructional experience | Description of questionnaire item | n | Mean (max = 4) |
| --- | --- | --- | --- |
| Textbook-based methods | | | |
| Notes from board | We copy notes from the board. | 990 | 3.08 |
| Quiz | We have a quiz or test. | 986 | 3.06 |
| Worksheet | We work from worksheets or textbooks on our own. | 984 | 3.12 |
| Hands-on methods | | | |
| Problem | The teacher shows us how to do science problems. | 993 | 2.98 |
| Project | We work on science projects. | 983 | 2.82 |
| Everyday life | We use things from every day life in solving science problems. | 985 | 2.50 |
| Demonstrated experiment | The teacher gives a demonstration of an experiment. | 982 | 2.99 |
| Student experiment | We ourselves do an experiment or practical investigation in class. | 978 | 2.82 |

Note. Students were instructed to report how often each of the instructional methods happened in their science classrooms on a 4-point scale. After recoding, scores of 1, 2, 3, and 4 refer to ‘Never’, ‘Once in a while’, ‘Pretty often’, and ‘Almost always’, respectively.

Relation between types of knowledge and instructional experiences

Informed by the relevant research and science standards, we speculate that the textbook-based instructional methods are associated with students’ learning of declarative knowledge, whereas the hands-on methods can be effective in improving students’ procedural and/or schematic knowledge (for example, Haury and Rillero, 1994; Glasson, 1989; Sutton and Kruger, 2001).
For instance, textbook-based lecture, an effective way of teaching declarative knowledge, is unlikely to be successful in teaching procedural knowledge. In contrast, using everyday life examples, instead of unconnected factual information, to solve science problems is recommended as a strategy for improving students’ understanding across more than one content area (NRC, 1996). Therefore, this instructional method supports students’ construction of schematic knowledge rather than rote learning of declarative knowledge.

To test this predicted pattern, a set of structural equation models was estimated while controlling for some important student-level variables (that is, highest education level of parents, index of home educational resources, out-of-school science study time, index of confidence in science ability, self-reported indication of usually doing well in science, and positive attitude toward science). Those control variables were selected because they are commonly identified in the literature and available in the TIMSS 1999 dataset. We acknowledge that the complex sampling, including the clustering of students within classrooms and the sampling weights, should be taken into account in order to model instructional data and achievement scores (for example, Keeves et al., 2000). However, hierarchical linear modeling is inappropriate for the data sample of each booklet; for example, about half of the classrooms had only one or two students in Booklet 8 (for example, Kreft, 1996; Pollack, 1998).⁵ To address this methodological dilemma, we chose to report the results from the structural equation modeling and to focus our interpretations on the differences between the use of the knowledge framework and total scores rather than on statistical significance (see Table 19.5).
Overall, most of the factor loadings of instructional experiences on students’ performance on the test items were not significant, indicating that, after controlling for all the relevant background variables, students’ instructional experiences did not contribute much to their learning outcomes. Across both models, teaching methods such as copying notes from the board, using everyday life problems and examples, and having students engaged in experiments seem to be positively associated with students’ performance, whereas the other instructional methods are negatively related to students’ test scores. The magnitude of the loadings and the patterns of the instruction–learning relationship are not consistent with the findings of a recent study by Papanastasiou and her colleagues (2004), which examined the relation between TIMSS 1999 instructional activities and students’ achievement as indicated by Rasch scores. We suspect this may be due to the use of data from different countries and different indicators of student performance. In terms of differences between the two models, the relationship exhibits slightly different patterns for some instructional methods, such as the direction of the relationship for Quiz and Everyday life or the strength of the relationship for Student experiment. Within the knowledge-factor model, the factor loadings vary across the three types of knowledge, but some of the instruction–learning relationships are contradictory to our prediction that textbook-based instruction is positively related to students’ learning of declarative knowledge whereas hands-on instruction is effective for learning procedural and schematic knowledge.

Table 19.5. Comparing Factor Loadings of One Factor Model and Knowledge-Factor Model.

| Instructional variable | One factor model: Understanding, estimate | S.E. | Knowledge-factor model: Declarative, estimate | S.E. | Knowledge-factor model: Procedural, estimate | S.E. | Knowledge-factor model: Schematic, estimate | S.E. |
|---|---|---|---|---|---|---|---|---|
| Notes from board | 0.022 | 0.030 | 0.036 | 0.047 | 0.054 | 0.040 | −0.009 | 0.049 |
| Quiz | −0.029 | 0.040 | −0.080 | 0.062 | 0.015 | 0.053 | −0.021 | 0.069 |
| Worksheet | −0.055 | 0.031 | −0.042 | 0.050 | −0.075 | 0.044 | −0.111 | 0.053 |
| Everyday life | 0.005 | 0.034 | 0.019 | 0.055 | −0.100 | 0.045 | 0.004 | 0.057 |
| Problem | −0.017 | 0.033 | −0.001 | 0.052 | −0.023 | 0.047 | −0.055 | 0.057 |
| Project | −0.049 | 0.031 | −0.082 | 0.050 | −0.006 | 0.043 | −0.093 | 0.053 |
| Demonstrated experiment | −0.038 | 0.033 | −0.043 | 0.050 | −0.048 | 0.044 | −0.063 | 0.057 |
| Student experiment | 0.093 | 0.035 | 0.091 | 0.050 | 0.131 | 0.048 | 0.164 | 0.054 |

Note. The ratio of estimate to S.E. typically presents the statistical strength of the relation.

To make sense of this contradiction, we reason that the instruction–learning relation is more accurately represented by the quality of teaching, that is, how teachers implement the instructional methods, than by the implementation frequency or the amount of time teachers spend on those methods. For example, some researchers claim that frequent use of hands-on instruction does not automatically increase students’ learning if instructional activities are not focused or well connected to scientific concepts (for example, Kober, 1993). Therefore, one real challenge for studies such as TIMSS 1999 would be to include items, within the limited scope of student and teacher questionnaires, that capture some important aspects of teaching quality.
Equally challenging, secondary studies must strategically select and work with the data from those studies, for example by being aware of the constraints of the data while interpreting the results and by combining several items to create appropriate indicators.

To conclude, the relations between instruction and student performance differ between the one factor and knowledge-factor models and across the three types of knowledge. Generally speaking, the knowledge-factor model shows a more detailed picture of the instruction–learning relation than the one factor model. Of course, given the problems associated with the self-report technique (for example, inaccuracy of reported information, discrepancy between students’ and teachers’ perceptions of instruction) and the limitations of our methodology discussed earlier, these conclusions need to be cautiously examined before generalization.

Conclusion

The main purpose of this chapter was to outline and apply a knowledge framework to examine the science achievement tested by the TIMSS 1999 Booklet 8 science items and its relation to students’ instructional experiences. This framework conceptualizes science achievement as four types of knowledge: declarative knowledge or ‘knowing that’, procedural knowledge or ‘knowing how’, schematic knowledge or ‘knowing why’ and strategic knowledge or ‘knowing when, where, and how knowledge applies’. We argue that test items with different combinations of item characteristics, acting as affordances and constraints, can elicit or restrict students’ use of their science knowledge. For this purpose, we presented three sets of analyses. First, we applied the framework in a logical analysis to code and classify the TIMSS 1999 items. We observed that declarative knowledge was heavily represented in the test items whereas none of the items tested strategic knowledge.
Second, the results from factor analyses indicated that the underlying pattern of the item scores converged with the proposed item–knowledge links from the logical analysis. Items that were pre-classified as a certain knowledge type clustered together. The knowledge-factor model was also found to fit the item scores better than any of the alternative models. And third, we examined the associations between students’ science knowledge and the self-reported instructional experiences. The factor loadings revealed that modeling the relationship with the knowledge factors provided more specific, meaningful interpretations of students’ learning than modeling it with the one factor model. In addition, factor loadings varied across the three types of knowledge, which was another source of evidence bearing on the differences between the knowledge types. Even though the pattern failed to fully confirm our predictions, most loadings supported the distinctions between the knowledge types that we proposed.

Overall, we conclude that the science achievement measured by the TIMSS 1999 test can be distinguished as different types of knowledge. The study reported here suggests that it is necessary to implement a framework for science achievement, which can be either the one we proposed here or any theoretical framework developed on the basis of cognitive research and related fields, to carefully examine what is measured in science tests. Such a framework provides not only accurate interpretations of students’ achievement scores but also meaningful insights and interesting questions on how instructional experiences are connected to students’ learning. Furthermore, the framework offers valuable information for test development and practice. This chapter, we believe, is a beginning in developing and evaluating such frameworks.
It should be followed by more studies that provide systematic, rigorous evidence using different methods with additional sets of science items.

Notes

1. We recognize the simplification of our framework, for example, treating types of knowledge as if they were truly separable or presenting a static typology of knowledge.
2. In order to make it manageable to test any single student, TIMSS 1999 used an incomplete block design to sample test items and students. The TIMSS 1999 science items were organized into 21 clusters, which were then placed into eight test booklets so that a student responded to only one randomly assigned test booklet. Released items and data files can be found at http://isc.bc.edu/timss1999i/study.html.
3. The inter-coder agreement was calculated based on the codes for all the TIMSS 1999 science items.
4. Performance expectations implemented in TIMSS 1999 included five categories: (1) understanding simple information; (2) understanding complex information; (3) theorizing, analyzing and solving problems; (4) using tools, routine procedures; and (5) investigating the natural world. The framework was revised from a very similar one originally developed in TIMSS, whereas a substantively modified framework based on cognitive demands, namely (1) factual knowledge, (2) conceptual understanding and (3) reasoning and analysis, was proposed in TIMSS 2003. This chapter applied the TIMSS 1999 framework to classify the test items.
5. Analyzing data within each booklet allows us to analyze test items and validate the posited knowledge codes in CFA.

References

AIR (2000) Review of science items from TIMSS 1999: A report from the science committee to the technical review panel. Washington, DC: American Institutes for Research.

Alexander, P.A. and Judy, J.E. (1988) The interaction of domain-specific and strategic knowledge in academic performance.
Review of Educational Research, 58, 375–404.

Anderson, J.R. (1983) The architecture of cognition. Cambridge, MA: Harvard University.

Ayala, C.C., Ayala, M.A. and Shavelson, R.J. (2000, April) On the cognitive interpretation of performance assessment scores. Paper presented at the AERA annual meeting, New Orleans, LA.

Baker, E.L., Linn, R.L., Herman, J.L. and Koretz, D. (2002) Standards for educational accountability systems. CRESST policy brief 5. Los Angeles: University of California, CRESST.

Baxter, G.P. and Glaser, R. (1998) Investigating the cognitive complexity of science assessments. Educational Measurement: Issues and Practice, 7(3), 37–45.

Bennett, R. and Ward, W. (eds.) (1993) Construction versus choice in cognitive measurement. Hillsdale, NJ: Erlbaum.

Britton, E.D., Dossey, J., Eubanks, L., Gisselberg, K., Hawkins, S., Raizen, S.A. and Tamir, P. (1996) Comparing examinations across subjects and countries. In E.D. Britton and S.A. Raizen (eds.), Examining the examinations: An international comparison of science and mathematics examination for college bound students (pp. 23–54). Boston, MA: Kluwer.

Bybee, R.W. (1996) The contemporary reform of science education. In J. Rhoton and P. Bowers (eds.), Issues in science education (pp. 1–14). Arlington, VA: National Science Teachers Association.

Bybee, R.W. (1997) Achieving scientific literacy: From purpose to practice. Portsmouth, NH: Heinemann.

Chi, M.T.H., Feltovich, P. and Glaser, R. (1981) Categorization and representation of physics problems by experts and novices. Cognitive Science, 5, 121–52.

Chi, M.T.H., Glaser, R. and Farr, M. (eds.) (1988) The nature of expertise. Hillsdale, NJ: Erlbaum.

de Jong, T. and Ferguson-Hessler, M.G.M. (1996) Types and qualities of knowledge. Educational Psychologist, 31, 105–13.

de Jong, T. and van Joolingen, W.R. (1998) Scientific discovery learning with computer simulations of conceptual domains. Review of Educational Research, 68(2), 179–201.

De Kleer, J. and Brown, J.S.
(1983) Assumptions and ambiguities in mechanistic mental models. In D. Gentner and A.L. Stevens (eds.), Mental models (pp. 155–90). Hillsdale, NJ: Erlbaum.

Dreyfus, H.L. and Dreyfus, S.E. (1986) Mind over machine: The power of human intuition and expertise in the era of the computer. New York: The Free Press.

Duit, R. and Treagust, D. (1998) Learning in science – from behaviorism towards social constructivism and beyond. In B. Fraser and K. Tobin (eds.), International handbook of science education (pp. 3–25). Dordrecht, Netherlands: Kluwer.

Dutton, J.E. and Jackson, S.E. (1987) The categorization of strategic issues by decision makers and its links to organizational action. Academy of Management Review, 12, 76–90.

Gentner, D. and Stevens, A.L. (eds.) (1983) Mental models. Hillsdale, NJ: Erlbaum.

Glaser, R., Raghavan, K. and Baxter, G.P. (1992) Cognitive theory as the basis for design of innovative assessment: Design characteristics of science assessment (CSE Technical report No. 349). Los Angeles: University of California, CRESST.

Glass, G.V. (1990) Using student test scores to evaluate teachers. In J. Millman and L. Darling-Hammond (eds.), The new handbook of teacher evaluation (pp. 229–40). Newbury Park, CA: SAGE.

Glasson, G.E. (1989) The effects of hands-on and teacher demonstration laboratory methods on science achievement in relation to reasoning ability and prior knowledge. Journal of Research in Science Teaching, 26, 121–31.

Haertel, E. (1986) The valid use of student achievement measures for teacher evaluation. Educational Evaluation and Policy Analysis, 8(1), 45–60.

Hamilton, L.S., McCaffrey, D.F., Stecher, B.M., Klein, S.P., Robyn, A. and Bugliari, D. (2003) Studying large-scale reforms of instructional practice: An example from mathematics and science. Educational Evaluation and Policy Analysis, 25(1), 1–29.

Hamilton, L.S., Nussbaum, E.M., Kupermintz, H., Kerkhoven, J.I.M. and Snow, R.E.
(1997) Enhancing the validity and usefulness of large-scale educational assessments: II. NELS:88 science achievement. American Educational Research Journal, 32, 555–81.

Hamilton, L.S., Nussbaum, E.M. and Snow, R.E. (1997) Interview procedures for validating science assessments. Applied Measurement in Education, 10(2), 181–200.

Haury, D.L. and Rillero, P. (1994) Perspectives of hands-on science teaching. Columbus, OH: ERIC Clearinghouse for Science, Mathematics and Environmental Education.

Keeves, J.P., Johnson, T.G. and Afrassa, T.M. (2000) Errors: What are they and how significant are they? International Education Journal, 11(3), 164–80.

Kober, N. (1993) What we know about science teaching and learning. EdTalk. Washington, DC: Council for Educational Development and Research.

Kohn, A. (2000) The case against standardized testing. Greenwich, CT: Heinemann.

Kreft, Ita G.G. (1996) Are multilevel techniques necessary? An overview, including simulation studies. Unpublished report, California State University, Los Angeles. Retrieved 12 August 2003, from http://www.calstatela.edu/faculty/ikreft/quarterly/quarterly.html

Kupermintz, H. and Snow, R.E. (1997) Enhancing the validity and usefulness of large-scale educational assessments: II. NELS:88 mathematics achievement to 12th grade. American Educational Research Journal, 34, 124–50.

Li, M. (2001) A framework for science achievement and its link to test items. Unpublished dissertation at Stanford University.

Li, M., Shavelson, R.J. and White, R. (In progress). Towards a framework for achievement assessment design: The case of science education. Manuscript submitted.

Linn, R.L. (2000) Assessment and accountability. Educational Researcher, 29, 4–16.

Lomax, R.G., West, M.M., Harmon, M.C. and Viator, K.A. (1995) The impact of mandated standardized testing on minority students. Journal of Negro Education, 64, 171–85.
Martin, M.O., Mullis, I.V.S., Gonzalez, E.J., Gregory, K.D., Smith, T.A., Chrostowski, S.J., Garden, R.A. and O’Connor, K.M. (2000) TIMSS 1999 international science report: Findings from IEA’s Repeat of the Third International Mathematics and Science Study at the eighth grade. Chestnut Hill, MA: Boston College.

Martinez, M.E. (1999) Cognition and the questions of test item format. Educational Psychology, 34, 207–18.

Mayer, D.P., Mullens, J.E. and Moore, M.T. (2000) Monitoring school quality: An indicators report (NCES 2001–030). Washington, DC: National Center for Education Statistics.

Messick, S. (1993) Trait equivalence as construct validity of score interpretation across multiple methods of measurement. In R. Bennett and W. Ward (eds.), Construction versus choice in cognitive measurement (pp. 61–74). Hillsdale, NJ: Erlbaum.

Monfils, L., Camilli, G., Firestone, W. and Mayrowetz, D. (2000, April) Multidimensional analysis of scales developed to measure standards based instruction in response to systemic reform. Paper presented at the annual meeting of AERA.

Muthén, L.K. and Muthén, B.O. (1999) Mplus: The comprehensive modeling program for applied researchers: User’s guide. Los Angeles, CA: Muthén and Muthén.

National Center for Education Statistics (2001) The nation’s report card: Science highlights 2000. Washington, DC: National Center for Education Statistics.

National Research Council (NRC) (1996) National Science Education Standards. Washington, DC: National Academy.

New Standards (1997) Performance standards: English language arts, mathematics, science, applied learning. Washington, DC: Author.

OECD/PISA (2001) Measuring student knowledge and skills. The PISA 2000 assessment of reading, mathematical, and scientific literacy. Paris: Organisation for Economic Cooperation and Development/Programme for International Student Assessment.

Papanastasiou, E.C., Zacharia, Z. and Zembylas, M.
(2004, May) Examining when instructional activities work well in relation to science achievement. Paper presented at the 1st IEA International Research Conference, Lefkosia, Cyprus.

Pellegrino, J., Chudowsky, N. and Glaser, R. (2001) Knowing what students know: The science and design of educational assessment. Washington, DC: National Academy Press.

Pollack, B.N. (1998) Hierarchical linear modeling and the ‘unit of analysis’ problem: A solution for analyzing responses of intact group member. Group Dynamics: Theory, Research, and Practice, 2, 299–312.

Quellmalz, E. (2002) Using cognitive analysis to study the validities of science inquiry assessments. Paper presented at the Annual AERA Meeting, New Orleans, LA.

Randel, J.M. and Pugh, H.L. (1996) Differences in expert and novice situation awareness in naturalistic decision making. International Journal of Human Computer Studies, 45, 579–97.

Rasmussen, J. (1986) Information processing and human-machine interaction: An approach to cognitive engineering. New York: North-Holland.

Ruiz-Primo, M.A., Shavelson, R.J., Hamilton, L. and Klein, S. (2002) On the evaluation of systemic science education reform: Searching for instructional sensitivity. Journal of Research in Science Teaching, 39, 369–93.

Ruiz-Primo, M.A. (2002, February) On a seamless assessment system. Paper presented to the AAAS annual meeting, Boston, MA.

Sadler, P.M. (1998) Psychometric models of student conceptions in science: Reconciling qualitative studies and distractor-driven assessment instruments. Journal of Research in Science Teaching, 35(3), 265–96.

Shavelson, R.J., Carey, N.B. and Webb, N.M. (1990, May) Indicators of science achievement: Options for a powerful policy instrument. Phi Delta Kappan, 692–7.

Shavelson, R.J., Li, M., Ruiz-Primo, M.A. and Ayala, C.C. (2002, August) Evaluating new approaches to assessing learning.
Presented at Joint Northumbria/EARLI Assessment Conference, University of Northumbria at Newcastle, Longhirst Campus.

Shavelson, R.J. and Ruiz-Primo, M.A. (1999) On the assessment of science achievement. (English version) Unterrichtswissenschaft, 27(2), 102–27.

Smerdon, B.A., Burkam, D.T. and Lee, V.E. (1999) Access to constructivist and didactic teaching: Who gets it? Where is it practiced? Teachers College Record, 101(1), 5–34.

Snow, R.E. (1989) Toward assessment of cognitive and conative structures in learning. Educational Researcher, 18(9), 8–14.

Sugrue, B. (1993) Specifications for the design of problem-solving assessments in science. Project 2.1. Designs for assessing individual and group problem-solving. ERIC ED: 372 081.

Sugrue, B. (1995) A theory-based framework for assessing domain-specific problem-solving ability. Educational Measurement: Issues and Practice, 14(3), 29–36.

Sutton, J. and Krueger, A. (eds.) (2001) EDThoughts: What we know about science teaching and learning. Aurora, CO: Mid-continent Research for Education and Learning.

Tytler, R. (2002) Teaching for understanding in science: Constructivist/conceptual change teaching approaches. Australian Science Teachers Journal, 48(4), 30–5.

Von Secker, C. (2002) Effects of inquiry-based teacher practices on science excellence and equity. Journal of Educational Research, 95, 151–60.

Wenglinsky, H. (2002) How schools matter: The link between teacher classroom practices and student academic performance. Education Policy Analysis Archives, 10(12). Retrieved 12 August 2003, from http://epaa.asu.edu/epaa/v10n12/

White, R. (1999) The nature and structure of knowledge: Implications for assessment. Manuscript.

Zeitz, C. (1997) Some concrete advantages of abstraction: How experts’ representations facilitate reasoning. In P.J. Feltovich, K.M. Ford and R.R. Hoffman (eds.), Experts in context (pp. 43–65).
Menlo Park, CA: AAAI Press.