Least squares

advertisement

COMP 558 lecture 18

Nov. 15, 2010

Least squares

We have seen several least squares problems thus far, and we will see more in the upcoming lectures.

For this reason it is good to have a more general picture of these problems and how to solve them.

Version 1: Given an m × n matrix A, where m > n, find a unit length vector x that

minimizes k Ax k.

Here, and in the rest of this lecture, the norm k · k is the L2 norm. Also note that minimizing the

L2 norm is equivalent to minimizing the sum squares of the elements of the vector Ax, i.e. the

L2 norm is just the square root of the sum of squares of the elements of Ax. Also, the reason we

restrict the minimization to be for x of unit length is that, without this restriction, the minimum

is achieved when x = 0 which is uninteresting.

We can solve this constrained least squares problem using Lagrange multipliers, by finding the

x that minimizes:

xT AT Ax + λ(xT x − 1).

(∗)

That is, taking the derivative of (*) with respect to λ and setting it to 0 enforces that x has unit

length. Taking derivatives with respect to the x components enforces

AT Ax + λx = 0

which says that x is an eigenvector of AT A.

So which eigenvector gives the least value of xT AT Ax ? Since xT AT Ax ≥ 0, it follows that

the eigenvalues of AT A are also greater than or equal to 0. Thus, to minimize k Ax k, we take

the (unit) eigenvector of AT A with the smallest eigenvalue, i.e. we are sure this eigenvalue is

non-negative.

Version 2: Given an m × n matrix A with m > n, and given an m-vector b, minimize

k Ax − b k.

If b is 0 then we have the same problem above, so let’s assume b 6= 0. We don’t need Lagrange

multipliers in this case, since the trivial solution x = 0 is no longer a solution so we don’t need to

avoid it. Instead, we expand:

k Ax − b k2 = (Ax − b)T (Ax − b) = xT AT Ax − 2bT Ax + bT b

and then take partial derivatives with respect to the x variables and set them to 0. This gives

2AT Ax − 2AT b = 0.

or

AT Ax = AT b

(1)

which are called the normal equations. Assume the columns of the m × n matrix A have full rank

n, i.e. they are linearly independent so AT A is invertible. (Not so obvious.) Then, the solution is

x = (AT A)−1 AT b.

1

COMP 558 lecture 18

Nov. 15, 2010

What is the geometric interpretation of Eq. (1)? Since b is an m-dimensional vector and A is

an m×n matrix, we can uniquely write b as a sum of a vector in the column space of A and a vector

in the space orthogonal to the column space of A. To minimize k Ax − b k, by definition we find

the x such that the distance from Ax to b is as small as possible. This is done by choosing x such

that Ax is the component of b that lies in the column space of A, that is, Ax is the orthogonal

projection of b to the column space of A. Note that if b already belonged in the column space of

A then the “error” k Ax − b k would be 0 and there would be an exact solution.

Pseudoinverse of A

In the above problem, we define the pseudoinverse of A to be the n × m matrix,

A+ ≡ (AT A)−1 AT .

Recall we are assuming m > n, so that A maps from a lower dimensional space to a higher

dimensional space. 1

Note that

A+ A = I.

Moreover,

AA+ = A(AT A)−1 AT

and AA+ projects any vector b ∈ <m onto the column space of A, that is, it removes from b the

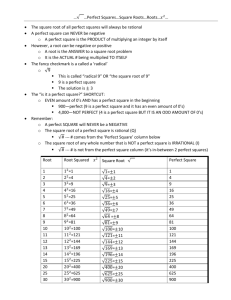

component that is perpendicular to the column space of A. This is illustrated in the figure below.

Note that the plane in the figure on the right contains the origin, since it includes A x = 0, where

x = 0.

Am x n

+

x=Ab

+

A

nxm

Ax

b

The pseudoinverse maps in the reverse direction of A, namely it maps b in an m-D space to an

x in an n-D space. Rather than inverting the mapping A, however, only “inverts” the component

of b that belongs to the column space of A, i.e. A+ A = I. It ignores the component of b that is

orthogonal to the column space of A.

1

Note that if m = n then A+ = A−1 .

2

COMP 558 lecture 18

Nov. 15, 2010

Non-linear least squares (Gauss-Newton method)

Let’s look at a commmon application of version 2 above. Suppose we have m differentiable functions

fi (x) that take <n to <m . The problem we consider now is, given an initial value x0 ∈ <n , find a

nearby x that minimizes k f~(x) k . Note that this problem is not well defined, in the sense that

“nearby” is not well defined. Nonetheless, it is worth considering problems for which one can seek

to improve the solution from some initial x0 .

Consider a linear approximation of the m-vector of functions f~(x) in the neighborhood of x0 ,

so that we are trying to minimize

k f~(x0 ) +

where the Jacobian

∂ f~(x)

(x − x0 ) k,

∂x

∂ f~(x)

∂x

is evaluated at x0 . It is an m×n matrix. This new linearized minimization

~

~

problem is now of the form we saw above in version 2 where A = ∂ f∂x(x) and b = f~(x0 ) − ∂ f∂x(x) x0 .

Since we are using a linear approximation to the f~(x) functions, we do not expect to minimize

k f~(x) k exactly. Instead, we iterate

x(k+1) ← x(k) + ∆x.

At each step, we evaluate the Jacobian at the new point x(k) .

Examples

• P

To fit a line y = mx + b to a set of points (xi , yi ) we found the m and b that minimized

2

i (yi − mxi − b) . This is a linear least squares problem (version 2).

• To

(xi , yi ), we found the θ and r that minimized

P fit a line x cos2θ + y sin θ = r to a set of points

2

(ax

+

by

−

r)

,

subject

to

the

constraint

a

+

b2 = 1. As we saw in lecture 15, this reduces

i

i

i

P

to solving for the (a, b) that minimized i (a(xi − x̄) + b(yi − ȳ))2 , which is the version 1

least squares problem. Once we have θ, we can solve for r since (recall lecture 15), namely

r = x̄ cos θ + ȳ sin θ.

• Vanishing point detection (lecture 15). It is of the form version 2.

• Recall the image registration problem where we wanted the (hx , hy ) that minimizes:

X

{I(x + hx , y + hy ) − J(x, y)}2

(x,y)∈N gd(x0 ,y0 )

This is a non-linear least squares problem, since the (hx , hy ) are parameters of I(x+hx , y+hy ).

To solve the problem, we took a first order Taylor series expansion of I(x + hx , y + hy ) and

thereby turned it into a linear least squares problem. Our initial estimate of (hx , hy ) was

(0, 0). We then iterated to try to find a better solution.

Note that we required that A is of rank 2, so that AT A is invertible. This condition says

that the second moment matrix M needs to be invertible.

3

COMP 558 lecture 18

Nov. 15, 2010

SVD (Singular Value Decomposition)

In the version 1 least squares problem, we need to find the eigenvector of AT A that had smallest

eigenvalue. In the following, we use the eigenvectors and eigenvalues of AT A to decompose A into

a product of simple matrices. This singular value decomposition is a very heavily used tool in data

analysis in many fields. Strangely, it is not taught in most introductory linear algebra courses. For

this reason, I give you the derivation.

Let A be an m × n matrix with m ≥ n. The first step is to note that the n × n matrix AT A is

symmetric and positive semi-definite – that’s easy to see since xT AT Ax ≥ 0 for any real x. So AT A

has an orthonormal set of eigenvectors and the eigenvalues are all real and non-negative. Let V be

an n × n matrix whose columns are the orthonormal eigenvectors of AT A. Since the eigenvalues

are non-negative, we can write2 them as σi2 .

Define Σ to be an n × n diagonal matrix with values Σii = σi on the diagonal. The elements, σi

are called the singular values of A. Note that they are the square roots of the eigenvalues of AT A.

Also note that Σ2 is an n × n diagonal matrix, and

AT AV = VΣ2 .

Next define

Ũm×n ≡ Am×n Vn×n .

(2)

Then

ŨT Ũ = VT AT AV = Σ2 .

Thus, the n columns of Ũ are orthogonal and of length σi .

We now define an m × n matrix U whose columns are orthonormal (length 1), so that

UΣ = Ũ.

Substituting into Eq. 2 and right multiplying by VT gives us

T

Am×n = Um×n Σn×n Vn×n

.

Thus, any m × n matrix A can be decomposed into

A = UΣVT

where U is m × n, Σ is an n × n diagonal matrix, and V is n × n. A similar construction can be

given when m < n.

One often defines the singular value decomposition of A slightly differently than this, namely

one defines the U to be m × m, by just adding m − n orthonormal columns. One also needs to add

m − n rows of 0’s to Σ to make it m × n, giving

T

Am×n = Um×m Σm×n Vn×n

For our purposes there is no important difference between these two decompositions.

Finally, note Matlab has a function svd which computes the singular value decomposition. One

can use svd(A) to compute the eigenvectors and eigenvalues of AT A.

2

Forgive me for using the symbol σ yet again, but σ is always used in the SVD.

4