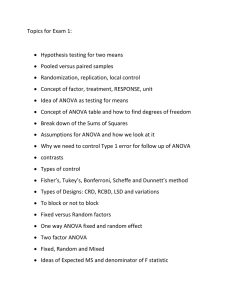

anova - Stata

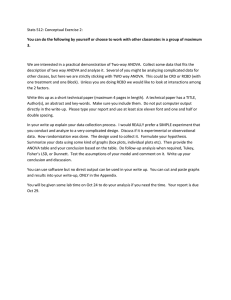
