Power Reduction Techniques For

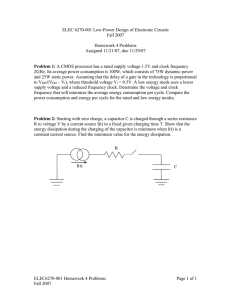

advertisement