Setup and configuration best practices for HPE StoreEasy 3850


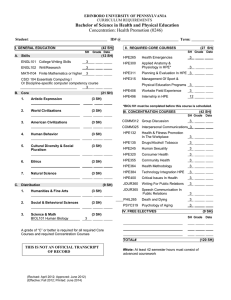

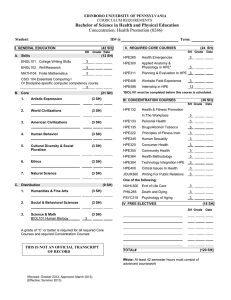

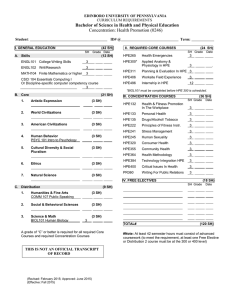

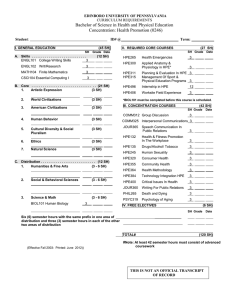

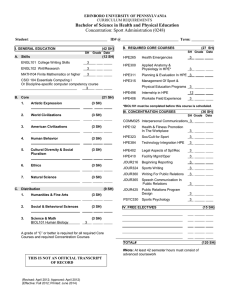

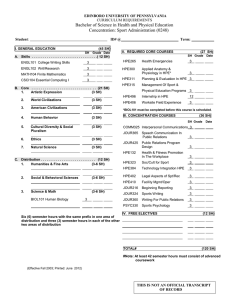

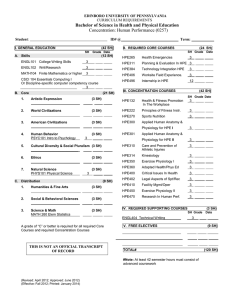

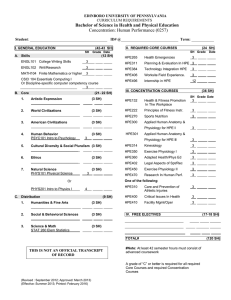

Best practices for HPE StoreEasy 3850
Setup and configuration

Technical white paper

Contents

Technologies used to address these challenges
Objective of this white paper
Best practices for performance
Best practices for fault tolerance
Best practices for networking configuration
HPE StoreEasy 3850 performance kits
Network implementation best practices
NIC teaming
SMB 3.0 Multichannel
Best practices for cluster configurations
Cluster heartbeat network
Quorum
Best practices for storage configurations
HPE StoreEasy 3850 disk configuration
Storage management initiative specification
Configuring SMI-S provider
Microsoft Multipath I/O
Best practices for data protection
Snapshots
Replication
Backup and restore
Antivirus
Best practices for security
User access
Encryption
Best practices for system monitoring
Summary

Users today are demanding file services that are efficient, secure, and highly available, and that support hundreds to thousands of concurrent users and multiple workloads.

Technologies used to address these challenges

The HPE StoreEasy 3850 can answer the challenge of implementing a file services solution that is efficient, secure, and highly available. HPE StoreEasy 3850 provides integrated capabilities not found on unified storage, enabling you to deploy cost-effective solutions with advanced file-serving capabilities.
HPE StoreEasy 3850 is one of the world's most advanced storage platforms and the first to bring enterprise data services and quad-controller designs to midmarket price points.

Objective of this white paper

The objective of this white paper is to provide best practices for managing your HPE StoreEasy 3850 Storage. The topics covered include network configuration, cluster configuration, storage configuration, data protection, security, and monitoring.

Best practices for performance

The HPE StoreEasy 3850 offers several performance options. Table 1 lists best practices for improving response time and throughput.

Table 1. Performance

| Increase performance | For more information |
|---|---|
| Increase throughput by aggregating network adapter ports. | NIC teaming |
| Decrease response times by load balancing across the storage fabric. | Microsoft® Multipath I/O (MPIO) |
| Lower CPU utilization by using Server Message Block (SMB) Direct with remote direct memory access (RDMA)-capable network adapters, which offload network-related functions from the CPU. | SMB 3.0 Multichannel |
| Use the Windows® Storage Server 2012 R2 cluster Scale-Out File Server role and Cluster Shared Volumes (CSVs) for Hyper-V over SMB to increase overall performance. | TechNet article on Scale-Out File Server for application data overview |

Best practices for fault tolerance

Multiple tools and applications can increase the fault tolerance and high availability of data. Table 2 lists best practices for increasing fault tolerance.

Table 2. Fault tolerance

| Increase fault tolerance | For more information |
|---|---|
| Implement failover clustering with two or more nodes to remove dependency on a specific file or application server. | Failover clustering overview; Adding a cluster node to an HPE StoreEasy 3850 |
| Configure multiple network ports that provide redundant network routes by using network interface card (NIC) teaming. | NIC teaming |
| Implement SMB 3.0 Transparent Failover to provide high availability for your file shares. Note: SMB should be configured with two network adapters that are identical in both type and speed. | What's new in SMB in Windows Server® 2012 R2; HPE StoreEasy 3850 |
| Implement redundant routes by using a dual-fabric infrastructure. | Microsoft Multipath I/O |

Best practices for networking configuration

Implementing fast and efficient network configurations provides the following advantages:

• Increased throughput by aggregating network adapter ports
• Lower latency by decreasing the network response time
• Lower CPU utilization through the use of SMB Direct, including support for RDMA-capable network adapters that offload network-related functions from the CPU

HPE StoreEasy 3850 performance kits

The HPE StoreEasy 3850 ships with a 2-port 1GbE HPE FlexibleLOM adapter installed as standard. These ports can be teamed together to provide aggregated network bandwidth. For high-availability network connectivity to the StoreEasy 3850, it is recommended that you invest in the HPE StoreEasy 3850 Gateway Storage 10Gb Performance Kit (part number K2R71A), which includes one HPE Ethernet 10Gb 2-port 560SFP+ Network Adapter, a second processor, and 32 GB of memory.

Network implementation best practices

When configuring networking on the HPE StoreEasy 3850 nodes in a cluster, configure the same network segments on each node. Within each node, it is a best practice to:

• Remove network bottlenecks by implementing multiple network segments using multiple network ports, or by implementing NIC teaming.
• Disable unused network ports.
• Configure IP addresses on a unique network segment for each network interface.
• Isolate the heartbeat network from other network traffic. See the "Cluster heartbeat network" section of this document for further direction.
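The two segment rules above (the same segments on every node, and a distinct segment for each interface within a node) can be checked mechanically before the cluster is built. The following Python sketch is illustrative only: the inventory dictionary, node names, interface names, and addresses are hypothetical, and in practice the data would have to be collected from each node by other means.

```python
import ipaddress
from typing import Dict, List

# Hypothetical inventory format: node name -> {interface name: "address/prefix"}.
Inventory = Dict[str, Dict[str, str]]


def check_segments(inventory: Inventory) -> List[str]:
    """Report interfaces that share a segment on one node, and segments
    that exist on some nodes but not on others."""
    problems: List[str] = []
    segments_per_node = {}

    for node, interfaces in inventory.items():
        first_on_segment = {}  # network -> first interface seen on it
        for ifname, cidr in interfaces.items():
            network = ipaddress.ip_interface(cidr).network
            if network in first_on_segment:
                problems.append(
                    f"{node}: {ifname} and {first_on_segment[network]} "
                    f"share segment {network}"
                )
            else:
                first_on_segment[network] = ifname
        segments_per_node[node] = set(first_on_segment)

    # Every node should be attached to the same set of segments.
    all_segments = set().union(*segments_per_node.values())
    for node, segments in segments_per_node.items():
        for missing in sorted(all_segments - segments, key=str):
            problems.append(f"{node}: no interface on segment {missing}")
    return problems


if __name__ == "__main__":
    inventory = {
        "node1": {"mgmt": "10.0.1.11/24", "data": "10.0.2.11/24",
                  "heartbeat": "192.168.100.1/24"},
        "node2": {"mgmt": "10.0.1.12/24", "data": "10.0.1.13/24"},
    }
    for problem in check_segments(inventory):
        print(problem)
```

In this hypothetical inventory, node2 reports that its data and mgmt ports share 10.0.1.0/24 and that it has no interface on the 10.0.2.0/24 or 192.168.100.0/24 (heartbeat) segments.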
By default, cluster communication between nodes is routed over IP version 6 (IPv6); therefore, it is recommended that you do not disable IPv6.

NIC teaming

NIC teaming, also known as load balancing and failover (LBFO), enables bandwidth aggregation and traffic failover in the event of a network component failure. A network configuration that provides high availability of network connections to the HPE StoreEasy 3850 requires that each NIC team include ports on different network adapters. Beginning with Windows Server 2012, NIC teaming is included in the operating system; therefore, HPE no longer provides a separate application for this capability.

Figure 1. 10Gb NIC teaming

SMB 3.0 Multichannel

The SMB 3.0 Multichannel feature provides additional network performance and availability for specific scenarios and applications. Practical uses of SMB Multichannel include Hyper-V over SMB and access to SQL database files hosted on an SMB network share, provided the share is on a CSV. SMB Multichannel requires multiple network adapters that support Receive Side Scaling (RSS) and, optionally, RDMA. See the "Learn more" section at the end of this document for additional information. HPE InfiniBand network adapters include RDMA capability.

Best practices for cluster configurations

Failover clusters handle possible failures and events on each cluster node by monitoring every node in the cluster membership. The health of the cluster is determined by the heartbeat and quorum statuses as well as by share accessibility.

Cluster heartbeat network

Heartbeats are exchanged every second.
By default, if a node does not respond to a heartbeat within five seconds, the cluster considers that node "down." When a node is considered down, the failover cluster automatically moves any resources that were active on that node to the remaining active nodes in the cluster. Because the cluster depends on receiving heartbeats in a timely manner, configure your heartbeat network to be:

• Reliable and isolated: separate it from other networks to prevent network congestion.
• Unencrypted: IPsec encryption is turned off for inter-node cluster communication of the heartbeat.
• Responsive: take into account other factors that can delay heartbeat packets, such as a heavily utilized server that cannot respond to the heartbeat within its threshold.

Windows Storage Server 2012 R2 includes the ability to modify the default heartbeat threshold for Hyper-V failover clusters. However, do not increase the default heartbeat threshold when implementing Scale-Out File Server cluster roles, because this would lengthen node failure detection to the point that failover between nodes may no longer be seamless.

Quorum

It is recommended that you use a quorum witness disk configured on the storage array. Quorum functionality has been improved in Windows Storage Server 2012 R2; one of these improvements lets the cluster dynamically decide whether to use the quorum witness vote. The witness vote adjusts dynamically based on the number of voting nodes in the current cluster membership: if there is an odd number of node votes, the quorum witness does not have a vote; if there is an even number of node votes, the quorum witness has a vote.

• Create the quorum witness disk as a virtual volume with a minimum size of 544 MB.
• The RAID level of the virtual volume should be RAID 1.
• The virtual volume should be thinly provisioned.
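The dynamic witness rule and the witness volume guidelines above can be expressed in a few lines. The Python sketch below computes whether the witness votes and how many votes a majority needs, and checks a planned witness volume against the three bullets. It is a simplified model under stated assumptions: every node and the witness disk are online, and the dynamic adjustment of node votes that Windows Server 2012 R2 also performs is ignored. The `WitnessVolume` type and the function names are hypothetical.

```python
from dataclasses import dataclass
from typing import List

MIN_WITNESS_MB = 544  # minimum witness volume size recommended in this paper


def witness_has_vote(node_votes: int) -> bool:
    """Dynamic witness: the witness votes only when node votes are even,
    so that it can break a tie."""
    return node_votes % 2 == 0


def votes_needed_for_quorum(node_votes: int) -> int:
    """Majority of all current votes, assuming every node and the
    witness disk are online (dynamic node-vote changes are ignored)."""
    total = node_votes + (1 if witness_has_vote(node_votes) else 0)
    return total // 2 + 1


@dataclass
class WitnessVolume:
    size_mb: int
    raid_level: str          # e.g. "RAID 1"
    thin_provisioned: bool


def witness_volume_issues(volume: WitnessVolume) -> List[str]:
    """Compare a planned witness virtual volume with the guidelines above."""
    issues: List[str] = []
    if volume.size_mb < MIN_WITNESS_MB:
        issues.append(f"size {volume.size_mb} MB is below {MIN_WITNESS_MB} MB")
    if volume.raid_level.replace(" ", "").upper() != "RAID1":
        issues.append(f"{volume.raid_level} is used; RAID 1 is recommended")
    if not volume.thin_provisioned:
        issues.append("volume is not thinly provisioned")
    return issues


if __name__ == "__main__":
    for nodes in range(2, 6):
        print(nodes, "nodes: witness votes =", witness_has_vote(nodes),
              "| votes needed =", votes_needed_for_quorum(nodes))
    print(witness_volume_issues(WitnessVolume(512, "RAID 5", False)))
```

With two nodes, the witness supplies the third vote and a majority is 2; with three nodes, the witness does not vote and a majority is still 2; with four nodes, 3 of 5 votes are needed.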
The quorum virtual volume must be exported (unmasked) to the hosts or host sets that are members of the cluster. If nodes are added to the cluster later, the virtual volume must be exported to the new nodes as well.

Best practices for storage configurations

The configuration of the storage attached to the HPE StoreEasy 3850 depends on your business and application requirements and on the capabilities of the storage array itself. For ease of storage management, the "Windows Standards-Based Storage Management" feature is pre-installed on each HPE StoreEasy 3850. It is recommended that you manage the array through this feature once the initial setup and configuration has been completed. Refer to the HPE StoreEasy 3850 Administrator Guide and Quick Start Guides for initial setup. After the initial configuration has been completed, the remaining storage configuration steps can be performed from the Windows Server 2012 R2 Server Manager Dashboard.

HPE StoreEasy 3850 disk configuration

• Storage pools should contain one LUN. When multiple LUNs are contained within a storage pool, any operation performed on one LUN affects the other LUNs in that pool until the operation has completed, which can degrade performance.
• Midline (MDL) or Nearline SAS drives of 2 TB or larger should use RAID 6; the added redundancy of RAID 6 protects against data loss if a second drive fails in a group of large MDL SAS drives.
• When using RAID 5 with MDL SAS drives, it is recommended that you assign one spare drive per enclosure to the RAID 5 group.
• RAID groups should not contain drives from multiple enclosures. This also applies to spare drives assigned to the RAID group.
• A pool that has multiple LUNs should not be shared between server nodes; in other words, all LUNs in a single pool should be mounted on one server.
• It is recommended that you use RAID 1 when configuring storage for virtual machines (VMs), with one RAID 1 LUN per VM.
• Consider using RAID 10 (RAID 1+0) for applications that have a very high write ratio (over 50 percent of the access rate).
• Consider RAID 50 (RAID 5+0) for FC or serial-attached SCSI (SAS) drives; it provides the highest performance-to-capacity ratio.

Storage management initiative specification

The Storage Management Initiative Specification (SMI-S) uses an object-oriented model, based on APIs that define objects and services, to enable the management of storage area networks. Because the storage array provides an application programming interface (API) based on the SMI-S standard, the HPE StoreEasy 3850 can manage the array through this API. Centralized management of consolidated storage environments can be configured through the SMI-S provider, provided that the storage array supports SMI-S.

Configuring SMI-S provider

The HPE StoreEasy 3850 includes a simplified method for registering the SMI-S provider during the initial configuration tasks (ICT), which registers both cluster nodes when executed. The registration process completes the following tasks:

• Saves the provider information for reuse whenever the service or system restarts
• Saves the credentials for the provider
• Allows certificates to be added to the proper store, if you are using the recommended Secure Sockets Layer (SSL) (HTTPS) communication protocol
• Performs a basic discovery
• Subscribes for indications

To complete the registration, enter the IP address of the array, the user ID, and the user password. As part of this registration, the storage provider cache is also updated on both cluster nodes.

Figure 2. SMI-S Registration

If additional SMI-S providers need to be registered, use PowerShell to complete this task:

• At an elevated PowerShell prompt, run the "Register-SmisProvider" cmdlet. More details can be found here: technet.microsoft.com/en-us/library/jj884241.aspx.

Figure 3. SMI-S Registration using PowerShell

• After registering the provider, run the "Update-StorageProviderCache" cmdlet:

Update-StorageProviderCache -DiscoveryLevel Full

Registration is supported for HTTP through port 5988 and for HTTPS through port 5989. It is recommended that you register through HTTPS to ensure that the SSL certificates are properly configured and to provide a higher level of security for communications.

Note: Updating the cache can take several minutes, depending on the storage configuration. You must manually update the provider cache any time a change is made to the HPE StoreEasy 3850 storage system through HPE Intelligent Management Center (IMC).

Microsoft Multipath I/O

The Microsoft Multipath I/O (MPIO) feature is pre-installed on the HPE StoreEasy 3850. When used in a highly available storage fabric, this feature provides the highest level of redundancy and availability. HPE StoreEasy 3850 systems use the Microsoft MPIO device-specific module (DSM), and the HBAs installed in the HPE StoreEasy 3850 are MPIO-capable. MPIO is configured during the initial configuration of the HPE StoreEasy 3850 through ICT, and a reboot is required to complete the configuration.

Figure 4. MPIO not configured

After the system has been rebooted, ICT shows MPIO as configured.

Figure 5. MPIO configured

Best practices for data protection

As file storage data grows, or as file storage from other storage media is consolidated into the HPE StoreEasy solution, it becomes increasingly important to be able to recover or restore that data.

Snapshots

Volume Shadow Copy Service

The ability to perform accurate and efficient backup and restore operations requires close coordination of backup applications, business applications, and the storage management hardware and hardware-related software.
Volume Shadow Copy Service (VSS) provides the coordination of actions required to create a consistent shadow copy (also known as a snapshot or point-in-time copy) of the data to be backed up. Typical VSS scenarios include:

• Backing up application data and system state information, including archiving data to another hard disk drive, to tape, or to other removable media
• Performing efficient disk-to-disk backups
• Recovering quickly from data loss by restoring data to the original logical unit number (LUN) or to an entirely new LUN that replaces an original LUN that failed

Windows features and applications that use VSS include Windows Server Backup, Shadow Copies of Shared Folders, System Center Data Protection Manager, and System Restore.

Plan your backup strategy carefully when backing up virtual volumes with VSS, because VSS does not support creating a shadow copy of a virtual volume and its host volume in the same snapshot set. If the virtual volume must be backed up, note that VSS does support creating snapshots of volumes on a virtual hard disk (VHD).

When configuring VSS to make shadow copies of shared folders, the following best practices apply:

• Locate the snapshot copy of a volume on a different volume, so that each volume has its own snapshot volume.
• For clustered StoreEasy 3850 implementations, snapshot volumes and their partner volumes must be part of the same cluster resource group.
• The Diff Area storage is the storage space that Shadow Copies of Shared Folders allocates on a volume to maintain snapshots of the contents of shared folders. In a cluster configuration, the cluster manages bringing disks online and offline, but VSS needs the Diff Area and original volumes to be brought online or offline in a specific order. Therefore, when using failover clusters, do not place the Diff Area storage on a different volume of the same disk as the original volume. Instead, the Diff Area storage and the original volume must be the same volume, or they must be on separate physical disks.
• Adjust the shadow copy schedule to fit the work patterns of your clients.
• Do not enable shadow copies on volumes that use mount points. Instead, explicitly include the mounted volume in the schedule for shadow copy creation. For previous versions of a file to be available, the volume must have a drive letter assigned.
• Perform regular backups of your file server; shadow copies of shared folders are not a replacement for regular backups.
• Do not schedule shadow copies more often than once per hour. The default schedule creates shadow copies at 7:00 a.m., Monday through Friday. If you need copies to be created more often, verify that you have allotted enough storage space and that copies are not created so often that server performance degrades. There is also an upper limit of 64 shadow copies per volume; when it is reached, the oldest copy is deleted. If shadow copies are created too often, this limit can be reached very quickly and older copies lost at a rapid rate.
• Before deleting a volume that is being shadow copied, delete the scheduled task for creating shadow copies. If the snapshots are no longer needed, the snapshot volume can also be deleted.
• Use an allocation unit size of 16 KB or larger when formatting a source volume on which Shadow Copies of Shared Folders will be enabled. If you plan to defragment the source volume, set the cluster allocation unit size to 16 KB or larger when you initially format it; otherwise, the number of changes caused by defragmentation can cause previous versions of files to be deleted. If you require NT File System (NTFS) file compression on the source volume, however, you cannot use an allocation unit size larger than 4 KB; in this case, defragmenting a very fragmented volume may cause you to lose older shadow copies faster than expected.

After backing up a volume that contains shadow copies, do not restore the volume to a different volume on the same computer. Doing so would create multiple snapshots with the same snapshot ID on the system and cause unpredictable results, including data loss, when performing a shadow copy revert.

Replication

Windows DFS Replication and Namespaces

DFS Namespaces and DFS Replication are role services in the File and Storage Services role. DFS Namespaces (DFS-N) enables you to group shared folders located on the same or different servers into one or more logically structured namespaces. Windows DFS Replication (DFS-R) enables efficient replication of folders across multiple servers and multiple locations. New functionality implemented in Windows Server 2012 R2 includes support for data deduplication volumes. The following new or improved DFS-R capabilities were included in the Windows Server 2012 R2 release:

• New Windows PowerShell cmdlets for performing the majority of administrative tasks for DFS-R. Administrators can use these cmdlets to perform common administrative tasks and, optionally, automate them with Windows PowerShell scripts. These tasks include operational actions such as creating, modifying, and removing replication settings. The cmdlets also add new functionality, such as the ability to clone DFS Replication databases and restore preserved files.
• New Windows Management Infrastructure (WMI)-based methods for managing DFS Replication. Windows Server 2012 R2 includes new Windows Management Infrastructure (sometimes referred to as WMI v2) provider functionality, which provides programmatic access to manage DFS Replication. The existing WMI v1 namespace is still available for backward compatibility.
• Database cloning for initial sync, which makes it possible to bypass initial replication when creating new replicated folders, replacing servers, or recovering from a database. The "Export-DfsrClone" cmdlet exports the DFS Replication database and volume configuration .xml file settings for a given volume from the local computer so that the database can be cloned on another computer. Running the cmdlet triggers the export in the DFS Replication service and then waits for the service to complete the operation. During the export, DFS Replication stores file metadata in the database as part of the validation process. After you preseed the data and copy the exported database and .xml file to the destination server, you use "Import-DfsrClone" to import the database to a volume and validate the files in the file system. Files that match exactly do not require expensive inter-server metadata and file synchronization, which leads to dramatic performance improvements during the initial sync.
• The ability to rebuild corrupt databases without unexpected data loss caused by a non-authoritative initial sync. In Windows Server 2012 R2, when DFS Replication detects database corruption, it rebuilds the database by using local file and update sequence number (USN) change journal information, and then marks each file with a "Normal" replicated state. DFS Replication then contacts its partner servers and merges the changes, which allows the last writer to save the most recent changes as if this were normal ongoing replication.
• The option to disable cross-file remote differential compression (RDC) between servers. In Windows Server 2012 R2, DFS Replication lets you choose whether to use cross-file RDC on a per-connection basis between partners. Disabling cross-file RDC might increase performance at the cost of higher bandwidth usage.
• Configurable file staging sizes on individual servers. DFS Replication now allows you to configure the minimum staging size from as little as 256 KB to as large as 512 TB. When you are not using RDC or staging, files are no longer compressed or copied to the staging folder, which can increase performance at the cost of much higher bandwidth usage.
• The ability to restore files from the "ConflictAndDeleted" and "PreExisting" folders. DFS Replication now enables you to inventory and retrieve conflicted, deleted, and pre-existing files by using the "Get-DfsrPreservedFiles" and "Restore-DfsrPreservedFiles" cmdlets. You can restore these files and folders to their previous location or to a new location, you can choose to move or copy the files, and you can keep all versions of a file or only the latest version.
• Automatic recovery after a loss of power or an unexpected stop of the DFS Replication service. In Windows Server 2012 and Windows Server 2008 R2, after an unexpected shutdown the default behavior required you to re-enable replication manually by using a WMI method. In Windows Server 2012 R2, an unexpected shutdown triggers the automatic recovery process by default; to opt out of this behavior, use the registry value. In addition, if the only replicated folder on a volume is the built-in "SYSVOL" folder of a domain controller, recovery is triggered automatically regardless of the registry setting.
• In Windows Server 2012 and earlier operating systems, disabling a membership immediately deleted the “DfsrPrivate” folder for that membership, including the “Staging,” “ConflictAndDeleted,” and “PreExisting” folders. After these folders are deleted, you cannot easily recover data from them without reverting to a backup. In Windows Server 2012 R2, DFS Replication leaves the DfsrPrivate folder untouched when you disable a membership, so you can recover conflicted, deleted, and pre-existing files from it as long as the membership is not re-enabled (re-enabling the membership deletes the contents of all private folders).
The following scalability guidelines for DFS-R have been tested by Microsoft on Windows Server 2012, Windows Server 2008 R2, and Windows Server 2008:
• Size of all replicated files on a server: 10 terabytes
• Number of replicated files on a volume: 11 million
• Maximum file size: 64 gigabytes
Windows Server 2012 R2 improves on these figures, raising the tested size of all replicated files on a server from 10 TB to 100 TB. Refer to this blog for more details on the Windows Server 2012 R2 DFS-R capabilities.
A DFS-N namespace can contain more than 5,000 folders with targets; the recommended limit is 50,000 folders with targets. DFS-N also supports stand-alone namespaces if your environment meets specific conditions; for the requirements, go to technet.microsoft.com/library/cc770287.aspx.
DFS-R relies on Active Directory Domain Services (AD DS) for configuration, so it works only in a domain and not in a workgroup. Extend the AD DS schema to include the Windows Server 2003 R2 or later schema additions (Windows Server 2003 R2, Windows Server 2008, Windows Server 2008 R2, Windows Server 2012, or Windows Server 2012 R2). Ensure that all servers in a replication group are located in the same Active Directory forest; you cannot enable replication across servers in different forests.
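As an illustration of how the tested scalability guidelines above might be applied during planning, the following Python sketch compares a planned replication set against them. The thresholds are the figures quoted in this paper; the `ReplicationPlan` class, the `check_dfsr_plan` function, and the input format are hypothetical, and the sketch does not gather anything from DFS-R itself. Treat a warning as a prompt to review the design, since these are tested guidelines rather than enforced limits.

```python
from dataclasses import dataclass, field

# Tested DFS-R scalability guidelines quoted in this paper.
# They are tested guidelines, not limits enforced by Windows.
MAX_FILES_PER_VOLUME = 11_000_000
MAX_FILE_SIZE_GB = 64
SERVER_TOTAL_TB = {"2012 R2": 100}  # Windows Server 2012 R2
DEFAULT_SERVER_TOTAL_TB = 10        # Windows Server 2012, 2008 R2, and 2008


@dataclass
class ReplicationPlan:
    """Hypothetical summary of the data one server will replicate."""
    os_version: str             # for example "2012 R2" or "2012"
    total_replicated_tb: float  # size of all replicated files on the server
    largest_file_gb: float      # largest single replicated file
    files_per_volume: dict[str, int] = field(default_factory=dict)


def check_dfsr_plan(plan: ReplicationPlan) -> list[str]:
    """Return a warning for each guideline the plan exceeds; empty means within them."""
    warnings = []
    server_limit = SERVER_TOTAL_TB.get(plan.os_version, DEFAULT_SERVER_TOTAL_TB)
    if plan.total_replicated_tb > server_limit:
        warnings.append(
            f"{plan.total_replicated_tb} TB replicated exceeds the tested "
            f"{server_limit} TB per server"
        )
    for volume, count in plan.files_per_volume.items():
        if count > MAX_FILES_PER_VOLUME:
            warnings.append(
                f"volume {volume} has {count:,} files, above the tested 11 million"
            )
    if plan.largest_file_gb > MAX_FILE_SIZE_GB:
        warnings.append(
            f"largest file is {plan.largest_file_gb} GB, above the tested 64 GB"
        )
    return warnings


if __name__ == "__main__":
    plan = ReplicationPlan("2012", 12.5, 40, {"E:": 9_500_000, "F:": 12_000_000})
    for warning in check_dfsr_plan(plan):
        print(warning)
```

In the usage example, the Windows Server 2012 plan is flagged for its 12.5 TB total and for volume F:, while the same totals on Windows Server 2012 R2 would only trigger the per-volume file-count warning.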
DFS-R can replicate numerous folders between servers. Ensure that each replicated folder has a unique root path and that the root paths do not overlap. For example, D:\Sales and D:\Accounting can be the root paths for two replicated folders, but D:\Sales and D:\Sales\Reports cannot.
Place any folders that you want to replicate with DFS-R on volumes formatted with the NTFS file system. DFS-R does not support the Resilient File System (ReFS) or the file allocation table (FAT) file system, and it does not support replicating content stored on Cluster Shared Volumes.
DFS-R does not explicitly require time synchronization between servers, but it does require that the server clocks match closely: by default, they must be within five minutes of each other for Kerberos authentication to function properly. In addition, DFS Replication uses time stamps to determine which file takes precedence in the event of a conflict, and accurate times are also important for garbage collection, schedules, and other features.
Do not configure file system policies on replicated folders. The file system policy reapplies NTFS permissions at every Group Policy refresh interval.
DFS-R cannot be used to replicate mailboxes hosted on Microsoft Exchange Server. DFS-R can safely replicate Microsoft Outlook personal folder files (.pst) and Microsoft Access files only if they are stored for archival purposes and are not accessed across the network by a client such as Outlook or Access.
File Server Resource Manager (FSRM) file screening settings must match on both ends of the replication. When implementing file screens or quotas, follow these best practices:
• The hidden DfsrPrivate folder must not be subject to quotas or file screens.
• Screened files must not exist in any replicated folder before screening is enabled.
• No folder may exceed its quota before the quota is enabled.
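The replicated-folder and FSRM rules above can be checked before replication, file screens, or quotas are enabled. The following Python sketch is hypothetical: all function names are illustrative, it works on path lists and dictionaries that you would collect yourself rather than querying DFS-R or FSRM, it assumes each DfsrPrivate folder is in its default location under the replicated folder root, and it reduces FSRM file groups to plain file extensions.

```python
from pathlib import PureWindowsPath


def overlapping_roots(roots: list[str]) -> list[tuple[str, str]]:
    """Pairs of replicated-folder root paths where one equals or contains the other."""
    paths = [PureWindowsPath(r) for r in roots]
    conflicts = []
    for i, a in enumerate(paths):
        for b in paths[i + 1:]:
            # PureWindowsPath compares case-insensitively, as Windows does.
            if a == b or a in b.parents or b in a.parents:
                conflicts.append((str(a), str(b)))
    return conflicts


def policies_covering_dfsr_private(policy_paths: list[str],
                                   replicated_roots: list[str]) -> list[str]:
    """Quota or file-screen paths that would also apply to a DfsrPrivate folder.

    Assumes DfsrPrivate is in its default location, <root>\\DfsrPrivate, and that
    a quota or file screen applies to its folder and everything below it.
    """
    private_dirs = [PureWindowsPath(r) / "DfsrPrivate" for r in replicated_roots]
    hits = []
    for p in policy_paths:
        policy = PureWindowsPath(p)
        for private in private_dirs:
            # Covered if the policy is set on, above, or inside DfsrPrivate.
            if policy == private or policy in private.parents or private in policy.parents:
                hits.append(p)
                break
    return hits


def screened_files_present(files: list[str], screened_extensions: set[str]) -> list[str]:
    """Existing files that a planned file screen would block; remove them first."""
    blocked = {ext.lower() for ext in screened_extensions}
    return [f for f in files if PureWindowsPath(f).suffix.lower() in blocked]


def folders_over_quota(usage_bytes: dict[str, int], quota_bytes: dict[str, int]) -> list[str]:
    """Folders whose current usage already exceeds the quota you plan to enable."""
    return [
        folder for folder, limit in quota_bytes.items()
        if usage_bytes.get(folder, 0) > limit
    ]


if __name__ == "__main__":
    print(overlapping_roots([r"D:\Sales", r"D:\Accounting", r"D:\Sales\Reports"]))
    print(policies_covering_dfsr_private([r"D:\Sales\Reports"], [r"D:\Sales"]))
```

Because `PureWindowsPath` only manipulates strings, these checks run on any operating system. Note that, under the stated assumption, a quota or screen set on a replicated folder root is flagged, because it also covers that root’s DfsrPrivate folder.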
Use hard quotas with caution. Individual members of a replication group can stay within a quota before replication but exceed it once files from other members are replicated to them.
Backup and restore
It is always a best practice to have a backup solution that backs up the data on your storage arrays. This ensures that you can recover your data after a catastrophic failure or after file corruption caused by user error, application problems, or database issues. When planning and implementing your backup and restore process for a cluster configuration, note the following:
• For a backup to succeed in a failover cluster, the cluster must be running and must have quorum. In other words, enough nodes must be running and communicating (perhaps with a disk witness or file share witness, depending on the quorum configuration) for the cluster to achieve quorum. For more information about quorum in a failover cluster, see “Understanding Quorum Configurations in a Failover Cluster.”
• Before putting a cluster into production, test your backup and recovery process.
• When you perform a backup (using Windows Server Backup or other backup software), choose options that allow you to perform a system recovery from your backup. For more information, see the help or other documentation for your backup software.
• When backing up data on clustered disks, note which disks are online on that node at that time. Only disks that are online and owned by that cluster node at the time of the backup are backed up.
• When restoring data to a clustered disk, note which disks are online on that node at that time. Data can be written only to disks that are online and owned by that cluster node when the backup is being restored.
• Back up the HPE StoreEasy 3850 server operating system before and after any significant configuration change. Perform system state backups of each of your HPE StoreEasy 3850 servers.
System state backups, also called Automated System Recovery (ASR) capable backups, include the cluster configuration information needed to fully restore the cluster. You need system state backups of every node, taken regularly, to record the meta-information on the cluster’s disks.
Note: The Microsoft Cluster Service must be running on the node to record the correct disk information on the cluster’s disks.
• Review backup logs routinely to ensure that data is being backed up properly.
• Monitor network reports for errors or congestion, which can cause backup errors.
• Provision backup storage capacity of at least 1.5 times the size of the data that you want to back up.
• Rotate backup media between onsite and offsite locations at regular intervals.
• When backing up Cluster Shared Volumes, refer to the information at technet.microsoft.com/library/jj612868.aspx.
Be sure to back up the cluster quorum data, which contains the current cluster configuration, application registry checkpoints, and the cluster recovery log. For further information on backing up and restoring failover clusters, see go.microsoft.com/fwlink/?LinkId=92360.
Antivirus
Antivirus products can interfere with server performance and cause issues with the cluster. Configure the antivirus software to exclude the following cluster-specific resources on each cluster node:
• The witness disk, if used
• The file share witness, if used
• The cluster folder “%systemroot%\cluster”
Antivirus software that is not cluster-aware may cause unexpected problems on a server that is running Cluster Services. For more details, see support.microsoft.com/kb/250355. It is also recommended that you disable scanning of DFS sources; for details, see support.microsoft.com/kb/822158.
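The per-node exclusion list above can be assembled from each node’s cluster settings. This Python sketch is a hypothetical helper, not part of any antivirus product’s interface: it assumes the witness disk is identified by a drive letter and the file share witness by a UNC path, and it takes the system root as a parameter instead of expanding `%systemroot%`, so it behaves the same on any platform. It covers only the three cluster items listed above, and the server and share names in the usage example are placeholders.

```python
from pathlib import PureWindowsPath
from typing import Optional


def cluster_av_exclusions(systemroot: str,
                          witness_disk: Optional[str] = None,
                          file_share_witness: Optional[str] = None) -> list[str]:
    """Build the antivirus exclusions for one cluster node.

    systemroot is passed in (for example "C:\\Windows") rather than expanded
    from %systemroot%, so the function behaves the same on any platform.
    """
    # The cluster folder, %systemroot%\cluster, is excluded on every node.
    exclusions = [str(PureWindowsPath(systemroot) / "cluster")]
    if witness_disk:
        # Exclude the whole witness disk: "Q:" becomes the drive root "Q:\".
        exclusions.append(witness_disk.rstrip("\\") + "\\")
    if file_share_witness:
        # A file share witness is a UNC path such as \\server\share; keep it as given.
        exclusions.append(file_share_witness)
    return exclusions


if __name__ == "__main__":
    for path in cluster_av_exclusions("C:\\Windows", witness_disk="Q:",
                                      file_share_witness="\\\\fs01\\witness"):
        print(path)
```

How the resulting paths are entered depends on your antivirus product; the same list should be applied on every node, since each node can own the witness resources after a failover.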
Antivirus software may also cause issues with virtual machines; for details, see support.microsoft.com/kb/961804.
Best practices for security
User access
Manage user access through Active Directory rather than local accounts. Limit user access to shares, folders, or files by implementing domain-level group permissions. Create a separate user account for each system administrator who will use the system; alternatively, configure the system to use Active Directory and make sure that all users log in with their own accounts. When scripting, use the lowest privilege level required: if a script requires only read access to the system, use a “Browse” account, and if a script does not need to remove objects, use a “Create” account.
Encryption
Windows BitLocker
Windows BitLocker Drive Encryption is a data protection feature of the operating system. Because BitLocker is integrated with the operating system, it addresses the threats of data theft or exposure from lost, stolen, or inappropriately decommissioned computers. When installing the BitLocker optional component on a server, you also need to install the “Enhanced Storage” feature. Servers also offer an additional BitLocker feature that can be installed: BitLocker Network Unlock.
The recommended practice for BitLocker on an operating system drive is to use a computer with a Trusted Platform Module (TPM) version 1.2 or 2.0 and a Trusted Computing Group (TCG)-compliant BIOS or Unified Extensible Firmware Interface (UEFI) firmware implementation, plus a personal identification number (PIN). Because a PIN set by the user is required in addition to TPM validation, a malicious user with physical access to the computer cannot simply start it. The part number for the TPM module supported on the HPE StoreEasy 3850 is 488069-B21.
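The recommended operating system drive configuration above amounts to a short checklist. The following Python sketch encodes it against a hypothetical inventory record; the `BitLockerOsDriveConfig` class, its field values, and the `bitlocker_gaps` function are assumptions for illustration, and the sketch does not query the TPM, the firmware, or BitLocker itself.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class BitLockerOsDriveConfig:
    """Hypothetical inventory record for one server's operating system drive."""
    tpm_version: Optional[str]  # "1.2", "2.0", or None when no TPM is installed
    firmware: str               # for example "TCG BIOS" or "UEFI"
    pin_required: bool          # True when a TPM + PIN protector is configured


SUPPORTED_TPM_VERSIONS = ("1.2", "2.0")
COMPLIANT_FIRMWARE = ("TCG BIOS", "UEFI")


def bitlocker_gaps(cfg: BitLockerOsDriveConfig) -> list[str]:
    """List the ways a configuration falls short of the TPM + PIN recommendation."""
    gaps = []
    if cfg.tpm_version not in SUPPORTED_TPM_VERSIONS:
        gaps.append("no TPM 1.2 or 2.0 (TPM part number 488069-B21 on the StoreEasy 3850)")
    if cfg.firmware not in COMPLIANT_FIRMWARE:
        gaps.append("firmware is not a TCG-compliant BIOS or UEFI implementation")
    if not cfg.pin_required:
        gaps.append("no PIN: someone with physical access could start the computer")
    return gaps


if __name__ == "__main__":
    print(bitlocker_gaps(BitLockerOsDriveConfig("2.0", "UEFI", pin_required=False)))
```

Returning a list of gaps rather than a single pass/fail value makes it easy to report every missing element of the recommendation across a fleet of servers at once.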
Encrypting File System
Windows Encrypting File System (EFS) can encrypt data directly on volumes that use the NTFS file system so that no other user can access the data. Encryption attributes are managed through the Properties dialog box of a folder or file. EFS encryption keys are stored in Active Directory objects on the domain controller with the user account that encrypted the files. Some EFS guidelines are:
• Teach users to export their certificates and private keys to removable media and store the media securely when it is not in use. For the greatest possible security, remove the private key from the computer whenever the computer is not in use. This protects against attackers who physically obtain the computer and try to access the private key. When the encrypted files must be accessed, the private key can easily be imported from the removable media.
• Encrypt the “My Documents” folder for all users (User_profile\My Documents). This ensures that the personal folder, where most documents are stored, is encrypted by default.
• Teach users to encrypt folders rather than individual files. Programs work on files in various ways, and encrypting consistently at the folder level ensures that files are not unexpectedly decrypted.
• The private keys associated with recovery certificates are extremely sensitive. Either generate these keys on a computer that is physically secured, or export their certificates to a .pfx file, protect it with a strong password, and save it on a disk that is stored in a physically secure location.
• Assign recovery agent certificates to special recovery agent accounts that are not used for any other purpose.
• Do not destroy recovery certificates or private keys when recovery agents are changed (agents are changed periodically). Keep them all until all files that may have been encrypted with them have been updated.
• Designate two or more recovery agent accounts per organizational unit (OU), depending on the size of the OU, and designate two or more computers for recovery, one for each designated recovery agent account. Grant permissions to appropriate administrators to use the recovery agent accounts. Two recovery agent accounts provide redundancy for file recovery, and two computers that hold these keys add further redundancy for recovering lost data.
• Implement a recovery agent archive program to make sure that encrypted files can be recovered by using recovery keys. Export recovery certificates and private keys and store them in a controlled and secure manner. Ideally, as with all secure data, keep the archives in a controlled-access vault, with two archives: a master kept onsite and a backup stored in a secure offsite location.
• Avoid using print spool files in your print server architecture, or make sure that print spool files are generated in an encrypted folder.
EFS adds CPU overhead every time a user encrypts or decrypts a file, so plan server capacity accordingly and load balance your servers when many clients use EFS.
HPE StoreEasy 3850 data encryption
HPE StoreEasy 3850 data encryption prevents data exposure that might result from the loss of physical control of disk drives. This solution uses self-encrypting drive (SED) technology to encrypt all data on the physical drives and prevent unauthorized access to data at rest (DAR). When encryption is enabled, the SED locks when power is removed and is not unlocked until the matching key from the HPE StoreEasy 3850 system is used to unlock it. Keep the encryption key file and password safe. If you lose the encryption key file while the HPE StoreEasy 3850 system is still functioning, you can always create another backup of it.
However, if you lose the encryption key file or the password and the HPE StoreEasy 3850 system then fails, the system will be unable to restore access to data. Ensure that backup copies of the latest encryption key file are retained and that the password is known. The importance of keeping the encryption key file and password safe cannot be overstated, because HPE does not have access to the encryption key or password. Different arrays need separate backups, although the same password can be used. The SED data store provides an open interface for authentication key management. It also tracks the serial number of the array that owns each SED, which prevents SEDs from being used in other systems.
Best practices for system monitoring
HPE System Management Homepage
HPE System Management Homepage (SMH) is a Web-based interface that consolidates and simplifies single-system management for HPE servers. SMH is the primary tool for identifying and troubleshooting hardware issues in the storage system; use it to diagnose a suspected hardware problem. On the SMH main page, open the “Overall System Health Status” and “Component Status Summary” sections to review the status of the storage system hardware.
Windows performance monitoring
Run performance monitoring on all your cluster nodes, with all relevant counters logging to a binary file. Use circular logging so that new data overwrites the oldest data; this keeps the captured data file from growing too large and consuming disk space. When a performance issue occurs, the performance data is then already in hand, rather than having to be gathered after the issue has been raised.
Summary
The HPE StoreEasy 3850 offers a tightly integrated, converged solution when paired with HPE Storage.
By following guidelines and best practices as outlined in this technical white paper, you can increase the efficiency and performance of your HPE StoreEasy 3850 converged solution.
Resources
Implementing Microsoft’s NIC teaming
SMB Multichannel
Learn more at hp.com/go/storeeasy3000
© Copyright 2015–2016 Hewlett Packard Enterprise Development LP. The information contained herein is subject to change without notice. The only warranties for Hewlett Packard Enterprise products and services are set forth in the express warranty statements accompanying such products and services. Nothing herein should be construed as constituting an additional warranty. Hewlett Packard Enterprise shall not be liable for technical or editorial errors or omissions contained herein.
Microsoft, Windows, and Windows Server are either registered trademarks or trademarks of Microsoft Corporation in the United States and/or other countries.
4AA6-1003ENW, August 2016, Rev. 1