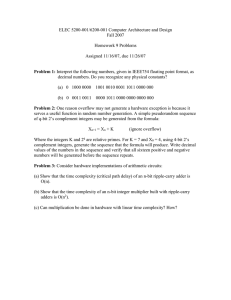

Slides

advertisement

Coding Techniques

for Data Storage Systems

Thomas Mittelholzer

IBM Zurich Research Laboratory

1 /28

Göttingen 2012

www.zurich.ibm.com

Agenda

1.

Channel Coding and Practical Coding Constraints

2.

Linear Codes

3.

Weight Enumerators and Error Rates

4.

Soft Decoding

5.

Codes on Graphs

6.

Stopping Sets

7.

Error Rate Analysis of Array-Based LDPC Codes

8.

Summary

2 /28

Göttingen 2012

www.zurich.ibm.com

1. Channel Capacity and Coding

Discrete memoryless channel (DMC)

Example: BSC(ε) = binary symmetric channel

with crossover probability ε

Y output

input X

DMC

1-ε

0

0

ε

X

PY|X

Y

ε

1

1

1-ε

Shannon 1948: Channel capacity can be approached by coding

Capacity:

C = max I( X; Y)

PX

( Recall: I(X;Y) = - ∑ P(y)logP(y) - ∑ P(x,y)logP(y|x) )

Channel Coding Theorem (Gallager): For every rate R < C, there is a code of length n and rate R such

that under maximum-likelihood (ML) decoding the worst case block error probability is arbitrarily small,

i.e.,

P{ X^ ≠ X}worst case < 4 exp[ – n Er(R) ]

where Er(R)>0 is the random coding exponent (which depends on the channel and R).

Practical Problems: 1. Finding a good code is difficult

2. ML-decoding is complex.

E.g., exhaustive search over code to find x ^ = arg max P (y | x)

x∈code

3 /28

Göttingen 2012

www.zurich.ibm.com

1. Practical Coding Constraints of Data Storage Devices

Practical constraints of DRAM, hard disk drives and tape drives

DRAM (DDR3)

Hard Disk Drives

Tape Drives

BER ~ 10-21

BER ~ 10-15

BER ~ 10-17

~ 50 Gbit/s

~ 1 Gbit/s

~ 1 Gbit/s

Delay

~ 5 ns

(~ 3 ms with seek time)

-

Codeword Length

(in bits)

~ 72

~ 4500

~ 160,000

Reliability

(maximum error rates)

Throughput

(burst operation)

For DRAM, the codeword length and/or decoding time cannot be much extended

For hard disk drives and tape drives, the codeword lengths and/or decoding time could be

extended at the price of buffering more data

The low bit error rates (BER) cannot be verified by simulations

Need coding schemes with efficient decoders whose error rates can be assessed analytically

4 /28

Göttingen 2012

www.zurich.ibm.com

2. Linear Codes

Def.: A linear (n,k) code over a finite field GF(q) is a k-dimensional linear subspace C ⊆ GF(q)n

Examples: 1. Binary (3,1) repetition code C = { 0 0 0, 1 1 1 } in GF(2)3

2. Ternary (3, 1) repetition code C = { 0 0 0, 1 1 1, 2 2 2} in GF(3)3

Def.: Hamming distance between two vectors x = x1 x2 … xn and y = y1 y2 … yn in GF(q)n :

d( x, y) = # { i : xi ≠ yi }

(= number of components in which x and y differ)

Hamming weight of a vector x: w(x) = d(x,0)

Proposition: The pair (GF(q)n , d(. , .) ) is a metric space.

Def.: Minimum distance of a linear code C:

dmin = min {d( x, y) : x, y ∈ C, x ≠ y }

Proposition: A linear code C with minimum distance dmin can correct up to t = (dmin -1)/2 errors.

Proof:

5 /28

Any error pattern e = e1e2…en of weight w(e) ≤ t distorts a codeword x into a received word

y = x + e, which lies in the Hamming sphere S(x,t) of radius t around the codeword x. Since

∩x ∈ C S(x,t) = ∅ , there is a unique closest codeword x to each received word y.

Göttingen 2012

www.zurich.ibm.com

2. Linear Codes: Hamming Codes

Description of a linear code C by parity check matrices H and generator matrices G: ker H = C = im G

Example: Binary (n=7, k=4, dmin = 3) Hamming code C = { x = [x1x2…x7] : x HT = 0 } = ker H, where

0

H = 0

1

0

0

1

1

1

1

0

1

1

0

0

0

1

1

0

1

1

1

Equivalent systematic parity check matrix H’, i.e., ker H’ = C, and systematic generator matrix G

0

H ' = 1

1

1

0

1

1

1

1

1

0

0

1

1

0

1

0

0

0

0

1

=P

1

0

G=

0

0

0

1

0

0

0

1

0

0

0

0

1

1

1

0

1

0

0

1

1

1

=> G HT = [ I – PT] [ P I ]T = PT – PT = 0

Encoding a 4-bit message u = [u1 u2 u3 u4] with generator matrix G:

1

1

0

1

= - PT

x=uG

If G is systematic, the encoder inverse map x → u is a projection

6 /28

Göttingen 2012

www.zurich.ibm.com

2. Linear Codes: Hamming Codes (cont.ed)

The binary (7,4) Hamming code has dmin = 3:

•

there is a weight-3 codeword, viz., x = 1 1 1 0 0 0 0

•

no two columns of H are linearly dependent, i.e.,

there is no weight-2 codeword (and no weight-1 codeword)

0

H = 0

1

0

0

1

1

1

1

0

1

1

0

0

0

1

1

0

=> The (7,4) Hamming code can correct t = 1 error.

1

1

1

j-th column

Syndrome Decoding

For an error pattern e = [e1e2…e7] of weight w(e) = 1, the received word y = x + e satisfies

s = y HT = (x + e) HT = eHT = [0 … 1 .. 0] HT = transpose of j-th column of H

“syndrome”

j-th nonzero component of e

=> Error correction at j-th position:

u

7 /28

Encoder

x = uG

x

BSC

y

x^ = y – [0 … 1 .. 0]

Syndrome

Former

s = yHT

Göttingen 2012

s

Decoder

x^

Encoder

Inverse

u^

www.zurich.ibm.com

2. Linear Codes: Hamming Codes (cont.ed)

Proposition:

0

For every m > 1, there is a binary Hamming code

M

of length n = 2m – 1, dimension k = n – m and

0

H

=

dmin = 3, characterized by the mxn parity check matrix

0

m

(columns = binary representation of all numbers 1 … 2 – 1)

1

L

0

0

0

M

0

M

0

M

1

M

1

1

0

1

1

0

0

1

0

L

1

1

M

1

1

1

m

rows

Proposition: Hamming codes are perfect, i.e., the decoding spheres fill the entire space

∪x∈C S(x,t=1) = GF(2)n

To show: ∑x∈C |S(x,t=1)| = 2n ⇔ 2k Vt=1 = 2n-m (1 + n) = 2n

n

n

where Vt = 1 + + ... +

1

t

23 23 23 12

Remark: There is a (n=23,k=12,dmin=7) perfect “Golay” code

1 + + + 2 = 2 23

and there are no other nontrivial binary codes.

1 2 3

Sphere packing problems

Applications:

A shortened (n=72,k=64,dmin=4) Hamming code with an overall parity bit is used in

DRAM standards. For a BSC with ε ≈ 10-12, one achieves a BER of about 10-21.

8 /28

Göttingen 2012

www.zurich.ibm.com

2. Linear Codes: Reed-Solomon Codes

Let α∈GF(q) be a primitive element, i.e., <α> = GF(q)\{0}.

Let d > 0 “design distance”

Def.: An (n = q – 1, k = n – d + 1) Reed-Solomon (RS) code is determined by the parity-check matrix

1 α

2

1

α

H = 1 α 3

M

1 α d -2

α2

(α 2 ) 2

(α 3 ) 2

α3

(α 2 ) 3

(α 3 ) 3

L

L

L

(α d-2 ) 2

(α d-2 ) 3

L

α n -1

(α 2 ) n −1

(α 3 ) n −1

M

d - 2 n −1

(α )

d–1

rows

Every (d-1)x(d-1)-submatrix is a Vandermonde matrix based on mutually different terms αi1, αi2, …, αid-1

and, hence, has full rank d-1. Thus, no d – 1 columns of H are linearly dependent dmin ≥ d

Theorem: The minimum distance of the (n, k) RS code is dmin = d = n – k + 1

Singleton – Bound on an (n, k, dmin) linear code:

dmin ≤ n – k + 1

Let G be systematic. Then [ 0 0 … 0 1] G = [ 0 0 … 0 1 xk+1 xk+2 … xn] has weight at most n – k +1

A linear code meeting the Singleton – Bound is called maximum-distance separable

9 /28

Göttingen 2012

www.zurich.ibm.com

3. Weight Enumerators

Let C be a linear (n,k,dmin) code over GF(q) and let Ai be the number of codewords of weight i.

∆

Def.:

n

A( z ) = ∑ Ai z i

weight enumerator of the code C

i =0

(7,4) Hamming code:

Example: Weight enumerator of the (n=7, k=4) binary Hamming code:

A0 = 1, A1 = A2 = 0, A3 = 7, A4 = 7, A5 = A6 = 0, A7 = 1

A(z) = 1 + 7 z3 + 7z4 + z7

Theorem: The weight enumerator of the binary Hamming code

of length n (= 2m – 1 ) is given by

1

n

A( z ) =

(1 + z ) n +

(1 + z ) ( n −1) / 2 (1 − z ) ( n +1) / 2

n +1

n +1

MacWilliams identities, automorphism group of a code

Lattice of a linear (n,k) code C = ρ-1(C), where ρ : Zn → (Z/2Z)n

0000000

1110000

1101000

1001100

1000011

0101010

0100101

0011001

1100110

1011010

1010101

0111100

0110011

0010111

0001111

1111111

weight 0

weight 3

weight 4

weight 7

E.g., Gosset lattice E8 corresponds to the extended Hamming code of length 8

10 /28

Göttingen 2012

www.zurich.ibm.com

3. Weight Enumerators and Error Rates

When using a linear (n,k) code C on the BSC(ε), what is the probability of an undetected error?

An error is undetected iff the received word is a codeword.

By linearity, one can assume that the allzero codeword was transmitted.

ε i (1 − ε ) n −i = prob. that the BSC transforms the allzero word into a fixed codeword of weight i

ε

) − 1

Prob of an undetected error Pu ( E ) = ∑ Aiε i (1 − ε ) n −i = (1 − ε ) n A(

1− ε

i =1

n

Remarks:

1 − P

1. This result can be extended to q-ary symmetric DMC with P(b | a ) = P

P /( q − 1)

q − 1

Pu ( E ) = (1 − P) n A(

) − 1

1− P

2.

if a = b

otherwise

The weight distribution of (n,k,d) RS-codes over GF(q) is given by

A0 = 1, A1 = A2 = …= Ad-1 = 0, and

l−d

n

l − 1 l − d − j

q

Al = (q − 1)∑ (−1) j

j =0

l

j

11 /28

for ℓ = d, d+1, …, n

Göttingen 2012

www.zurich.ibm.com

3. Bounded Distance Decoding

Let C be a linear (n,k,dmin) code over GF(q), t = (dmin -1)/2. Symmetric DMC as above w/ error prob P

u

Encoder

x = uG

x

codewords are selected

equally likely

(not needed)

symmetric

DMC

y

Decoder:

Find x^ such that

d(x^,y) ≤ t

x^

Encoder

Inverse

u^

failure

Block error prob PB = P{X^ ≠ X or failure}

Prob of correct decoding Pc = P{ X^ = X } = 1 - PB

n

Pc = ∑ P i (1 − P) n −i

i =0 i

t

Theorem:

x

Proof: Each pattern of s errors has prob ( P q − 1) (1 − P)

n

There are ways to select i errors locations;

i

s

each occurs with prob P i (1 − P) n −i

12 /28

n−s

x’

x’’

transmitted codeword

and decoding sphere S(x,t)

competing codewords x’ ≠ x

with decoding spheres S(x’,t)

Göttingen 2012

www.zurich.ibm.com

3. Bounded Distance Decoding: Applications

Block (error corr. mode)

Byte (error corr. mode)

Block (erasure mode, marg=2)

Byte (erasure mode, marg=2)

-5

10

C2 Parity

RS codes can be efficiently decoded by the

Berlekamp-Massey algorithm, which achieves

bounded distance decoding.

•

C1 is decoded first

If decoder fails it outputs erasure symbols

•

C2 is decoded second

Error Rate

One Row

C1 Parity

Data

One Col

LTO-tape recording devices use two Reed-Solomon (RS) codes over GF(28),

which are concatenated to form a product code:

• (240,230,d=11) RS C1-code on rows

C2-Performance: N2=96, t2=6

• (96,84,d=13) RS C2-code on columns

0

10

10-17-limit

-10

10

-15

10

-20

By taking erasures into account

performance improves substantially!

13 /28

10

-1

10

-2

10

-3

-4

10

Byte Error Probability

10

at input of C2 decoder

Göttingen 2012

www.zurich.ibm.com

4. Soft Decoding: AWGN Channel

Z

input X

x ∈ { ± 1}

⊕

AWGN

X

Y output

Y

AWGN

pY|X(y|x) = pZ(y-x)

Binary-input additive white Gaussian noise (AWGN) channel: ± 1 valued inputs and real-valued outputs

Y=X+Z

Z ~ N(0, σ2) zero-mean normal distribution with variance σ2

2

Bit-error rate (uncoded)

BER = Q(SNR)

1.8

pY|X=-1

1.6

pY|X=1

1.4

1.2

where

• SNR = Eb/N0 = 1/(2σ2)

∞

1

exp(− s 2 / 2)ds

• Q (t ) = ∫

2π

t

1

0.8

0.6

0.4

0.2

0

-4

-3

-2

-1

X = -1

14 /28

Göttingen 2012

0

1

2

3

4

X=1

www.zurich.ibm.com

4. Soft Decoding vs Hard Decoding

Soft channel outputs

Z

AWGN

input X

x ∈ { ± 1}

⊕

Y output

Hard decision channel BSC(ε = BER)

Z

AWGN

YHD output

yHD ∈ { ± 1}

Quantizer

Y

0

10

Toy code with n=9, k=2, dmin = 6

000000000

C= 111111000

000111111

111000111

PHD

BDD

-1

PHD

ML

10

PBP

Block Error Rate

-2

Different Decoding Algorithms

ML: max. likelihood decoding

BP: graph-based decoding with

belief propagation

HD-ML: Hard decision ML decoding

HD-BDD: Hard decision bounded

distance decoding

10

PML

Capacity

-3

10

-4

10

-5

10

-6

10

-7

10

Bounded distance decoding is far from optimum

15 /28

⊕

input X

x ∈ { ± 1}

-2

Göttingen 2012

0

2

4

6

Eb/N0 [dB]

8

10

12

14

www.zurich.ibm.com

4. Soft Decoding: Bit-Wise MAP Decoding

Codewords x of C are

selected uniformly at random

Z

Xi ∈ { ± 1}

AWGN

⊕

Yi

MAP-Decoder

w.r.t. Code C

Xi^(Y)

MAP (maximum a posteriori) decoding

xi^(y) = arg max xi∈{ ± 1} PXi | Y(xi|y)

xi^(y) = arg max xi∈{ ± 1} ∑~xi PX | Y(x|y)

(law of total prob)

xi^(y) = arg max xi∈{ ± 1} ∑~xi pY | X(y|x) PX(x)

(Bayes rule)

xi^(y) = arg max xi∈{ ± 1} ∑~xi ∏ ℓ pYℓ | Xℓ (yℓ|xℓ) 1{x∈C}

(uniform priors, memoryless channel)

indicator function

summation over all components of x except xi

16 /28

Göttingen 2012

www.zurich.ibm.com

5. Codes on Graphs

1

Example

Binary length-4 repetition code C = { 0 0 0 0, 1 1 1 1} can be characterized by H = 1

0

1{x∈C} = 1{x1+x2+x3+x4=0} 1{x1+x2=0} 1{x2+x3=0}

1

1

1

0

1

1

1

0

0

The code membership function decomposes into factors => factor graph of a code

variables

x1

x2

x3

x4

1

H = 1

0

1

1

1

0

0

1

1

0

1

variable

nodes V

x1

checks

f1

x2

f2

f3

x3

H is related to the

adjacency matrix of Γ by

0

AΓ = T

H

17 /28

H

0

Göttingen 2012

x4

check

nodes F

f1

f2

f3

Bipartite graph Γ = ΓH

“Factor graph” or “Tanner graph”

www.zurich.ibm.com

5. Codes on Graphs and Message Passing

Example (cont.ed)

The variable nodes are initialized with the

channel output values p(yi|xi)

Message passing rules (“Belief Propagation”)

Initialization

p(y1|x1)

p(y2|x2)

p(y3|x3)

p(y4|x4)

Motivation for message passing rules

• “Local” MAP decoding

• If graph is has no cycles,

global MAP decoding is achieved

18 /28

Göttingen 2012

www.zurich.ibm.com

5. Codes on Graphs : LDPC Codes

0

1

Array-based LDPC codes

Let q be a prime, j ≤ q, and let P denote the q×q cyclic permutation matrix P = 0

L

1

1

1

1

1

M

1

0

P

P2

P3

L

P q −1

H = 1

M

1

P

2

P j-1

2 2

(P )

2 3

(P )

L

( P j-1 ) 2

( P j-1 ) 3

L

(P )

M

( P j-1 ) q −1

2 q −1

• fixed degree ℓ constellation at variable nodes

• fixed degree r constellation at check nodes

There are (n · ℓ) ! such codes (graphs)

19 /28

Göttingen 2012

L

0

0

L

0

1

0

O

0

L

1

1

0

0

M

0

H has column weight j, row weight q

and length n = q2. The shortest cycle

in the graph ΓH has length 6.

Regular LDPC codes

Number of edges: n · ℓ = r · #{check nodes}

0

.

.

.

P

E

R

M

U

T

A

T

I

O

N

.

.

.

www.zurich.ibm.com

5. Codes on Graphs: Performance of LDPC Codes

Example (n=2209, k=2024) array-based LDPC code

Low-density parity check (LDPC) codes

introduced by Gallager (1963)

row weight = 47

column weight = 4

Rate-2024/2209 (j=4,q=47) array code on AWGN channel

0

10

Very good performance under message

passing (BP), (MacKay&Neal 1995)

PBlock

Pbit

Capacity (BLER)

Capacity (BER)

-2

Depending on the degree structure of the

nodes in ΓH, there is a threshold T such

that message passing decodes

successfully if SNR > T and n →∞

N.B. (i) T > SNR(Capacity)

(ii) Can design ΓH such that

gap to capacity < 0.0045 dB

Error rate

Sparse H matrix

-> few cycles on ΓH

-> good performance of message passing

10

-4

10

-6

10

-8

10

2

2.5

3

3.5

4

4.5

Eb/N0 [dB]

5

5.5

6

6.5

T

(Chung, Forney, Richardson, Urbanke, IEEE Comm. Letters, 2001)

20 /28

Göttingen 2012

range of successful

density evolution

www.zurich.ibm.com

5. Codes on Graphs: Finite Length Performance

Rate-2024/2209 (j=4,q=47) array code on AWGN channel

0

10

“Short” LDPC codes, typically have an

error floor region due to cycles in the graph

PBlock

Pbit

Capacity (BLER)

Capacity (BER)

-2

Error rate

10

How can the performance be

assessed in the error floor region?

-4

10

-6

10

Data points obtained with dedicated FPGA

hardware decoder

-8

10

2

[L. Dolecek, Z. Zhang, V. Anantharam, M.J. Wainwright,

B. Nikolic, IEEE Trans. Information Theory, 2010]

2.5

3

3.5

4

4.5

Eb/N0 [dB]

5

5.5

T

range of successful

density evolution

21 /28

6

6.5

Error floor region

Göttingen 2012

www.zurich.ibm.com

6. Stopping Sets

1-ε

Analyze the behavior of message passing, on the binary erasure channel (BEC)

0

X

Motivation

Consider (short) erasure patterns, i.e., subsets D of the variable nodes V

1

for which the message passing decoder fails

ε

ε

0

Y

∆ erasure

symbol

1-ε

1

Def.: A stopping set is a subset D ⊆ V such that all neighbors of D are connected to D at least twice

1

Example: Binary n=4 repetition code with parity check matrix H = 1

Decoder fails if x1= x2 = x3= ∆ (and x4 = 0 or 1)

0

variable nodes V

message

1

D=

1

1

1

0

1

1

1

0

0

µ= ∆

There are 3 stopping sets

2

• D=∅

neighbors of D

3

• D = {1, 2, 3}

4

• D = {1, 2, 3, 4} = codeword

Decoding failure probability

erasure prob ε

PF = ∑

PF = ε3(1-ε) + (1-ε)4

ε |D| (1 – ε) (n – |D|)

D : nonempty

stopping set

22 /28

Göttingen 2012

www.zurich.ibm.com

6. Finding Stopping Sets

Minimum distance problem

Instance:

A (random) binary mxn matrix H and an integer w > 0

Question:

Is there a nonzero vector x ∈ GF(2)n of weight ≤ w such that x HT = 0?

Theorem (Vardy 1997)

Corollary

The minimum distance problem is NP-complete

Finding the minimum distance or the weight distribution of a linear code is NP-hard

Theorem (Krishnan&Shankar 2006) Finding the cardinality of the smallest nonempty stopping set

is NP-hard

Average ensemble performance (Di et al., IT-48, 2002)

For an LDPC code ensemble of length n with fixed variable and check node degree distributions:

probability that s chosen variable nodes contain a stopping set = B(s)/T(s)

n s

n− s B( s)

Average failure probability E[ PF ] = ∑ ε (1 − ε )

T (s)

s =0 s

n

23 /28

Göttingen 2012

www.zurich.ibm.com

6. Error Rate Analysis of Array-Based LDPC Codes

Example of a (3,3) fully absorbing set D

Motivation

Consider subsets D of all variable nodes V which

remain erroneous under the bit-flipping algorithm

For D ⊆ V decompose the set of neighboring

check nodes N(D) into those with unsatisfied

checks O(D) and satisfied checks E(D)

E(D): neighboring checks

N(D): neighboring checks

Def.: An (a,b) absorbing set D ⊆ V is characterized by

• | D | = a and | O(D) | = b

• every node in D has fewer neighbors in O(D) than in E(D)

F: all checks

Def.: An (a,b) fully absorbing set is an absorbing set and, in addition,

all nodes in V \ D have more neighbors in F \ O(D) than in O(D).

24 /28

Göttingen 2012

www.zurich.ibm.com

6. Error Rate Analysis of Array-Based LDPC Codes

Theorem

The minimum distance of column

weight 3 array LDPC codes is 6 with

multiplicity (q – 1)q2.

Error floor performance of the (2209,2070) array LDPC code

10

Union bound for rate-2070/2209 (j=3,q=47) array code with AWGN

0

P

Absorbing set bound on block error prob.:

Let D be a (3,3) absorbing set, then

10

10

Block Error Rate

Theorem [DZAWN, IT-2010]

For the family of column weight 3 and row

weight q array codes, the minimal absorbing

and minimal fully absorbing sets are of size

(3,3) and (4,2), respectively.

Their numbers grow as (q – 1)q2.

10

10

10

10

10

PB ≥ (q – 1) q2 P{Y∈ absorbing region of D}

10

number of (3,3)

absorbing sets

25 /28

-1

Block

Union bound

AS Bound

-2

Capacity

-3

-4

-5

-6

-7

-8

3

“easily” estimated by simulation

Göttingen 2012

3.5

4

4.5

5

5.5

6

6.5

7

E /N [dB]

b

0

www.zurich.ibm.com

6. Error Rate Analysis of Array-Based LDPC Codes

Theorem [DZAWN, IT-2010]

For the family of column weight 4 and row weight

q>19 array codes, the minimal absorbing and

minimal fully absorbing sets are of size (6,4).

Example

Error floor performance of the (2209,2024) array LDPC code

Their numbers grow as q3 (up to a constant factor).

Ref.: [L. Dolecek, Z. Zhang, V. Anantharam, M.J. Wainwright,

B. Nikolic, IEEE JSAC vol 27(6), 2009]

26 /28

Göttingen 2012

www.zurich.ibm.com

Summary

In storage applications, Hamming codes and Reed-Solomon codes are used (delay constraints).

These codes are optimal with respect to the Hamming bound and the Singleton bound, i.e.,

in terms of algebraic coding criteria.

The weight enumerator of these codes is known

Analytical evaluation of undetected error probability

error rate performance of bounded distance decoding

Bounded distance decoding is not optimum for the additive white Gaussian noise channel but

maximum-likelihood decoding is too complex.

Iterative BP decoding of LDPC codes achieves almost capacity at “low” complexity.

Error floor performance of “short” LDPC codes is an issue

Analytical results only for a small class of codes (e.g., array-based LDPC codes)

Open problems:

characterization of stopping sets/absorbing sets for special classes of LDPC codes

27 /28

Göttingen 2012

www.zurich.ibm.com

References

Algebraic Coding and Information Theory

Friedrich Hirzebruch, “Codierungstheorie und ihre Beziehung zu Geometrie und Zahlentheorie,”

Rheinisch-Westfälische Akademie der Wissenschaften, Vorträge N 370, Westdeutscher Verlag, 1989

J.H. van Lint, Introduction to Coding Theory,

GTM vol. 86, Springer, 1982

Robert G. Gallager, Information Theory and Reliable Communication,

John Wiley & Sons, N.Y., 1968

A. Vardy, “The Intractability of Computing the Minimum Distance of a Code,” IEEE Trans. Information Theory,

vol. 43(6), pp. 1757-1766, Nov. 1997

Codes on Graphs

T. Richardson & R . Urbanke, Modern Coding Theory,

Cambridge Univ. Press, N.Y., 2008

L. Dolecek, Z. Zhang, V. Anantharam, M.J. Wainwright, B. Nikolic, “Analysis of Absorbing Sets and Fully

Absorbing Sets of Array-Based LDPC Codes,” IEEE Trans. Information Theory, vol. 56(1), pp. 181-201,

Jan. 2010

28 /28

Göttingen 2012

www.zurich.ibm.com

Back-Up

29 /28

Göttingen 2012

www.zurich.ibm.com

Bounded Distance Decoding of Toy Code

0

10

Although suboptimal, the performance

of bounded distance decoding can be

easily evaluated.

P HD

BDD

P HD

ML

HD BDD

-5

Block Error Rate

10

-10

10

-15

10

2

4

6

8

10

E /N [dB]

b

30 /28

Göttingen 2012

12

14

16

0

www.zurich.ibm.com

Soft Decoding Analysis of Toy Code

0

10

BP

The toy code is an (n=9, k=2) array-based LDPC

code with q = 3 = j

PF

ML

PF

UB-ML

AS Bound

The BP performance is determined by the 18

absorbing sets of type (3,3)

The ML performance is determined by the 3

weight-6 codewords

-5

10

Block Error Rate

-10

10

-15

10

31 /28

Göttingen 2012

-2

0

2

4

6

8

Eb/N0 [dB]

10

12

14

www.zurich.ibm.com

Evolution of BP for Toy Code: Absorbing Sets

Input: noisy version of absorbing set { 3, 4, 9}

Evolution of output P(xi = 0) under BP algorithm

P(x1 = 0) P(x2 = 0) P(x3 = 0) P(x4 = 0) P(x5 = 0) P(x6 = 0) P(x7 = 0) P(x8 = 0) P(x9 = 0)

0.9991 0.9994 0.0008 0.0010 0.9992 0.9988 0.9992 0.9994 0.0008

0.6383 1.0000 0.0000 0.0000 0.7363 1.0000 0.6755 1.0000 0.0000

1.0000 0.9221 0.0000 0.0000 1.0000 0.8657 1.0000 0.9077 0.0000

0.0000 0.7329 1.0000 1.0000 0.0000 0.4720 0.0000 0.6950 1.0000

1.0000 0.9996 0.0000 0.0000 1.0000 0.9979 1.0000 0.9995 0.0000

0.0132 1.0000 1.0000 1.0000 0.0342 1.0000 0.0095 1.0000 1.0000

1.0000 0.0000 1.0000 1.0000 1.0000 0.0000 1.0000 0.0000 1.0000

1.0000 1.0000 0.0000 0.0000 1.0000 1.0000 1.0000 1.0000 0.0000

0.0000 1.0000 1.0000 1.0000 0.0000 1.0000 0.0000 1.0000 1.0000

1.0000 0.0000 1.0000 1.0000 1.0000 0.0000 1.0000 0.0000 1.0000

1.0000 1.0000 0.0001 0.0000 1.0000 1.0000 1.0000 1.0000 0.0000

Output alternates between the three absorbing sets { 3, 4, 9}, {1, 5, 7}, {2, 6, 8}

Other possible behavior:

• Output stays on the same absorbing set

• Output converges to a (possibly wrong) codeword

32 /28

Göttingen 2012

www.zurich.ibm.com