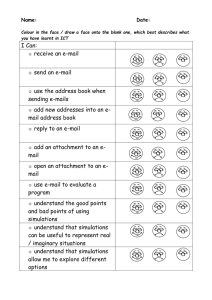

Investigating the Effectiveness of Computer
