Estimating statistical significance with reverse

advertisement

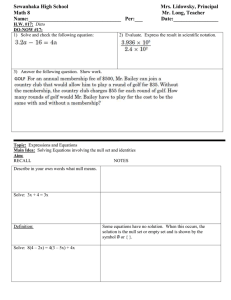

Estimating statistical significance with reverse-sequence null models Why it works and why it fails Kevin Karplus, Rachel Karchin, Richard Hughey karplus@soe.ucsc.edu Center for Biomolecular Science and Engineering University of California, Santa Cruz E-values for reverse-sequence null – p.1/32 Outline of Talk What is a null model? Why use the reverse-sequence null? Two approaches to statistical significance. What distribution do we expect for scores? Fitting the distribution. Does calibrating the E-values help? When do reverse-sequence null models fail? E-values for reverse-sequence null – p.2/32 Scoring HMMs and Bayes Rule The model M is a computable function that assigns a probability Prob (A | M ) to each string A. When given a string A, we want to know how likely the model is. That is, we want to compute something like Prob (M | A). Bayes Rule: Prob(M ) . Prob M A = Prob A M Prob(A) Problem: Prob(A) and Prob(M ) are inherently unknowable. E-values for reverse-sequence null – p.3/32 Null models Standard solution: ask how much more likely M is than some null hypothesis (represented by a null model ). Prob (M | A) Prob (A | M ) Prob(M ) = . Prob (N | A) Prob (A | N ) Prob(N ) Prob(M ) is the prior odds ratio, and represents our belief in Prob(N ) the likelihood of the model before seeing any data. Prob M |A is the posterior odds ratio, and represents our Prob N |A belief in the likelihood of the model after seeing the data. E-values for reverse-sequence null – p.4/32 Standard Null Model Null model is an i.i.d (independent, identically distributed) model. (A) len Y Prob(Ai ) . Prob A N, len (A) = i=1 Prob A N = Prob(string of length len (A)) len (A) Y Prob(Ai ) . i=1 The length modeling is often omitted, but one must be careful then to normalize the probabilities correctly. E-values for reverse-sequence null – p.5/32 Problems with standard null When using the standard null model, certain sequences and HMMs have anomalous behavior. Many of the problems are due to unusual composition—a large number of some usually rare amino acid. For example, metallothionein, with 24 cysteines in only 61 total amino acids, scores well on any model with multiple highly conserved cysteines. E-values for reverse-sequence null – p.6/32 Reversed model for null We avoid composition bias (and several other problems) by using a reversed model M r as the null model. The probability of a sequence in M r is exactly the same as the probability of the reversal of the sequence given M. If we assume that M and M r have equal prior likelihood, then Prob (S | M ) Prob (M | S) = . r r Prob (M | S) Prob (S | M ) This method corrects for composition biases, length biases, and several subtler biases. E-values for reverse-sequence null – p.7/32 Composition as source of error A cysteine-rich protein, such as metallothionein, can match any HMM that has several highly-conserved cysteines, even if they have quite different structures: cost in nats model − model − HMM sequence standard null reversed-model 1kst 4mt2 -21.15 0.01 -15.04 -0.93 1kst 1tabI -15.14 -0.10 4mt2 1kst -21.44 -1.44 4mt2 1tabI -17.79 -7.72 1tabI 1kst -19.63 -1.79 1tabI 4mt2 E-values for reverse-sequence null – p.8/32 Composition examples Metallothionein Isoform II (4mt2) Kistrin (1kst) E-values for reverse-sequence null – p.9/32 Composition examples Kistrin (1kst) Trypsin-binding domain of Bowman-Birk Inhibitor (1tabI) E-values for reverse-sequence null – p.10/32 Helix examples Tropomyosin (2tmaA) Colicin Ia (1cii) Flavodoxin mutant (1vsgA) E-values for reverse-sequence null – p.11/32 Helix examples Apolipophorin III (1aep) Apolipoprotein A-I (1av1A) E-values for reverse-sequence null – p.12/32 Fold Recognition Performance Fraction of True Positives found +=Same fold 0.35 0.3 1-track AA rev-null 1-track AA geo-null 0.25 0.2 0.15 0.1 0.05 0.001 0.01 0.1 1 10 100 False Positives/Query E-values for reverse-sequence null – p.13/32 What is Statistical Significance? The statistical significance of a hit, P1 , is the probability of getting a score as good as the hit “by chance,” when scoring a single “random” sequence. When searching a database of N sequences, the significance is best reported as an E-value—the expected number of sequences that would score that well by chance: E = P1 N . Some people prefer the p-value: PN = 1 − (1 − P1 )N , For large N and small E , PN ≈ 1 − e−E ≈ E . I prefer E-values, because our best scores are often not significant, and it is easier to distinguish between E-values of 10, 100, and 1000 than between p-values of 0.999955, 1.0 − 4E-44, and 1.0 − 5E-435 E-values for reverse-sequence null – p.14/32 Approaches to Statistical Significance (Markov’s inequality) For any scoring scheme that uses Prob (seq | M1 ) ln Prob (seq | M2 ) the probability of a score better than T is less than e−T for sequences distributed according to M2 . This method is independent of the actual probability distributions. (Classical parameter fitting) If the “random” sequences are not drawn from the distribution M2 , but from some other distribution, then we can try to fit some parameterized family of distributions to scores from a random sample, and use the parameters to compute P1 and E values for scores of real sequences. E-values for reverse-sequence null – p.15/32 Our Assumptions The sequence and reversed sequence come from the same underlying distribution. Bad assumption 1: The scores with a standard null model are distributed according to an extreme-value distribution: P ln Prob seq M > T ≈ Gk,λ (T ) = 1 − exp(−keλT ) . Bad assumption 2: The scores with the model and the reverse-model are independent of each other. Bad assumption 3: The scores using a reverse-sequence null model are distributed according to a sigmoidal function: Result: P (score > T ) = (1 − eλT )−1 . E-values for reverse-sequence null – p.16/32 Derivation of sigmoidal distribution (Derivation for costs, not scores, so more negative is better.) Z P (cost < T ) = = = ∞ −∞ Z ∞ −∞ Z ∞ −∞ ∞ Z = −∞ Z P (cM = x) ∞ x−T P (cM 0 = y)dydx P (cM = x)P (cM 0 > x − T )dx kλ exp(−keλx )eλx exp(−keλ(x−T ) )dx kλeλx exp(−k(1 + e−λT )eλx )dx E-values for reverse-sequence null – p.17/32 Derivation of sigmoid (cont.) If we introduce a temporary variable to simplify the formulas: KT = k(1 + exp(−λT )), then Z ∞ (1 + e−λT )−1 KT λeλx exp(−KT eλx )dx −∞ Z ∞ = (1 + e−λT )−1 KT λeλx exp(−KT eλx )dx −∞ Z ∞ = (1 + e−λT )−1 gKT ,λ (x)dx P (cost < T ) = −∞ = (1 + e−λT )−1 E-values for reverse-sequence null – p.18/32 Fitting λ The λ parameter simply scales the scores (or costs) before the sigmoidal distribution, so λ can be set by matching the observed variance to the theoretically expected variance. The mean is theoretically (and experimentally) zero. The variance is easily computed, though derivation is messy: E(c2 ) = (π 2 /3)λ−2 . λ is easily fit by matching the variance: v u N −1 u X λ ≈ π tN/(3 c2i ) . i=0 E-values for reverse-sequence null – p.19/32 Two-parameter family We made three dangerous assumptions: reversibility, extreme-value, and independence. To give ourselves some room to compensate for deviations from the extreme-value assumption, we can add another parameter to the family. We can replace −λT with any strictly decreasing odd function. Somewhat arbitrarily, we chose − sign(T )|λT |τ so that we could match a “stretched exponential” tail. E-values for reverse-sequence null – p.20/32 Fitting a two-parameter family For two-parameter symmetric distribution, we can fit using 2nd and 4th moments: E(c2 ) = λ−2/τ K2/τ E(c4 ) = λ−4/τ K4/τ where Kx is a constant: Z ∞ Kx = y x (1 + ey )−1 (1 + e−y )−1 dy −∞ ∞ X (−1)k /k x . = −Γ(x + 1) k=1 E-values for reverse-sequence null – p.21/32 Fitting a two-parameter family (cont.) 2 The ratio E(c4 )/(E(c2 ))2 = K4/τ /K2/tau is independent of λ and monotonic in τ , so we can fit τ by binary search. Once τ is chosen we can fit λ using E(c2 ) = λ−2/τ K2/τ . E-values for reverse-sequence null – p.22/32 Student’s t-distribution On the advice of statistician David Draper, we tried maximum-likelihood fits of Student’s t-distribution to our heavy-tailed symmetric data. We couldn’t do moment matching, because the degrees of freedom parameter for the best fits turned out to be less than 4, where the 4th moment of Student’s t is infinite. The maximum-likelihood fit of Student’s t seemed to produce too heavy a tail for our data. We plan to investigate other heavy-tailed distributions. E-values for reverse-sequence null – p.23/32 What is single-track HMM looking for? nostruct-align/3chy.t2k w0.5 3 2 1 VI VVIDD L E X XX L I A LL V L I E ND S GE N S L V F M XX X G V D N A D S NG EAL D QL XXX X X XX XXXXXX X ADK EL KFL V VDDFSTMRRIVR NLLKELGF NNVEE AE DG V DA L N KL Q A G G Y XX T X R X X V XX L P I V XXX L A X 1 T L I S E E V H L P E X L K L V E Y X X X E X L E X X X A XX E E E L X K E 50 A 10 L 40 XA X R X 30 S L 20 X G X X H 3 2 L D P G TS M I VI D DL VL L N VI L E LV L IV E I XXXXXXX XXXXXXXXXX XXX XXXXXXXX XX XX XXXX GFV IS DWN M PNMDGLELLKTI RADGAMSA LPVLM VT AE A KK E N II A A A Q A A IAFLSL I GV M S G D I VEG A SS NN DLL L F VVAA L PLA G MII E VR V L M R L T X A P X X H T E T A V V D X E E L X 80 X 70 60 51 R E E AL D A D XX X X R X 100 S G N EA 90 1 H 3 2 L G D S E N A XX K I D YV GF KP L RD S G Q EE A XXXXXXXX X M FS E L I V F M L X R I LR V L XX R XXXXX E D X A K X LR XXX L A X G X E T 120 110 GAS GY VVK P FTAATLEEKLNK IFEKLGM 101 1 H E-values for reverse-sequence null – p.24/32 Example for single-track HMM Database calibration for 3chy.t2k-w0.5 HMM 1000 E-value 100 10 1 Unfitted sigmoidal Fitted Student-t desired fit Fitted 2-parameter sigmoidal Fitted 1-parameter sigmoidal 0.1 0.01 1 10 100 Sequence Rank 1000 E-values for reverse-sequence null – p.25/32 What is second track looking for? nostruct-align/3chy.t2k EBGHTL 3 2 1 C T EEEE HHHHHHHHHHHHH C EEEE HHHHHHHHH E TTC E CTC CC T XXXE C TX X X X E T CTC TT XC X X X X X X X X X X X CE H CT C TC G HT CTEX X X X X X T C ET CT T X X X H C C TT C T TC T ETCXCC XCEXC X X X X X X T H XG X X X X X X X X X X X X T E H E 50 40 30 20 1 10 ADK EL KFLV V D DF STMRR I VRN LLK EL G FNN V EE A ED GV D AL NK LQ A G G Y H 3 2 1 EEEEE E C T HHHHHHHHH TTT H T C CCCC C T CET T C H X X X X X TTT T EEEEE HHHHHHHHHH E TC H GC E TCTHC GXCTC CXX CTXX XX EG EX XTTX X XXXCX X X X X X X X X X C X T C C CTCT CTX H XTEXXX X X X X X X XX C X X X X X X X E T H T E T 100 90 80 70 60 51 GFV IS DWNM P N MD GLELL K TIR ADG AM S ALP V LM V TA EA K KE NI IA A A Q A H 3 2 CC HHHHHHHHHHHHH C CH C H C EEEC TTCT CCH GAS GY VVKP F T AA TLEEK L NKI FEK LG M CCC TXX GG T XTEEE X X T X X X X X 110 E X E T XTTTX X X X X X X X X X X X X 120 T TC TXX X 101 1 H E-values for reverse-sequence null – p.26/32 Example for two-track HMM Database calibration for 3chy.t2k-100-30-ebghtl HMM 1000 E-value 100 10 1 Fitted Student-t desired fit Unfitted sigmoidal Fitted 2-parameter sigmoidal Fitted 1-parameter sigmoidal 0.1 0.01 1 10 100 Sequence Rank 1000 E-values for reverse-sequence null – p.27/32 Fold recognition results +=Same fold 0.22 Fraction of True Positives found 0.2 0.18 0.16 AA no calib AA db calib AA random calib AA-STRIDE no calib AA-STRIDE db calib AA-STRIDE random calib 0.14 0.12 0.1 0.08 0.06 0.04 0.02 0.001 0.01 0.1 False Positives/Query 1 10 E-values for reverse-sequence null – p.28/32 What went wrong? Why did random calibrated fold recognition fail for 2-track HMMs? “Random” secondary structure sequences (i.i.d. model) are not representative of real sequences. Fixes: Better secondary structure decoy generator Use real database, but avoid problems with contamination by true positives by taking only costs > 0 to get estimate of E(cost2 ) and E(cost4 ). E-values for reverse-sequence null – p.29/32 Fold recognition results +=Same fold 0.25 Dunbrack t2k+0.3*EBGHTL db calib Dunbrack t2k+0.3*EBGHTL nocalib Dunbrack t2k+0.3*PB nocalib Dunbrack t2k+0.3*PB simple Dunbrack t2k+0.3*PB db calib Fraction of True Positives found 0.2 0.15 0.1 0.05 0 0.001 0.01 0.1 False Positives/Query 1 10 E-values for reverse-sequence null – p.30/32 What went wrong with Protein Blocks? The HMMs using de Brevern’s protein blocks did much worse after calibration. Why? The protein blocks alphabet strongly violates reversibility assumption. Encoding cost in bits for secondary structure strings: 0-order 1st-order reverse-forward alphabet amino acid 4.1896 4.1759 0.0153 stride 2.3330 1.0455 0.0042 dssp 2.5494 1.3387 0.0590 pb 3.3935 1.4876 3.0551 E-values for reverse-sequence null – p.31/32 Web sites UCSC bioinformatics info: http://www.soe.ucsc.edu/research/compbio/ SAM tool suite info: http://www.soe.ucsc.edu/research/compbio/sam.html H MM servers: http://www.soe.ucsc.edu/research/compbio/HMM-apps/ SAM-T02 prediction server: http://www.soe.ucsc.edu/research/compbio/ HMM-apps/T02-query.html These slides: http://www.soe.ucsc.edu/˜karplus/papers/e-value-germany02.pdf E-values for reverse-sequence null – p.32/32