
A GRAPHICAL MODEL FORMULATION OF THE DNA BASE-CALLING PROBLEM

Lucio Andrade-Cetto and Elias S. Manolakos

Communications and Digital Signal Processing (CDSP)

Center for Research and Graduate Studies,

Electrical and Computer Engineering Department,

Northeastern University, Boston MA 02115.

landrade(elias)@ece.neu.edu

0-7803-9518-2/05/$20.00 ©2005 IEEE

ABSTRACT

A First Order Variable Dependence (FOVD) probabilistic graphical model is introduced to capture the complex inter-event dependencies that are present in DNA sequencing data. In this framework, DNA base-calling is addressed as a parameter estimation problem using maximum likelihood methods. The FOVD model accounts for dependencies between neighboring alleles and statistically characterizes the size of signal peaks. Our experimental results suggest that the resulting unsupervised classification base-calling algorithms (i) achieve accuracy that exceeds, on average, that of state-of-the-art base-callers, and (ii) work well for a variety of data set types without requiring costly recalibration.

1. INTRODUCTION

Automated DNA sequencing relies on the ability to synthesize DNA strands ending with a different base and to tag them differently, as well as on the ability to separate the tagged DNA molecules into subsets ordered according to their length. This separation is accomplished by electrophoresis (slab gel or capillary), giving rise to the so-called DNA electropherogram (or chromatogram). An electropherogram consists of four signal "channels", one for each type of terminal DNA base, namely A, C, G, or T. Each channel corresponds to a train of Gaussian-like peaks. The size of a peak (the peak "strength") is proportional to the number of members in the sub-population of DNA fragments with a given length. The sequence of bases in the original DNA molecule (template) can be inferred by considering the time ordering of the peaks. This ordering corresponds to the order of arrival (at the detection point) of the tagged sub-populations of DNA fragments of progressively increasing length. Due to space limitations we omit a more detailed description of the signal pre-processing aspects of DNA sequencing. Article [1] reviews the characteristics of a typical DNA chromatogram that make base-calling a challenging task. Furthermore, in [2, 3, 4] we study extensively the typical anomalies in the raw data trace and present algorithms for its enhancement, a prerequisite for accurate base-calling.

In [5] we presented a robust method based on NNLS to extract segments (or events) of the chromatogram (that are not always peaks) which may represent a base symbol. The underlying base sequence can be inferred only after proper classification of the extracted events. In this paper we introduce a new probabilistic graphical model and set the framework for a fully statistical, non-heuristic, end-to-end base-calling strategy.

2. THE FOVD MODEL

To faithfully capture the physical process that produces each base symbol of the DNA chromatogram, we model the complete sequence of events as interacting random variables, i.e. we do not assume any statistical independence between events (as we did in [1]). The key idea in this new formulation is that the joint distribution associated with the complete probabilistic inference problem can be decomposed into locally interacting factors. By taking advantage of this probabilistic structure we can design tractable inference algorithms while still avoiding the unrealistic assumption of statistical independence between the events. We end up expressing the joint distribution as a product of factors, where each factor depends on a subset of the random variables. Moreover, we assume a time-invariant memoryless process, which leads to a homogeneous structure, where the factor distributions are the same for all random samples.

Let $z_i = [\, s_i \; t_i \; x_i \; y_i \,]$ be the complete random vector representing the $i$-th event, where $s_i \in \{A, C, G, T\}$ is the channel id, $t_i$ is its time (location), $x_i$ is a measurable feature of the event (e.g. in [5] $x$ are the coefficients after applying NNLS, and in [1] $x = [\text{strength} \;\; \text{width}]$ for the extracted signal patterns), and $y_i \in \{0, 1\}$ is an indicator variable which denotes whether the event contributes a base that appears in the final sequence; in our case it is a hidden variable. Consider $Z = \{z_1, \ldots, z_N\}$ to be the complete experimental data extracted from the chromatogram. We start by considering all the possible dependencies between events; therefore, the complete joint pdf can be factored using the Bayes chain rule as:

$$P(Z) = \left[ \prod_{i=2}^{N} P(z_i \mid z_1, z_2, \ldots, z_{i-1}) \right] P(z_1). \tag{1}$$

We now consider that the events are time ordered. To define

the subset ancestors for the i-th event, we first define the index

subset selector M(i) as:

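$$\mathcal{M}(i) = \{1, 2, \ldots, i-1\} \qquad \forall\, i \in \{1, \ldots, N\} \tag{2}$$

where $\mathcal{M}(1) = \emptyset$. Now, the ancestral subsets of events are:

$$Z_{\mathcal{M}(i)} = \{z_1, z_2, \ldots, z_{i-1}\} \qquad \forall\, i \in \{1, \ldots, N\} \tag{3}$$

where $Z_{\mathcal{M}(1)} = \emptyset$, $Z_{\mathcal{M}(2)} = \{z_1\}$, $Z_{\mathcal{M}(3)} = \{z_1, z_2\}$, and so on.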

Using the ancestral ordering notation we can rewrite the complete joint pdf as:

$$P(Z) = \prod_{i=1}^{N} P\left( z_i \mid Z_{\mathcal{M}(i)} \right). \tag{4}$$

Unfortunately, although expression (4) is simple, estimation of its factors is infeasible if the number of events $N$ is large, due to the complex structure of the inter-variable dependencies. Note that in a typical DNA chromatogram of 1000 bp we may get $N \approx 1500$ events. However, if the scope of the dependencies is restricted, different interesting families of models can be derived from this general model. We follow this line of exposition to first review the available possibilities and then identify the one that we think has the most merit and will be pursued further.

For example, if we reduce the ancestral subset to just the last event ($Z_{\mathcal{M}(i)} = \{z_{i-1}\}$), we get a first-order Markov chain. Furthermore, since we observe only part of the experimental data ($t$, $s$, $x$) while the indicator ($y$) is hidden, this is a first-order Hidden Markov Model (HMM). However, it is not a typical HMM: although the domain of the hidden variable is discrete (finite-alphabet HMM), the transition probabilities depend on the hidden variable as well as on the observable data. If we go one step further and remove the dependency between the observable data of contiguous events, we finally obtain an HMM with a discrete transition probability matrix [6]. However, inter-event dependencies can occur between any two close events that represent a base symbol; therefore, if a spurious event lies between them, such an interaction cannot be modelled with an HMM.

A second reduction case is found when the dependency graph is acyclic. Under this assumption, conditional independence can be claimed between ancestor and descendant random variables, the ancestor subsets are greatly reduced, and the estimation problem becomes tractable. This type of reduction leads to Bayesian Networks (or Bayesian Probabilistic Graphs) [7]. Selecting a node that could separate two branches of the chromatogram events would mean imposing an indicator variable on such an event, leading to the incompleteness of the statistical model. Some extensions of Bayesian Network theory allow probability inference to propagate even through hidden elements of the random variables; however, the developed theory is oriented towards problems with varying factor distributions (i.e. there is no homogeneity) and, thus, with a small number ($N$) of interacting random variables.

Finally, Factor Graph theory [8] considers a more general case, where the dependencies among variables could also be cyclic (non-factorial recognition networks), i.e. when at least one random variable in $Z$ is not dependency-separated from at least one other variable by the visible variables. If the dependency structure is known, a model for the unobserved hidden variables can reasonably be obtained using methods such as Ancestral Simulation [9], Variational Inference [10] or Helmholtz Machines [11, 12].

Let us now return to the complete joint pdf (4) and incorporate our prior knowledge about the model to simplify it. In DNA chromatograms we know that the expected distance between contiguous base symbols (inter-base distance) should remain rather constant. Moreover, the absence of a base-call within a large time interval might indicate a base-call error (under-call or deletion), and the over-congestion of base-calls in a small time interval might also indicate a base-call error (over-call or insertion). Unfortunately, we cannot incorporate this knowledge directly into the model, because inter-base distance is not the same as inter-event distance (some events represent non-base symbols); otherwise our model could easily be described by an HMM. When we want to establish a dependence model of a given event $z_i$ on its ancestors $Z_{\mathcal{M}(i)}$, we only want to relate it to the latest preceding event (to its left) which generated a true base symbol, in order to

statistically test the distance between them. It makes no sense to

evaluate the dependence to any other preceding base calls and to

z1

z2

z3

z4

z5

z6

z7

z8

z9

z10

z11

z12

z13

z14

z1

z2

z3

z4

z5

z6

z7

z8

z9

z10

z11

z12

z13

z14

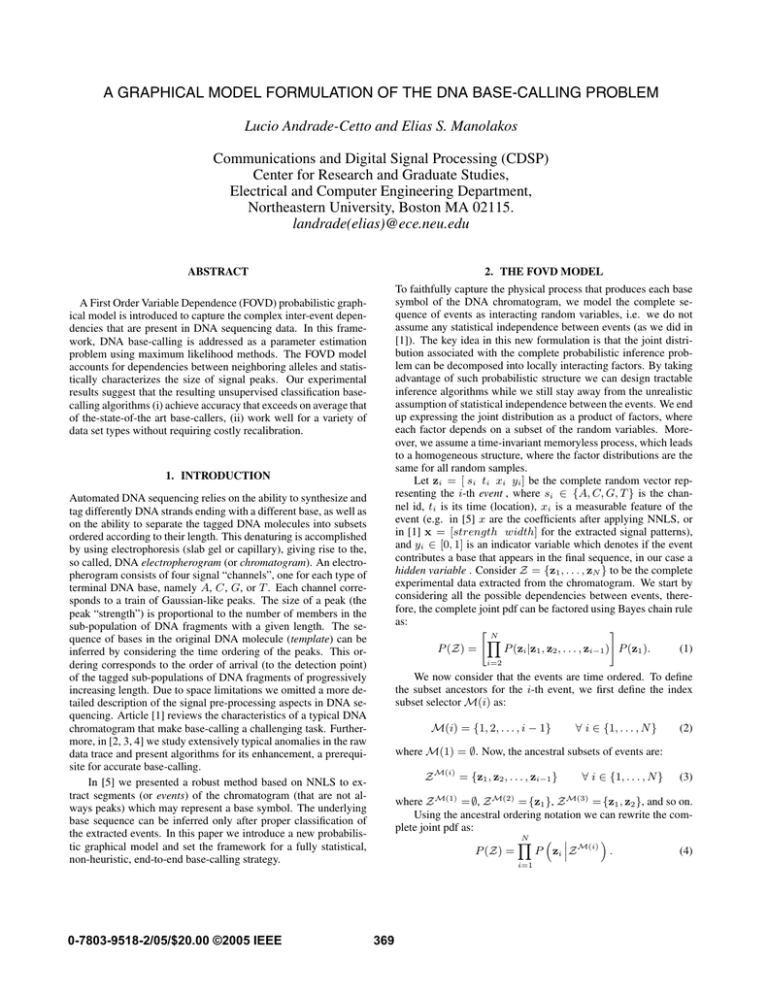

Fig. 1. Typical DNA chromatograms where the dependence structure

is marked with arcs. For the same chromatogram there may be different structures depending on the value of the hidden variable: In the upper panel {yk } = {0, 1, 1, 0, 1, 0, 1, 1, 0, 1, 0, 0, 1, 0} and in the lower

panel {yk } = {0, 1, 1, 0, 1, 1, 1, 1, 0, 1, 0, 0, 1, 0}. By overlapping both

dependence graphs an undirected cyclic path appears between events z5 ,

z6 , and z7 .

spurious or noise generated peaks.

The last assumption is the fundamental characteristic of the model we are proposing: we have a homogeneous model (time-invariant and memoryless) with a First-Order Variable Dependence structure (called the FOVD model). We now introduce the notation to identify the specific ancestor event $z_l$ on which a given event $z_i$ depends:

$$l = \underset{k \in \mathcal{M}(i),\; y_k = 1}{\operatorname{argmax}} \,(t_k). \tag{5}$$

Once this event is determined, we can claim conditional independence between the current event zi and all other ancestors but zl :

$$I(z_i, z_j \mid z_l) \qquad \forall\, j \in \mathcal{M}(i) \setminus \{l\}. \tag{6}$$

Now we can rewrite expression (4) using this conditional independence assumption:

$$P(Z) = \prod_{i=1}^{N} P\left( z_i \mid z_l, Z_{\mathcal{M}(i) \setminus \{l\}} \right) = \prod_{i=1}^{N} P(z_i \mid z_l). \tag{7}$$

Unlike an HMM, the dependency structure of the FOVD model is unknown: it depends itself on the values of the hidden random variables. Observe from definition (5) that the index $l$ can be determined only if the values of all the $y$'s of the ancestors are given. Furthermore, if the hidden variables were known, this structure would lead to a directed acyclic graph (as in Bayesian Networks); otherwise the dependency structure may exhibit undirected cycles.
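As a minimal illustration of definition (5), the sketch below computes the parent index $l$ of every event for a given realization of the hidden variables. It assumes that the events are already sorted by time, so that the latest class-1 ancestor is simply the one with the largest index. The function names `parent_index` and `dependence_edges` are illustrative and are not part of the original formulation; the two label vectors are the ones listed in the caption of Fig. 1.

```python
from typing import List, Optional, Set, Tuple


def parent_index(y: List[int], i: int) -> Optional[int]:
    """Return the 1-based index l of Eq. (5) for event i, or None if no
    ancestor of event i is labeled as a true base (y_k = 1).

    Assumes events are time ordered, so the largest t_k among the
    ancestors corresponds to the largest index k < i.
    """
    for k in range(i - 1, 0, -1):  # scan ancestors from the most recent one
        if y[k - 1] == 1:
            return k
    return None


def dependence_edges(y: List[int]) -> Set[Tuple[int, int]]:
    """Collect the directed edges (l, i) implied by one labeling y."""
    edges = set()
    for i in range(1, len(y) + 1):
        l = parent_index(y, i)
        if l is not None:
            edges.add((l, i))
    return edges


# Hidden-variable realizations taken from the caption of Fig. 1.
upper = [0, 1, 1, 0, 1, 0, 1, 1, 0, 1, 0, 0, 1, 0]
lower = [0, 1, 1, 0, 1, 1, 1, 1, 0, 1, 0, 0, 1, 0]

edges_upper = dependence_edges(upper)
edges_lower = dependence_edges(lower)

# Edges that exist under one labeling but not under the other.
only_upper = edges_upper - edges_lower
only_lower = edges_lower - edges_upper
```

For these two realizations the parent of $z_7$ changes from $z_5$ to $z_6$, while $z_6$ depends on $z_5$ in both cases; superimposing the two edge sets therefore yields the undirected cycle among $z_5$, $z_6$, and $z_7$ described in the caption of Fig. 1.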

The FOVD model can be used to capture the multiple interpretations suggested by DNA sequencing data. Consider the simple example in Figure 1, which shows a two-channel DNA chromatogram with events that can each generate one base symbol or none. Each interpretation separately gives rise to a first-order acyclic model. But as long as the hidden variables are unknown, a model that accounts for all possible interpretations remains cyclic. In our example, if we assume that only the two realizations shown are possible, then an undirected cyclic path exists between events $z_5$, $z_6$, and $z_7$, as can be observed when the two graphs of Figure 1 are superimposed.

Even though Factor Graph theory could handle this special type of problem, the complexity would grow significantly when we consider all likely realizations of the hidden variables. Even if we limit dependencies by using an inter-event distance threshold, the possible appearance of multiple undirected cyclic paths, or even worse, of nested and interlaced cycles, will make the estimation (or utilization) of the model computationally very costly. Instead, we intend to work out the FOVD model while living with the unknown dependency structure, taking advantage of the first-order assumption and of other conditional independence assumptions between the elements of the random vector $z$.

Consider the marginal probability distribution for any single event. In most cases, knowledge of the hidden variable (does this peak truly represent a base symbol or not?) is enough to statistically model the size of the peak independently of its position:

$$I(x_i, t_i \mid y_i) \qquad \forall\, i \in \{1, \ldots, N\}. \tag{8}$$

Note that the last claim is true only when the hidden variable is given; otherwise there is a dependency of the event size on its location and thus on neighboring events. There is a notable exception for which, even if the hidden variable is known, the location of the peak does matter: the case of heterozygous realizations. In a heterozygous situation, even if we know that an event represents a true base symbol, its size still depends on the size of another event in the neighborhood. Nevertheless, the frequency of these cases is relatively small ($\approx 1 : 300$ based on experimental data) and therefore we will not consider this case in our modeling for now.

Using assumption (8) and the Bayes chain rule we can simplify and factorize the marginal distribution for each $z$ as follows:

$$\begin{aligned}
P(z_i \mid z_l) &= P(t_i, s_i, x_i, y_i \mid t_l, s_l, x_l, y_l) = P(t_i, s_i, x_i, y_i \mid t_l, s_l, y_l) \\
&= P(s_i \mid t_l, s_l, y_l) \cdot P(y_i \mid s_i, t_l, s_l, y_l) \\
&\quad \cdot P(x_i \mid s_i, y_i, t_l, s_l, y_l) \cdot P(t_i \mid s_i, y_i, t_l, s_l, y_l).
\end{aligned} \tag{9}$$

Given that $y_l = 1$, due to (5), the last expression reduces to:

$$\begin{aligned}
P(z_i \mid z_l) &= P(s_i \mid t_l, s_l) \cdot P(y_i \mid s_i, t_l, s_l) \cdot P(x_i \mid s_i, y_i, t_l, s_l) \\
&\quad \cdot P(t_i \mid s_i, y_i, t_l, s_l).
\end{aligned} \tag{10}$$

Now let us introduce a new random variable to represent the distance between two events ($d_{il} = t_i - t_l$). The new distributions $P'$ will have the same shape as $P$ but they will be shifted along the $t_i$ axis to the left by $t_l$.¹ After substituting into (7) we obtain the following factorized expression for the complete model of a DNA chromatogram:

$$P(Z) = \prod_{i=1}^{N} P'(s_i \mid s_l) \cdot P'(y_i \mid s_i, s_l) \cdot P'(x_i \mid s_i, s_l, y_i) \cdot P'(d_{il} \mid s_i, s_l, y_i). \tag{11}$$

In fact, we have decomposed the complete joint distribution at the level of individual variables instead of vector variables. By analyzing the last expression we can observe the similarity of the second and third factors to the mixture density model obtained when statistical independence between events was assumed [1].

Now let us examine individually each one of the terms in expression (11) (from left to right) and consider some reasonable simplifications:

1. Symbol transition probabilities. Prior knowledge of the sequence grammar can be taken into account using this term to improve performance; e.g. the human genome exhibits sections with rich GC content [13]. If such information is not available a priori, we suggest assuming an independent and equiprobable transition matrix ($P(s_i \mid s_l) \approx P(s_i) \approx \tfrac{1}{4}$), as we did in all our experiments, since the evidence provided in a single chromatogram is not sufficient for a robust estimation of symbol transition probabilities.

2. The next term of the FOVD model corresponds to the prior probabilities of the mixture densities (third and fourth terms). They can be estimated from the data, along with the rest of the model parameters, as will be discussed later. This term captures the ratio of true base symbols to spurious peaks due to contamination, small artifacts and noise for every channel. In practice we may use $P(y_i \mid s_i, s_l) \approx P(y_i \mid s_i)$ without any loss in performance.

3. Class conditional probabilities (third term) characterize the event weight (size of the allele) given the class indicator variable (which tells us whether the event represents a true base symbol or not). This gives rise to a mixture of two Gaussians which are to be learned independently for every channel. In practice we can use $P(x_i \mid s_i, s_l, y_i) \approx P(x_i \mid s_i, y_i)$ without any loss in performance. Although not used presently, prior knowledge of the inter-base relation may be incorporated here in the future, e.g. a small G is very probable after an A due to steric hindrance. The left panels of Figure 2 show the statistical characterization of the event weight for channel G in two different types of chromatograms (top: primer dye (PD) gel electrophoresis, bottom: terminator dye (TD) capillary electrophoresis). In some channels (or chromatograms) the probability of misclassification is so small that it would be possible to use only this factor to discriminate events and therefore base-call accurately (as in PD data sets), as we intended to do in [1]. However, this is not going to be accurate for chromatograms with spurious peaks, contamination and high variance of peak height.

4. The last factor of the FOVD model describes the statistical behavior of the distance between two events ($i$ and $l$), the parental event ($l$) being class-1 (a true base allele). In practice we also approximate $P(d_{il} \mid s_i, s_l, y_i) \approx P(d_{il} \mid y_i)$ without any risk; recall that the chromatogram has been completely aligned in the pre-processing stage. In the future, more complex approaches may incorporate in this term prior information such as the occurrence of GC compressions. The right panels of Figure 2 show experimental histograms derived from the same chromatograms. Short read-length and low quality DNA chromatograms exhibit similar behavior. Case 1-1, a histogram realization of the intersymbol arrival jitter, is clearly Gaussian shaped. However, this is not true for case 1-0, where two modes are commonly present because the class-0 event $i$ may be close either (a) to the preceding class-1 event $l$, or (b) to the expected location of the next base. When using an EM-type algorithm to solve the estimation problem we assume, for simplicity, a uniform density for case 1-0 distances; this selection is sufficient to recover the Gaussian shape of case 1-1.

We can now rewrite the proposed FOVD model for the unsupervised learning of a DNA chromatogram as:

$$P(Z) = \prod_{i=1}^{N} \left[ \tfrac{1}{4}\, P(y_i \mid s_i)\, P(x_i \mid s_i, y_i)\, P(d_{il} \mid y_i) \right]. \tag{12}$$

¹ After this step we will not continue using primes.

3. UNSUPERVISED ESTIMATION PROBLEM

Finding the unknown FOVD model parameters can be formulated as a Maximum Likelihood (ML) estimation problem. We expect parameter values to vary considerably among channels and different data set types. Therefore, the ML estimation will be based on the data set under analysis (unsupervised classification) and not on other training data sets.

Let $z_i = [\, t_i \; x_i \; y_i \,]$ be the random vector representing the $i$-th event. For clarity, we omit the fact that events can be drawn from different channels except where it is necessary to differentiate. Consider the complete experimental data set $Z = \{z_1, \ldots, z_N\}$ and a vector with the unknown parameters $\Phi$ of the FOVD model (12). The model likelihood can be rewritten using an indicator variable:

$$\begin{aligned}
L(Z, \Phi) &= \prod_{i=1}^{N} \left[ \tfrac{1}{4}\, P(y_i)\, P(x_i \mid y_i, \theta)\, P(d_{il} \mid y_i, \delta) \right] \\
&= \prod_{i=1}^{N} \prod_{c=0}^{1} \left[ \tfrac{1}{4}\, P(y_i = c)\, P(x_i \mid y_i = c, \theta_c)\, P(d_{il} \mid y_i = c, \delta_{1c}) \right]^{\Delta_c(y_i)},
\end{aligned} \tag{13}$$

where $\Delta_c(y_i)$ is 1 when $y_i = c$ and 0 otherwise. The vector of unknown parameters to be estimated is:

$$\Phi = [\, \theta_{0,A}, \ldots, \theta_{0,T}, \theta_{1,A}, \ldots, \theta_{1,T}, \delta_{10}, \delta_{11}, P_{0,A}, \ldots, P_{0,T}, P_{1,A}, \ldots, P_{1,T} \,], \tag{14}$$

where $\theta_{\bullet,\bullet}$ are the parameters of the class conditional models for the size of the event, estimated for each class and channel, $\delta_{10}$ and $\delta_{11}$ are the parameters of the conditional models for the distance between events, estimated only for cases 1-0 and 1-1, and $P_{0,\bullet} = P(y_i = 0 \mid s_i = \bullet)$, $P_{1,\bullet} = P(y_i = 1 \mid s_i = \bullet)$ are the prior probabilities for the mixtures.

We now explicitly include the unknown parameters in expression (13) and compute the logarithmic likelihood function $l(\Phi)$, so that product terms become sums, thus simplifying the objective function of the maximization problem (the constant factor $\tfrac{1}{4}$ does not affect the maximizer and is dropped):

$$\begin{aligned}
\hat{\Phi} = \underset{\Phi}{\operatorname{argmax}}\; l(Z, \Phi) = \underset{\Phi}{\operatorname{argmax}} \Bigg[ & \sum_{i=1}^{N} \sum_{c=0}^{1} \Delta_c(y_i) \log P_c \\
& + \sum_{i=1}^{N} \sum_{c=0}^{1} \Delta_c(y_i) \log P(d_{il} \mid y_i = c, \delta_{1c}) \\
& + \sum_{i=1}^{N} \sum_{c=0}^{1} \Delta_c(y_i) \log P(x_i \mid y_i = c, \theta_c) \Bigg].
\end{aligned} \tag{15}$$

If the hidden variables $y_i$ were known, equation (15) could be maximized by differentiating and setting the derivatives to zero. Lagrange multipliers can be used to incorporate restrictions on the transition probabilities:

$$P_0, P_1 > 0, \qquad P_0 + P_1 = 1. \tag{16}$$

[Figure 2: four histogram panels. Left column: "Event weight characterization, G1004" (top) and "Event weight characterization, G4103" (bottom), x-axis: event weight, showing observed (obs) and estimated (est) curves for Class 0 and Class 1. Right column: "Inter-event distance characterization, 1004" (top) and "Inter-event distance characterization, 4103" (bottom), x-axis: inter-event distance, showing observed and estimated curves for Class 1-0 and Class 1-1.]

Fig. 2. Histograms which capture the conditional distribution models for the event weight (left) and inter-event distances (right). Good quality long read-length DNA chromatograms (more than 800 bases) and pre-classified data were used. Top row: primer dye gel electrophoresis. Bottom row: terminator dye capillary electrophoresis.

In our case the labels are not observable (unsupervised learning). Nevertheless, convergence to a local maximum can be ensured by means of the iterative EM algorithm, which uses two steps:

1. Expectation step. Compute the so-called $Q(\cdot)$ function, which is the expected value of the log-likelihood function given the observable data $O = \{x_1, \ldots, x_N, t_1, \ldots, t_N\}$ and the previous estimate of the parameters ($\Phi^P$):

$$\begin{aligned}
Q(\Phi, \Phi^P) &= E\left[ l(\Phi) \mid O, \Phi^P \right] \\
&= \sum_{i=1}^{N} \sum_{c=0}^{1} E\left[ \Delta_c(y_i) \mid O, \Phi^P \right] \log P_c \\
&\quad + \sum_{i=1}^{N} \sum_{c=0}^{1} E\left[ \Delta_c(y_i) \mid O, \Phi^P \right] \log P(d_{il} \mid y_i = c, \delta_{1c}) \\
&\quad + \sum_{i=1}^{N} \sum_{c=0}^{1} E\left[ \Delta_c(y_i) \mid O, \Phi^P \right] \log P(x_i \mid y_i = c, \theta_c).
\end{aligned} \tag{17}$$

The expectation step is used to create an expected value for the hidden variables, hopefully close to their true values. The $Q(\cdot)$ function now depends only on the observable data ($O$) and the unknown model parameters ($\Phi$).

2. Maximization step. It produces updated parameters ($\Phi^{P+1}$) by maximizing the $Q(\cdot)$ function:

$$\Phi^{P+1} = \underset{\Phi}{\operatorname{argmax}}\; Q(\Phi, \Phi^P). \tag{18}$$

As before, this can be done by equating the gradient to zero, after incorporating constraints for the transition probabilities (as in expression (16)).

4. BACKWARD AND FORWARD ALGORITHM FOR THE FOVD MODEL

Let us return to the expectation step formulation of equation (17). Observe that due to the binary nature of $\Delta_c(y_i)$ we have:

$$E\left[ \Delta_c(y_i) \mid O, \Phi^P \right] = P(y_i = c \mid O, \Phi^P), \tag{19}$$

which is nothing more than the a posteriori probability of the $i$-th event, but using the previous set of parameters ($\Phi^P$) in the computation. Equation (19) can be rewritten as:

$$P(y_i \mid O, \Phi^P) = \frac{P(y_i, O \mid \Phi^P)}{P(O \mid \Phi^P)} = \frac{\sum_{\forall Y \setminus y_i} P(Z \mid \Phi^P)}{\sum_{\forall Y} P(Z \mid \Phi^P)}. \tag{20}$$

Clearly, given the large number of events ($N$), the evaluation of the model for every possible realization of the hidden variables ($\forall Y$) is computationally costly, if not impossible. To evaluate such a model, $\approx 2N \cdot 2^{N}$ multiplications are needed, without considering the computation of the class conditional distributions. An alternative is to seek an efficient exact propagation method such as a Forward-Backward type of algorithm. This is possible due to the following notable characteristics of the FOVD model:

1. Homogeneous structure (time-invariant and memoryless). We have already used this property to write expression (4); let us restate it:

$$P_{z_i \mid Z_{\mathcal{M}(i)}}\left( z_i \mid Z_{\mathcal{M}(i)} \right) \approx P\left( z_i \mid Z_{\mathcal{M}(i)} \right) \qquad \forall\, i \in \{1, \ldots, N\}.$$

2. First-order dependence structure. Similarly, we have already used this property to simplify (7):

$$P\left( z_i \mid Z_{\mathcal{M}(i)} \right) = P(z_i \mid z_l) \qquad \forall\, i \in \{1, \ldots, N\}.$$

3. Short-term dependencies. The probabilistic model for the distance is evaluated using the distance of the current event to its closest ancestor event that has generated a true base symbol. Furthermore, we can safely assume that the probability of the distance being larger than a certain threshold is negligible (as verified from the right panels of Figure 2):

$$P(d_{li} > \Delta \mid y_l, y_i) \approx 0. \tag{21}$$

We start by considering the joint probability of the observations ($O$) and the hidden variable of the current $i$-th event ($y_i$), i.e. the numerator in (20). When the hidden variable takes a value of 1, we can factorize this term. Using the conditional independence assumption claimed by expression (6), we can separate the observations up to the $i$-th event, indexed by $\mathcal{M}(i+1)$, from the descendant observations, indexed by the complement $\overline{\mathcal{M}}(i+1) = \{i+1, \ldots, N\}$:

$$P(O, y_i = 1) = P\left( O_{\{1, \ldots, N\} \setminus i}, z_i \right) = \underbrace{P\left( O_{\mathcal{M}(i+1)}, y_i = 1 \right)}_{\alpha_i} \; \underbrace{P\left( O_{\overline{\mathcal{M}}(i+1)} \mid o_i, y_i = 1 \right)}_{\beta_i}. \tag{22}$$

Here $\alpha_i$ is the forward variable, i.e. the joint probability of the partial observation sequence from the beginning to $i$ and of the $i$-th event generating a base symbol. Furthermore, $\beta_i$ is the backward variable, i.e. the joint probability of the partial observation sequence from $i + 1$ to the end, given that the $i$-th event generates a base symbol. Recall that $\alpha_i$ and $\beta_i$ cannot be calculated when $y_i = 0$, since there are dependence messages between nodes, one to the left and another to the right, which do not pass through $y_i = 0$.

To better illustrate this concept consider Figure 3. Let us expand our graph model vertically using the domain of the hidden variable ($y_i \in \{0, 1\}$), whose values we also call states, as is usually done with HMMs. The horizontal dimension is the index of the time-ordered events. A valid path is one that contains exactly one node for every time instance and connects them consecutively. This is not a dependency graph but, as in HMMs, a graph that shows the likely realizations of the state sequence. In fact, in the FOVD model there are dependencies between non-consecutive events. Despite the complexity of the model, we aim to derive an efficient probability propagation method, since the short-term dependencies will help us reduce the number of instances to be considered.

[Figure 3: state trellis with rows $y = 0$ and $y = 1$ and columns $i-3, \ldots, i+3$.]

Fig. 3. FOVD model expanded vertically using the domain of the hidden variable. The arcs show possible paths for the realization of the sequence of hidden states and not dependence relations.

The forward variable $\alpha_i$ is the sum of the probabilities, over all the possible paths, that converge at state $y_i = 1$. Similarly, the backward variable $\beta_i$ is the sum of the probabilities, over all the possible paths, that depart from the state $y_i = 1$. Let us define an additional variable, $\gamma$, to help us compute the probability of the path between two events (from $l$ to $i$) with true base symbols and all other events between them being spurious (class-0). For an example see Figure 4.

[Figure 4: state trellis with rows $y = 0$ and $y = 1$ and columns $l, l+1, \ldots, i-1, i$, with $y_l = 1$ and $y_i = 1$.]

Fig. 4. Example for calculating the probability of a single path between two events with true base symbols and all other events between them being class-0. Solid lines indicate the state sequence path while dashed lines represent event dependencies according to the FOVD model.

$$\begin{aligned}
\gamma_{li} = {} & P(y_i = 1 \mid y_l = 1)\, P(x_i \mid y_i = 1)\, P(d_{li} \mid y_l = 1, y_i = 1) \\
& \cdot \prod_{j=l+1}^{i-1} \left[ P(y_j = 0 \mid y_l = 1)\, P(x_j \mid y_j = 0)\, P(d_{lj} \mid y_l = 1, y_j = 0) \right].
\end{aligned} \tag{23}$$

When $l$ and $i$ are consecutive there cannot be class-0 events between them and the last product term in (23) is equal to one. On the other hand, if $l$ and $i$ are too far apart the distance model assigns values close to zero (recall (21)), making the whole expression go to zero.

We now derive the induction step of the forward calculation. Figure 5 shows how the state $y_i = 1$ can be reached from all previous states. We do not need to calculate all possible paths for all the ancestors (as in Figure 3); instead we can compute $\alpha_i$ using the forward variables of the ancestors,

$$\alpha_i = \sum_{j=i-L}^{i-1} \alpha_j\, \gamma_{ji}. \tag{24}$$

[Figure 5: state trellis with rows $y = 0$ and $y = 1$ and columns $i-3, \ldots, i+3$.]

Fig. 5. Induction of the forward and backward variables. Arcs represent considered state paths over the summation.

In theory the summation index should start at $j = 1$; however, we can use property (21) to considerably reduce the number of terms considered in the summation to the $L$ most recent events.

We can similarly derive the induction formula for the backward procedure. As before, we do not need to calculate the probability for all the possible paths for the descendants; instead we use the backward probabilities previously computed. Figure 5 shows paths starting from state $y_i = 1$ and reaching all the subsequent states.

$$\beta_i = \sum_{j=i+1}^{i+L} \gamma_{ij}\, \beta_j. \tag{25}$$

Now, using Eqs. (24) and (25) we can substitute in Eq. (22) and obtain the joint probability for the complete set of observations ($O$) and the hidden variable at instance $i$ being $y_i = 1$. The key idea behind the proposed special-case forward-backward method is that, even though we do not know precisely the forward and backward variables for certain states, where we cannot factorize the model, we can still propagate the dependence messages accurately through the states that do factorize the model. Furthermore, the fact that we cannot compute forward and backward variables for the non-factorable states does not prevent us from computing the joint probability of the observations at such a state; we just need to sum the probability of all the possible paths which pass through this state (using, of course, the already computed propagation variables):

$$P(y_i = 0, O) = \sum_{j=i-L}^{i-1} \; \sum_{l=i+1}^{i+L} \alpha_j\, \gamma_{jl}\, \beta_l. \tag{26}$$
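The recursions (23) to (25) can be sketched in a few lines of code. The following is a minimal illustration under stated assumptions, not the authors' implementation: a single channel is considered, the first and last events are assumed to be known true bases (so that $\alpha$ and $\beta$ can be initialized to one), event weights are modeled with one Gaussian per class, 1-1 distances with a Gaussian, and 1-0 distances with a uniform density on $[0, \Delta]$, as suggested in Section 2. All function names, parameter names, and numerical values are hypothetical. A brute-force enumeration over every labeling is included only to check the recursions on a toy example.

```python
import math
from itertools import product


def gauss(v, mu, sigma):
    """Gaussian density, used here for event weights and 1-1 distances."""
    return math.exp(-0.5 * ((v - mu) / sigma) ** 2) / (sigma * math.sqrt(2.0 * math.pi))


def make_gamma(t, x, p1, theta0, theta1, delta11, d_max):
    """Build gamma(l, i) of Eq. (23) for 0-based event indices l < i."""
    p0 = 1.0 - p1

    def gamma(l, i):
        d = t[i] - t[l]
        if d > d_max:  # Eq. (21): distances beyond the threshold are negligible
            return 0.0
        w = p1 * gauss(x[i], *theta1) * gauss(d, *delta11)
        for j in range(l + 1, i):  # class-0 events lying between l and i
            w *= p0 * gauss(x[j], *theta0) / d_max  # uniform 1-0 distance density
        return w

    return gamma


def forward_backward(n, gamma, window):
    """Eqs. (24) and (25); events 0 and n-1 are assumed to be true bases."""
    alpha = [0.0] * n
    beta = [0.0] * n
    alpha[0] = 1.0
    for i in range(1, n):
        alpha[i] = sum(alpha[j] * gamma(j, i) for j in range(max(0, i - window), i))
    beta[n - 1] = 1.0
    for i in range(n - 2, -1, -1):
        beta[i] = sum(gamma(i, j) * beta[j] for j in range(i + 1, min(n, i + window + 1)))
    return alpha, beta


def posterior(alpha, beta):
    """P(y_i = 1 | O) = alpha_i * beta_i / P(O); P(O) is alpha at the last event."""
    total = alpha[-1]
    return [a * b / total for a, b in zip(alpha, beta)]


def brute_force_posterior(n, gamma):
    """Reference values obtained by enumerating every labeling (small n only)."""
    total, marginal = 0.0, [0.0] * n
    for inner in product((0, 1), repeat=n - 2):
        ones = [0] + [k + 1 for k, v in enumerate(inner) if v] + [n - 1]
        w = 1.0
        for l, i in zip(ones, ones[1:]):  # consecutive true-base pairs
            w *= gamma(l, i)
        total += w
        for k in ones:
            marginal[k] += w
    return [m / total for m in marginal]


# Toy data: six time-ordered events (times t, weights x); all values are made up.
t = [0.0, 4.0, 10.0, 13.0, 20.0, 30.0]
x = [1.0, 0.2, 0.9, 0.3, 1.1, 1.0]
gamma = make_gamma(t, x, p1=0.7, theta0=(0.2, 0.15), theta1=(1.0, 0.2),
                   delta11=(10.0, 2.0), d_max=25.0)
alpha, beta = forward_backward(len(t), gamma, window=5)
post = posterior(alpha, beta)
reference = brute_force_posterior(len(t), gamma)
```

On this toy example the window covers all events, so `post` should agree with `reference` up to rounding, while the recursions need a number of operations that grows polynomially with the number of events instead of exponentially. For chromatograms with $N \approx 1500$ events the raw products underflow in double precision, so a practical version would rescale $\alpha$ and $\beta$ at each step or work with logarithms.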

As with all other known probability propagation methods, observe that there are essentially two types of computations performed: multiplication of local marginals (23) and summation over local joint distributions (24), (25), and (26). The principle of effective probability propagation is to decompose the global sum (20) into products of local sums, so that the computation becomes tractable.

5. EXPERIMENTAL EVALUATION

To quantify the performance of the FOVD model applied to base-calling we used two pools of data sets. The first pool of 112 chromatograms was obtained from the pBluescriptSK+ vector, using primer dye chemistry and the slab gel ABI PRISM 377 sequencer. The second pool of 2050 chromatograms was obtained from a region of human chromosome 11, using the ABI-3700 capillary electrophoresis sequencer and the ABI big-dye terminators. The quality of the second pool is characteristic of a typical large scale genome project, where the quality of every read is not monitored and chromatograms of poor quality may appear. In both pools signal pre-processing and feature (event) extraction are performed with our own methods described in [2, 3, 4, 5, 14].

After testing our end-to-end statistical signal pre-processing, feature extraction and base-calling strategy we found that it can achieve 10% fewer errors than the most widely used Phred [15] base-caller for long read-length sequences (> 800 bp). Due to space constraints, and since the emphasis of this paper is on the FOVD model formulation and parameter estimation, we omit any further discussion of this comparison. The details can be found in [2, 4, 16, 14].

6. CONCLUSIONS

We have introduced a new approach for modeling DNA sequencing data. Under this new perspective, base-calling becomes a problem of learning the FOVD model that best explains the observed event sequence, and inferring the non-observed class assignments from the observed data. This is addressed through the application of the Expectation-Maximization algorithm, an inference procedure which iterates between the computation of the probability of each event being generated by each of the classes (E-step) and the computation of the parameters of the FOVD model according to these probabilities (M-step). Work in progress involves using the posterior probabilities of the model as confidence estimates (or scores) of individual base-calls. Also, some other interesting phenomena may be captured with this model, such as the automatic identification and scoring of potentially heterozygous locations in raw DNA traces.

7. REFERENCES

[1] M. Pereira, L. Andrade, S. El-Difrawy, B. Karger, and E. Manolakos, "Statistical learning formulation of the DNA base-calling problem and its solution using a Bayesian EM framework," Discrete Applied Mathematics, vol. 104, no. 1–3, pp. 229–258, 2000.

[2] L. Andrade and E. Manolakos, "Automatic Estimation of Mobility Shift Coefficients in DNA chromatograms," in Proceedings of the IEEE Workshop on Neural Networks for Signal Processing (NNSP), Sept. 2003, pp. 91–100.

[3] L. Andrade and E. Manolakos, "Signal Background Estimation and Baseline Correction Algorithms for Accurate DNA Sequencing," The Journal of VLSI Signal Processing-Systems for Signal, Image, and Video Technology, vol. 35, no. 3, pp. 229–243, Nov. 2003, special issue on Signal Processing and Neural Networks for Bioinformatics.

[4] L. Andrade and E. Manolakos, "Robust Normalization of DNA Chromatograms by Regression for improved Base-calling," Journal of the Franklin Institute, vol. 341, no. 1–2, pp. 3–22, 2004, special issue on Genomic Signal Processing.

[5] L. Andrade-Cetto and E. Manolakos, "Feature extraction for DNA base-calling using NNLS," in Proceedings of the IEEE Workshop on Statistical Signal Processing, Jul. 2005.

[6] L.R. Rabiner, "A Tutorial on Hidden Markov Models and Selected Applications in Speech Recognition," Proceedings of the IEEE, vol. 77, no. 2, pp. 267–296, Feb. 1989.

[7] D. Heckerman, "A tutorial on learning with Bayesian networks," Tech. Rep. MST-TR-95-06, Microsoft Research, Nov. 1996.

[8] B.J. Frey, Graphical Models for Machine Learning and Digital Communications, MIT Press, 1998.

[9] B.D. Ripley, Stochastic Simulation, John Wiley, 1987.

[10] T. Jaakkola and M.I. Jordan, "A variational approach to Bayesian logistic regression models and their extensions," in Sixth International Workshop on Artificial Intelligence and Statistics, 1997.

[11] P. Dayan, G.E. Hinton, R.M. Neal, and R.S. Zemel, "The Helmholtz machine," Neural Computation, vol. 7, pp. 565–579, 1995.

[12] G.E. Hinton, P. Dayan, B.J. Frey, and R.M. Neal, "The wake-sleep algorithm for unsupervised neural networks," Science, vol. 268, pp. 1158–1161, 1995.

[13] International Human Genome Sequencing Consortium, "Initial sequencing and analysis of the human genome," Nature, vol. 409, pp. 860–920, Feb. 2001.

[14] L. Andrade-Cetto and E. Manolakos, "Inter-peak distance equalization for DNA sequencing," 2005, in preparation.

[15] B. Ewing, L. Hillier, M. Wendl, and P. Green, "Base-calling of automated sequencer traces using phred. I. Accuracy assessment," Genome Research, vol. 8, pp. 175–185, 1998.

[16] L. Andrade-Cetto, Analysis of DNA Chromatograms using Unsupervised Statistical Learning Methods, ECE Department, Northeastern University, 2005, Ph.D. dissertation in preparation.