MOVIE SUBTITLES EXTRACTION Final Project Presentation

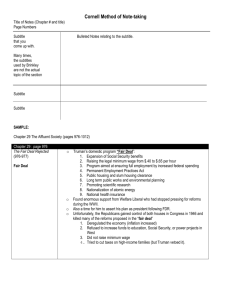

Part 2
Student: Elad Hoffer
Advisor: Eli Appleboim

INTRODUCTION TO SUBTITLE RECOGNITION
The project's objective is to extract and recognize subtitles embedded within video. Whereas the first part demonstrated the algorithm with a Matlab implementation, the current part focuses on delivering a working system using OpenCV.
Main obstacles:
- Distinguishing text from background.
- Recognizing text reliably.
- Allowing real-time implementation.

ALGORITHM IMPLEMENTED
1. Acquire a frame from the video.
2. Identify text; filter unwanted noise and image artifacts.
3. Detect text using Tesseract O.C.R (with dictionary and language data).
4. Compare text descriptions for the best match.
5. New subtitle?
   - FALSE (same subtitle): repeat for the same subtitle, searching for a better match.
   - TRUE (new subtitle): output the best identified subtitle text to file, then repeat for the new subtitle.

STEP 1 – DISTINGUISH TEXT FROM IMAGE
B&W thresholding is a crucial step for the algorithm, as recognition depends heavily on clean text.
Different approaches were examined:
- Global and local thresholding using Otsu’s method
- Text-specific thresholding: Sauvola, Niblack
- Adaptive local Gaussian thresholding
- Stroke width transform (SWT)
Findings:
- There is no clear winner; results are very content dependent.
- Adaptive Gaussian thresholding seems to give the best all-around results.
- SWT is interesting, but far too slow for our purpose.

STEP 1 – DISTINGUISH TEXT FROM IMAGE (CONT.)
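As an illustration of the adaptive Gaussian thresholding compared in Step 1, here is a minimal pure-Python sketch. It is not the project's code: the presentation used OpenCV, where the equivalent call is `adaptiveThreshold` with `ADAPTIVE_THRESH_GAUSSIAN_C`. The function names, the 3x3 block size, the sigma and the offset are all assumptions chosen for the example; the slides do not state the parameters actually used.

```python
import math


def gaussian_kernel(size, sigma):
    """Return a normalized size x size Gaussian kernel (size must be odd)."""
    half = size // 2
    kernel = [[math.exp(-(x * x + y * y) / (2.0 * sigma * sigma))
               for x in range(-half, half + 1)]
              for y in range(-half, half + 1)]
    total = sum(sum(row) for row in kernel)
    return [[v / total for v in row] for row in kernel]


def adaptive_gaussian_threshold(image, block_size=3, sigma=1.0, offset=0.0):
    """Binarize a grayscale image given as a list of rows (values 0-255).

    A pixel becomes 255 when it is brighter than the Gaussian-weighted mean
    of its block_size x block_size neighborhood minus `offset`, else 0.
    A negative offset therefore demands that text be clearly brighter than
    its surroundings. Borders replicate the nearest edge pixel.
    """
    height, width = len(image), len(image[0])
    half = block_size // 2
    kernel = gaussian_kernel(block_size, sigma)
    result = [[0] * width for _ in range(height)]
    for r in range(height):
        for c in range(width):
            local_mean = 0.0
            for dy in range(-half, half + 1):
                for dx in range(-half, half + 1):
                    rr = min(max(r + dy, 0), height - 1)
                    cc = min(max(c + dx, 0), width - 1)
                    local_mean += kernel[dy + half][dx + half] * image[rr][cc]
            if image[r][c] > local_mean - offset:
                result[r][c] = 255
    return result


# Hypothetical 5x5 frame: dark background with one bright "text" pixel.
frame = [[50] * 5 for _ in range(5)]
frame[2][2] = 200
binary = adaptive_gaussian_threshold(frame, block_size=3, sigma=1.0, offset=-10.0)
```

Because the threshold follows the local mean, the same settings can separate bright text from both dark and moderately bright backgrounds, which a single global threshold cannot do.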
After binarization, the image is segmented into objects.
Noted obstacle: OpenCV does not contain a connected-component analysis implementation. The cvBlob library was used as a replacement, and other necessary functions were added.
Noise and non-text objects are then filtered out. Text is identified by:
- Size: height, width and area parameters
- Color: subtitles are white and bright
- Location and alignment: text is displayed over one or two horizontal lines
- Proximity to other text objects: remote objects are removed
- Not being contained within any other text object
- Special consideration: punctuation marks and other language-dependent markings

STEP 2 – DETECT TEXT FROM SUBTITLE IMAGE
O.C.R engine used: Tesseract by Google (http://code.google.com/p/tesseract-ocr/)
- High success rate
- Multiple languages
- Open source
Tesseract expects a “clean” text image: no overlay, and preferably little noise.
- The image must be thoroughly cleaned of any objects that are not part of the subtitle.
- Non-English languages are even more sensitive.
Big improvement over the Matlab implementation: Tesseract’s full API can now be used.
- User-designed dictionaries: DAWGs are created from word lists
- All of Tesseract’s features and “switches”
- Per-word confidence

STEP 3 – DETECT CHANGE OF SUBTITLES
- The average subtitle appears for about 3-4 seconds.
- Output must not contain multiple detections of the same content.
- A large number of frames contain no subtitle.
- Multiple frames of the same subtitle content are informative.
- Some frames are noisier than others.
- The main image content can change in a way that better suits our algorithm.
- Detection is made using our cleaned output image (the same input fed into the O.C.R).
- The decision is made according to correlation:
  - Fast and reliable, since our input images are binary matrices.
  - Threshold heuristically set at ~0.5.

STEP 4 – IMPROVE SUCCESS RATE USING MULTIPLE FRAMES
For each subtitle, we can have a large number of possible detections (4 seconds of subtitles, 30fps).
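Grouping those detections by subtitle relies on the correlation test from Step 3. The sketch below is one possible reading of it, not the project's implementation: it assumes the Pearson correlation coefficient between two cleaned binary frames (the slides say only "correlation") and uses the ~0.5 threshold quoted above. The function names and the handling of constant (for example, empty) frames are assumptions.

```python
import math

SAME_SUBTITLE_THRESHOLD = 0.5  # heuristic value quoted in Step 3


def correlation(frame_a, frame_b):
    """Pearson correlation between two equally sized binary images."""
    a = [p for row in frame_a for p in row]
    b = [p for row in frame_b for p in row]
    if len(a) != len(b):
        raise ValueError("frames must have the same size")
    n = len(a)
    mean_a = sum(a) / n
    mean_b = sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    if var_a == 0 or var_b == 0:
        # Assumption: a constant frame has no defined correlation, so
        # identical frames count as a match and anything else does not.
        return 1.0 if a == b else 0.0
    return cov / math.sqrt(var_a * var_b)


def is_new_subtitle(previous, current, threshold=SAME_SUBTITLE_THRESHOLD):
    """Return True when the current frame no longer matches the previous one."""
    return correlation(previous, current) < threshold


# Hypothetical 2x4 binary frames: the same text with one noisy pixel,
# and a frame with different content.
subtitle = [[0, 1, 1, 0], [0, 1, 1, 0]]
noisy_subtitle = [[0, 1, 1, 0], [0, 1, 0, 0]]
other_subtitle = [[1, 0, 0, 1], [1, 0, 0, 1]]
```

With these frames, `is_new_subtitle(subtitle, noisy_subtitle)` stays false despite the noise, while `is_new_subtitle(subtitle, other_subtitle)` is true.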
Averaging the frames that contain the same subtitle reduces noise.
How can we compare different detections and choose the best one?
- Number of valid words (the 1st part used this metric)
- Confidence level of our O.C.R engine
- Grammatically correct sentences
- Other text analysis: an attempt was made to “autocorrect” words (not reliable, slow)
Modified metric over the 1st part: check the O.C.R confidence level for each subtitle.
- Can be tweaked using a “non-dictionary” penalty
- A modified dictionary was created for use with Tesseract

STEP 4 – IMPROVE SUCCESS RATE USING MULTIPLE FRAMES – AVERAGE
Averaging frames

STEP 4 – IMPROVE SUCCESS RATE USING MULTIPLE FRAMES – RANK & CHOOSE
comme d ia colère et e laïfureur. 83
comme de la colère et de la fureur. 87

STATISTICS AND PERFORMANCE
- Success rate: 80-85% per word
- Processing time: real-time capable, x1 video length at most
  - Can be a lot shorter depending on content (non-text frames are omitted)
  - Can be greatly improved: very little parallelization so far
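The rank-and-choose step of Step 4 can be sketched as follows. This is an illustration only: it treats the numbers on the slide (83 and 87) as O.C.R confidence values, which the slide does not label. The scoring formula (confidence minus a penalty scaled by the fraction of non-dictionary words), the penalty value and the tiny dictionary are all assumptions; the slides say only that a "non-dictionary" penalty can be used to tweak the metric.

```python
def non_dictionary_fraction(text, dictionary):
    """Fraction of words in `text` that are absent from `dictionary`."""
    words = [w.strip(".,;:!?\"'").lower() for w in text.split()]
    words = [w for w in words if w]
    if not words:
        return 1.0  # assumption: an empty detection is fully penalized
    unknown = sum(1 for w in words if w not in dictionary)
    return unknown / len(words)


def rank_score(text, confidence, dictionary, penalty=20.0):
    """Hypothetical metric: confidence reduced by a non-dictionary penalty."""
    return confidence - penalty * non_dictionary_fraction(text, dictionary)


def choose_best(detections, dictionary, penalty=20.0):
    """Return the (text, confidence) pair with the highest rank score."""
    return max(detections,
               key=lambda d: rank_score(d[0], d[1], dictionary, penalty))


# The two detections shown on the slide, with an illustrative dictionary.
detections = [
    ("comme d ia colère et e laïfureur.", 83),
    ("comme de la colère et de la fureur.", 87),
]
dictionary = {"comme", "de", "la", "colère", "et", "fureur"}
best_text, best_confidence = choose_best(detections, dictionary)
```

Here the second detection wins on confidence alone, and the penalty widens the gap because four of the seven words in the first detection are not in the dictionary.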