Decision Analysis: An Overview

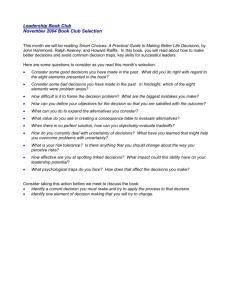
