
PARALLEL VIRTUAL MACHINE VERSUS REMOTE PROCEDURE CALLS: A PERFORMANCE COMPARISON

Kamarulnizam Abu Bakar¹, Zaitul Marlizawati Zainuddin²

¹Faculty of Computer Science & Information System, ²Faculty of Science,

Universiti Teknologi Malaysia.

81310 UTM Skudai, Johor D. T. Malaysia.

¹kamarul@fsksm.utm.my, ²zmz@mel.fs.utm.my

Abstract: Distributed network computing offers great potential for increasing the amount of computing power and communication resources available to large computational applications. To exploit this potential, a number of programming environments have been proposed for developing distributed programs. PVM and RPC are two such programming environments. In this paper, an experimental performance comparison of PVM and RPC is made. The comparison is done through two experiments: one is a broadcast operation and the other is a pair of benchmark applications, which employ prime number calculation and matrix multiplication. The broadcast operation is used to evaluate the communication overhead, while the benchmark applications are used to evaluate the processing (distribution, computation and collection) overheads. The experiments are conducted using different sizes of data and different numbers of workstations. All the experiments are run on a cluster of SUN workstations. The results of these experiments show which environment performs better under the specified conditions.

Keywords: Parallel Virtual Machine, Remote Procedure Calls, Communication Performance, Application Benchmark and Distributed System.

1. Introduction

We have witnessed an unprecedented growth of parallel computing hardware platforms. At the same time, advances in the design of processor architectures and communication media have resulted in the emergence of fast workstations connected by high-speed communication networks [1], [2]. These clusters of workstations, also known as workstation farms, are approaching the speed of some contemporary parallel computers. In recent years, studies have shown that clusters of workstations have the potential to solve very large-scale problems [2]. A number of programming environments for such platforms have recently emerged. They can simulate a cluster of workstations as a virtual parallel computer and can perform communication across multiple homogeneous and heterogeneous parallel machines [3].

Due to the popularity of parallel processing, software makers have produced a number of programming environments that make use of the capability of networked clusters of workstations. These environments offer users many choices for solving computationally complex problems. Although these environments can overcome the limitations of a traditional single computer, they still have weaknesses: their performance is usually limited by communication overhead and processing overhead.

This paper presents a comparison of two currently popular parallel programming environments. The first is PVM, which is available in the public domain [4]. The second is RPC, which is a commercial product from Sun Microsystems, Inc. [5]. The performance of PVM and RPC running on a cluster of SUN workstations connected by an Ethernet is evaluated. The results of this evaluation can be useful in a number of ways:

• They enable a comparison of these two environments to find out which one performs better.

• They provide information about the overhead incurred by basic communication and processing on a cluster of SUN workstations for large computational problems.

• They help us choose the best environment for specified cases.

Jilid 18, Bil. 1 (Jun 2006)

Jurnal Teknologi Maklumat

2. Distributed Systems

Coulouris, Dollimore and Kindberg [7] define a distributed system as "a collection of autonomous computers linked by a computer network and equipped with distributed system software". Meanwhile, Tanenbaum [8] defines it as "a collection of independent computers that appear to the users of the system as a single computer", and Mullender [9] defines it, in short, as "several computers doing something together". In summary, a distributed system is a group of computers that are linked by a network and work together to perform a task.

Mullender [11] has highlighted seven objectives for building distributed systems. These objectives answer the question of why distributed systems are important.

• People are distributed, information is distributed: therefore, people all around the world can share resources in a distributed systems environment.

• Performance/cost: Distributed systems can offer high-performance processing at a very economical cost, which cannot be offered by other systems.

• Modularity: "In a distributed system, interfaces between parts of the system have to be much more carefully designed than in a centralised system. As a consequence, distributed systems must be built in a much more modular fashion than centralised systems" [11].

• Expandability: Distributed systems can be easily expanded without affecting the whole system.

• Availability: "Distributed systems have the potential ability to continue operation if a failure occurs in the hardware or software" [10].

• Scalability: Distributed systems can grow to whatever size the user wants.

• Reliability: The definition of reliability is "do what it claims to do correctly, even when failures occur" [11].

Today, "distributed systems have become the norm for the organization of computing facilities" [7]. These systems have been used in many areas, from general-purpose interactive computing systems to a wide range of commercial and industrial applications of computers. Because of this popularity, many distributed system software packages have been produced as tools for developing distributed applications. These tools, referred to in this paper as distributed programming environments, are discussed in the next section.

3. Distributed Programming Environment

A distributed programming environment is a software system that can be used to develop distributed applications. Many types of such software have been introduced, and each has its own methodology for performing distributed processing. This section gives a general description of a few distributed programming environments that are currently used in distributed systems.

• Parallel Virtual Machine

PVM was developed by researchers at Emory University, Oak Ridge National Laboratory (ORNL), the University of Tennessee, Carnegie Mellon University and the Pittsburgh Supercomputer Center [4]. "It permits a network of heterogeneous computers to be used as a single parallel computer" [3].

• Remote Procedure Call

RPC was developed by Sun and AT&T as part of UNIX System V Release 4 (SVR4). It is a powerful technique for constructing distributed, client-server based applications [5]. It extends the concept of a local procedure call to the remote case.

• Linda

"Linda is a co-ordination language providing a communication mechanism based on a shared memory space called 'tuple space'" [6]. "The operations provided are out (add a tuple to the space), in (read and remove a tuple) and read (read a tuple without deleting it)" [11]. The basic idea of Linda is to allow a set of processes to execute tuple-space operations concurrently.
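To make the three Linda operations concrete, here is a minimal single-process sketch. It is a simplification, not Linda itself: a tuple is reduced to a (name, integer) pair, matching is by name only, and `in` and `read` return -1 instead of blocking until a matching tuple appears, as real Linda does. All `ts_*` names are hypothetical; `ts_rd` plays the role of the read operation.

```c
#include <assert.h>
#include <string.h>

#define TS_CAPACITY 64
#define TS_NAME_LEN 32

/* Simplified tuple: a name plus one integer field. */
struct tuple { int used; char name[TS_NAME_LEN]; long value; };
struct tuple_space { struct tuple slot[TS_CAPACITY]; };

/* out: add a tuple to the space. Returns 0, or -1 if the space is full. */
int ts_out(struct tuple_space *ts, const char *name, long value)
{
    assert(ts != NULL && name != NULL && strlen(name) < TS_NAME_LEN);
    for (int i = 0; i < TS_CAPACITY; i++) {
        if (!ts->slot[i].used) {
            ts->slot[i].used = 1;
            strcpy(ts->slot[i].name, name);
            ts->slot[i].value = value;
            return 0;
        }
    }
    return -1;
}

/* Index of a used slot whose name matches, or -1 if there is none. */
static int ts_find(const struct tuple_space *ts, const char *name)
{
    for (int i = 0; i < TS_CAPACITY; i++)
        if (ts->slot[i].used && strcmp(ts->slot[i].name, name) == 0)
            return i;
    return -1;
}

/* read (rd): copy a matching tuple's value without deleting the tuple. */
int ts_rd(const struct tuple_space *ts, const char *name, long *value)
{
    int i = ts_find(ts, name);
    if (i < 0)
        return -1;
    *value = ts->slot[i].value;
    return 0;
}

/* in: copy a matching tuple's value and remove the tuple from the space. */
int ts_in(struct tuple_space *ts, const char *name, long *value)
{
    int i = ts_find(ts, name);
    if (i < 0)
        return -1;
    *value = ts->slot[i].value;
    ts->slot[i].used = 0;
    return 0;
}
```

The difference between `ts_rd` and `ts_in` is the whole point. Several processes can repeatedly read a shared tuple, but only one of them can withdraw it with `in`.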

• Express

Express was developed by a group of researchers who started Parasoft Corporation. "It can be used to write parallel programs on a variety of parallel machines such as CM5, NCUBE, Intel iPSC/2 and iPSC/860 hypercubes, Intel Paragon and transputer arrays" [12]. Express can also run on workstations such as DEC, HP, IBM RS/6000, SGI and SUN. Ahmad [3] describes Express as supporting communication utilities such as "blocking and non-blocking communication among nodes, exchange, broadcast and collective communication such as reading and writing a vector". It also supports global communication such as concatenation, global reduction operations, synchronisation, etc. Express also "provides processors control, domain decomposition tools, parallel I/O, graphics and debugging and performance analyses tools" [3].

• Socket (BSD 4.x UNIX)

Sockets are commonly used as a mechanism for interprocess communication in the UNIX environment. Brown [13] defines a socket as an "endpoint for communication". It is the place where the application program and the transport provider meet. Sockets use pairs of system calls such as read() and write(), recv() and send(), etc. to transfer data. To handle several clients in parallel, a socket program can use the select() system call, which multiplexes between the messages being received on each client connection. Sockets have been used in many distributed applications.
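The multiplexing idea can be sketched with the POSIX `select()` call. This is a minimal sketch and not code from the paper. The helper names `first_readable` and `read_first_ready` are hypothetical, and plain file descriptors stand in for accepted client sockets, because `select()` treats both the same way.

```c
#include <assert.h>
#include <stddef.h>
#include <sys/select.h>
#include <sys/time.h>
#include <sys/types.h>
#include <unistd.h>

/* Block for up to timeout_ms until at least one descriptor in fds[0..n-1]
   is readable, as a server does across its client connections.
   Returns the index of the first ready descriptor, or -1 on timeout/error. */
int first_readable(const int *fds, int n, long timeout_ms)
{
    assert(fds != NULL && n > 0 && timeout_ms >= 0);
    fd_set readset;
    FD_ZERO(&readset);
    int maxfd = -1;
    for (int i = 0; i < n; i++) {
        assert(fds[i] >= 0 && fds[i] < FD_SETSIZE);
        FD_SET(fds[i], &readset);
        if (fds[i] > maxfd)
            maxfd = fds[i];
    }
    struct timeval tv;
    tv.tv_sec = timeout_ms / 1000;
    tv.tv_usec = (timeout_ms % 1000) * 1000;
    if (select(maxfd + 1, &readset, NULL, NULL, &tv) <= 0)
        return -1;                      /* 0 = timeout, -1 = error */
    for (int i = 0; i < n; i++)
        if (FD_ISSET(fds[i], &readset))
            return i;
    return -1;
}

/* Multiplex, then read() from whichever connection became ready first.
   Returns the byte count from read(), or -1 if nothing became ready. */
ssize_t read_first_ready(const int *fds, int n, long timeout_ms,
                         char *buf, size_t len)
{
    int i = first_readable(fds, n, timeout_ms);
    return i < 0 ? -1 : read(fds[i], buf, len);
}
```

Since any descriptor works, the sketch can be exercised with `pipe()` in place of real network connections.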

This section has introduced, in general terms, a few types of distributed programming environment that are currently used in distributed systems. Many similar software environments have been produced for distributed systems, and all of them try to exploit the potential power of diverse parallel and distributed hardware platforms. In this work, PVM and RPC have been selected as the experimental environments. PVM was selected because of its popularity in the high-performance scientific computing community, while RPC is, as claimed by Sun Microsystems, Inc., one of the powerful techniques for constructing distributed applications.

4. Design and Experimental Setup

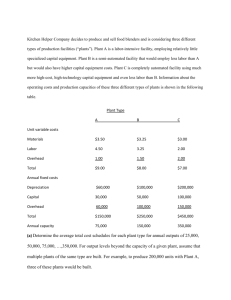

The test programs used are a broadcast operation, a prime number program and a matrix multiplication program. They were written in the C language and were run on an Ethernet-based network of SUN workstations. This section outlines the design of the three algorithms that are used to evaluate the performance of the distributed programming environments. For each one it covers the purpose of the application, the reason for using it, and its flow and implementation.

4.1 Broadcast Operation

One-to-many communication can be achieved through a broadcast operation (see Figure 1). This operation sends the same data to all connected computers in the network, with no processing involved.


The broadcast operation is used to evaluate the communication overhead. This evaluation is needed because, as mentioned by Taylor [20], communication overhead can have a big impact on the performance of a distributed application. For instance, when the communication overhead is high, the performance of the distributed application often drops. Communication overhead and application performance are therefore closely related: the higher the overhead, the lower the performance.

In this work, the communication overhead of PVM and RPC is evaluated by broadcasting data of different sizes to all the computers. The response time is measured, and a graph showing the resulting overhead is produced.

The broadcast operation in the PVM environment is implemented using the pvm_bcast() routine. This routine is provided by PVM and can be used to broadcast a message to all members of a specified group.

The response time, i.e. the communication overhead, is measured using the gettimeofday() function, which records the beginning and ending times of the broadcast operation. The beginning time is taken before the pvm_bcast operation is executed. The ending time is taken as soon as the main program has received the responses from the worker tasks. The actual time is calculated by subtracting the beginning time from the ending time. The time obtained is the communication overhead that occurs during the broadcast operation.
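The timing step can be sketched as follows. This is a minimal sketch that assumes timestamps are taken with POSIX `gettimeofday()`, as the text describes. The helper names `elapsed_ms` and `time_operation_ms` are hypothetical, and the `op` callback stands in for whatever is being timed.

```c
#include <assert.h>
#include <stddef.h>
#include <sys/time.h>

/* Elapsed wall-clock time, in milliseconds, between two gettimeofday()
   samples: (end - begin), combining the seconds and microseconds fields. */
double elapsed_ms(const struct timeval *begin, const struct timeval *end)
{
    assert(begin != NULL && end != NULL);
    double sec  = (double)(end->tv_sec - begin->tv_sec);
    double usec = (double)(end->tv_usec - begin->tv_usec);
    return sec * 1000.0 + usec / 1000.0;
}

/* Time one operation the way the text describes: take the beginning time,
   run the operation (for example, broadcast and then wait for every reply),
   take the ending time, and subtract. `op` is a hypothetical callback. */
double time_operation_ms(void (*op)(void *), void *arg)
{
    struct timeval begin, end;
    gettimeofday(&begin, NULL);   /* beginning time */
    op(arg);                      /* operation under test */
    gettimeofday(&end, NULL);     /* ending time */
    return elapsed_ms(&begin, &end);
}
```

In the PVM program, `op` would wrap the `pvm_bcast()` call and the loop that receives the workers' replies. In the RPC program it would wrap the `rpc_broadcast()` call. On current systems `clock_gettime(CLOCK_MONOTONIC, ...)` is usually preferred, because `gettimeofday()` follows the wall clock and can jump if the system time is adjusted.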

The broadcast operation has been implemented in the RPC environment using the rpc_broadcast() function. This built-in RPC function broadcasts the message to all the server programs resident on different hosts. The response time, or communication overhead, is measured in this environment using the gettimeofday() function. The beginning time is taken before the rpc_broadcast() call is issued. The ending time is taken after the main program has received the responses from the server programs. The actual response time is calculated by subtracting the beginning time from the ending time.

4.2 Prime Number

In The Penguin Dictionary of Mathematics [27], a prime number is defined as "a whole number larger than 1 that is divisible only by 1 and itself". Counting prime numbers is a well-known algorithm in distributed environments: it finds the total number of primes in a given range. Computation overhead in a distributed system occurs during the distribution, computation and collection of the data. This overhead must be evaluated to justify the effectiveness of distributed processing in a distributed programming environment. In this work, the prime number algorithm is selected as an experimental program for evaluating the computation overhead. It involves all of the operations described above, and it is very computationally intensive for large ranges of values. The prime number algorithm has also been used by many authors, such as Quinn [21], Chalmers and Tidmus [6] and Brown [13], when describing the process of building a distributed application.
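The sequential kernel that each worker runs can be sketched as follows. This minimal sketch assumes trial division, since the text does not say which primality test the programs used. The names `is_prime` and `count_primes` are hypothetical.

```c
#include <assert.h>

/* Trial division: n is prime if n > 1 and no odd d with d*d <= n divides it.
   The test d <= n / d is used instead of d * d <= n to avoid overflow. */
int is_prime(long n)
{
    if (n < 2)
        return 0;
    if (n % 2 == 0)
        return n == 2;
    for (long d = 3; d <= n / d; d += 2)
        if (n % d == 0)
            return 0;
    return 1;
}

/* Count the primes in the closed range [lo, hi]. */
long count_primes(long lo, long hi)
{
    assert(lo <= hi);
    long count = 0;
    for (long n = lo; n <= hi; n++)
        count += is_prime(n);
    return count;
}
```

With this kernel, the counts for different sub-ranges simply add up. That is why the problem splits cleanly into distribution, computation and collection steps.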

In this work, the computation overhead of PVM and RPC is evaluated using the prime number algorithm on different numbers of servers for different ranges of numbers. The computation time is measured, and a graph showing the computation overhead is produced.
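The text does not say how a range is divided among the servers. Here is a minimal sketch that assumes an equal, contiguous split. The function name `partition_range` is hypothetical.

```c
#include <assert.h>

/* Split [lo, hi] into nservers contiguous sub-ranges of near-equal length.
   Server k gets starts[k]..ends[k]; the first (length % nservers) servers
   get one extra number. If nservers exceeds the range length, the surplus
   servers get an empty sub-range (ends[k] == starts[k] - 1). */
void partition_range(long lo, long hi, int nservers, long *starts, long *ends)
{
    assert(lo <= hi && nservers > 0 && starts != 0 && ends != 0);
    long total = hi - lo + 1;
    long base = total / nservers, extra = total % nservers;
    long next = lo;
    for (int k = 0; k < nservers; k++) {
        long len = base + (k < extra ? 1 : 0);
        starts[k] = next;
        ends[k] = next + len - 1;
        next += len;
    }
}
```

An equal split gives sub-ranges of equal length, not equal work. Trial division costs more for larger numbers, so the server holding the highest sub-range tends to finish last. An interleaved assignment, where server k takes every nservers-th number, would balance the load better.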

The computation overhead in this program is measured with the same gettimeofday() function as before. The beginning time is taken before the main program starts spawning the worker tasks. This is because spawning the worker tasks is part of the distribution step, one of the three operations that contribute to the computation overhead. The timer is stopped after all the results have been received. As before, the actual time is calculated by subtracting the beginning time from the ending time. The time obtained is the computation overhead that occurs while finding and counting the prime numbers.

The prime number program has been implemented in the RPC environment using a multithreaded client technique. In this technique, the client program creates multiple threads so that multiple remote calls can proceed concurrently. The overhead that occurs during the prime number program is measured using the gettimeofday() function. The timer starts before the main program creates the threads and stops after all the results have been received. The actual time, i.e. the overhead, is calculated as before.
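The multithreaded-client pattern can be sketched with POSIX threads. This is a minimal sketch under stated assumptions. The remote call is replaced by a local, hypothetical `worker` function so that the example runs on one machine; in the real client, each thread would call the rpcgen-generated stub for its server. The names `struct job`, `worker` and `threaded_prime_count` are illustrative only.

```c
#include <assert.h>
#include <pthread.h>

#define MAX_THREADS 64

/* One job per server: a sub-range and the slot where its result lands. */
struct job { long lo, hi, result; };

/* Stand-in for the remote procedure: in the RPC client this body would be
   a call through the generated stub to one server; here it counts the
   primes in [lo, hi] locally by trial division. */
static void *worker(void *arg)
{
    struct job *j = arg;
    long count = 0;
    for (long n = j->lo; n <= j->hi; n++) {
        int prime = n >= 2;
        for (long d = 2; prime && d <= n / d; d++)
            if (n % d == 0)
                prime = 0;
        count += prime;
    }
    j->result = count;
    return NULL;
}

/* Distribute [lo, hi] over nthreads threads, let them run concurrently,
   then collect: join every thread and sum the partial counts. */
long threaded_prime_count(long lo, long hi, int nthreads)
{
    assert(lo <= hi && nthreads > 0 && nthreads <= MAX_THREADS);
    pthread_t tid[MAX_THREADS];
    struct job jobs[MAX_THREADS];
    long total = hi - lo + 1, next = lo;
    for (int k = 0; k < nthreads; k++) {
        long len = total / nthreads + (k < total % nthreads ? 1 : 0);
        jobs[k].lo = next;
        jobs[k].hi = next + len - 1;   /* empty sub-range when len == 0 */
        jobs[k].result = 0;
        next += len;
        int rc = pthread_create(&tid[k], NULL, worker, &jobs[k]);
        assert(rc == 0);
    }
    long sum = 0;
    for (int k = 0; k < nthreads; k++) {
        pthread_join(tid[k], NULL);
        sum += jobs[k].result;
    }
    return sum;
}
```

Each thread writes only to its own `jobs[k]` slot, and the main thread reads the slots only after `pthread_join()`. The collection step therefore needs no mutex.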

4.3 Matrix Multiplication

Matrix multiplication is a fundamental component of many numerical and non-numerical algorithms. It multiplies the rows of one matrix by the columns of another. The definition of matrix multiplication [26] is given below.

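If $A = [a_{ij}]$ is an $m \times n$ matrix and $B = [b_{ij}]$ is an $n \times p$ matrix, then the product of $A$ and $B$, $AB = C = [c_{ij}]$, is an $m \times p$ matrix defined by

$$c_{ij} = a_{i1}b_{1j} + a_{i2}b_{2j} + \cdots + a_{in}b_{nj} = \sum_{k=1}^{n} a_{ik}\,b_{kj}, \qquad i = 1, 2, \ldots, m, \quad j = 1, 2, \ldots, p.$$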

Matrix multiplication is a suitable experimental algorithm for a distributed environment because its cost grows rapidly with matrix size: multiplying two $n \times n$ matrices takes $n^3$ multiply-add operations. It can also be used to evaluate the computation overhead, because its operation involves distributing, computing and collecting data.
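The computing step can be sketched as a row-block kernel. Here a worker computes only the rows of C that it was given. This is a minimal sketch that assumes row-major `double` arrays and a decomposition in which each worker receives a block of rows of A plus all of B. The text does not specify this decomposition, and the name `multiply_rows` is hypothetical.

```c
#include <assert.h>

/* Compute rows [row_lo, row_hi) of C = A * B, where A is m x n, B is n x p
   and C is m x p, all stored row-major. Implements c_ij = sum_k a_ik * b_kj. */
void multiply_rows(const double *A, const double *B, double *C,
                   int m, int n, int p, int row_lo, int row_hi)
{
    assert(A && B && C && n > 0 && p > 0);
    assert(0 <= row_lo && row_lo <= row_hi && row_hi <= m);
    for (int i = row_lo; i < row_hi; i++) {
        for (int j = 0; j < p; j++) {
            double sum = 0.0;
            for (int k = 0; k < n; k++)
                sum += A[i * n + k] * B[k * p + j];   /* a_ik * b_kj */
            C[i * p + j] = sum;                        /* c_ij */
        }
    }
}
```

Running the kernel on disjoint row blocks and gathering the blocks gives the same C as a single call over all rows. The collection step only has to place each returned block at the right offset.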

In addition to the prime number algorithm, the matrix multiplication algorithm is used in this work to evaluate the computation overhead of PVM and RPC. The experiment performed with this algorithm is similar to the one performed with the prime number algorithm.

The computation overhead is measured using the gettimeofday() function. As before, the timer starts before the main program begins spawning the worker tasks and stops after all the results have been received. The actual time is the difference between the ending time and the beginning time. The time obtained from this experiment shows the computation overhead produced by the matrix multiplication program in the PVM environment.

In the RPC environment, matrix multiplication is implemented through a combination of the broadcast operation and the multithreaded client. The computation overhead is measured using gettimeofday(). In this program, the beginning time is taken before the main program executes the rpc_broadcast() operation. The timer is stopped after all the client threads have returned their results to the main program. The actual time is calculated from the difference between the ending time and the beginning time. This time is the computation overhead produced by the matrix multiplication program in the RPC environment.

5. Experimental Results

This section explains the results of the experiments performed to evaluate the performance of PVM and RPC. The explanations focus on the trends in the generated graphs. Two experiments have been conducted. The first focuses on communication performance, where the communication overhead is evaluated using the broadcast operation. The second uses application benchmarks, namely the prime number and matrix multiplication algorithms, to evaluate the computation overhead.


5.1 Communication Performance

As mentioned before, communication overhead can have a big impact on the performance of a distributed programming environment. This work therefore evaluates the impact of communication overhead in the PVM and RPC environments. Ahmad [3] also found that "the size of the communication data had a great impact" on the performance of the software environments that he evaluated. In this work, communication performance is evaluated with the broadcast operation program. Both environments use the same program design to evaluate the communication overhead, and the results of this experiment are used to compare the performance of the two environments.

5.1.1 Broadcast Operation Experiment Result

A broadcast operation sends the same data to more than one node. It can be performed between the host and the nodes, or from one node to multiple nodes. According to Ahmad [3], this operation is "one of the frequently used primitive and is, therefore, important for performance comparison". The following graphs give the results of the experiments performed on 5, 10 and 15 SUN workstations to evaluate the performance of PVM and RPC. Graphs are used to make the performance difference between the two environments easier to see. The graphs for the broadcast operation experiment are shown in Figure 2, Figure 3 and Figure 4.

[Figure 2 plots not reproduced: Broadcast Operation (5 SUN workstations), plotted against size of array]

Figure 2 (a) Small message; (b) Large message; (c) Very large message

Figure 2 (a, b, c): Graph for broadcast operation experiment on 5 SUN workstations

As Figure 2 shows, for small messages the broadcast operation of RPC is about 1.8 times slower than that of PVM. For very large messages, however, the result is reversed: the broadcast operation of PVM becomes very slow compared to RPC, about 1.8 times slower.

[Figure 3 plots not reproduced: Broadcast Operation (10 SUN workstations), plotted against size of array]

Figure 3 (a) Small message; (b) Large message; (c) Very large message

Figure 3 (a, b, c): Graph for broadcast operation experiment on 10 SUN workstations

Meanwhile, Figure 3 shows that for small messages the broadcast operation in the PVM environment is slower than in RPC, by a factor of about 1.1. For very large messages the performance of the PVM broadcast becomes even worse, about 3.5 times slower than RPC.

[Figure 4 plots not reproduced: Broadcast Operation (15 SUN workstations), plotted against size of array]

Figure 4 (a) Small message; (b) Large message; (c) Very large message

Figure 4 (a, b, c): Graph for broadcast operation experiment on 15 SUN workstations

The communication overhead experiment was then repeated on 15 SUN workstations, and the results are shown in Figure 4. For small messages, the broadcast operation of PVM is about 1.5 times slower than RPC, and for very large messages it is about 6.5 times slower. These observations show that the communication overhead in PVM increases dramatically as the number of workstations increases, whereas in the RPC environment it increases only gradually. For example, for small messages the communication overhead in the PVM environment is about 50 msec on 5 SUN workstations and about 178.3 msec on 15 SUN workstations. By contrast, the communication overhead in the RPC environment for small messages is about 87.3 msec on 5 SUN workstations and about 116.1 msec on 15 SUN workstations.
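The small-message figures quoted above can be condensed into growth factors. The symbols $T_{\mathrm{PVM}}(N)$ and $T_{\mathrm{RPC}}(N)$ are introduced here only as shorthand for the small-message broadcast time on $N$ workstations, in msec. The per-workstation slopes assume roughly linear growth between 5 and 15 workstations, which the measurements do not establish.

$$\frac{T_{\mathrm{PVM}}(15)}{T_{\mathrm{PVM}}(5)} = \frac{178.3}{50} \approx 3.57, \qquad \frac{T_{\mathrm{RPC}}(15)}{T_{\mathrm{RPC}}(5)} = \frac{116.1}{87.3} \approx 1.33$$

$$\frac{178.3 - 50}{15 - 5} \approx 12.8 \text{ msec per added workstation (PVM)}, \qquad \frac{116.1 - 87.3}{15 - 5} \approx 2.9 \text{ msec per added workstation (RPC)}$$

The same numbers also reproduce the small-message ratios stated in the text: $87.3/50 \approx 1.75$ (RPC slower on 5 workstations) and $178.3/116.1 \approx 1.54$ (PVM slower on 15 workstations).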

Ahmad [3] states that "the broadcast operation of PVM is inefficient", and the results obtained from the experiments in this project agree with that statement. In conclusion, the PVM environment incurs high communication overhead compared to other software environments such as RPC. Ideally, PVM should offer low communication overhead so that it can support the development of high-performance distributed applications.

5.2 An Application Benchmark

To compare the performance of PVM and RPC on real applications, two application benchmarks have been implemented. These applications are used to evaluate the computation overhead that occurs during the distribution, computation and collection operations performed by the application. This is the second important overhead that must be evaluated when comparing the performance of software environments. In this work, the prime number and matrix multiplication programs are used as the benchmark applications. These programs have been implemented in both environments, and the results of the experiments are used to compare their performance.

5.2.1 Prime Number Experiment Result

The prime number program finds the total number of primes in a given range. It consists of three main operations: distribution of the range, computation of the primes and collection of the results. All of these operations contribute to the computation overhead being evaluated. The following graphs give the results of the experiments performed on a cluster of SUN workstations to evaluate the performance of PVM and RPC.

[Figure 5 plots not reproduced: Prime Number, PVM and RPC plotted against number of servers, for the ranges 1 - 400000 (a), 1 - 800000 (b) and 1 - 1200000 (c)]

Figure 5 (a) Small range; (b) Large range; (c) Very large range

Figure 5 (a, b, c): Graph for prime number experiment

As can be seen, the performance of RPC is much better than that of PVM: the prime number program implemented in the PVM environment performed poorly at all the ranges tested.

5.2.2 Matrix Multiplication Experiment Result

This program involves three main operations: distribution of matrix A and matrix B, computation (multiplication of matrices A and B) and collection of the results. These operations are used to evaluate the computation overhead that occurs in the PVM and RPC environments. The following graph gives the results of the experiment.

[Figure 6 plot not reproduced: Matrix Multiplication, plotted against number of servers]

Figure 6: Graph for matrix multiplication experiment

Figure 6 shows the processing time for multiplying 120x120 matrices in the PVM and RPC environments. As the number of servers increases, the performance of matrix multiplication in the RPC environment improves. By contrast, the performance of matrix multiplication in the PVM environment decreases as the number of servers increases. One reason for this behaviour in PVM is that the communication overhead outweighs the computation, because each of the many servers is given only a very small amount of data to process.
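The granularity argument can be made concrete with a rough count. The decomposition used here is an assumption that the text does not state: with $n = 120$ and $p$ servers, each server receives $n/p$ rows of A and all of B. $W(p)$ (multiply-adds per server) and $D(p)$ (matrix elements received per server) are hypothetical symbols introduced only for this estimate.

$$W(p) = \frac{n}{p}\,n^2, \qquad D(p) = \frac{n}{p}\,n + n^2$$

$$W(1) = 1{,}728{,}000,\quad D(1) = 28{,}800,\quad \frac{W}{D} = 60; \qquad W(12) = 144{,}000,\quad D(12) = 15{,}600,\quad \frac{W}{D} \approx 9.2$$

Under this assumption, going from 1 to 12 servers cuts each server's arithmetic by a factor of 12. Its incoming data, however, falls by less than a factor of 2, so message costs weigh more and more heavily as servers are added.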

The matrix multiplication experiment could not be extended further, because the program cannot process matrices larger than 120x120. Further work could improve the program so that it can process bigger matrices.

As discussed, the broadcast operation experiment leads to the conclusion that PVM has higher communication overhead than RPC. However, the results were not consistent from run to run. This is because the laboratory used for the experiments is an open-access laboratory, so users at remote sites can log in to any of these computers and use any available resource. To overcome this problem, the experiments should be run in an isolated laboratory, or the tests should be repeated many more times to obtain consistent results. In this work, each experiment is run 10 times and the average result is taken. This is to ensure that the results obtained are valid and can be used to evaluate the performance of the environments.
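The averaging step can be sketched as follows. This minimal sketch assumes that the ten timings of one configuration are stored in an array, and the helper names are hypothetical. The sample variance is an optional extra that the text does not report; it would quantify how much the runs disagreed in the open-access laboratory.

```c
#include <assert.h>
#include <stddef.h>

/* Mean of n repeated timing runs, in msec. */
double mean_ms(const double *runs, size_t n)
{
    assert(runs != NULL && n > 0);
    double sum = 0.0;
    for (size_t i = 0; i < n; i++)
        sum += runs[i];
    return sum / (double)n;
}

/* Sample variance (n - 1 denominator), in msec^2: a measure of run-to-run
   spread, useful when other users share the machines being timed. */
double sample_variance_ms2(const double *runs, size_t n)
{
    assert(runs != NULL && n > 1);
    double m = mean_ms(runs, n), ss = 0.0;
    for (size_t i = 0; i < n; i++)
        ss += (runs[i] - m) * (runs[i] - m);
    return ss / (double)(n - 1);
}
```

Reporting the spread alongside the mean would show whether a difference between PVM and RPC is larger than the noise introduced by other users of the laboratory.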

6. Conclusion and Recommendation

In recent years, studies have shown that clusters of workstations have the potential to solve very large-scale problems. A number of programming environments for such platforms have recently emerged. They can simulate a cluster of workstations as a virtual parallel computer and can perform communication across multiple homogeneous and heterogeneous parallel machines. However, the overhead incurred in these environments has a big impact on their performance.

In this work, an experiment comparing the performance of PVM and RPC has been conducted. The performance study was carried out on a cluster of SUN workstations connected by an Ethernet network. In summary, the PVM environment has a higher communication overhead than the RPC environment. The communication overhead of the PVM environment grew as the number of workstations increased, which indirectly affects the overall performance of PVM operations. By contrast, the communication overhead in the RPC environment increased only gradually as workstations were added. The results also clearly show that the computation overhead of the RPC environment is low compared to the PVM environment. This conclusion is based on the experiments conducted with the prime number and matrix multiplication applications.

Further work is recommended to improve the performance evaluation of the environments. Instead of being limited to a cluster of SUN workstations connected by Ethernet, the experiments could be run on other platforms, such as an Intel parallel computer or a cluster of HP workstations. Other languages, such as FORTRAN, could also be used instead of C. This work uses only three applications: the broadcast operation, prime number and matrix multiplication algorithms. To make the evaluation more reliable, more applications are recommended, such as one-to-one communication, exchange operation, global communication, complete exchange and Gaussian elimination. The coding of the application programs should also be optimised. These steps would ensure that the performance of the environments is evaluated thoroughly.

References

[1] Bell, G. Ultracomputers: A Teraflop Before Its Time. Communications of the ACM, 35(8), pp. 27-47, 1992.

[2] Beguelin, A., Dongarra, J., Geist, A., Manchek, R., Sunderam, V. Paradigms and Tools for Heterogeneous Network Computing. 1992.

[3] Ahmad, I. Express versus PVM: A Performance Comparison. Journal of Parallel Computing, 23(6), pp. 783-812, 1997.

[4] Geist, A., Beguelin, A., Dongarra, J., Jiang, W., Manchek, R. and Sunderam, V. PVM 3 User's Guide and Reference Manual. Tennessee, Oak Ridge National Laboratory, 1994.

[5] Sun Microsystems, Inc. ONC+ Developer's Guide. California, SunSoft, 1995.

[6] Chalmers, A. and Tidmus, T. Practical Parallel Processing: An Introduction to Problem Solving in Parallel. London, International Thomson Publishing Inc., 1996.

[7] Coulouris, G., Dollimore, J., Kindberg, T. Distributed Systems: Concepts and Design. Reading, Addison-Wesley, 1994.

[8] Tanenbaum, A. Distributed Operating Systems. New Jersey, Prentice Hall International, Inc., 1995.

[9] Mullender, S. Distributed Systems. Reading, Addison-Wesley, 1995.

[10] Sloman, M. and Kramer, J. Distributed Systems and Computer Networks. London, Prentice Hall International, Inc., 1987.

[11] Mullender, S. Distributed Systems. Reading, Addison-Wesley, 1989.

[12] Parasoft Corporation. Express Introductory Guide, version 3.2. 1992.

[13] Brown, C. Distributed Programming. London, Prentice Hall International, Inc.

[14] Zomaya, A. Y. Parallel Computing: Paradigms and Applications. London, International Thomson Computer Press, 1996.

[15] Fatoohi, R. Performance Evaluation of Communication Networks for Distributed Computing. Proceedings of International Conference on Computer Communication and Networks, pp. 456-459, 1995.

[16] Janusz, K. PVM: Parallel Virtual Machine, A Users' Guide and Tutorial for Networked Parallel Computing. MIT Press, 1994.

[17] Liu, Y. and Hoang, D. D. OSI RPC Model and Protocol. Journal of Computer Communication, Vol. 17, No. 1, pp. 53-65, 1994.

[18] Martin, T. P., Macleod, I. A. and Nordin, B. Remote Procedure Call Facility for a PC Environment. Journal of Computer Communication, Vol. 12, No. 1, pp. 31-39, 1989.

[19] Rauch, W. Distributed Open Systems Engineering: How to Plan and Develop Client/Server Systems. New York, Wiley Computer Publishing, 1991.

[20] Taylor, S. Parallel Logic Programming Techniques. London, Prentice-Hall International, Inc., 1989.

[21] Quinn, M. J. Parallel Computing: Theory and Practice. New York, McGraw-Hill, Inc., 1994.

[22] Geist, A. and Sunderam, V. Network-Based Concurrent Computing on the PVM System. Journal of Concurrency: Practice and Experience, No. 4, Part 4, pp. 293-311, 1992.

[23] Krishnamurthy, E. V. Parallel Processing: Principles and Practice. Reading, Addison-Wesley, 1989.

[24] Stevens, W. R. UNIX Network Programming. London, Prentice-Hall International, Inc., 1990.

[25] Michael, C. and Denis, T. Parallel Algorithms and Architecture. London, International Thomson Computer Press, 1995.

[26] Kolman, B. and Hill, D. R. Elementary Linear Algebra, 7th Edition. Prentice Hall International, Inc., 2000.

[27] The Penguin Dictionary of Mathematics, 2nd Edition. Edited by David Nelson. Penguin Books Ltd., 1998.