
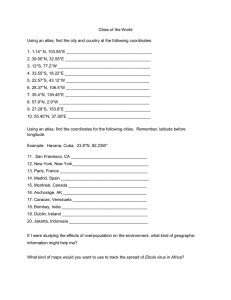


New techniques for ATLAS and GridPP to utilise modern computer architectures

Proposers (names and organisations): Dr. Philip Clark (Edinburgh) & Prof. Roger Jones (Lancaster)
Contact: P.J.Clark@ed.ac.uk
Start Date: 1st February 2011
Duration: 6 months
Total Value: £48k

Synopsis: Recent techniques developed by the computational science community to make effective use of General Purpose Graphics Processing Units (GPGPUs) and virtualisation have not yet been adopted by experimental particle physics. Various scientists in the community are now starting to realise their importance for future computing. This is a unique time to start a theme that allows the eSI to help foster this new area for us. The other aspect is that standard CPU architecture has moved to the many-core paradigm, requiring substantial changes to our programming model. This project will work in close collaboration with the large eScience collaboration GridPP (Grid for Particle Physics), in tandem with the ATLAS upgrade community and identified industrial experts in companies, and will work closely with EPCC and the lattice QCD community, who have substantial experience in parallel programming and particularly in the recent use of OpenMP directive-style GPU programming.

ATLAS is a particle physics experiment at the Large Hadron Collider at CERN. ATLAS aims to study the origin of mass, extra dimensions of space, microscopic black holes, and evidence for dark matter candidates in the universe.

1. Dr Philip J. Clark (primary contact) is an expert in simulations and particle physics analysis, with over a decade of experience from the BaBar experiment at Stanford in California. He has also pioneered the idea of using SRM (storage resource management) technology in particle physics within the Grid for Particle Physics collaboration. He is the chair of the ScotGrid Tier2 compute and data centre (Durham, Edinburgh and Glasgow). He holds a Professor Partnership award from NVIDIA and is leading the ATLAS group at Edinburgh.

Prof. Roger Jones is a GridPP project management board member and represents ATLAS in the ongoing GridPP eScience projects to deploy the required Grid infrastructure for Particle Physics in the UK and to enable the experiment analysis on it. He was the Deputy Chair of the GridPP1 Experiments Board, then became the GridPP2 Applications Co-ordinator, co-ordinating the experiment Grid-enablement across the HEP sector (~20 FTEs were under his area, ¼ of the project). He was a principal investigator on the £4.5M ESLEA cross-council project, exploiting high bandwidth networking for science.

2. Relation to e-Science.

1. Please describe the application areas that would benefit from the outcomes of this theme.

• Maximum likelihood fitting techniques for rare particle searches at the LHC. This is predominantly particle physics, but the technique is used much further afield.
• Matrix element analysis. This technique is heavily utilised at the Tevatron collider at Fermilab, but is limited by CPU time. This would be a purely particle physics application.
• The work would also feed into Geant4, a software toolkit heavily used by space, medical and industrial applications to model particle and radiation interactions within materials.
• A variety of other applications could benefit substantially from the use of GPUs. Recently we have been helping the SMC (Scottish Microelectronics Centre) with calculations for active holographic displays.

2. Please list the technical areas that would be engaged and developed as a result of the theme.

   1. Identified experts from NVIDIA, Intel and DELL for the future computing and GPU aspects.
   2. Local particle physics theory colleagues and EPCC.
   3. Experts from the GridPP collaboration in Glasgow & Lancaster (to enable GPU computing on the Grid) and for storage management development (future computing workshops).
   4. ECDF local technical support.

3. Are there other similar projects to the proposed theme?
What would be their relationship to/involvement in this programme?

There is some seed-corn effort in GridPP4 for this future cloud-computing-type work, with which we will interact closely. There is also an ATLAS upgrade work package focusing on simulation and computing that has some aspects of novel computing; Dr. Philip Clark is task-managing several aspects of this project and Prof. Roger Jones is the work package and area manager. There is also a small group at UT Arlington, who recently won an NSF grant, focusing on GPU applications. We have collaborated with them successfully in the past and would invite them to Edinburgh.

4. Identify a focus that will ensure the effort is most likely to be productive, i.e. a specific test application domain/current unsolved research challenge.

We mentioned earlier matrix-element method analyses for Higgs searches. These were originally developed for top-quark measurements at the Tevatron. They exploit knowledge of the physics matrix element, convolved with the detector response, to use all measurable event properties to weight signal versus background. There are two different applications: one is signal/background discrimination and the other is maximum-likelihood fitting, which directly extracts the signal and background yields and/or model parameters such as particle masses. The required Monte Carlo integrals result from convolving the matrix element with the detector resolution function for the observed kinematics of the final state, and integrating over all unconstrained regions of the phase space (e.g. due to unobserved particles). Depending on the process, such integrals take from minutes to hours per event, making complex fits impractical for analysis.

Monte Carlo (MC) integration, key to many particle physics calculations, involves evaluating the integrand function a large number of times using random values and then summing the results.
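The Monte Carlo integration procedure described above can be illustrated with a minimal CPU-only sketch. It is not code from the proposal or from ATLAS: the function name `mc_integrate`, the uniform sampling and the one-dimensional test integrand are all assumptions chosen for illustration. The three commented steps (random numbers, integrand evaluation, summation) are independent per sample, which is the property a parallel implementation would exploit.

```python
import math
import random


def mc_integrate(f, a, b, n_samples, seed=0):
    """Estimate the integral of f over [a, b] by uniform Monte Carlo sampling.

    Hypothetical helper for illustration only. Returns (estimate, error),
    where error is the one-sigma statistical uncertainty of the estimate.
    """
    rng = random.Random(seed)

    # Step 1: generate the random sample points.
    xs = [rng.uniform(a, b) for _ in range(n_samples)]

    # Step 2: evaluate the integrand at every point (independent per point).
    values = [f(x) for x in xs]

    # Step 3: sum the results (a reduction) and form the estimate.
    mean = math.fsum(values) / n_samples
    variance = math.fsum((v - mean) ** 2 for v in values) / (n_samples - 1)

    volume = b - a
    estimate = volume * mean
    error = volume * math.sqrt(variance / n_samples)
    return estimate, error


if __name__ == "__main__":
    # The exact value of the integral of sin(x) over [0, pi] is 2.
    est, err = mc_integrate(math.sin, 0.0, math.pi, 100_000)
    print(f"estimate = {est:.4f} +/- {err:.4f}")
```

The statistical error falls only as one over the square root of the number of samples, which is why the number of integrand evaluations, and hence the run time, grows quickly with the required precision.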
This is ideal for GPU implementation, as it can be broken into three stages and parallelised: random number generation, function evaluation and summing. This can be optimised using GPU streaming technology to hide the I/O latency. Using the approaches we prototyped for the ATLAS level 2 trigger, we will dramatically improve Monte Carlo integration time and disseminate the knowledge. Shown below is a test case example where we parallelised the numerical integration required to simulate particles passing through a magnetic field.

5. Please list any people who have agreed to actively collaborate.

There are a large number of people we are starting to work with. These are some of the main contacts.

Paolo Calafiura: ATLAS software chief architect (Berkeley)
Sverre Jarp: Intel Openlab leader (CERN)
Amir Farbin: Physics analysis tools convenor, UT Arlington
Tim Lanfear: NVIDIA
David Levinthal: Intel
Roger Geoff George Jones: Dell, Dell

6. Sketch of who is probably working in the area, and/or might be interested

UK and USA ATLAS collaborations (probably about 10-20 people will work in this area). In the UK the main universities are Oxford, Cambridge, Sheffield, Liverpool and Lancaster, with 1-3 people in each.

7. Identify the current key research challenge(s) in the area

Experimental particle physics applications are predominantly written in C++, which embraces complex data structures and is inherently type unsafe. This makes the code particularly difficult to parallelise, and the very large software projects within ATLAS will be extremely difficult to port to new architectures. Currently we are bridging to new devices by using Linux operating system forking and copy-on-write shared memory. However, this will not scale as the level of parallelism increases with the number of compute cores.
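The fork and copy-on-write bridging approach mentioned above can be illustrated with a minimal sketch, assuming a Linux (or other POSIX) system. It is not ATLAS code: the function `parallel_sum_with_fork` and the toy summing workload are hypothetical. The parent loads the data once, and each forked worker reads its share of the parent's memory instead of receiving its own copy.

```python
import os
import struct
from array import array


def parallel_sum_with_fork(data, n_workers=4):
    """Sum an array of doubles using forked workers (POSIX only).

    Hypothetical illustration. The workers only read `data`, so the kernel
    can share the pages holding it copy-on-write instead of duplicating
    them per process. (CPython's own bookkeeping may still touch some pages.)
    """
    chunk = (len(data) + n_workers - 1) // n_workers
    workers = []
    for i in range(n_workers):
        read_fd, write_fd = os.pipe()
        pid = os.fork()
        if pid == 0:
            # Child: compute a partial sum and send it back through the pipe.
            status = 1
            try:
                os.close(read_fd)
                partial = float(sum(data[i * chunk:(i + 1) * chunk]))
                os.write(write_fd, struct.pack("d", partial))
                os.close(write_fd)
                status = 0
            finally:
                # Leave immediately so the child never runs the parent's code.
                os._exit(status)
        # Parent: keep only the read end and remember the worker.
        os.close(write_fd)
        workers.append((pid, read_fd))

    total = 0.0
    for pid, read_fd in workers:
        total += struct.unpack("d", os.read(read_fd, 8))[0]
        os.close(read_fd)
        os.waitpid(pid, 0)
    return total


if __name__ == "__main__":
    values = array("d", range(1_000_000))
    print(parallel_sum_with_fork(values, n_workers=4))
```

Each worker here is a full process with its own interpreter state, which hints at why this approach becomes costly as the number of cores grows.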
New ideas will be required, either breaking the event up into different pieces for parallelisation or some other threaded design to ensure the compute pipelines are sufficiently filled. Hyper-threading on recent Intel CPUs is another bridging possibility to alleviate the problem (inefficient use of the devices). Lastly, accelerators (GPUs) for time-consuming algorithms would, if successful, be a major breakthrough in the field and give us a lot of visibility (which has already started). The other major challenge is that compute performance is limited these days by power costs, memory access and instruction-level parallelism. However, the NVIDIA roadmap for future GPU devices takes all of this into consideration and looks extremely exciting.

8. What are the plausible outcomes (deliverables) from the theme? Journal papers, books, reports? Will they be entirely theoretical, or will there be some experiments and/or software produced?

   1. Package releases with parallel code and acceleration examples and recipes
   2. ATLAS public note (similar to a publication)
   3. Later analysis publications with LHC data

9. Sketch of the kind of events (focus/scope) proposed, where they would be held and who would participate.

   1. We would likely host 2 international workshops and, in addition, bring dedicated people over for the week immediately before or after each workshop (to cut travel costs and be more efficient for the researchers).
   2. We would host 2 UK meetings to bring together our colleagues in between the above-mentioned workshops.

10. What difference will be generated by running the theme for 6 or 12 months?

Twelve months would allow far more time for us to establish the project. However, we think six months is an excellent start to the programme, and it could run into the ATLAS upgrade project if more funding is not available. This would then ensure the longevity of the work far beyond the end date of the theme.

11. Is the topic of the theme so specific that it can really all be "tied up" in 6 or 12 months' time, or should there be some follow-on? If so, how might the follow-on be funded? (e.g., some of the people that have been active in the theme might make a proposal to EPSRC).

Six months will only be enough to start the theme in earnest. However, we expect to utilise STFC funding following the end date of the project. We may also apply for additional STFC funding to support a generic (cross-experiment) grant to deploy the ideas further. This was supported and encouraged by the STFC Project Peer Review Panel (PPRP).

12. Are there opportunities for co-funding from other sources?

Dr. Philip Clark has been awarded a Professor Partnership with NVIDIA, which contributed $25,000 and pre-release hardware for GPU development for particle physics research. We will use this to help support the theme further, and pre-release hardware has already been made available to both Edinburgh and Lancaster via this partnership.

13. Please provide a high level project plan with milestones and the resources being applied for.

We plan to run a kick-off UK meeting in February, followed as soon as possible by the first international workshop, then an intermediate UK meeting, followed by a second international workshop. To ensure attendance at the first one, given the short notice, we will try to schedule it just before or just after one of the European ATLAS software and computing weeks, for the convenience of the experts. We may use one of the UK meetings as a training workshop with a combination of current ATLAS and GridPP software utilities and applications, followed by an intensive future programming tutorial, probably using (with permission) useful resources from similar, very successful state-of-the-art courses held by the CERN Intel Openlab, with access to Knights Ferry and Knights Corner Many Integrated Core devices via CERN.
Resources

Theme Leader (P.J.Clark @ 0.5 FTE for 6 months)
Theme Leader (R. Jones @ 0.3 FTE for 6 months)
TL and small amount of student travel to/from Edinburgh
Budget for visitors/speakers
Workshop & meeting costs (largely speaker costs)
2 10 5

Time-plan

This is a rough time plan; the main meetings are the deliverables (marked as milestones). The final milestone at the end is a report (probably an ATLAS note) documenting the theme deliverables.

Curriculum Vitae

Personal Details

1. Name: Dr. Philip J Clark
2. Family & marital status: Married
3. Nationality & Date of Birth: UK & 14 May 1974
4. Address: School of Physics & Astronomy, James Clerk Maxwell Building, The King's Buildings, University of Edinburgh, EH9 3JZ, UK
5. Telephone / Fax: +44 131 650 5231 (tel) / +44 131 650 7189 (fax)
6. Email: P.J.Clark@ed.ac.uk

Career

Education
Physics PhD, University of Edinburgh (2000)
Physics BSc, University of Edinburgh (1996)

Employment
Reader, University of Edinburgh, 2010-
Lecturer, University of Edinburgh, 2004-2010
Research Associate, University of Colorado, 2003-2004
Research Associate, University of Bristol, 2000-2003

Research Interests

Flavour physics
I have completed a programme of research in the area of B meson particles. Of primary importance was the discovery of the asymmetry between matter and antimatter in the heavy quark flavour sector. The measurement of this was one of the experimental validations for the CKM matrix, which was the subject of the 2008 Nobel Prize in Physics (Kobayashi & Maskawa).

ATLAS physics
In the last year, I have started to create a new research programme within the ATLAS experiment at the LHC. ATLAS is one of the major particle physics collaborations at the Large Hadron Collider at CERN and is one of the highest priority particle physics programmes globally: its outcomes will be critical to particle physics research for at least the next decade and beyond.
I led an initiative which successfully allowed Edinburgh to become a member of the collaboration and I now lead the newly started Edinburgh ATLAS effort. We have completed our entrance qualification work and take seriously the successful operation of the detector and its simulation. Edinburgh is responsible for core detector simulation (Geant4 support) within ATLAS. We were also responsible for the environment detector control systems for the inner semiconductor track detector (pixel and silicon strip based systems). To go beyond what has already been done, we are re-writing an improved geometry for the endcap LAr calorimeter and are heavily involved in ATLAS data management.

Distributed scientific computing
I created and have led for the past six years the Particle Physics e-Science initiative (GridPP and ScotGrid) within Edinburgh. I established Edinburgh as part of the worldwide computing Grid for the LHC and many other scientific applications. We developed a reliable high performance tier-2 computing centre consisting of Edinburgh, Glasgow and Durham, of which I am chairman (ScotGrid). I helped pioneer the UK's use of SRM (storage resource management) for distributed data management and the successful particle physics use of general purpose clusters. Joining ATLAS has allowed me to consolidate this expertise for data analysis. I am now very interested in novel computing in particle physics, in particular in embracing the new many-core architectures and general purpose graphics processing units (GPGPUs), which can result in dramatic algorithm acceleration for data analysis and the ATLAS trigger systems.
Promotions / awards / recognition

• IOP poster prize in 2000 (displayed in many BaBar institutes, DOE review & translated to German)
• Received accelerated increment in 2006 for my research & nominated for rising star award
• Promoted to Reader in 2010
• IPPP (Institute for Particle Physics Phenomenology) Associateship in 2010
• NVIDIA professor award ($25k) 2010
• Awarded several conference grants for travel: DPF, Royal Society, and European Union
• External examiner at University of Bristol

Research supervision

Total PhD students: 5 (1st supervisor) 3 (2nd supervisor)
Total MSc students: 4 (all theses complete)
Current PhD students: 3 (1st supervisor) 3 (2nd supervisor)
Total MPhys students: 3 (2 complete, 1 on way)
Completed PhD theses: 2 (1st supervisor) 3 (2nd supervisor)

IT Knowledge

Expert in many languages, including C++ and Java. Proficient in Python. Expert in Linux systems, networks, etc.

Professional Affiliations and Memberships

Institute of Physics
American Physical Society

Committee memberships and Positions

• ATLAS collaboration board
• GridPP collaboration board
• Chairman of the ScotGrid Tier2 compute centre (Durham, Edinburgh & Glasgow)

Four most important primary author publications

1. “Measurement of the CP-violating asymmetry amplitude sin 2β”, B. Aubert et al. [BABAR Collaboration], Phys. Rev. Lett. 89, 201802 (2002) (cited 420 times). Definitive sin 2β measurement.
2. B meson decays to eta(‘) K*, eta(‘) rho, eta(‘) pi0, omega pi0 and phi pi0, Phys. Rev. D70:032006, 2004 (72 cites). Review paper of the charmless quark physics analysis methodology.
3. “The BaBar detector”, B. Aubert et al. [BABAR Collaboration], Nucl. Instrum. Meth. A 479, 1 (2002) (cited 1239 times). Construction and design of this veritable b-factory detector.
4. Measurements of the branching fraction and CP-violation asymmetries in B0→f(0)(980)K0s, Phys. Rev. Lett. 041802, 2005 (32 cites). CP violation measurement with a scalar meson in the final state.
Citation status

Peer-reviewed published papers only

Renowned papers (500+ cites): 2
Famous papers (250-499 cites): 3
Very well-known papers (100-249): 16
Well-known papers (50-99): 60
Known papers (10-49): 228
Less known papers (1-9): 115
Unknown papers (0): 5

Total eligible papers analyzed: 429
Total number of citations: 15931
Average citations per paper: 37
H factor: 65

Some current research grants, their subject, and ongoing grant applications

• GridPP grant underway (for distributed data management & tier2 support)
• NVIDIA award ($25k): unrestricted award for research (motivated by my interest in GPUs for HEP)
• University of Edinburgh rolling grant (STFC) pays (only) for some ATLAS academic time (0.15)
• Seeking ATLAS RA effort in ATLAS UK upgrade programme for simulation

CURRICULUM VITAE

Roger William Lewis Jones

Current and Past Posts

Jul 2007 - present: Chair, Lancaster University
Jun 2003 - June 2007: Readership, Lancaster University
Oct 2000 - Oct 2001: CERN Research Associate for 1 year
Oct. 1999 - Jun 2003: Senior Lecturer, Lancaster University
Mar. 1996 - Oct 1999: Lectureship, Lancaster University
Jan. 1995 - Mar 1996: R.A., U. of Alberta, seconded to OPAL
Jan. 1993 - Jan 1995: CERN PPE Fellowship
Nov. 1987 - Jan 1993: Post-Doctoral Research Assistant, QMW, London

Academic and Professional Qualifications

1988: Ph.D. in Particle Physics, Birmingham University. Ph.D. Thesis: Neutral strange particle production and the NC/CC ratios in neutrino- and antineutrino-proton interactions.
1983: B.A. Physics, II, 1983 (became an M.A. in 1987), Christ Church, Oxford University
1973 – 1980: Secondary School, Bluecoat School, Church Rd., Liverpool 15. Qualifications obtained: 10 O levels (8A, 2B), 1 O/A level (A), 4 A levels (3A, 1B), 1 S level

CERN Fellow 1993-1995
CERN Scientific Associate 2000-2001
Became a Fellow of the Institute of Physics in 2008

Computing Related Research Work

Current research on the CERN LHC ATLAS Experiment

The LHC is a collider that has only recently started to take data at CERN, Geneva, and the experiments are huge projects requiring decades of planning and construction, ATLAS being the largest. This requires major cross-continent leadership, of which I am part, determining the 20-year programme of HEP worldwide. ATLAS is a collaboration of 3000 physicists in 169 institutions and 35 countries, who will investigate many areas of physics, notably the origin of mass (the Higgs boson) and the reason for the matter-antimatter imbalance in the Universe (CP violation), and will search for new signatures such as Supersymmetry. It will generate 3 Petabytes of data per year that must be processed, stored and analysed. This requires a world-wide integrated computing system and a new generation of software beyond the Internet and the WWW, called the Grid. Particle physics is the largest application area driving this development, with GridPP the UK collaboration charged with developing Grid software and providing the UK particle physics Grid.

From 2003 until 2009 I was Chair of the ATLAS International Computing Board, and as such influenced the resource and world-wide computing policy for a collaboration spanning 6 continents and more than 140 institutes. I remain the convenor of the ATLAS Computing Model group and determine the future computing resource planning. For the last two years I have been responsible for the ATLAS Upgrade Computing, covering the computing and software for the ATLAS detector upgrades and also the upgrades required to track the growing datasets and the prevailing technologies available. I head the computing aspects of the ATLAS UK Upgrade project.
ATLAS UK Offline Project Leader since 2002, leading the development (~45 UK FTEs in 14 institutions) of the UK computing infrastructure and offline software (physics generation, simulation, reconstruction and physics tools). Also responsible for Grid developments (see GridPP activities below), and latterly for the computing in the ATLAS UK upgrade project.

Since September 2004 I have led the Lancaster ATLAS group (5 academics, 7 support staff). Since July 2006 I have been Head of the High Energy Physics Division at Lancaster University.

GridPP and related activities

I am a project management board member and represent ATLAS in the ongoing GridPP eScience projects to deploy the required Grid infrastructure for Particle Physics in the UK and to enable the experiment analysis on it. I was the Deputy Chair of the GridPP1 Experiments Board, and stood down in order to become the GridPP2 Applications Co-ordinator, co-ordinating the experiment Grid-enablement across the HEP sector (~20 FTEs under my area, ¼ of the project). I was a principal investigator on the £4.5M ESLEA cross-council project, exploiting high bandwidth networking for science. I ensure this project directly serves the ATLAS and D0 experiments, with a 2-year RA post awarded. It will exploit the excellent light-path networks now centred on Lancaster in the North West.

Bibliometric Data

According to the Web of Science, the 328 publications it records for me have been cited 9037 times, and I have an h-index of 46. This is despite a relative lull in publications while preparing the ATLAS experiment, made longer by the delay in the accelerator start-up. I anticipate a return to 20-25 publications a year from 2010, with high citations.

Administration/Management

Current National and International Positions of Leadership

International:
LEP QCD Working Group (Member since 1997, Chair 1998-)
Electroweak Working Group (Member Heavy Flavour group 1994-)
Advisory Committee of CERN Users - UK representative 1998-2003, Chair 2000-2003
CERN Science Policy Advisory Board (1999-2004)
CERN Academic Training Committee (1998-2004)
LHC4 (steering committee for the 4 LHC experiments)
LHC C-RRB Memorandum of Understanding Task Force
ATLAS UK Computing Project Leader
ATLAS International Computing Board UK Representative (2001-), Chair (2003-2009)
ATLAS Upgrade Steering Group (2009-)
ATLAS Computing Management Board (2003-)
ATLAS UK Collaboration Board (2001-)
Lancaster ATLAS Team Leader (2004-) (Deputy from 2000)

National:
PPARC & STFC Scientific Computing Strategy Panel/Computing Advisory Panel
GridPP Experiments/User Board (2001-, Deputy Chair 2003-2004); Applications Co-ordinator (2004-); Project Management Board (2003-); Hardware Advisory Group (2001-)
UK Particle Physics Users Advisory Committee (1997-), Chair since 2008

Committees and Other Bodies in Lancaster

Current
• Head of High Energy Physics Division (2006-) & Director of High End Computing