Fast Fourier Transform

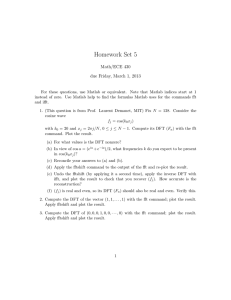

advertisement

Fast Fourier Transform

Giuseppe Scarpa

ENS 2013

Contents

1 Intro

2 Matrix formulation

3 Decimation-in-time

4 Decimation-in-frequency

5 Generalization

6 Critical aspects

7 Special applications

1 Introduction to FFT algorithms

Recall the DFT and IDFT:

$$X[k] = \sum_{n=0}^{N-1} x[n]\,W_N^{kn}, \qquad k = 0, 1, \ldots, N-1 \qquad \text{(DFT)}$$

$$x[n] = \frac{1}{N}\sum_{k=0}^{N-1} X[k]\,W_N^{-kn}, \qquad n = 0, 1, \ldots, N-1 \qquad \text{(IDFT)}$$

where $W_N = e^{-j\frac{2\pi}{N}}$ (twiddle factor).

• The “direct” computation of the DFT requires N² multiplications...

• ... plus additional operations of negligible cost

• Therefore the complexity is of the order of O(N²) (floating-point operations)

• FFT implementations provide O(N log₂ N)
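To make the O(N²) count concrete, here is a minimal Python sketch (standard library only) of the direct DFT and IDFT exactly as defined above; the names `twiddle`, `dft` and `idft` are our own illustrative choices. Each output coefficient is a sum of N products, so the double loop performs N² complex multiplications.

```python
import cmath

def twiddle(N, e):
    """Twiddle factor W_N^e = exp(-j*2*pi*e/N)."""
    return cmath.exp(-2j * cmath.pi * e / N)

def dft(x):
    """Direct DFT: X[k] = sum_n x[n] W_N^{kn} (N^2 complex multiplications)."""
    N = len(x)
    return [sum(x[n] * twiddle(N, k * n) for n in range(N)) for k in range(N)]

def idft(X):
    """Direct IDFT: x[n] = (1/N) sum_k X[k] W_N^{-kn}."""
    N = len(X)
    return [sum(X[k] * twiddle(N, -k * n) for k in range(N)) / N for n in range(N)]

x = [1, 2, 3, 4]
X = dft(x)
x_back = idft(X)   # equals x up to round-off
print(X)
```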

2 FFT matrix formulation

The efficient computation of the DFT stems from two properties of the twiddle factor:

• $W_N^{kn} = W_N^{k(n+N)} = W_N^{(k+N)n}$ (periodicity in k and n)

• $W_N^{k(N-n)} = W_N^{-kn} = \left(W_N^{kn}\right)^*$ (Hermitian symmetry)

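The “actual” computational cost of the DFT can be better understood by looking at its matrix formulation (assume N = 8):

$$\mathbf{X} = \mathbf{W}_8\,\mathbf{x} = \begin{bmatrix}
1 & 1 & 1 & 1 & 1 & 1 & 1 & 1\\
1 & W_8 & W_8^2 & W_8^3 & W_8^4 & W_8^5 & W_8^6 & W_8^7\\
1 & W_8^2 & W_8^4 & W_8^6 & 1 & W_8^2 & W_8^4 & W_8^6\\
1 & W_8^3 & W_8^6 & W_8 & W_8^4 & W_8^7 & W_8^2 & W_8^5\\
1 & W_8^4 & 1 & W_8^4 & 1 & W_8^4 & 1 & W_8^4\\
1 & W_8^5 & W_8^2 & W_8^7 & W_8^4 & W_8 & W_8^6 & W_8^3\\
1 & W_8^6 & W_8^4 & W_8^2 & 1 & W_8^6 & W_8^4 & W_8^2\\
1 & W_8^7 & W_8^6 & W_8^5 & W_8^4 & W_8^3 & W_8^2 & W_8
\end{bmatrix}
\cdot
\begin{bmatrix} x[0]\\ x[1]\\ x[2]\\ x[3]\\ x[4]\\ x[5]\\ x[6]\\ x[7] \end{bmatrix}$$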

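Observe that any shuffle of the columns of $\mathbf{W}_N$ combined with the same shuffle on the rows of $\mathbf{x}$ does not change the result $\mathbf{X}$:

$$\begin{bmatrix} X[0]\\ X[1]\\ X[2]\\ X[3]\\ X[4]\\ X[5]\\ X[6]\\ X[7] \end{bmatrix} =
\begin{bmatrix}
1 & 1 & 1 & 1 & 1 & 1 & 1 & 1\\
1 & W_8^2 & W_8^4 & W_8^6 & W_8 & W_8^3 & W_8^5 & W_8^7\\
1 & W_8^4 & 1 & W_8^4 & W_8^2 & W_8^6 & W_8^2 & W_8^6\\
1 & W_8^6 & W_8^4 & W_8^2 & W_8^3 & W_8 & W_8^7 & W_8^5\\
1 & 1 & 1 & 1 & -1 & -1 & -1 & -1\\
1 & W_8^2 & W_8^4 & W_8^6 & -W_8 & -W_8^3 & -W_8^5 & -W_8^7\\
1 & W_8^4 & 1 & W_8^4 & -W_8^2 & -W_8^6 & -W_8^2 & -W_8^6\\
1 & W_8^6 & W_8^4 & W_8^2 & -W_8^3 & -W_8 & -W_8^7 & -W_8^5
\end{bmatrix}
\cdot
\begin{bmatrix} x[0]\\ x[2]\\ x[4]\\ x[6]\\ x[1]\\ x[3]\\ x[5]\\ x[7] \end{bmatrix}$$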

Notice the use of the properties of the twiddle factor: symmetry and periodicity.

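Defining the top/bottom and the even/odd components of $\mathbf{X}$ and $\mathbf{x}$, respectively,

$$\mathbf{X}_T \triangleq [X[0]\ \cdots\ X[N/2-1]]^T, \qquad \mathbf{X}_B \triangleq [X[N/2]\ \cdots\ X[N-1]]^T,$$

$$\mathbf{x}_E \triangleq [x[0]\ x[2]\ \cdots\ x[N-2]]^T, \qquad \mathbf{x}_O \triangleq [x[1]\ x[3]\ \cdots\ x[N-1]]^T,$$

we have:

$$\mathbf{X} = \begin{bmatrix}\mathbf{X}_T\\ \mathbf{X}_B\end{bmatrix} = \begin{bmatrix}\mathbf{W}_4 & \mathbf{D}_8\mathbf{W}_4\\ \mathbf{W}_4 & -\mathbf{D}_8\mathbf{W}_4\end{bmatrix}\begin{bmatrix}\mathbf{x}_E\\ \mathbf{x}_O\end{bmatrix} = \begin{bmatrix}\mathbf{X}_E + \mathbf{D}_N\mathbf{X}_O\\ \mathbf{X}_E - \mathbf{D}_N\mathbf{X}_O\end{bmatrix}$$

where we defined $\mathbf{D}_8 \triangleq \mathrm{diag}\{[1\ W_8\ W_8^2\ W_8^3]\}$ (in general, $\mathbf{D}_N \triangleq \mathrm{diag}\{[1\ W_N\ \cdots\ W_N^{N/2-1}]\}$), and let $\mathbf{X}_E$ and $\mathbf{X}_O$ be the N/2-point DFTs of $\mathbf{x}_E$ and $\mathbf{x}_O$, respectively.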

Costs:

• $\mathbf{X}_E$ → $(N/2)^2$ (N/2-point DFT)

• $\mathbf{X}_O$ → $(N/2)^2$ (N/2-point DFT)

• $\mathbf{D}_N\mathbf{X}_O$ → N/2 (merging cost)

Divide-and-conquer recursive DFT computation

• The last equation highlights the divide-and-conquer principle behind any fast DFT computation.

• Merging cost: N/2 multiplications

• log₂ N recursive applications

• Merging cost for an N/2-point DFT: N/4

• Merging cost for two N/2-point DFTs: N/2

• Each recursion level costs N/2

• Overall complexity: O(N log₂ N)

[Figure: divide-and-conquer DFT. The N-point input sequence x[n] is split into the N/2-point sequences xE[n] (n even) and xO[n] (n odd); their N/2-point DFTs XE[k] and XO[k] are merged into the N-point DFT coefficients X[k].]
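The split-and-merge scheme in the figure maps directly onto a recursive function. The following is a sketch in Python, assuming N is a power of 2; `fft_recursive` is a hypothetical name. The loop body implements X_T = X_E + D_N X_O and X_B = X_E − D_N X_O, so each recursion level performs N/2 twiddle multiplications.

```python
import cmath

def fft_recursive(x):
    """Radix-2 divide-and-conquer DFT; len(x) must be a power of 2."""
    N = len(x)
    if N == 1:
        return [complex(x[0])]                 # a 1-point DFT is the identity
    XE = fft_recursive(x[0::2])                # N/2-point DFT of the even samples
    XO = fft_recursive(x[1::2])                # N/2-point DFT of the odd samples
    X = [0j] * N
    for k in range(N // 2):
        t = cmath.exp(-2j * cmath.pi * k / N) * XO[k]   # (D_N X_O)[k]: one multiplication
        X[k] = XE[k] + t                                # top half X_T
        X[k + N // 2] = XE[k] - t                       # bottom half X_B
    return X

x = [1, 2, 3, 4, 5, 6, 7, 8]
X = fft_recursive(x)
X_ref = [sum(x[n] * cmath.exp(-2j * cmath.pi * k * n / 8) for n in range(8)) for k in range(8)]
print(max(abs(a - b) for a, b in zip(X, X_ref)))   # should be at round-off level
```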

FFT categories

A dual divide-and-conquer solution can be obtained by shuffling rows of WN and X instead.

• Decimation-in-time FFT

• Decimation-in-frequency FFT

• Other FFT

3 Decimation-in-time FFT algorithms

The DFT coefficients of an N-point vector x can be written as

$$\begin{aligned}
X[k] &= \sum_{n=0}^{N-1} x[n]\,W_N^{kn} = \sum_{m=0}^{\frac{N}{2}-1} x[2m]\,W_N^{k(2m)} + \sum_{m=0}^{\frac{N}{2}-1} x[2m+1]\,W_N^{k(2m+1)}\\
&= \sum_{m=0}^{\frac{N}{2}-1} x[2m]\,(W_N^2)^{km} + W_N^k \sum_{m=0}^{\frac{N}{2}-1} x[2m+1]\,(W_N^2)^{km}\\
&= \sum_{m=0}^{\frac{N}{2}-1} a[m]\,W_{N/2}^{km} + W_N^k \sum_{m=0}^{\frac{N}{2}-1} b[m]\,W_{N/2}^{km} = A[k] + W_N^k B[k], \qquad k = 0, 1, \ldots, N-1
\end{aligned}$$

being $W_N^2 = W_{N/2}$ and

• $a[m] \triangleq x[2m]$, m = 0, 1, …, N/2 − 1 (sampling)

• $b[m] \triangleq x[2m+1]$, m = 0, 1, …, N/2 − 1 (shift + sampling)

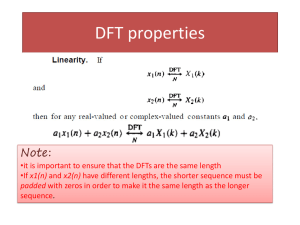

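with the N/2-point DFT pairs $a[n] \overset{\mathrm{DFT}_{N/2}}{\longleftrightarrow} A[k]$ and $b[n] \overset{\mathrm{DFT}_{N/2}}{\longleftrightarrow} B[k]$.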

• Notice that the above formula holds for all k = 0, 1, …, N − 1, although A[k] and B[k] are N/2-point sequences.

• This is not a problem given the inherent periodicity of the DFT: A[k + N/2] = A[k] and B[k + N/2] = B[k].

• The whole DFT can be expressed by the following merging formulas (using $W_N^{k+N/2} = -W_N^k$):

$$X[k] = A[k] + W_N^k B[k], \qquad X[k+N/2] = A[k] - W_N^k B[k], \qquad k = 0, 1, \ldots, N/2-1$$

• The FFT idea is based on the recursive computation of the above merging formulas.

• In the last call, the 2-point DFT merging formulas require 1-point DFTs, which are identity transformations:

$$X[0] = x[0] + W_2^0\,x[1] = x[0] + x[1], \qquad X[1] = x[0] - W_2^0\,x[1] = x[0] - x[1].$$

8-point decimation-in-time FFT

$$X[k] = \mathrm{DFT}_8\{x[0], x[1], x[2], x[3], x[4], x[5], x[6], x[7]\}, \qquad 0 \le k \le 7$$

⇑

$$A[k] = \mathrm{DFT}_4\{x[0], x[2], x[4], x[6]\}, \qquad B[k] = \mathrm{DFT}_4\{x[1], x[3], x[5], x[7]\}, \qquad 0 \le k \le 3$$

⇑

$$C[k] = \mathrm{DFT}_2\{x[0], x[4]\}, \quad D[k] = \mathrm{DFT}_2\{x[2], x[6]\}, \quad E[k] = \mathrm{DFT}_2\{x[1], x[5]\}, \quad F[k] = \mathrm{DFT}_2\{x[3], x[7]\}, \qquad k = 0, 1$$

In particular:

$$A[k] = C[k] + W_8^{2k} D[k], \qquad A[k+2] = C[k] - W_8^{2k} D[k], \qquad k = 0, 1$$

$$B[k] = E[k] + W_8^{2k} F[k], \qquad B[k+2] = E[k] - W_8^{2k} F[k], \qquad k = 0, 1$$

and then:

$$X[k] = A[k] + W_8^{k} B[k], \qquad X[k+4] = A[k] - W_8^{k} B[k], \qquad k = 0, 1, 2, 3$$

Butterfly operation

A careful inspection reveals the use of a standard operation, known as the butterfly operation, at every stage:

$$X_m[p] = X_{m-1}[p] + W_N^r X_{m-1}[q], \qquad X_m[q] = X_{m-1}[p] - W_N^r X_{m-1}[q]$$

[Figure: butterfly flow graph. X_{m−1}[q] is multiplied by W_N^r and then added to X_{m−1}[p] to give X_m[p], and subtracted from it (−1 branch) to give X_m[q].]

Costs: 1 addition, 1 subtraction, 1 multiplication (complex)

The overall cost is therefore given by the number of butterflies.

In summary, the FFT computation requires two stages:

Shuffling: the input sequence is successively decomposed into even and odd sequences.

Merging: starting from 1-point DFTs, recursive applications of butterfly operations lead to the N-point DFT.

[Figure: 8-point DIT FFT. Shuffling: (x[0], …, x[7]) → (x[0], x[2], x[4], x[6], x[1], x[3], x[5], x[7]) → (x[0], x[4], x[2], x[6], x[1], x[5], x[3], x[7]). Merging: four merges of two 1-point DFTs, two merges of two 2-point DFTs, and one merge of two 4-point DFTs yield X[0], …, X[7].]

Shuffling

• Shuffling has a negligible computational cost

• Very strict memory constraints may require special solutions

• Bit-reversed ordering: it is easy to verify that the required shuffling can be obtained by reversing the bit order of the index binary code:

$$x[(b_2 b_1 b_0)_2] \overset{\text{swap}}{\longleftrightarrow} x[(b_0 b_1 b_2)_2], \qquad \text{e.g. } x[4] = x[(100)_2] \overset{\text{swap}}{\longleftrightarrow} x[(001)_2] = x[1]$$

• It allows for in-place swap
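A minimal Python sketch of the bit-reversed shuffling, assuming N is a power of 2; `bit_reverse` and `bit_reverse_permute` are hypothetical names. Since bit reversal is its own inverse, each pair is swapped once (only when i < j), which is what makes the permutation possible in place.

```python
def bit_reverse(i, nbits):
    """Reverse the nbits-bit binary code of index i, e.g. (100)_2 -> (001)_2."""
    r = 0
    for _ in range(nbits):
        r = (r << 1) | (i & 1)
        i >>= 1
    return r

def bit_reverse_permute(x):
    """In-place bit-reversed reordering of a list whose length is a power of 2."""
    N = len(x)
    nbits = N.bit_length() - 1
    for i in range(N):
        j = bit_reverse(i, nbits)
        if i < j:                      # swap each pair only once
            x[i], x[j] = x[j], x[i]
    return x

print(bit_reverse_permute(list(range(8))))   # [0, 4, 2, 6, 1, 5, 3, 7]
```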

Merging

[Figure: 8-point DIT merging flow graph. Stage 1: butterflies with W_8^0 on (x[0], x[4]), (x[2], x[6]), (x[1], x[5]), (x[3], x[7]) give C[k], D[k], E[k], F[k]. Stage 2: butterflies with W_8^0 and W_8^2 give A[0], …, A[3] and B[0], …, B[3]. Stage 3: butterflies with W_8^0, W_8^1, W_8^2, W_8^3 give X[0], …, X[7].]

$$\frac{N}{2}\log_2 N = 4 \log_2 8 = 12 \text{ butterflies} \;\Longrightarrow\; \text{complexity } O(N \log N)$$
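The flow graph above can be implemented in place: bit-reversed shuffling followed by log₂ N stages of N/2 butterflies each. Below is a sketch in Python, assuming N is a power of 2; `fft_dit_inplace` is a hypothetical name, and the incremental bit-reversal counter is one common way (of several) to generate the permutation. The function also counts butterflies, so the (N/2) log₂ N figure can be checked.

```python
import cmath

def fft_dit_inplace(x):
    """In-place radix-2 DIT FFT. Returns (X, number_of_butterflies)."""
    N = len(x)
    a = [complex(v) for v in x]
    # Shuffling: bit-reversed reordering by in-place swaps
    j = 0
    for i in range(1, N):
        bit = N >> 1
        while j & bit:              # propagate the carry of a reversed increment
            j ^= bit
            bit >>= 1
        j ^= bit
        if i < j:
            a[i], a[j] = a[j], a[i]
    # Merging: X_m[p] = X_{m-1}[p] + W_N^r X_{m-1}[q], X_m[q] = X_{m-1}[p] - W_N^r X_{m-1}[q]
    butterflies = 0
    size = 2
    while size <= N:
        half = size // 2
        step = N // size            # W_size^t = W_N^(t*step)
        for start in range(0, N, size):
            for t in range(half):
                p, q = start + t, start + t + half
                tmp = cmath.exp(-2j * cmath.pi * t * step / N) * a[q]
                a[p], a[q] = a[p] + tmp, a[p] - tmp
                butterflies += 1
        size *= 2
    return a, butterflies

x = [1, 2, 3, 4, 5, 6, 7, 8]
X, count = fft_dit_inplace(x)
print(count)   # 12 butterflies for N = 8
```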

FFT with both input and output in natural order

[Figure: 8-point FFT flow graph with both the input x[0], …, x[7] and the output X[0], …, X[7] in natural order, using twiddle factors W_N^0, …, W_N^3.]

FFT with identical stages

[Figure: 8-point FFT flow graph with bit-reversed input (x[0], x[4], x[2], x[6], x[1], x[5], x[3], x[7]) and natural-order output X[0], …, X[7], in which all stages have the same structure.]

4 Decimation-in-frequency FFT algorithms

In order to get a dual “decimation-in-frequency” FFT, rearrange the even- and odd-indexed DFT coefficients as follows:

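$$\begin{aligned}
X[2k] &= \sum_{n=0}^{N-1} x[n]\,W_N^{(2k)n} = \sum_{n=0}^{N-1} x[n]\,W_{N/2}^{kn}
= \sum_{n=0}^{\frac{N}{2}-1} x[n]\,W_{N/2}^{kn} + \sum_{n=0}^{\frac{N}{2}-1} x\!\left[n+\tfrac{N}{2}\right] W_{N/2}^{k(n+\frac{N}{2})}\\
&= \sum_{n=0}^{\frac{N}{2}-1} \left(x[n] + x\!\left[n+\tfrac{N}{2}\right]\right) W_{N/2}^{kn}, \qquad k = 0, 1, \ldots, \tfrac{N}{2}-1
\end{aligned}$$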

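Similarly, for odd coefficients, we get:

$$X[2k+1] = \sum_{n=0}^{\frac{N}{2}-1} \left(x[n] - x\!\left[n+\tfrac{N}{2}\right]\right) W_N^{n}\,W_{N/2}^{kn}, \qquad k = 0, 1, \ldots, \tfrac{N}{2}-1$$

The above equations represent N/2-point DFTs performed on the following sequences:

$$a[n] \triangleq x[n] + x\!\left[n+\tfrac{N}{2}\right], \qquad b[n] \triangleq \left(x[n] - x\!\left[n+\tfrac{N}{2}\right]\right) W_N^n, \qquad n = 0, 1, \ldots, \tfrac{N}{2}-1$$

That is

$$X[2k] = A[k] = \mathrm{DFT}_{N/2}\{a[0], \ldots, a[N/2-1]\}, \qquad X[2k+1] = B[k] = \mathrm{DFT}_{N/2}\{b[0], \ldots, b[N/2-1]\}$$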

Again a divide-and-conquer strategy is possible.
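Applying the two N/2-point decompositions above recursively gives a decimation-in-frequency FFT. A minimal Python sketch, assuming N is a power of 2 (`fft_dif_recursive` is a hypothetical name): the input is folded into a[n] and b[n], and the two half-size DFTs fill the even- and odd-indexed outputs.

```python
import cmath

def fft_dif_recursive(x):
    """Radix-2 decimation-in-frequency FFT; len(x) must be a power of 2."""
    N = len(x)
    if N == 1:
        return [complex(x[0])]
    h = N // 2
    a = [x[n] + x[n + h] for n in range(h)]                                         # a[n]
    b = [(x[n] - x[n + h]) * cmath.exp(-2j * cmath.pi * n / N) for n in range(h)]  # b[n]
    X = [0j] * N
    X[0::2] = fft_dif_recursive(a)    # X[2k]   = A[k]
    X[1::2] = fft_dif_recursive(b)    # X[2k+1] = B[k]
    return X

x = [1, 2, 3, 4, 5, 6, 7, 8]
X = fft_dif_recursive(x)
```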

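Decimation-in-frequency butterfly operation

In this case the butterfly operation needed is the following:

$$X_m[p] = X_{m-1}[p] + X_{m-1}[q], \qquad X_m[q] = \left(X_{m-1}[p] - X_{m-1}[q]\right) W_N^r$$

[Figure: DIF butterfly flow graph. X_{m−1}[p] and X_{m−1}[q] are added to give X_m[p]; their difference (−1 branch) is multiplied by W_N^r to give X_m[q].]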

8-point decimation-in-frequency FFT

[Figure: 8-point DIF FFT flow graph with natural-order input x[0], …, x[7], twiddle factors W_N^0, …, W_N^3, and output in bit-reversed order (X[0], X[4], X[2], X[6], X[1], X[5], X[3], X[7]).]
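The same DIF butterfly can be applied in place, stage by stage, which produces the output in bit-reversed order, as in the flow graph. A Python sketch, assuming N is a power of 2; `fft_dif_inplace` and the helper `bit_reverse` are hypothetical names, and the final line undoes the bit-reversed ordering only to compare with the natural-order DFT.

```python
import cmath

def fft_dif_inplace(x):
    """In-place radix-2 DIF FFT: natural-order input, bit-reversed output.
    Butterfly: X_m[p] = X_{m-1}[p] + X_{m-1}[q], X_m[q] = (X_{m-1}[p] - X_{m-1}[q]) W_N^r."""
    N = len(x)
    a = [complex(v) for v in x]
    size = N
    while size >= 2:
        half = size // 2
        step = N // size                       # W_size^t = W_N^(t*step)
        for start in range(0, N, size):
            for t in range(half):
                p, q = start + t, start + t + half
                w = cmath.exp(-2j * cmath.pi * t * step / N)
                a[p], a[q] = a[p] + a[q], (a[p] - a[q]) * w
        size //= 2
    return a                                   # a[i] holds X[bit-reversed i]

def bit_reverse(i, nbits):
    r = 0
    for _ in range(nbits):
        r = (r << 1) | (i & 1)
        i >>= 1
    return r

x = [1, 2, 3, 4, 5, 6, 7, 8]
a = fft_dif_inplace(x)
X = [a[bit_reverse(k, 3)] for k in range(8)]   # undo the bit-reversed output order
```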

5 FFT Generalization

The previous decimation-in-time/frequency FFTs can be viewed as particular cases of a general approach.

$$N = N_1 N_2$$

⇓

x[n] (X[k]) can be split into N1 (N2) subsequences of length N2 (N1).

Let:

$$n = N_2 n_1 + n_2, \qquad n_1 = 0, 1, \ldots, N_1-1, \quad n_2 = 0, 1, \ldots, N_2-1,$$

$$k = k_1 + N_1 k_2, \qquad k_1 = 0, 1, \ldots, N_1-1, \quad k_2 = 0, 1, \ldots, N_2-1.$$

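Therefore:

$$W_N^{nk} = W_N^{(N_2 n_1 + n_2)(k_1 + N_1 k_2)} = W_N^{N_2 n_1 k_1}\,W_N^{k_1 n_2}\,W_N^{N_2 N_1 n_1 k_2}\,W_N^{N_1 n_2 k_2} = W_{N_1}^{n_1 k_1}\,W_N^{k_1 n_2}\,W_{N_2}^{n_2 k_2}$$

In fact $W_N^{N_1} = e^{-j\frac{2\pi}{N}N_1} = e^{-j\frac{2\pi}{N_1 N_2}N_1} = e^{-j\frac{2\pi}{N_2}} = W_{N_2}$, and ...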

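The DFT $X[k] = X[k_1 + N_1 k_2]$ can be written:

$$\begin{aligned}
X[k_1 + N_1 k_2] &= \sum_{n=0}^{N-1} x[n]\,W_N^{kn}
= \sum_{n_2=0}^{N_2-1}\left[\left(\sum_{n_1=0}^{N_1-1} x[N_2 n_1 + n_2]\,W_{N_1}^{k_1 n_1}\right) W_N^{k_1 n_2}\right] W_{N_2}^{k_2 n_2}\\
&= \sum_{n_2=0}^{N_2-1} X_{n_2}[k_1]\,W_N^{k_1 n_2}\,W_{N_2}^{k_2 n_2}
= \sum_{n_2=0}^{N_2-1} x_{k_1}[n_2]\,W_{N_2}^{k_2 n_2}
\end{aligned}$$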

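• Shifting ($n_2$) + sampling ($N_2$) of x[n] yields

$$x_{n_2}[n_1] \triangleq x[N_2 n_1 + n_2] \overset{\mathrm{DFT}_{N_1}}{\longleftrightarrow} X_{n_2}[k_1] = \sum_{n_1=0}^{N_1-1} x_{n_2}[n_1]\,W_{N_1}^{k_1 n_1}$$

• $x_{k_1}[n_2] \triangleq W_N^{k_1 n_2} X_{n_2}[k_1]$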

Summarizing, an N-point DFT can be decomposed into lower-order DFTs by means of the following steps:

$$\begin{aligned}
X_{n_2}[k_1] &= \mathrm{DFT}_{N_1}\{x[N_2 n_1 + n_2]\} && (N_2\times)\\
x_{k_1}[n_2] &= W_N^{k_1 n_2} X_{n_2}[k_1] && (N_2\times)\\
X[k_1 + N_1 k_2] &= \mathrm{DFT}_{N_2}\{x_{k_1}[n_2]\} && (N_1\times)
\end{aligned}$$

The N1- and N2-point DFTs can be computed with the same procedure if N1 and N2 are composite numbers.

⇓

Recursion (divide-and-conquer)

• Complexity (single stage): $N_2 N_1^2 + N_1 N_2^2 = N(N_1 + N_2)$

• Complexity of the direct DFT: $N^2$

• $(N_1 + N_2) \ll N$ ⇒ large gain
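The three steps above translate almost line by line into code. The following Python sketch implements one stage of the N = N1 N2 index mapping (n = N2 n1 + n2, k = k1 + N1 k2); the names `W`, `dft_direct` and `dft_two_factor` are hypothetical, and the inner N1- and N2-point DFTs are computed directly here for clarity, although they could themselves be decomposed recursively.

```python
import cmath

def W(N, e):
    """Twiddle factor W_N^e."""
    return cmath.exp(-2j * cmath.pi * e / N)

def dft_direct(x):
    N = len(x)
    return [sum(x[n] * W(N, k * n) for n in range(N)) for k in range(N)]

def dft_two_factor(x, N1, N2):
    """One stage of the N = N1*N2 decomposition (n = N2 n1 + n2, k = k1 + N1 k2)."""
    N = N1 * N2
    assert len(x) == N
    # Step 1: N2 DFTs of length N1, X_{n2}[k1] = DFT_{N1}{x[N2 n1 + n2]}
    Xn2 = [dft_direct(x[n2::N2]) for n2 in range(N2)]
    # Step 2: twiddle multiplications x_{k1}[n2] = W_N^{k1 n2} X_{n2}[k1]
    xk1 = [[W(N, k1 * n2) * Xn2[n2][k1] for n2 in range(N2)] for k1 in range(N1)]
    # Step 3: N1 DFTs of length N2, written to X[k1 + N1 k2]
    X = [0j] * N
    for k1 in range(N1):
        for k2, value in enumerate(dft_direct(xk1[k1])):
            X[k1 + N1 * k2] = value
    return X

x = [complex(n, (n * n) % 5) for n in range(12)]
X = dft_two_factor(x, 3, 4)
```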

Special cases:

• $N_2 = 2$, $N_1 = N/2$: first stage of the decimation-in-time FFT

• $N_1 = 2$, $N_2 = N/2$: first stage of the decimation-in-frequency FFT

• $N = 2^\nu$: radix-2 FFT algorithm

• $N = R^\nu$: radix-R FFT algorithm

• $N = R_1 R_2 \cdots R_M$: mixed-radix FFT

• ... (many others)

All radix solutions have complexity of order O(N log N), although they have different absolute complexity.

For example, radix-4 has half multiplications with respect to the radix-2...

6 Critical aspects

How can one get the best performance for a given problem?

Critical factors

Twiddle factors. There are three ways:

• Computing each factor anew when needed. This is a computationally expensive solution.

• Computing one factor per stage (and the remaining ones recursively). This solution can be useful for devices with strict memory constraints.

• Look-up table (LUT). Fastest and most used in practice.

Butterfly ops. Traditionally the bottleneck of FFT algorithms, but this is no longer so: arithmetic, indexing and transfer operations are becoming equally important in modern computer architectures. Therefore, neglecting non-arithmetic operations when assessing the complexity is no longer (or, at least, not always) acceptable.

Indexing. Some solutions aimed at reducing the number of butterfly ops may pay for it in indexing (computation of indexes for data access).

Memory access. Memory hierarchies (CPU registers → cache → main memory → external memory) have increasing capacity and decreasing speed. Inefficient memory use may degrade performance.

Shuffling. Bit-reversed reordering of data has its weight on the overall computational cost. However, in-place solutions are still preferred in most cases.
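The twiddle-factor options listed above trade computation, memory and accuracy. As a small illustration (our own, not from the notes), the Python sketch below builds the factors W_N^r, r = 0, …, N/2 − 1, once as a look-up table filled by direct evaluation and once by the recursion W_N^r = W_N^{r−1} W_N starting from a single computed factor; the printed value is the round-off the recursion accumulates relative to the table. Function names are hypothetical.

```python
import cmath

def twiddles_lut(N):
    """Look-up table: W_N^r for r = 0..N/2-1, each evaluated directly once."""
    return [cmath.exp(-2j * cmath.pi * r / N) for r in range(N // 2)]

def twiddles_recursive(N):
    """Only W_N is evaluated; the others follow from W_N^r = W_N^{r-1} * W_N."""
    w1 = cmath.exp(-2j * cmath.pi / N)
    out = [1 + 0j]
    for _ in range(1, N // 2):
        out.append(out[-1] * w1)
    return out

N = 4096
err = max(abs(a - b) for a, b in zip(twiddles_lut(N), twiddles_recursive(N)))
print(f"max deviation of recursive twiddles from the LUT: {err:.2e}")
```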

The overall complexity is finally due to three interrelated elements:

• FFT algorithm: O(N²) → O(N log N)

• Compiler

• Target processor

The chosen FFT should match the underlying hardware: parallelization, vectorization and memory hierarchy.

For a fixed FFT solution it is also important to take care of the programming technique.

FFTW

It is worth mentioning the FFTW algorithm (FFTW stands for “Fastest Fourier Transform in the West”).

FFTW searches over the possible factorizations of length N and empirically determines the one with the best performance for the target architecture.

Using the FFTW library involves two stages:

• planning (optimization based on N)

• execution (as many times as needed with the given plan)

Important note: FFTW is behind the family of fft commands in the latest release of MATLAB.

7 Special applications

Goertzel’s algorithm

Since $W_N^{-kN} = 1$, the DFT can be expressed as

$$X[k] = \sum_{n=0}^{N-1} x[n]\,W_N^{kn} = \sum_{n=0}^{N-1} x[n]\,W_N^{-k(N-n)}$$

Example: N = 4

$$\begin{aligned}
X[k] &= \sum_{n=0}^{3} x[n]\,W_4^{-k(4-n)} = x[3]\,W_4^{-k} + x[2]\,W_4^{-2k} + x[1]\,W_4^{-3k} + x[0]\,W_4^{-4k}\\
&= W_4^{-k}\left(x[3] + W_4^{-k}\left(x[2] + W_4^{-k}\left(x[1] + W_4^{-k}\,x[0]\right)\right)\right).
\end{aligned}$$

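Hence we can use a recursive computation:

$$\begin{aligned}
y_k[-1] &= 0,\\
y_k[0] &= x[0] + W_4^{-k}\,y_k[-1],\\
y_k[1] &= x[1] + W_4^{-k}\,y_k[0],\\
y_k[2] &= x[2] + W_4^{-k}\,y_k[1],\\
y_k[3] &= x[3] + W_4^{-k}\,y_k[2],\\
y_k[4] &= x[4] + W_4^{-k}\,y_k[3] = W_4^{-k}\,y_k[3] \quad (x[4] = 0),
\end{aligned}$$

so that X[k] = y_k[4].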

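More generally, we can write:

$$X[k] = W_N^{-k}\Bigl(x[N-1] + W_N^{-k}\bigl(x[N-2] + W_N^{-k}\bigl(\cdots + W_N^{-k}\bigl(x[1] + W_N^{-k}\,x[0]\bigr)\cdots\bigr)\bigr)\Bigr)$$

for which we can use the

Recursive computation of DFT coefficients

$$y_k[n] = W_N^{-k}\,y_k[n-1] + x[n], \quad 0 \le n \le N, \qquad X[k] = y_k[N], \quad 0 \le k \le N-1$$

(with $y_k[-1] = 0$ and $x[N] = 0$).

In other words, we have N first-order AR systems with transfer functions:

$$H_k(z) = \frac{1}{1 - W_N^{-k} z^{-1}}, \qquad 0 \le k \le N-1$$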

Features

• Cost for a single coefficient: N multiplications + additions

• Only one twiddle factor is to be computed. The others are implicitly computed by means of the recursion.

• Computation starts as soon as the first sample is available.

• No need to store the whole signal.

• If only M < log2 N coefficients are required, it is faster than FFT algorithms
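The first-order recursion above can be sketched in a few lines of Python (standard library; `goertzel_first_order` is a hypothetical name). Only W_N^{−k} is evaluated; the loop consumes one sample per step, so it can run while samples arrive, and the extra step with x[N] = 0 gives X[k] = y_k[N].

```python
import cmath

def goertzel_first_order(x, k):
    """X[k] via y_k[n] = W_N^{-k} y_k[n-1] + x[n], 0 <= n <= N (x[N] = 0, y_k[-1] = 0)."""
    N = len(x)
    w = cmath.exp(2j * cmath.pi * k / N)   # W_N^{-k}: the only twiddle factor needed
    y = 0j                                 # y_k[-1] = 0
    for n in range(N + 1):                 # samples can be consumed as they arrive
        y = w * y + (x[n] if n < N else 0)
    return y                               # X[k] = y_k[N]

x = [1, 2, 3, 4]
print([goertzel_first_order(x, k) for k in range(4)])
```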

The computation efficiency can be further improved...

$$H_k(z) = \frac{1}{1 - W_N^{-k} z^{-1}} = \frac{1 - W_N^{k} z^{-1}}{\left(1 - W_N^{-k} z^{-1}\right)\left(1 - W_N^{k} z^{-1}\right)} = \frac{1 - W_N^{k} z^{-1}}{1 - 2 z^{-1}\cos(2\pi k/N) + z^{-2}} = \frac{1}{1 - 2 z^{-1}\cos(2\pi k/N) + z^{-2}}\cdot\left(1 - W_N^{k} z^{-1}\right)$$

That is a cascade of two systems:

• a 2nd-order IIR with real coefficients

• a 1st-order FIR

Eventually we have the

Modified Goertzel’s algorithm

$$v_k[n] = 2\cos(2\pi k/N)\,v_k[n-1] - v_k[n-2] + x[n], \qquad 0 \le n \le N$$

$$X[k] = y_k[N] = v_k[N] - W_N^k\,v_k[N-1], \qquad 0 \le k \le N-1$$

with initial conditions $v_k[-1] = v_k[-2] = 0$, ∀k.
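A minimal Python sketch of the modified Goertzel recursion (standard library; `goertzel` here is our own function, not the MATLAB Signal Processing Toolbox routine). For real x[n] the loop needs one real multiplication per sample, and a single complex multiplication is done at the end. The usage line checks a tone placed exactly on bin 5 of a 64-point DFT, where |X[5]| should equal N/2 and the adjacent bin should be (numerically) zero.

```python
import cmath
import math

def goertzel(x, k):
    """Modified Goertzel: real 2nd-order IIR recursion, then a single complex FIR step."""
    N = len(x)
    c = 2 * math.cos(2 * math.pi * k / N)     # real coefficient 2cos(2*pi*k/N)
    v1 = v2 = 0.0                             # v_k[n-1], v_k[n-2]; zero initial conditions
    for n in range(N + 1):
        xn = x[n] if n < N else 0             # x[N] = 0
        v1, v2 = c * v1 - v2 + xn, v1
    # after the loop v1 = v_k[N] and v2 = v_k[N-1]
    return v1 - cmath.exp(-2j * math.pi * k / N) * v2   # X[k] = v_k[N] - W_N^k v_k[N-1]

N = 64
tone = [math.cos(2 * math.pi * 5 * n / N) for n in range(N)]   # tone exactly on bin 5
print(abs(goertzel(tone, 5)), abs(goertzel(tone, 6)))
```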

Gain:

• For each coefficient we have N real multiplications and 1 complex one, instead of N complex multiplications ⇒ 50% complexity saving (if x[n] is real)

• Observing that $v_k[n] = v_{N-k}[n]$ ⇒ (additional) 50% complexity saving (whether x[n] is real or not)

MATLAB: the function goertzel is available in the Signal Processing Toolbox.

Chirp transform algorithm (CTA)

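Using the identity $kn = \frac{1}{2}\left[k^2 - (n-k)^2 + n^2\right]$ we can write:

$$X[n] = \sum_{k=0}^{N-1} x[k]\,W_N^{kn} = W_N^{\frac{n^2}{2}} \sum_{k=0}^{N-1} x[k]\,W_N^{\frac{k^2}{2}}\,W_N^{-\frac{(n-k)^2}{2}} = \left\{\left(x[n]\,W_N^{\frac{n^2}{2}}\right) * W_N^{-\frac{n^2}{2}}\right\} W_N^{\frac{n^2}{2}}, \qquad 0 \le n \le N-1.$$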

Three steps:

• $x[n] \longrightarrow \tilde{x}[n] \triangleq x[n]/h[n]$, where we defined $h[n] \triangleq W_N^{-n^2/2}$

• convolve $\tilde{x}[n] * h[n]$

• again divide by $h[n]$

Notice that $h[n] = W_N^{-n^2/2} = e^{j\frac{\pi n}{N} n}$ is a complex exponential of increasing frequency $n\omega_0$, with $\omega_0 = \pi/N$ (a chirp signal).

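This algorithm is easily generalized to compute M DTFT values over equally spaced frequencies in a given range $\Delta\omega = \omega_H - \omega_L$:

$$\omega_n \triangleq \omega_L + \frac{\Delta\omega}{M-1}\,n = \omega_L + \delta\omega\,n, \qquad 0 \le n \le M-1$$

where $\delta\omega \triangleq \Delta\omega/(M-1)$.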

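They are given by

$$X[n] = X(e^{j\omega_n}) = \sum_{k=0}^{N-1} x[k]\,e^{-j(\omega_L + \delta\omega\,n)k} = \sum_{k=0}^{N-1} \left\{x[k]\,e^{-j\omega_L k}\right\} e^{-j\delta\omega\,nk} = \sum_{k=0}^{N-1} g[k]\,W^{nk}, \qquad n = 0, 1, \ldots, M-1$$

where $g[n] \triangleq x[n]\,e^{-j\omega_L n}$ and $W \triangleq e^{-j\delta\omega}$.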

With the same manipulations made above we get the CTA for an arbitrary frequency range.

Chirp Transform Algorithm (CTA)

Given an N-point signal x[n], its DTFT values over equally spaced frequencies $\omega_n$ are given by

$$X[n] = X(e^{j\omega_n}) = \left\{\left(g[n]\,W^{n^2/2}\right) * W^{-n^2/2}\right\} W^{n^2/2}, \qquad 0 \le n \le M-1$$

where

• $g[n] = x[n]\,e^{-j\omega_L n}$

• $W = e^{-j\delta\omega}$

• $\omega_L$ is the smallest frequency

• $\delta\omega$ is the frequency spacing

• $\omega_n = \omega_L + \delta\omega\,n$

Block diagram of the CTA

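[Figure: block diagram of the CTA. x[n] is multiplied by e^{−jω_L n} W^{n²/2}, filtered by the chirp W^{−n²/2}, and multiplied by W^{n²/2} to give X[n].]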

Advantages with respect to the FFT

• No need to have N = M

• No need for N and M to be composite numbers

• arbitrary frequency resolution δω

• arbitrary ωL , hence arbitrary frequency range

⇓

high-density narrowband spectral analysis

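MATLAB implementation of the CTA

function [X,w] = cta(x,M,wL,wH)

% Chirp Transform Algorithm (CTA)

% Given x[n], CTA computes M equispaced

% DTFT values X[k] on the unit circle

% over wL <= w <= wH

x = x(:).';                                   % work with a row vector

Dw = wH-wL; dw = Dw/(M-1); W = exp(-1j*dw);   % frequency spacing and W = e^{-j dw}

N = length(x); nx = 0:N-1;

K = max(M,N); n = 0:K; Wn2 = W.^(n.*n/2);     % W^{n^2/2}

g = x.*exp(-1j*wL*nx).*Wn2(1:N);              % g[n] W^{n^2/2}

nh = -(N-1):M-1; h = W.^(-nh.*nh/2);          % chirp W^{-n^2/2}, n = -(N-1)..M-1

y = conv(g,h);                                % direct convolution

X = y(N:N+M-1).*Wn2(1:M);                     % keep n = 0..M-1 and multiply by W^{n^2/2}

w = wL + dw*(0:M-1);                          % exactly M frequencies (no colon round-off)

end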

• Computational cost is of the order of O(M N )

• It can be reduced by using FFT to get the convolution
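As the last bullet suggests, the O(MN) convolution can be replaced by zero-padded FFTs. Below is a Python transcription of the MATLAB `cta` above with that change, as a sketch only: `_fft` (a recursive radix-2 FFT) and `fft_conv` are hypothetical helpers, M ≥ 2 is assumed, and the indexing `y[N - 1 + n]` mirrors MATLAB's `y(N:N+M-1)`.

```python
import cmath

def _fft(a, inverse=False):
    """Recursive radix-2 FFT (len(a) a power of 2); unscaled inverse if inverse=True."""
    N = len(a)
    if N == 1:
        return [complex(a[0])]
    sign = 1 if inverse else -1
    E, O = _fft(a[0::2], inverse), _fft(a[1::2], inverse)
    out = [0j] * N
    for k in range(N // 2):
        t = cmath.exp(sign * 2j * cmath.pi * k / N) * O[k]
        out[k], out[k + N // 2] = E[k] + t, E[k] - t
    return out

def fft_conv(g, h):
    """Linear convolution of g and h through zero-padded FFTs."""
    L = len(g) + len(h) - 1
    P = 1
    while P < L:
        P *= 2
    G = _fft(list(g) + [0j] * (P - len(g)))
    H = _fft(list(h) + [0j] * (P - len(h)))
    y = _fft([a * b for a, b in zip(G, H)], inverse=True)
    return [v / P for v in y[:L]]

def cta(x, M, wL, wH):
    """M equispaced DTFT samples of x over wL <= w <= wH (M >= 2)."""
    N = len(x)
    dw = (wH - wL) / (M - 1)

    def Wp(e):                                        # W^e with W = exp(-j*dw)
        return cmath.exp(-1j * dw * e)

    g = [x[n] * cmath.exp(-1j * wL * n) * Wp(n * n / 2) for n in range(N)]
    h = [Wp(-m * m / 2) for m in range(-(N - 1), M)]  # chirp W^{-m^2/2}, m = -(N-1)..M-1
    y = fft_conv(g, h)
    X = [y[N - 1 + n] * Wp(n * n / 2) for n in range(M)]
    w = [wL + dw * n for n in range(M)]
    return X, w

x = [1, 2, 0.5, -1, 3]
X, w = cta(x, 7, 0.3, 2.1)
```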


Chirp z-transform (CZT)

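The so-called chirp z-transform (CZT) is a slight modification of the CTA obtained with the following substitutions:

$$e^{j\omega_L} \to R\,e^{j\omega_L} \;\Rightarrow\; g[n] = x[n]\left(\frac{1}{R}e^{-j\omega_L}\right)^n \qquad (R > 0)$$

$$e^{j\delta\omega} \to r\,e^{j\delta\omega} \;\Rightarrow\; W = \frac{1}{r}e^{-j\delta\omega} \qquad (r > 0)$$

It is easy to prove that these changes provide the values of the z-transform on a spiraling contour sampled at

$$z_n = R\,e^{j\omega_L}\left(r\,e^{j\delta\omega}\right)^n = R\,r^n e^{j(\omega_L + \delta\omega\,n)}, \qquad 0 \le n \le M-1$$

r < 1 ⇒ spiral-in; r > 1 ⇒ spiral-out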

The z-transform samples are therefore given by

$$X[n] = X(z_n) = \left\{\left(g[n]\,W^{n^2/2}\right) * W^{-n^2/2}\right\} W^{n^2/2}, \qquad 0 \le n \le M-1$$

• MATLAB: the Signal Processing Toolbox provides the function czt

• Observe that the CTA is a special case of the CZT
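A direct-convolution sketch of the CZT formula above in Python (standard library; `czt` here is our own function with hypothetical parameters `M, R, wL, r, dw`, not the MATLAB `czt` signature). All powers of W are taken as exp(e · log W) with one fixed logarithm, so the identity nk = [n² + k² − (n − k)²]/2 holds exactly for the exponentials; the test compares against evaluating X(z) = Σ x[k] z^{−k} at the spiral points directly. With R = r = 1 it reduces to the CTA.

```python
import cmath

def czt(x, M, R, wL, r, dw):
    """X(z_n) = sum_k x[k] z_n^{-k} at z_n = R r^n e^{j(wL + dw n)}, n = 0..M-1,
    using nk = [n^2 + k^2 - (n-k)^2]/2 and a direct O(MN) convolution."""
    N = len(x)
    logW = -cmath.log(r) - 1j * dw           # W = (1/r) e^{-j dw}

    def Wp(e):                               # W^e on one fixed branch of log W
        return cmath.exp(logW * e)

    A = cmath.exp(-1j * wL) / R              # g[k] = x[k] ((1/R) e^{-j wL})^k
    gw = [x[k] * A ** k * Wp(k * k / 2) for k in range(N)]   # g[k] W^{k^2/2}
    X = []
    for n in range(M):
        conv = sum(gw[k] * Wp(-(n - k) ** 2 / 2) for k in range(N))  # (g W^{k^2/2}) * W^{-k^2/2}
        X.append(conv * Wp(n * n / 2))
    return X

x = [1, -2, 0.5, 3]
M, R, wL, r, dw = 5, 0.9, 0.2, 1.05, 0.4
X = czt(x, M, R, wL, r, dw)
```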

The zoom-FFT

Target

• CTA and CZT are examples of zoomed DFT.

• However, they are computationally expensive.

• The zoom-FFT algorithm presented here is also computationally efficient.

Problem setting

1. $\delta\omega \triangleq 2\pi/N$, target spectral resolution

2. $\Delta\omega = \omega_H - \omega_L$, target spectral range

3. M, number of desired samples (such that N = ML, with possible zero-padding of x[n])

Notice the constraints imposed by such conditions. They become negligible for large values of N and M.

Let the frequency index range be

$$k_L \le k \le k_L + M - 1 = k_H$$

where $\omega_L = k_L\,\delta\omega$ and $\omega_H = k_H\,\delta\omega$.

Consider the L samplings of length M:

$$x_l[m] \triangleq x[l + mL], \qquad 0 \le m \le M-1, \quad 0 \le l \le L-1$$

The desired coefficients X[k], for $k_L \le k \le k_H$, are given by

$$X[k] = \sum_{n=0}^{N-1} x[n]\,W_N^{nk} = \sum_{l=0}^{L-1}\sum_{m=0}^{M-1} x[l + mL]\,W_N^{(l+mL)k} = \sum_{l=0}^{L-1}\left[\sum_{m=0}^{M-1} x_l[m]\,W_M^{mk}\right] W_N^{lk}, \qquad k_L \le k \le k_H$$

Notice that it is the first stage of a DIT-FFT (Decimation-in-time FFT).

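The expression in square brackets is an M-point DFT evaluated over the range $k_L \le k \le k_H$. Defining

$$X_l[r] \triangleq \sum_{m=0}^{M-1} x_l[m]\,W_M^{mr}, \qquad 0 \le r \le M-1,$$

thanks to the inherent periodicity of the DFT, we can write

$$\sum_{m=0}^{M-1} x_l[m]\,W_M^{mk} = X_l[\langle k\rangle_M], \qquad k_L \le k \le k_H$$

where $\langle k\rangle_M$ denotes k modulo M.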

This yields the final formula of the zoom-FFT.

zoom-FFT algorithm

$$X[k] = \sum_{l=0}^{L-1} X_l[\langle k\rangle_M]\,W_N^{lk}, \qquad k_L \le k \le k_H$$

Steps:

(i) possible zero-padding of x[n] to length N

(ii) sampling/decimation by L into M-length sequences $x_l[m]$

(iii) M-point FFT of each subsequence $x_l[m]$

(iv) reindexing of the DFTs $X_l[r]$ through modulo-M

(v) final weighted sum ∀k ∈ [k_L, k_H].

Complexity = O(N log M + 2N), instead of O(N log N). In fact:

(iii) O(LM log M) = O(N log M) (assuming M is a power of 2)

(v) O(LM) = O(N) (linear combination) + O(N) (twiddle exponentials)
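Steps (i) to (v) can be sketched in Python as follows (standard library; `fft` and `zoom_fft` are hypothetical names, M is assumed to be a power of 2 for the radix-2 FFT, and len(x) ≤ N = ML). The test checks the returned band against a direct evaluation of the N-point DFT at the same indices.

```python
import cmath

def fft(a):
    """Recursive radix-2 FFT; len(a) must be a power of 2."""
    N = len(a)
    if N == 1:
        return [complex(a[0])]
    E, O = fft(a[0::2]), fft(a[1::2])
    out = [0j] * N
    for k in range(N // 2):
        t = cmath.exp(-2j * cmath.pi * k / N) * O[k]
        out[k], out[k + N // 2] = E[k] + t, E[k] - t
    return out

def zoom_fft(x, M, L, kL):
    """X[k] for kL <= k <= kL + M - 1 of x zero-padded to N = M*L."""
    N = M * L
    assert len(x) <= N
    x = list(x) + [0] * (N - len(x))                # (i) zero-padding
    Xl = [fft(x[l::L]) for l in range(L)]           # (ii) x_l[m] = x[l + mL]; (iii) M-point FFTs
    return [sum(Xl[l][k % M] * cmath.exp(-2j * cmath.pi * l * k / N)   # (iv) <k>_M; (v) W_N^{lk}
                for l in range(L))
            for k in range(kL, kL + M)]

x = [((7 * n) % 11) - 5 for n in range(60)]
Z = zoom_fft(x, 16, 4, 20)     # X[20], ..., X[35] of the 64-point DFT
```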

Other solutions

Further solutions (just a mention):

• QFT (a quick Fourier Transform)

• SDFT (sliding DFT)

Learning with MATLAB

Problem on the use of goertzel - part 1/2

The Goertzel algorithm can be used to detect single-tone sinusoidal signals. We want to detect single-tone signals of frequencies 490, 1280, 2730, and 3120 Hz generated by an oscillator operating at 8 kHz. The Goertzel algorithm computes only one relevant sample of an N-point DFT for each tone to detect it.

(a) What should be the minimum value of the DFT length N so that each tone is detected with no leakage into the adjacent DFT bins (synchronous sampling)? The same algorithm is used for each tone.

(b) Generate one-second-duration samples for each tone and use the goertzel function on each set of samples to obtain the corresponding X vector. Identify and use the same index vector k in all cases; k should index the 4 frequencies of interest.


(c) Define and test a proper criterion to detect the tones given X

(d) What happens in case of asynchronous frequency sampling? To answer the question, force asynchronous sampling with a careful choice of N. For example, increase the value obtained in (a) to fall into a “worst case”.

Problem on the use of goertzel - part 2/2

Dual-tone multifrequency (DTMF) is a generic name for a push-button signaling scheme used in Touch-Tone and telephone banking systems, in which a combination of a high-frequency tone and a low-frequency tone represents a specific digit. In one version of this scheme, there are eight frequencies arranged as shown below, to accommodate 16 characters. The system operates at a sampling rate of 8 kHz.

| Low / High [Hz] | 1209 | 1336 | 1477 | 1633 |
|---|---|---|---|---|
| 697 | 1 | 2 | 3 | A |
| 770 | 4 | 5 | 6 | B |
| 852 | 7 | 8 | 9 | C |
| 941 | ∗ | 0 | # | D |

(a) Write a MATLAB function x = sym2TT(S) that generates samples of the high and low frequencies, of one-half second duration, given a symbol S according to the above scheme.

(b) Using the goertzel function, develop a MATLAB function S = TT2sym(x) that detects the symbol S given the touch-tone signal in x.

On the use of the CTA

Referring to the previous exercise, write a MATLAB script to do the following:

(a) Generate a one second duration signal x containing the combination of all 8 tones.

(b) Generate and plot the two segments of the related DTFT, capturing the 4 low-frequency tones and the 4 high-frequency tones, respectively. Use the CTA by means of the MATLAB function czt.
