A New Linear Adaptive Controller: Design, Analysis and Performance


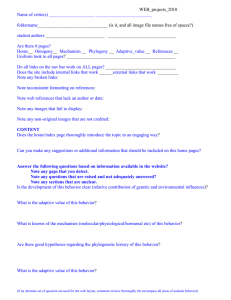

IEEE TRANSACTIONS ON AUTOMATIC CONTROL, VOL. 45, NO. 5, MAY 2000, p. 883

A New Linear Adaptive Controller: Design, Analysis and Performance

Youping Zhang and Petros A. Ioannou

Abstract: The certainty equivalence and polynomial approach, widely used for designing adaptive controllers, leads to “simple” adaptive control designs that guarantee stability, asymptotic error convergence, and robustness, but not necessarily good transient performance. Backstepping and tuning functions techniques, on the other hand, are used to design adaptive controllers that guarantee stability and good transient performance at the expense of a highly nonlinear controller. In this paper, we use elements from both design approaches to develop a new certainty equivalence based adaptive controller by combining a backstepping based control law with a normalized adaptive law. The new adaptive controller guarantees stability and performance, as well as parametric robustness for the nonadaptive controller, that are comparable with the tuning functions scheme, without the use of higher order nonlinearities.

I. INTRODUCTION

THE “certainty equivalence” adaptive linear controllers with normalized adaptive laws have received considerable attention during the last two decades [5], [8], [15]. These controllers are obtained by independently designing a control law that meets the control objective assuming knowledge of all parameters, along with an adaptive law that generates on-line parameter estimates that are used to replace the unknowns in the control law. The normalized adaptive law could be a gradient or least squares algorithm [5], [8], [15]. The control law is usually based on polynomial equalities resulting from a model reference or pole assignment objective based on linear systems theory. An important feature of this class of adaptive controllers is the use of error normalization, which allows the complete separation of the adaptive and control laws design.
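The role of error normalization in an adaptive law of this kind can be illustrated with a minimal sketch. It assumes a static linear regression model z = theta_true . phi and the common normalizing signal m^2 = 1 + phi . phi; the function names, the gain `gamma`, and the regressor used in `run_demo` are hypothetical choices for illustration and are not the adaptive laws designed later in the paper.

```python
import math


def normalized_gradient_step(theta_hat, phi, z, gamma, dt):
    """One Euler step of a normalized gradient adaptive law.

    theta_hat: current parameter estimate (list of floats)
    phi:       regressor at this instant
    z:         measured signal, assumed to satisfy z = theta_true . phi
    gamma:     scalar adaptation gain (hypothetical tuning knob)
    dt:        integration step
    """
    m2 = 1.0 + sum(p * p for p in phi)                  # normalizing signal m^2
    z_hat = sum(t * p for t, p in zip(theta_hat, phi))  # predicted output
    eps = (z - z_hat) / m2                              # normalized estimation error
    new_theta = [t + dt * gamma * eps * p for t, p in zip(theta_hat, phi)]
    return new_theta, eps


def run_demo(steps=20000, dt=1e-3, gamma=10.0):
    """Estimate two constant parameters from a sufficiently rich regressor."""
    theta_true = [2.0, -1.0]   # assumed unknown plant parameters
    theta_hat = [0.0, 0.0]     # initial estimate
    for k in range(steps):
        t = k * dt
        phi = [math.sin(t), math.cos(2.0 * t)]
        z = sum(a * p for a, p in zip(theta_true, phi))
        theta_hat, _ = normalized_gradient_step(theta_hat, phi, z, gamma, dt)
    return theta_true, theta_hat
```

Because the update depends only on `phi`, `z`, and the normalizing signal, the estimator can be designed without reference to any particular control law, which is the separation property mentioned above.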
Since the “normalizing signal” depends on closed-loop signals, the performance bounds are hard to quantify a priori, and there is no systematic way of improving them. In fact, it was shown in [19] that the performance of these controllers can be quite poor. With asymptotic performance characterized in the form of an $\mathcal{L}_2$ or mean-squared-error (MSE) bound, undesirable phenomena such as bursting [6] cannot be prevented. Efforts to improve performance include the use of fixed compensators [18], [1] to suppress the effect of estimation error, and the introduction of “dynamic certainty equivalence,” which avoids direct error normalization by imposing a passive identifier with “higher order tuners” [13], [16], [2]. All these controllers, however, involve an increase of controller order, especially the “dynamic certainty equivalence” controller. Moreover, all these controllers are “direct adaptive controllers,” which directly estimate the controller parameters. This may increase the number of parameters to be estimated relative to the number of actual unknowns, as the controller usually contains more parameters than the plant for higher relative degree plants, and a priori knowledge about the plant parameters is generally not readily usable.

Manuscript received April 7, 1998; revised March 24, 1999 and June 16, 1999. Recommended by Associate Editor, M. Krstic. This work was supported in part by NASA under Grant NAGW-4103 and in part by NSF under Grant ECS-9877193. Y. Zhang is with Numerical Technologies, Inc., San Jose, CA 95134-2134 USA (e-mail: yzhang@numeritech.com). P. A. Ioannou is with the Department of Electrical Engineering-Systems, University of Southern California, Los Angeles, CA 90089-2562 USA (e-mail: ioannou@rcf.usc.edu). Publisher Item Identifier S 0018-9286(00)04158-1.
In [10] and [9], an adaptive control approach that deviates from the traditional certainty equivalence approaches was introduced by employing integrator backstepping, nonlinear damping, and tuning functions, which results in a relatively complex controller structure. The design procedure is performed recursively, and the adaptive law is determined without error normalization. The resulting controller guarantees good transient performance in the ideal case, and the performance bounds can be computed and reduced systematically [12]. The adaptive law estimates the unknown plant parameters directly, allowing full utilization of a priori knowledge and eliminating the possible overparameterization introduced by the traditional “direct” approach [8], [9]. Another remarkable feature of this controller is the parametric robustness when adaptation is switched off [10]. These properties are achieved, however, at the expense of a highly nonlinear controller, even though the plant is linear. Higher order nonlinear terms can potentially lead to difficulty in proving robustness in the presence of high-frequency unmodeled dynamics, especially those that lead to fast nonminimum phase zeros and a change in the sign of the high-frequency gain [22]. In such a case, the tuning functions design can only guarantee local stability when these “undesirable” unmodeled dynamics are present [7]. Moreover, since the tuning functions design is Lyapunov-based, it excludes the flexibility of choosing among different types of adaptive laws.

In this paper, we use elements from both design approaches to develop a new certainty equivalence adaptive controller by combining a backstepping control law with a normalized adaptive law. All other conditions being equal, the new controller guarantees performance that is comparable with the tuning functions adaptive backstepping controllers of [10] and [9], without introducing higher order nonlinear terms, extra parameter estimates, or increasing controller order.
Despite the presence of a normalizing signal, the performance bounds are computable and can be reduced systematically via an appropriate choice of design parameters. Similar parametric robustness and performance of the nonadaptive controller as shown in [12] are also achieved.

0018–9286/00$10.00 © 2000 IEEE

The paper is organized as follows: In Section II, we introduce the new design procedure that gives a class of adaptive controllers with various control and adaptive laws and prove stability of the resulting adaptive control system. In Section III, we establish performance bounds and parameter convergence properties and explain how performance can be systematically improved. Section IV shows the parametric robustness and performance when adaptation is switched off. Before concluding the paper in Section VI, we present some simulation results in Section V to demonstrate the properties of the new controller.

Notations: We denote , respectively, as the vector Euclidean norm and matrix Frobenius norm, i.e., For a time function denotes the norm; denote the norms, respectively; and denote the and induced norm, respectively, of a stable proper transfer function Unless specified otherwise, denotes the estimate of and denotes the estimation error. In addition, we denote as an identity matrix, as an zero matrix, and as the zero vector. Finally, we will use the symbol $s$ to denote interchangeably either the Laplace variable in the frequency domain or the operator $d/dt$ in the time domain.

II. ADAPTIVE CONTROL DESIGN

The plant under consideration is the following single-input/single-output (SISO) linear time invariant (LTI) system: (1) with the following standard assumption.

Assumption: The plant parameters are unknown constants; an upper bound for the nominal plant order the plant relative degree and the sign of the high frequency gain are known; the plant is minimum phase, i.e., is Hurwitz.

The control objective is to design an output feedback control law such that all the closed-loop signals are uniformly bounded and the plant output asymptotically tracks a given reference signal with known, bounded derivatives. The adaptive controller is designed based on the certainty equivalence principle. The control law is designed by employing backstepping, while the adaptive law is chosen by using a certain plant parameterization independent of the controller parameters.

A. Filter Design and Plant Parameterization

We introduce the standard filters as used in traditional adaptive controllers [8], [15]: (2) where is a Hurwitz matrix defined by (3) Based on the plant input–output relation (1), we can get a representation of the tracking error in terms of the filter signals as follows: (4) where (5) (6) (7) is the initial condition of the state of the plant (1) in its observer canonical form, and $\mathcal{L}^{-1}$ represents the inverse Laplace transform. Equation (4) gives a standard bilinear parameterization in terms of the unknown parameters for the tracking error The filter structure (2) involves a total of signals, compared with the Kreisselmeier observer filters used in [10] and [9], which involve signals in the -filters and -filters. Though the overall orders are the same for both filter structures, our filter design greatly reduces the complexity of the filter structure, and subsequently the controller structure, by considerably reducing the number of signals involved.

B.
Backstepping Control Law Design To demonstrate the use of backstepping, we ignore the trivial case and assume that relative degree 1) Design Procedure—Initialization: A unique feature of our backstepping design relative to that of [9] is that instead of targeting at the tracking error directly from the first step, as is mostly done for backstepping design [11], we change our control objective to achieve convergence to zero of the estimated tracking error based on (4) (8) are the estimates of In subsequent design where steps, we continue to replace wherever it appears with its estimate Since this is a certainty equivalence design, we treat as if they were the true parameters and consequently do not attempt through “tuning to eliminate the terms containing functions.” Nor do we attempt to apply “nonlinear damping” to achieve independent input-to-state-stability which may lead to higher order nonlinear terms. were the control input, we would choose In view of (8), if so that which achieves the tracking obor Since is not the control input, jective ZHANG AND IOANNOU: A NEW LINEAR ADAPTIVE CONTROLLER: DESIGN, ANALYSIS AND PERFORMANCE becomes the first error signal. The output mismatch and the tracking error are represented in terms of the parameter error as (9) (10) defined, we can proceed With the first error signal to Step 1. For convenience, we introduce the variable Step 1: Using (6), (8), (2), and compute as follows: we (11) where 885 to the term as the “stabilizing damping” which places the dynamics to a desired one. On the other hand, the parameter terms, which we refer to as the “normalizing dependent damping,” is used to normalize the effect of the uncanceled and terms. We will explain what this normalization means later in the section. The two types of damping carry different missions and will be used over and over again in the following steps. is not the control input, the design does not stop As becomes the second error here. 
The difference signal, and the error dynamic equation for is (20) follow a unified proceThe subsequent steps 2 through dure which can be performed recursively as follows. : The following design steps will be Step 2: we described in a recursive manner. After finishing step stabilizing functions will have obtained (21) (12) (13) Differentiating and substituting in (11), we get (14) where is an vector of continuously where The stabilizing functions are so differentiable functions of chosen that the dynamics of the th error system is (22) are continuously where differentiable with respect to To proceed to step we differentiate and substitute in (21), (22), and (11). Following some straightforward arrangements, we arrive at (23) where (15) In the known parameter and nonadaptive case, would be zero and would be exponentially decaying. So we would treat as the “virtual” control and design the first stabilizing function to be (16) (24) (25) where (17) (26) (18) (19) bitrary are design constants, and is an arconstant matrix. We refer (27) 886 IEEE TRANSACTIONS ON AUTOMATIC CONTROL, VOL. 45, NO. 5, MAY 2000 and are again design constants. From (23), we see that to stabilize the sub-system, we can select the th stabiwhich results in lizing function in the form of (21) with the error dynamic equation (22) for Now we have finished step and have inductively proven (21), We can proceed to the next step. (22) for Step : In step , the control appears after differentiating By following the derivation of step we can easily reach where is a design constant, which we refer to as the normalizing coefficient, that determines the strength of normalizais described by tion. The normalizing property of (28) As the value of approaches zero, where diminishes. the normalizing property of The advantage of the adaptive law (32) based on bilinear parameterization is that we circumvent the requirement of inverting an estimated parameter by estimating the parameter and its inverse simultaneously. 
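For orientation, a textbook gradient law for a generic bilinear model has the shape sketched below. The notation is assumed for illustration only ($z$, $\phi$, $z_1$, $\rho^*$, $\theta^*$, $\Gamma$, $\gamma$, and $m$ are placeholders rather than the paper's symbols), and the sketch is not claimed to coincide term by term with (32).

```latex
% Generic bilinear parametric model (assumed notation, not the paper's (4)).
\begin{align*}
  z &= \rho^{*}\bigl(\theta^{*\top}\phi + z_{1}\bigr)
      && \text{(unknown scalar } \rho^{*} \text{ multiplies unknown vector } \theta^{*}\text{)} \\
  \xi &= \theta^{\top}\phi + z_{1}, \qquad
  \epsilon = \frac{z - \rho\,\xi}{m^{2}}
      && \text{(normalized estimation error)} \\
  \dot{\theta} &= \Gamma\,\epsilon\,\phi\,\operatorname{sgn}(\rho^{*}), \qquad
  \dot{\rho} = \gamma\,\epsilon\,\xi
      && \text{(gradient updates, } \Gamma = \Gamma^{\top} > 0,\ \gamma > 0\text{)}
\end{align*}
% With \tilde{\theta} = \theta - \theta^{*}, \tilde{\rho} = \rho - \rho^{*}, and
% V = |\rho^{*}|\,\tilde{\theta}^{\top}\Gamma^{-1}\tilde{\theta}/2 + \tilde{\rho}^{2}/(2\gamma),
% these updates give \dot{V} = -\epsilon^{2} m^{2} \le 0.
```

In this generic form only the sign of $\rho^{*}$ is needed, and no estimate is ever inverted, which is the property the paragraph above attributes to the bilinear parameterization.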
In return, it has the drawback of estimating an extra parameter, and it excludes a least square (LS) based adaptive law. 2) Adaptive Law with Linear Parameterization: By way of and at a linear parameterization, we can avoid estimating the same time, which is typically done in most standard “indirect” adaptive control schemes. Specifically, we estimate only one of the two unknowns and obtain the other one by inversion. However, it requires such a parameter estimate to be bounded away from zero, which is achieved with parameter projection if we have additional knowledge on the bound of the true parameter. In particular, we assume the following. Assumption: An upper bound of the value of the high-freis known. quency gain, Alternatively, we can also assume knowledge of a lower which was considered in [20]. The knowledge of bound of means that Hence we can use parameter projection to ensure the estimate of is bounded from below by Then we can invert to obtain The resulting paramis eter error for This way we are able to obtain a linear parameterization from (9) as follows: are functions of defined the same way where Since the control input is now availas (24)–(27) for able for design, we can choose the same way we chose the stabilizing functions to cancel the undesirable terms, i.e., (29) that leads to (30) An alternative control law is to consider the fact that this is the last design step, the control law will be fixed herein and no further differentiation will occur. Hence we are allowed to and as choose the control to cancel well, both of which are available. Specifically, we choose the following control law: (31) where the “normalizing damping” term, due to the elimination and becomes unnecessary, so that of This finishes the control law design. 
we can set We observe that during the intermediate design steps, the sigare absent from the stabilizing functions and nals their positions have been completely replaced by the new variables which is different from the tuning functions design [9]. This is important for the performance results of Section III to be valid. (34) (35) where C. Normalized Adaptive Law 1) Adaptive Law with Bilinear Parameterization: Equation (4) is the standard bilinear equation error model that appears in many adaptive control systems [8], [15], based on which a gradient based adaptive scheme is available that takes the form of [8], [15] (36) we can choose the following update Considering that law for based on the linear parameterization (35): (32) (37) are constant adaptive gains, and is the normalizing signal, which is used to guarantee A typical choice is which has been considered in [20]. Here we employ a slightly different version of the normalizing signal where (33) is the adapwhere tation gain matrix. In the case of a gradient scheme, is constant. In the case of a LS scheme, is determined by the following dynamical equation: (38) ZHANG AND IOANNOU: A NEW LINEAR ADAPTIVE CONTROLLER: DESIGN, ANALYSIS AND PERFORMANCE 887 TABLE I where is a large constant. The projection operator projects the estimate of from the boundary of and is to set when projection of is in effect the operator in the LS scheme. Based on certainty equivalence, our controller design does not involve implementation of in the stabilizing functions. Therefore is not further differentiated, and unlike the tuning functions scheme, differentiability of the projection operator is not an issue. is chosen to be the same as in the The normalizing signal is ensured by parameter projection, bilinear case. Since is described by the normalizing property of (39) 3) The Known High Frequency Gain Case: Going one step further, if we have complete knowledge of the high-frequency then the adaptive law is further simplified. 
That is, we gain and from the list of unknown parameters. The drop both control law is again totally unchanged, except we set whereas the adaptive law is simplified to (40) Proof: We first consider the adaptive law with bilinear parameterization. Consider the Lyapunov function (44) and its derivative along the solution of (37) (45) where the last inequality is a result of completion of squares. is nonincreasing, i.e., Therefore which implies from which (41) follows. Integrating (45) leads to as a direct consequence of error (42). Moreover, together with and normalization. Finally, imply The proof for the adaptive law with linear parameterization is the same by adopting the Lyapunov function for the unknown high-frein quency gain case and the known high-frequency gain case. D. Error System Property The error system equation resulted from the control law (31) can be written in the following compact form: (46) is the adaptation gain where matrix similar to that of the linear parameterization case. The known high-frequency gain case is of particular interest as many performance, passivity, or parametric robustness results are obtained under this assumption [1], [12], [9], [11]. 4) Stability and Performance of Adaptation System: The above adaptive laws (32), (37), (40) guarantee the following stability and performance properties regardless of the stability of the closed-loop control system. Lemma 1 (Performance of Adaptive Law): The adaptive law (32), (37), or (40) for the equation error model (4) guaranSpecifically, the tees that following performance bounds hold: where .. . .. . .. . .. . .. . .. . .. . .. . (47) and (bilinear only) (41) (48) (42) in the case of control law (29), or where (43) and are defined in Table I. (49) in the case of control law (31). The homogenous part of the error state equation (46) is exponentially stable due to the uniformly 888 IEEE TRANSACTIONS ON AUTOMATIC CONTROL, VOL. 45, NO. 
5, MAY 2000 negative definite (or screw symmetric) The terms and due to the output mismatch and the adaptation, now act as perturbations to the -system. with completion of Using (46), and the definition of squares, we evaluate the derivative of Essentially, the terms perform similar tasks as the terms. we are able to eliminate the deBy appropriately choosing with respect to the design parameters and the pendency of parameter estimates. We defer the discussion on this to the performance analysis section. Proceeding from (50), we have (56) where (50) (57) in the case of control law (31) and in where the case of control law (29). We now consider the last term of (50) for different adaptive laws. The Bilinear Parameterization Case: The adaptive law (32) can be expressed in the form of Integrating (56) gives (51) from which we get (58) Further integrating the first inequality of (58) gives (52) where in obtaining the constant we have used the inequalities (53) (59) and and Inequalities (58) and (59) directly relate the norms of the backstepping error with the norm of the through a simple relation parameter estimation error and where the effect of design parameters is clear: if is independent of the design parameters and the parameter estimates, then there exist upper bounds of the quantities that are computable a priori and only decrease with increasing Linear Parameterization Case: Notice that the equivalent can be derived as adaptive law for The adaptive law (37) can be rewritten as E. Stability Analysis (54) Therefore (52) holds true for the linear parameterization case as well. Known High frequency Gain Case: This is only a special in case of the above two cases. Here we simply set So we have (52) with (55) is independent of the filter paIt is interesting to notice that rameter vector as compared to that defined in (53). We are now in the position to state and prove the stability properties of the proposed adaptive controller. 
Theorem 2 (Stability and Asymptotic Tracking): Consider the LTI plant (1). The adaptive controller with either control law (31) or (29), and any of the adaptive law (32), (37), or (40) guarantees uniform boundedness of all closed-loop signals and asymptotic convergence of the tracking error to zero. Proof: We prove this theorem by first showing uniform The idea is to conboundedness of the normalizing signal namely and and establish sider the two components of involving the normalized estimation error an inequality for that is suitable for applying the Bellman–Gronwall lemma. ZHANG AND IOANNOU: A NEW LINEAR ADAPTIVE CONTROLLER: DESIGN, ANALYSIS AND PERFORMANCE The partial regressor can be written in the following transfer function form: where 889 are defined by (68) (60) is the strictly proper transfer function matrix from where to Substituting in (10) and applying Lemma 6 (61) In view of (61), (59), and (58), we have (62) where (63) (64) Therefore the normalizing signal equality satisfies the integral in- Therefore the inequalities similar to (58) and (59) still hold. do not provide much insight, beHowever, the quantities cause they now depend on the closed-loop behavior, including the parameter estimates as well as all the design parameters, in a complex manner, and we are no longer able to predict what will as the parameter estimates happen to are updated or as the design parameters are changed. Remark 2: Using the “normalizing damping” instead of “nonlinear damping,” we manage to preserve the linearity of control law (31) or (29). Our adaptive controller design guaranregardless of the boundedness of tees the closed-loop signals. 
Hence all the terms in (31) or (29) are [in the sense that ], which con“linear” in tains all the closed-loop states, including and This assures that the control signal can be bounded by the normalizing signal i.e., Such preservation of linearity is crucial for proving stability when error normalization is used as it allows us to arrive at an integral inequality in terms of that is suitable for applying the Bellman–Gronwall lemma. Such “linearity” of control law is also crucial for robustness with respect to high-frequency unmodeled dynamics [8], [22]. III. PERFORMANCE AND PARAMETER CONVERGENCE (65) Applying the Bellman–Gronwall lemma, we obtain In this section, we study the performance of the new adaptive control system with or without persistent excitation (PE). A. Transient Performance (66) so Since all adaptive laws guarantee Boundedness of implies by (66) Bounded together with bounded and its derivatives imply bounded and therefore bounded which together with Finally, inspecting (10), (31), or bounded implies (29), we easily see that all components are uniformly bounded, and Therefore all the so also closed-loop signals are uniformly bounded. Bounded In view of (59), we immediately implies that which together with imply Since have by Barbalat’s lemma, we or as which completes conclude that the proof. Remark 1: The stability analysis depends heavily on (56) in It is, however, not necessary for which we assume to be nonzero to guarantee stability. Suppose we set then instead of (56), we have an equivalent inequality For traditional adaptive linear controllers with normalized adaptive law, the unnormalized performance bounds are usually proportional to the size of the normalizing signal which may grow as design parameters are tuned toward improving performance of the normalized performance bounds. Consequently, these adaptive controllers lack computable performance that can be systematically improved. 
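The dependence of unnormalized bounds on the normalizing signal can be made concrete with a one-line estimate. The symbols are assumptions for illustration: $\epsilon$ is a normalized estimation error, $m$ is the normalizing signal, and $\epsilon m^{2}$ is the corresponding unnormalized error.

```latex
% Elementary estimate relating normalized and unnormalized error energy.
\[
  \bigl\| \epsilon m^{2} \bigr\|_{2}^{2}
  = \int_{0}^{\infty} (\epsilon m)^{2}\, m^{2}\, dt
  \;\le\; \Bigl( \sup_{t \ge 0} m^{2}(t) \Bigr)\, \bigl\| \epsilon m \bigr\|_{2}^{2}.
\]
```

A bound on $\|\epsilon m\|_{2}$ therefore turns into a bound on the unnormalized error only once $\sup_{t} m(t)$ is itself bounded, and the result is useful for design only if that supremum does not grow as the design parameters are tuned.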
For the proposed adaptive controller, however, an upper bound of the normalizing signal can be determined that does not grow as the design parameters tune to reduce the normalized performance bounds. 1) Filter Polynomial Design: Let where is a free constant that determine the role of in is defined the performance, and the Hurwitz polynomial by (69) (70) Hence (67) (71) 890 IEEE TRANSACTIONS ON AUTOMATIC CONTROL, VOL. 45, NO. 5, MAY 2000 The relationship between and applying the Parseval’s theorem can be determined by Bilinear Parameterization Case: Consider defined in (53). Notice that is a constant matrix, so if we choose then (77) (72) which Using (72) in (43), we obtain depends only on the plant and the initial conditions, provided to be a constant. Moreover, is we maintain That is, inmonotonically decreasing with respect to leads to decrease in creasing without changing On the other hand, assume we have the decomposition of the biproper transfer function where is a constant, is a polynomial both independent of Then of degree $ $ $ $ which is independent of and only decreases as increases or decreases. Linear Parameterization Case: In the linear parameterization case, the matrix defined by (54) is not constant, therefore We note that we cannot directly choose (78) Let matrix and consider the (79) (73) represents time domain convolution. As is where independent of Hurwitz, there exists constant such that $ Let then Since and the determinant of is (80) the Hermitian matrix is nonnegative definite. Therefore (81) (74) For the gradient adaptive law, so that choose are constant, so we can are independent of (72), (74) imply Since the constants that the effect of nonzero initial conditions on the plant in the and norms can be arbitrarily reduced sense of both the by increasing Next we decomposed to (82) (75) because the coFor the LS scheme, variance matrix does not increase. Hence, which suggests the choice of In either case, we have where is now biproper. 
The are bounded by induced norms of (83) (76) which monotonically decrease to zero as goes to since is independent of 2) Normalizing Damping: In the controller design, we state that the matrix can be arbitrary. While we may arbitrarily choose without losing stability, appropriate choice of bears critical importance for performance. In particular, we need to does not increase as or choose such that the size of increases or decreases. which is again independent of and strictly decreasing with Practically, since the adaptation gain can be respect to or very large, the resulting can also be very large. Therefore should be chosen small to avoid extremely high gain. Known High-Frequency Gain Case: In this special case, defined in (55) does not involve the filter coefficients the hence we can choose where the last two rows and and are columns of associated with the adaptation of zeros, and From the above analysis, the choice of the damping coefficients, namely do not depend explicitly on any state variables. Therefore these “normalizing damping” terms are not ZHANG AND IOANNOU: A NEW LINEAR ADAPTIVE CONTROLLER: DESIGN, ANALYSIS AND PERFORMANCE aimed at, as the “nonlinear damping”s of [11] are, damping out the effect of these perturbation terms by way of nonlinear gains. In contrast, the purpose of these coefficients is to normalize the terms to eliminate the parameter depencoefficients of the normalizes the dency of these coefficients. The coefficient term, and normalizes the parameter coefficients of the term. dependencies of the 3) Performance: Lemma 1 gives the performance bounds on the normalized estimation error These normalized performance bounds are translated to their unnormalized versions via From (66), we obtain the bound of the normalizing signal (84) then from (63), (64), we can see that is a decreasing function of is a nonincreasing function of Therefore is noninand independent of creasing with respect to Using we have the following performance properties. 
Theorem 3 (Performance): Consider the LTI plant (1). The adaptive controller with control law (31) or (29), and adapnorm of the tive law (32), (37), or (40), guarantees that the tracking error satisfies the following inequality: If (85) in the case of adaptive law (32), in the case of adaptive law (37), and in the case of adaptive law (40). If in addition the plant highis known, then the adaptive controller with frequency gain control law (31) or (29), and adaptive law (40) guarantees the bound for the tracking error following where (86) Proof: The proof of (85) follows by substituting (42), (59), (58) into the following inequality: (87) performance (86) is and plugging in the bound of (42). The shown by applying (74) and (76) to the following inequalities: (88) and plugging in the bounds (41) and (42) developed in Lemma 1, and the inequality which is a direct . consequence of the normalizing property of 891 The performance is only available when the high-frequency gain is known. We recall that the tuning functions scheme has the same limitation [12]. Let us now discuss the roles of various design parameters in performance improvement. 1) Reference signal and filter initialization: As we see from the definitions of the constants involved in the performay mance bounds, the nonzero initial condition of However, by be an increasing function of simply zeroing the initial condition of the reference model then regardless of the initial so that condition of the plant. This greatly simplifies the complex filter initialization process that is required for the tuning functions design [9]. Alternatively, for nonzero we can choose which also leads a similar process as the tuning functions deto sign. The effect of however, cannot be eliminated due to the unknown plant parameters. This is one drawback compared with the tuning functions design. 2) Adaptation gain : Large adaptive gain which attenuates the effect of initial parameter error is vital for good performance. 
This means that fast adaptation is needed to minimize the energy of the tracking error caused by the initial parameter error. Meanwhile, the condition number of the adaptation gain matrix needs to be kept small (e.g., a constant adaptation gain).

3) Filter parameter : A large is critical for both the and performance in two senses. First, for nonzero plant initial condition, in order to guarantee performance, we must fix the ratio of so that when we increase we proportionally increase Otherwise, increasing with fixed could lead to increasing due to nonzero initial condition, which in turn results in increasing This is, however, not necessary when the plant initial condition is zero such that Second, suppose we fix but increase alone. Though the effect on is limited, it has a deterministic effect on the improvement of the performance shown in (86). Particularly when we increase the value of correspondingly, the size of can be arbitrarily reduced.

4) Damping coefficients : The role of is to attenuate the or tracking error due to the backstepping error We have shown that are not necessary for stability. However, as appear in the denominator in all the performance bounds, it is essential that are chosen nonzero to achieve the performance bounds (85), (86). Moreover, increasing can lead to improvement in performance, especially the performance, as evident from (86).

5) Normalizing coefficient : Decreasing leads to weaker normalization, which renders smaller normalizing signals and hence improved performance. As which is the unnormalized case.

We point out that if we had not used the normalizing damping terms then we would not have been able to find an upper bound for the normalizing signal that is as straightforward as the one shown in Lemma 2. Not only are we unable to predict the possible effect on as we tune the design parameters, but we are also unable to establish performance bounds even for the normalized tracking error due to the complex relation of the backstepping error and the parameter estimation error We notice that hence large or small implies stronger “normalizing damping,” and consequently, large feedback gain.

It is interesting to see from the first term of (86) that though may result in good performance, it is not required. In essence, large leads to a fast filter, which increases the controller bandwidth and feedback gain. A large will result in a small estimation error for all time, and therefore undermines the adaptation process, leading to slower adaptation. So fast adaptation can be counteracted by high gain feedback. This point is manifested in the next section where adaptation is completely switched off.

We also observe that the tracking error differs from the error signal used for backstepping by the estimation error This mismatch, however, does not prevent us from establishing performance for the tracking error as the effect of can be reduced by employing fast adaptation and fast filter.

4) Performance Comparison with Tuning Functions Design: To compare the performance of the new controller with the tuning functions controller, we consider the performance bounds derived in [12] with the conditions shown as follows: (89) (90) where are constants determined by the chosen filter and the initial conditions, which, however, cannot be systematically decreased with the filter design. We observe that increasing can lead to improvement in both and performance, and increasing can result in further improvement in performance only. Increasing the adaptation gain may help to further improve performance through, for example, limited reduction in Detailed discussion on the role of adaptation can be found in [12].
However, it is unclear how the filter design can play a role in further performance improvement. We point out that the new controller can achieve the same level of performance as promised by the tuning functions design, though the way they achieve such performance is different, i.e., the parameters used to shape the performance are different. Table II compares the two approaches. In the table, the words “primary” and “secondary” refer to irreplaceable and replaceable roles, respectively, in achieving arbitrary reduction in performance bounds, whereas “limited” refers to limited reduction in performance bounds.

TABLE II. COMPARISON OF NEW ADAPTIVE CONTROL DESIGN AND THE TUNING FUNCTIONS DESIGN

Another difference is the process of filter initialization. The tuning functions scheme employs a complex signal tracking process for filter initialization to achieve complete elimination of the effect of nonzero initial conditions. With our controller, the initial condition of is automatically set to zero if we use zero initial condition for all filters and the reference model. However, we are unable to initialize the filters so that the effect of nonzero initial conditions of the plant can be completely eliminated from the tracking error. This is caused by the fact that the tuning functions design starts with the tracking error, while the new controller design starts with the “estimated” tracking error. This effect, though, decays exponentially to zero and can be made to die out arbitrarily fast with large initial control effort. When the plant has nonzero initial condition, our controller may experience high initial control effort due to high gain, whereas the tuning functions design may not require large control effort due to “nonlinear damping.”

B. Parameter Convergence

Finally, we establish the parameter convergence property of the new adaptive controller.

Theorem 4 (Parameter Convergence): Assume that the two plant polynomials and in (1) are coprime. Consider the adaptive controller with the control law (31) or (29). We have the following parameter convergence properties.

1) Known high-frequency gain with adaptive law (40). If the reference signal is sufficiently rich (SR) of order then the parameter error vector as well as the error signals converge to zero exponentially.

2) Known upper bound of high-frequency gain with adaptive law (37). If the reference signal is SR of order then the parameter error vector as well as the error signals converge to zero exponentially.

3) Unknown high-frequency gain with adaptive law (32). The parameter estimate converges asymptotically to a fixed constant (not necessarily equal to the true value ). If in addition the reference signal is SR of order then the parameter error converges asymptotically to Furthermore, if then for sufficiently large the convergence rate for is exponential.

Proof: We prove only the last case. The first two cases follow closely. We have shown that hence which implies that converges to a fixed constant not necessarily equal to Next we consider the regressor vector which we express as (91) It is straightforward to verify that the transfer functions are linearly independent. Hence when the reference signal is SR of order in the first term of in (91) is PE. The second term is an LTI operator applied to an signal, which is again So is PE [8]. With this PE condition, we first show asymptotic convergence of the parameter estimates. From the adaptive law (32), we have (92) Let then Hence when is PE, so is So the homogeneous system of (92) is exponentially stable [15], [8], i.e., is an exponentially stable time varying matrix. Since the last term in (92) converges to zero due to therefore as Now let us first consider the equation. We redefine the constant to be where is a constant to be determined.
Also for convenience of notation, we introduce Then instead of (56), we have (93) where we have used the fact that From (92), we get (94) Let be a positive constant such that where is an arbitrary constant, then (95) Since there exists such that If satisfies then Obviously Noticing that we have (96) Since exponentially, (96) implies that exponentially, which in turn implies that exponentially fast. Since is arbitrary, the condition on is satisfied if we choose to satisfy (97) Since neither nor nor is a growing function of therefore (97) can always be satisfied by design. This means that the closed loop is exponentially stable, which is stronger than the asymptotic stability that is obtained in traditional overparameterized adaptive system.

As a consequence of the backstepping design, the new adaptive controller also possesses stronger parameter convergence property as it can guarantee exponential convergence, as compared with traditional linear adaptive schemes with bilinear parameterization, which has at most asymptotic convergence.

IV. PARAMETRIC ROBUSTNESS AND NONADAPTIVE PERFORMANCE

For the nonadaptive controller, Hence (46) becomes (98) which defines an LTI relationship between and (99) where is an vector, is the exponentially decaying to zero term due to possible nonzero initial condition of According to (58), and satisfy (59), where (100) The quantity (99) can be rewritten as (101) Next let us consider the quantity Define (102) is a stable proper LTI transfer function which is independent of the design parameters and (103) where we have used the fact that Substituting (101) and (103) into (10) results in (104) Solving (104) for we get (105) where (106) (107) (108) are the closed-loop sensitivity functions with respect to respectively. Since are stable, according to the Small Gain theorem [3], the closed-loop system described by (105) is stable if (109) where (110) and in reaching (109) we have used the fact that

It is now obvious that if i.e., the uncertainty on the high frequency gain is strictly smaller than the value of the high frequency gain itself, then by choosing and such that (111) (112) (109) will be satisfied, and closed-loop stability will be guaranteed. As a special case, when the high-frequency gain is known precisely so that then (109) degenerates to which can be satisfied simply by choosing the filter to be sufficiently fast.

Note that when then we are only able to obtain the MSE performance defined as (113) This means that the tracking error will not converge to zero asymptotically. However, if then and asymptotic tracking can be achieved. These nonadaptive properties are summarized by the following theorem.

Theorem 5 (Parametric Robustness): The nonadaptive controller (31) or (29) applied to the LTI plant (1) guarantees that for any given parameter error except for the high-frequency gain which satisfies if satisfy the inequalities (111) and (112), then all the closed-loop signals are uniformly bounded, and the tracking error satisfies the following performance bounds, as shown in (114)–(116), where is an exponentially decaying to zero term. (114) (115) (116)

Proof: The results can be shown by applying Lemma 6 and the following inequalities to (105): (117) (118) (119) and using (100) and

As in the adaptive case, the performance bounds given in (114) and (115) or (116) can be improved by increasing the value of , or But the achievable performance is limited by how small the parameter error is. The case where the high-frequency gain is known exactly so that is a special case considered in [10], [12], in which parametric robustness and nonadaptive performance are established.
Under this assumption, and with zero initial condition, the performance bounds can be further simplified to allow systematic improvement. This can be easily seen from (114) and (115) by setting Specifically, both the MSE (or ) and the performance can be arbitrarily improved by increasing the value of i.e., using faster filters.

V. NUMERICAL EXAMPLE

In this section, we use a numerical example to illustrate the new adaptive controller and the effect of different parameters on the closed loop performance. Consider the following plant transfer function: (120) where is known but are considered unknown. The control objective is to make the closed loop follow the reference model (121) All the initial conditions are set to zero, and we consider only the control law (29) with the gradient adaptive law where The filter polynomial is chosen to be We note that the reference signal is SR of order and we are estimating parameters. Therefore we expect the parameter estimates to converge to their true values. The baseline set of parameters is chosen to be The results are shown in Fig. 1. A large transient is observed, and the tracking error settles in about 20 s when the parameter estimates settle.

For comparison of performance, we consider different parameter settings. In the first case, reducing the strength of normalization ten times improves the transient overshoot by more than 10 times (Fig. 2). In the second case, increasing the adaptation gain ten times results in even more dramatic reduction in transient overshoot (more than 25 times), and the speed of parameter convergence is also faster. In the meantime, the tracking error settles in about 15 s, showing an improvement of performance. In the third case, we retain the original adaptation gain and normalization coefficient but increase the value of to 10, 20, 1, respectively. From Fig. 2 we can see that the transient overshoot is restrained to nearly 0.007, more than 50 times as good as the previous case.
However, the convergence is very slow: continued simulation shows that it takes over 3000 s to achieve a ten times lower magnitude of the tracking error. This verifies that faster filter and larger damping coefficients undermine the role of adaptation dramatically. If we switch off the adaptation, then the first two cases result in instability, whereas with the third case, stability is maintained, but performance is not as good as with the adaptive case (Fig. 2). Moreover, the tracking error does not tend to zero anymore. This demonstrates the parametric robustness and nonadaptive performance of the new controller.

Finally, we simulate a case where we estimate and by applying a bilinear parameter estimator. For no particular reason, the parameters are chosen to be As Fig. 3 shows, both the tracking error and the parameter errors converge quickly after a brief transient overshoot. Since performance is not guaranteed, we cannot get rid of the spike. However, by increasing the adaptation gain we can reduce the settling time which represents partially the performance.

Fig. 1. Controller performance for baseline parameter settings ( = 1; = 1; c = c = 1; d = d = 0.1; % = 1).

Fig. 2. Systematic performance improvement via parameter settings.

VI. CONCLUSION

In this paper, we propose a new linear adaptive design approach. The new controller combines a backstepping control law with a traditional equation error model based adaptive law via certainty equivalence. The resulting adaptive controller possesses nice properties of both the traditional certainty equivalence adaptive controllers and the tuning functions adaptive backstepping controller: linearity, separation of control and adaptive law design, freedom of choice of adaptive law, direct estimation of plant parameters, use of error normalization, computable performance bounds, parametric robustness, and least possible controller order.
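To make the idea of a normalized adaptive law concrete, the following is a minimal sketch and not the paper's adaptive law (32): it is the textbook normalized gradient estimator (of the kind discussed in [8]) applied to a hypothetical two-parameter static model z = theta_true^T phi. The regressor phi(t) = (sin t, cos t), the adaptation gain `gamma`, the normalizing coefficient `kappa`, and the forward-Euler step `dt` are all assumptions chosen for illustration only.

```python
import math


def normalized_gradient_estimate(theta_true, gamma=4.0, kappa=1.0,
                                 dt=1e-3, t_end=20.0):
    """Forward-Euler simulation of a normalized gradient estimator.

    Hypothetical setup (not taken from the paper):
      measurement      z     = theta_true^T phi(t)
      regressor        phi   = (sin t, cos t), persistently exciting
      normalization    m^2   = 1 + kappa * phi^T phi
      adaptive law     d(theta)/dt = gamma * eps * phi,
                       eps   = (z - theta^T phi) / m^2
    """
    theta = [0.0, 0.0]  # initial parameter estimate
    steps = int(round(t_end / dt))
    for k in range(steps):
        t = k * dt
        phi = (math.sin(t), math.cos(t))
        z = theta_true[0] * phi[0] + theta_true[1] * phi[1]
        z_hat = theta[0] * phi[0] + theta[1] * phi[1]
        m2 = 1.0 + kappa * (phi[0] ** 2 + phi[1] ** 2)
        eps = (z - z_hat) / m2  # normalized estimation error
        theta[0] += dt * gamma * eps * phi[0]
        theta[1] += dt * gamma * eps * phi[1]
    return theta


if __name__ == "__main__":
    print(normalized_gradient_estimate((2.0, -1.0)))
```

Because the assumed regressor is persistently exciting, the estimate in this toy setting approaches the true parameter vector. In this sketch the normalization only rescales the effective gain to `gamma / (1 + kappa)`, since the regressor has unit norm.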
A significant contribution of this paper is that we can establish performance while preserving linearity of the control law and error normalization in the adaptive law. The use of error normalization has been responsible for the difficulty in quantifying performance for traditional certainty equivalence adaptive linear controllers. Consequently, performance improvements are mostly obtained by way of eliminating normalization [16], [2], [12]. For the new controller, with the use of “normalizing damping” in the backstepping design, we eliminate the dependency of the size of the normalizing signal on the design constants or parameter estimates, leading to an upper bound of the normalizing signal that can be computed a priori. This ensures the performance of the unnormalized error signals once performance of the normalized error signals is established. This is accomplished without introducing an additional dynamic compensator as in [18] and [1].

Fig. 3. Performance of adaptive controller with unknown high frequency gain ( = 10; = 2; c = ; d = 0.01; % = 0.5).

Error normalization was initially introduced in [4] to obtain a stable parameter estimator independent of the closed-loop stability to achieve controller–estimator separation. Later it was shown that such “static” error normalization contributes to the robustness of the resulting adaptive control system [14]. A stronger form of normalization is the “dynamic” normalization introduced in [17] and later developed in [8], which further strengthens the robustness of the adaptive controller. Since the new controller preserves the two key properties, linearity and error normalization, we expect the same level of robustness for the new controller as for the traditional certainty equivalence adaptive controller described in [8], namely the ability to guarantee global signal boundedness in the presence of input unmodeled dynamics that are only required to be small in the low frequency range.
In [21], such a robustness property was established for a similar adaptive controller using a simple modification to the adaptive law with parameter projection and dynamic normalization. Following [14], it is also possible to show robustness of the new controller with parameter projection only. With the tuning functions design, however, due to nonlinearity, it is unclear how to modify the controller design to achieve this level of robustness, or even whether it is possible, as controllers with higher order nonlinear terms are generally unable to guarantee robustness with high frequency unmodeled dynamics in the global sense [22].

APPENDIX
INPUT–OUTPUT STABILITY

Lemma 6 [3], [8]: Let where is an th order stable proper transfer function. Then the following inequalities hold: (122) If in addition, is strictly proper, then (123) and (124)

REFERENCES

[1] A. Datta and P. A. Ioannou, “Performance analysis and improvement in model reference adaptive control,” IEEE Trans. Automat. Contr., vol. 39, pp. 2370–2387, 1994.
[2] A. Datta and P. A. Ioannou, “Directly computable L2 and L∞ performance bounds for Morse’s dynamic certainty equivalence adaptive controller,” Int. J. Adaptive Contr. Signal Processing, vol. 9, pp. 423–432, 1995.
[3] C. A. Desoer and M. Vidyasagar, Feedback Systems: Input–Output Properties. New York: Academic, 1975.
[4] B. Egardt, “Stability of adaptive controllers,” in Lecture Notes in Control and Information Science. Berlin, Germany: Springer-Verlag, 1979, vol. 20.
[5] G. C. Goodwin and K. C. Sin, Adaptive Filtering Prediction and Control. Englewood Cliffs, NJ: Prentice-Hall, 1984.
[6] L. Hsu and R. Costa, “Bursting phenomena in continuous-time adaptive control systems with σ-modification,” IEEE Trans. Automat. Contr., vol. AC-32, pp. 84–86, 1987.
[7] F. Ikhouane and M. Krstić, “Robustness of the tuning functions adaptive backstepping design for linear systems,” IEEE Trans. Automat. Contr., vol. 43, pp. 431–437, 1998.
[8] P. Ioannou and J. Sun, Robust Adaptive Control. Englewood Cliffs, NJ: Prentice-Hall, 1996.
[9] M. Krstić, I. Kanellakopoulos, and P. V. Kokotović, “Nonlinear design of adaptive controllers for linear systems,” IEEE Trans. Automat. Contr., vol. 39, pp. 738–752, 1994.
[10] M. Krstić, I. Kanellakopoulos, and P. V. Kokotović, “Passivity and parametric robustness of a new class of adaptive systems,” Automatica, vol. 30, pp. 1703–1716, 1994.
[11] M. Krstić, I. Kanellakopoulos, and P. V. Kokotović, Nonlinear and Adaptive Control Design. New York: Wiley, 1995.
[12] M. Krstić, P. V. Kokotović, and I. Kanellakopoulos, “Transient performance improvement with a new class of adaptive controllers,” Syst. Contr. Lett., vol. 21, pp. 451–461, 1993.
[13] A. S. Morse, “High-order parameter tuners for the adaptive control of nonlinear systems,” in Proc. US-Italy Joint Seminar Syst., Models Feedback Theorem Appl., Capri, Italy, June 1992.
[14] S. M. Naik, P. R. Kumar, and B. E. Ydstie, “Robust continuous-time adaptive control by parameter projection,” IEEE Trans. Automat. Contr., vol. 37, pp. 182–197, 1992.
[15] K. S. Narendra and A. M. Annaswamy, Stable Adaptive Systems. Englewood Cliffs, NJ: Prentice-Hall, 1989.
[16] R. Ortega, “On Morse’s new adaptive controller: Parameter convergence and transient performance,” IEEE Trans. Automat. Contr., vol. 38, pp. 1191–1202, 1993.
[17] L. Praly, “Robustness of model reference adaptive control,” in Proc. III Yale Workshop Adaptive Syst., 1983, pp. 224–226.
[18] J. Sun, “A modified model reference adaptive control scheme for improved transient performance,” IEEE Trans. Automat. Contr., vol. 38, pp. 1255–1259, 1993.
[19] Z. Zang and R. Bitmead, “Transient bounds for adaptive control systems,” in Proc. 30th IEEE Conf. Decision Contr., 1990, pp. 171–175.
[20] Y. Zhang, “Stability and performance of a new linear adaptive backstepping controller,” in Proc. IEEE Conf. Decision Contr., San Diego, CA, Dec. 1997, pp. 3028–3033.
[21] Y. Zhang and P. Ioannou, “Linear robust adaptive control design using nonlinear approach,” in Proc. 1996 IFAC Symp., vol. K, San Francisco, CA, 1996, pp. 223–228.
[22] Y. Zhang and P. Ioannou, “Robustness of nonlinear control systems with respect to unmodeled dynamics,” IEEE Trans. Automat. Contr., vol. 44, pp. 119–124, 1999.

Youping Zhang received the Bachelor’s degree in 1992 from the University of Science and Technology of China, Hefei, Anhui, PRC, and the M.S. and Ph.D. degrees in electrical engineering from the University of Southern California, Los Angeles, CA, in 1994 and 1996, respectively. From 1996 to 1999, he was an Associate Research Engineer with the United Technologies Research Center in East Hartford, CT. He joined Numerical Technologies, Inc. in San Jose, CA, in July 1999, where he is currently a Senior Engineer. His research interests are in the areas of intelligent control systems, optimizations, and computer aided designs. Dr. Zhang is a member of IEEE, Phi Kappa Phi, and Eta Kappa Nu.

Petros A. Ioannou received the B.Sc. degree with First Class Honors from University College, London, England, in 1978 and the M.S. and Ph.D. degrees from the University of Illinois, Urbana, IL, in 1980 and 1982, respectively. Dr. Ioannou joined the Department of Electrical Engineering-Systems, University of Southern California, Los Angeles, CA in 1982. He is currently a Professor in the same Department and the Director of the Center of Advanced Transportation Technologies. He held positions as a Professor at the Department of Mathematics and Statistics and Dean of the School of Pure and Applied Sciences at the University of Cyprus and as Visiting Professor at the Technical University of Crete. His research interests are in the areas of dynamics and control, adaptive control, neural networks, intelligent transportation systems, computer networks and control applications.
In 1984, he was a recipient of the Outstanding Transactions Paper Award for his paper, An Asymptotic Error Analysis of Identifiers and Adaptive Observers in the Presence of Parasitics, which appeared in the IEEE TRANSACTIONS ON AUTOMATIC CONTROL in August 1982. Dr. Ioannou is also the recipient of a 1985 Presidential Young Investigator Award for his research in Adaptive Control. He has been an Associate Editor for the IEEE TRANSACTIONS ON AUTOMATIC CONTROL, the International Journal of Control and Automatica. Currently he is an Associate Editor at Large of the IEEE TRANSACTIONS ON AUTOMATIC CONTROL and an Associate Editor of the IEEE TRANSACTIONS ON INTELLIGENT TRANSPORTATION SYSTEMS. Dr. Ioannou is a fellow of IEEE, a Member of the AVCS Committee of ITS America and a Control System Society Member on IEEE TAB Committee on ITS. He is the author/co-author of 1 patent, 5 books, and more than 300 research papers in the area of controls, transportation, and neural networks. He served as a Technical Consultant with Lockheed, Ford Motor Company, Rockwell International, General Motors, ACORN Technologies and other companies dealing with design, implementation and testing of control systems.