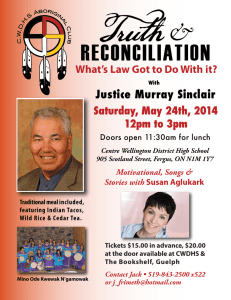

NEUROREPORT

AUDITORY AND VESTIBULAR SYSTEMS

Sound localization in the human brain: neuromagnetic observations

Kalle Palomäki,CA Paavo Alku, Ville Mäkinen,1,3 Patrick May2 and Hannu Tiitinen1,3

1 Laboratory of Acoustics and Audio Signal Processing, Helsinki University of Technology, PO Box 3000, Fin-02015 TKK; Cognitive Brain Research Unit and 2 Department of Psychology, University of Helsinki; 3 BioMag Laboratory, Medical Engineering Centre, Helsinki University Central Hospital, Finland

CA Corresponding Author

Received 15 February 2000; accepted 1 March 2000

Acknowledgements: This study was supported by the Academy of Finland.

Sound location processing in the human auditory cortex was studied with magnetoencephalography (MEG) by producing spatial stimuli using a modern stimulus generation methodology utilizing head-related transfer functions (HRTFs). The stimulus set comprised wideband noise bursts filtered through HRTFs in order to produce natural spatial sounds. Neuromagnetic responses for stimuli representing eight equally spaced sound source directions in the azimuthal plane were measured from 10 subjects. The most prominent response, the cortically generated N1m, was investigated above the left and right hemisphere. We found, firstly, that the HRTF-based stimuli presented from different directions elicited contralaterally prominent N1m responses. Secondly, we found that cortical activity reflecting the processing of spatial sound stimuli was more pronounced in the right than in the left hemisphere. NeuroReport 11:1535–1538 © 2000 Lippincott Williams & Wilkins.

Key words: Auditory; Head-related transfer function; HRTF; Magnetoencephalography; MEG; N1m; Sound localization

INTRODUCTION

Non-invasive measurements utilizing electroencephalography (EEG) and magnetoencephalography (MEG) have recently been used to study how stimulus features are represented in the human brain.
These measurements have successfully revealed how, for example, tone frequency and intensity are encoded in tonotopic [1–3] and amplitopic maps [4], respectively. Invasive animal studies further suggest that auditory cortex contains representations for various dynamic features, such as stimulus periodicity [5] and duration [6].

In addition to their spectral and temporal structure, natural sounds are perceived as having a location in auditory space. Behavioural studies indicate that sound localization in humans is based on the processing of binaural cues from interaural time and level differences (ITD and ILD, respectively) and monaural spectral cues from the filtering effects of the pinna, head and body [7]. The physiological basis of auditory space perception has mainly been studied invasively in animal models. These studies have revealed that the mammalian brain maps three-dimensional sound locations [8], with neurons in auditory cortex exhibiting tuning for stimulus location in auditory space [9]. While the processing of spatial sound in the human brain has remained largely unexplored, event-related potential (ERP) and magnetic field (EMF) measurements of, for example, the N1(m) response (for a review, see [10]) might offer a useful tool for studying sound localization in the human cortex.

0959-4965 © Lippincott Williams & Wilkins

To date, the study of auditory spatial processing in the human brain has relied on two stimulus delivery methods: the use of headphones coupled with ITDs [11] and ILDs [12], and the use of multiple loudspeakers [12–14]. However, both stimulus delivery methods have certain restrictions when addressing how the human brain processes spatial auditory environments. On the one hand, stimuli delivered through headphones are experienced as lateralized between the ears inside the head, rather than being localized outside the head as in natural spatial auditory perception [7].
On the other hand, the use of loudspeakers introduces variables such as room reverberation, which may contaminate response patterns. Furthermore, the use of loudspeakers is impossible with MEG because magnetic components cannot be placed inside the magnetically shielded measurement room.

Recent developments in sound reproduction technology allow the delivery of natural spatial sound through headphones. These developments are based on so-called head-related transfer functions (HRTFs), digital filters capable of reproducing the filtering effects of the pinna, head and body [15]. HRTFs allow one to generate stimuli whose direction angle can be adjusted as desired and to feed them directly to the ear canals using sound delivery systems with no magnetic parts. Thus, HRTFs allow us, for the first time, to use MEG in studying how the human cortex performs sound localization.

NEUROREPORT Vol 11 No 7, 15 May 2000

The cerebral processing of spatial sounds produced with HRTFs has recently been investigated in two studies applying PET, where it was found that blood flow increased in superior parietal and prefrontal areas during spatial auditory tasks [16,17]. These studies, however, left unresolved the role of auditory cortex in sound localization as well as the differences in brain activity elicited by sounds originating from different source direction angles. These issues are addressed in the present study. We focus on the role of auditory cortex in the processing of sounds representing a natural spatial environment by analyzing the magnetic responses evoked by spatial stimuli generated using HRTFs.

MATERIALS AND METHODS

A total of 10 volunteers (nine right-handed, two female, mean age 29 years) served as subjects with informed consent and the approval of the Ethical Committee of Helsinki University Central Hospital (HUCH).

The stimuli for the auditory localization task were produced using HRTFs provided by the University of Wisconsin [15].
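As a rough illustration of what HRTF filtering involves, the sketch below convolves a 50 ms noise burst with a pair of head-related impulse responses, one per ear. It is a minimal sketch and not the stimulus-generation procedure used in the study: the function names, the 44.1 kHz sampling rate and the two toy impulse responses are assumptions made for illustration, and measured HRTF data such as those of [15] would take their place in practice.

```python
import random


def convolve(signal, impulse_response):
    """Full linear convolution, i.e. direct-form FIR filtering."""
    out = [0.0] * (len(signal) + len(impulse_response) - 1)
    for i, s in enumerate(signal):
        for j, h in enumerate(impulse_response):
            out[i + j] += s * h
    return out


def noise_burst(duration_s, sample_rate_hz, seed=0):
    """Gaussian white noise of the requested duration (seeded for repeatability)."""
    rng = random.Random(seed)
    n_samples = int(duration_s * sample_rate_hz)
    return [rng.gauss(0.0, 1.0) for _ in range(n_samples)]


def spatialize(signal, hrir_left, hrir_right):
    """Filter one mono signal with a left-ear and a right-ear impulse response."""
    return convolve(signal, hrir_left), convolve(signal, hrir_right)


# Toy impulse responses (hypothetical, NOT measured HRTF data).
# For a source on the right, the right (near) ear receives the sound
# unchanged, while the left (far) ear receives it delayed by three
# samples and attenuated by half.
TOY_HRIR_NEAR_EAR = [1.0]
TOY_HRIR_FAR_EAR = [0.0, 0.0, 0.0, 0.5]

SAMPLE_RATE_HZ = 44100  # assumed; the sampling rate is not stated in the text

burst = noise_burst(0.050, SAMPLE_RATE_HZ)  # 50 ms burst
left, right = spatialize(burst, TOY_HRIR_FAR_EAR, TOY_HRIR_NEAR_EAR)
```

Because each ear receives its own filtered copy of the same burst, the output pair carries whatever interaural time and level differences and spectral shaping are encoded in the two impulse responses.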
The stimuli consisted of eight HRTF-filtered noise sequences (bandwidth 11 kHz, stimulus duration 50 ms, onset-to-onset interstimulus interval 800 ms) corresponding to eight azimuthal directions (0, 45, 90, 135, 180, 225, 270 and 315°) in the horizontal plane (see Fig. 1). In the MEG experiment, the stimuli were played with their direction angle randomized. Stimulus intensity was scaled by adjusting the sound sample from 0° azimuth to 75 dB SPL. Binaural loudness of stimuli from all directions was equalized using the loudness model [18].

The EMFs elicited by auditory stimuli were recorded (passband 0.03–100 Hz, sampling rate 400 Hz) with a 122-channel whole-head magnetometer (Neuromag-122), which measures the gradients ∂Bz/∂x and ∂Bz/∂y of the magnetic field component Bz at 61 locations over the head. The subject, sitting in a reclining chair, concentrated on watching a silent movie and was instructed not to pay attention to the auditory stimuli. Brain activity time-locked to stimulus onset was averaged over a 500 ms post-stimulus period (baseline-corrected with respect to a 100 ms pre-stimulus period) and filtered with a passband of 1–30 Hz. Electrodes monitoring both horizontal (HEOG) and vertical (VEOG) eye movements were used in removing artifacts, defined as activity in excess of 150 µV. Over 100 instances of each stimulus type were presented to each subject.

Data from channel pairs above the temporal lobes with the largest N1m amplitude were analyzed separately for both hemispheres, and the N1m amplitude was determined as a vector sum from these channel pairs. As MEG sensors pick up brain activity maximally directly above the source [19], the channel pair at which the maximum was obtained indicates the approximate location of the underlying source. Statistical analyses (ANOVA) were performed for data obtained by MEG.

In order to determine the perceptual quality of the HRTF stimuli, the subjects were also tested with a behavioural match-to-sample task.
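The artifact rejection, averaging, baseline correction and vector-sum steps of the MEG analysis described above can be sketched as follows. This is a minimal sketch under stated assumptions, not the analysis code used in the study: the function names and the toy epochs are hypothetical, the two input channels stand for the orthogonal gradients ∂Bz/∂x and ∂Bz/∂y measured at one sensor location, the vector sum is taken here to be the Euclidean norm of that pair, and the 1–30 Hz filtering step is omitted.

```python
import math

EOG_THRESHOLD_UV = 150.0  # artifact criterion stated in the text (microvolts)


def is_clean(eog_trace_uv, threshold_uv=EOG_THRESHOLD_UV):
    """True if the EOG trace never exceeds the threshold in absolute value."""
    return max(abs(v) for v in eog_trace_uv) <= threshold_uv


def average_epochs(epochs):
    """Sample-wise mean across equally long, stimulus-locked epochs."""
    n_epochs = len(epochs)
    return [sum(samples) / n_epochs for samples in zip(*epochs)]


def baseline_correct(evoked, n_baseline):
    """Subtract the mean of the first n_baseline (pre-stimulus) samples."""
    baseline = sum(evoked[:n_baseline]) / n_baseline
    return [v - baseline for v in evoked]


def vector_sum(grad_x, grad_y):
    """Euclidean norm of an orthogonal gradiometer pair, sample by sample."""
    return [math.hypot(gx, gy) for gx, gy in zip(grad_x, grad_y)]


# Toy data (hypothetical): two epochs of three samples per channel, with
# the first two samples as baseline. At the 400 Hz sampling rate given in
# the text, a 100 ms baseline would instead span 40 samples.
epochs_x = [[1.0, 1.0, 4.0], [1.0, 1.0, 6.0]]
epochs_y = [[2.0, 2.0, 5.0], [2.0, 2.0, 5.0]]

evoked_x = baseline_correct(average_epochs(epochs_x), n_baseline=2)
evoked_y = baseline_correct(average_epochs(epochs_y), n_baseline=2)
amplitude = vector_sum(evoked_x, evoked_y)
n1m_peak = max(amplitude)  # peak of the vector-sum waveform
```

In a full analysis, `is_clean` would be applied to the EOG trace of each epoch before averaging, and the peak would be searched only within a latency window around the N1m; that window is left out here because the text does not specify one.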
The eight stimuli were first played in a clockwise sequence with the direction angle of the first sound randomized. Then a test sample from a random direction was played three times, after which the subject indicated the direction angle of this test sample by a button press. Hit rates were calculated for each angle.

Fig. 1. N1m responses of both hemispheres (grand-averaged over 10 subjects) from the sensor maximally detecting N1m activity shown for each of the eight direction angles. MEG measurements were conducted by using spatial sounds corresponding to eight different source locations as stimuli. The stimulus set comprised HRTF-filtered noise bursts whose direction angle was varied between 0 and 315° in the azimuthal plane. The obtained magnetic responses were quantified by using the channels with the largest N1m amplitude over the left and the right hemisphere. (Panels: left hemisphere N1m responses and right hemisphere N1m responses, each at 0°, 45°, 90°, 135°, 180°, 225°, 270° and 315°; time axis from −100 to 500 ms; amplitude axis from −40 to 40 fT/cm.)

RESULTS

The stimulus set used in the present experiment approximated the horizontal auditory plane well, with the behavioural match-to-sample task indicating that the samples were localized correctly with 67% accuracy. The probability of correctly performed localization increased to 86% when errors within the so-called cones of confusion [7] (i.e. between angles 0° and 180°, 45° and 135°, 225° and 315°) were ignored. These cones are regions in auditory space within which behavioural discrimination of the direction between sound sources is weak due to ITD and ILD varying only slightly because of the asymmetry of the head.

In the MEG recordings, all eight stimuli elicited prominent N1m responses, as can be seen in the grand-averaged responses of Fig. 1.
Both hemispheres exhibited tuning to the direction angle, with a contralateral maximum (direction angles 90° and 270° for the left and right hemisphere, respectively) and an ipsilateral minimum (direction angles 270° and 90° for the left and right hemisphere, respectively) in the amplitude of the N1m. The amplitude variation as a function of azimuthal angle was statistically significant over both the left (F(1,7) = 3.09, p < 0.01) and the right (F(1,7) = 7.74, p < 0.001) hemisphere. Fig. 2 shows the grand-averaged N1m amplitude over the eight stimulation conditions.

The results indicate a right-hemispheric preponderance in the processing of the HRTF-filtered noise stimuli: the N1m responses were considerably larger in amplitude over the right than over the left hemisphere. The mean N1m amplitude across the eight direction angles was 39.1 fT/cm in the right and 24.5 fT/cm in the left hemisphere (F(1,9) = 17.76, p < 0.01). Further, the N1m amplitude variation (i.e. the difference between the maximum and minimum across azimuthal angle) was larger in the right (28.6 fT/cm) than in the left (14.8 fT/cm) hemisphere.

DISCUSSION

The present results demonstrate that it is possible to extend non-invasive MEG research to studies of cortical processes in complex auditory environments by using HRTF-based stimuli. Our results show that both cortical hemispheres are sensitive to the location of sound in three-dimensional sound space. The amplitude of the N1m exhibited tuning to the sound direction angle in both hemispheres, with response maxima and minima occurring for contralateral and ipsilateral stimuli, respectively. This contralateral preponderance of the amplitude of the N1m response is not surprising, given that connections between the ear and cortical hemisphere are crossed [20] and that previous brain research has demonstrated contralaterally larger auditory evoked electric [21] and magnetic [22–24] responses.
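The size of the hemispheric asymmetry follows directly from the amplitudes reported in the Results. The short calculation below uses only those four reported values; the variable and function names are illustrative, and the ratios are derived here rather than quoted from the study.

```python
# Grand-averaged N1m values reported in the Results (fT/cm).
mean_amplitude = {"right": 39.1, "left": 24.5}
# Difference between maximum and minimum amplitude across azimuthal angle.
amplitude_range = {"right": 28.6, "left": 14.8}


def right_to_left_ratio(values):
    """Ratio of the right-hemisphere value to the left-hemisphere value."""
    return values["right"] / values["left"]


mean_ratio = right_to_left_ratio(mean_amplitude)
range_ratio = right_to_left_ratio(amplitude_range)

print(f"mean amplitude, right/left:  {mean_ratio:.2f}")   # 1.60
print(f"amplitude range, right/left: {range_ratio:.2f}")  # 1.93
```

On these figures the right-hemisphere mean amplitude is about 1.6 times the left-hemisphere mean, and the right-hemisphere variation across angle is about 1.9 times that of the left.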
The present results also seem to suggest a degree of right-hemispheric specialization in the processing of auditory space. Firstly, the N1m of the right hemisphere was, on average, of considerably larger magnitude than that of the left hemisphere. Secondly, the right hemisphere appeared to be more sensitive to changes in the direction angle, with the variation of the amplitude of the N1m across direction angle being almost twice as large in the right hemisphere as in the left.

Previous behavioural studies have shown that natural-sounding spatial auditory environments can be created by using non-individualized HRTFs [25]. This is supported by our behavioural test, which showed that the HRTF stimuli were localized relatively well by the subjects in all eight directions. Hence, even non-individualized HRTFs provide sufficiently realistic spatial sound conditions for MEG measurement purposes. Sound localization is known to be easy for sound sources of wide frequency bandwidth [7]. Therefore, noise bursts with a frequency band of 11 kHz were used in the present study. This is in contrast to several previous experiments applying sinusoidal stimuli [12,22–24], which contain poor spectral cues for sound localization. Our future aim is to analyse the behaviour of the N1m in sound localization using different types of stimuli (e.g. periodic signals and speech, as well as sounds generated with individualized HRTFs).

Fig. 2. The N1m amplitude (grand-averaged over 10 subjects) calculated as the vector sum from the channel pair maximally detecting N1m activity over the left and right hemisphere. The behaviour of the N1m amplitude reveals contralateral and right-hemispheric preponderance of brain activity elicited by stimuli from the different direction angles (error bars indicate s.e.m.). (Panels: left hemisphere N1m amplitude and right hemisphere N1m amplitude; x-axis: angle (deg), 0 to 315 in steps of 45; y-axis: N1m amplitude (fT/cm).)

CONCLUSION

Auditory localization in the human cerebral cortex as indexed by the N1m response was studied with MEG measurements using HRTF-filtered wideband noise bursts as stimuli. The amplitude behaviour of the N1m revealed that spatial stimuli elicited predominantly contralateral activity in the auditory cortex. Moreover, the N1m amplitude, as well as its variation across direction angle of stimulation, was larger in the right than in the left hemisphere, which suggests right-hemispheric specificity in the cortical processing of spatial auditory information.

REFERENCES

1. Romani GL, Williamson SJ and Kaufman L. Science 216, 1339–1340 (1982).
2. Bertrand O, Perrin F, Echallier J et al. Topography and model analysis of auditory evoked potentials: tonotopic aspects. In: Pfurtscheller G and Lopes da Silva FH, eds. Functional Brain Imaging. Toronto: Hans Huber Publishers; 1988, pp. 75–82.
3. Pantev C, Hoke M, Lehnertz K et al. Electroencephalogr Clin Neurophysiol 69, 160–170 (1988).
4. Pantev C, Hoke M, Lehnertz K et al. Electroencephalogr Clin Neurophysiol 72, 225–231 (1989).
5. Schreiner CE. Curr Opin Neurobiol 2, 516–521 (1992).
6. He J, Hashikawa T, Ojima H et al. J Neurosci 17, 2615–2625 (1997).
7. Blauert J. Spatial Hearing. Cambridge: MIT Press; 1997.
8. Kuwada S and Yin TCT. Physiological studies of directional hearing. In: Yost WA and Gourevitch G, eds. Directional Hearing. New York: Springer-Verlag; 1987, 146–176.
9. Brugge JF, Reale RA and Hind JE. J Neurosci 16, 4420–4437 (1996).
10. Näätänen R and Picton T. Psychophysiology 24, 375–425 (1987).
11. McEvoy L, Hari R, Imada T et al. Hear Res 67, 98–109 (1993).
12. Paavilainen P, Karlsson M-L, Reinikainen K et al. Electroencephalogr Clin Neurophysiol 73, 129–141 (1989).
13. Butler RA. Neuropsychol 10, 219–225 (1972).
14. Teder-Sälejärvi W and Hillyard SA. Percept Psychophys 60, 1228–1242 (1998).
15. Wightman FL and Kistler DJ. J Acoust Soc Am 85, 858–878 (1989). See also http://www.waisman.wisc.edu/hdrl/index.html
16. Weeks RA, Aziz-Sultan A, Bushara KO et al. Neurosci Lett 262, 155–158 (1999).
17. Bushara KO, Weeks RA, Ishii K et al. Nature Neurosci 2, 759–766 (1999).
18. Moore BCJ and Glasberg BR. Acta Acoustica 82, 335–345 (1996).
19. Knuutila JET, Ahonen AI, Hämäläinen MS et al. IEEE Trans Magn 29, 3315–3320 (1993).
20. Aitkin LM. The Auditory Cortex: Structural and Functional Bases of Auditory Perception. London: Chapman and Hall, 1990.
21. Wolpaw JR and Penry JK. Electroencephalogr Clin Neurophysiol 43, 99–102 (1977).
22. Reite M, Zimmerman JT and Zimmerman JE. Electroencephalogr Clin Neurophysiol 51, 388–392 (1981).
23. Pantev C, Lutkenhoner B, Hoke M et al. Audiology 25, 54–61 (1986).
24. Pantev C, Ross B, Berg P et al. Audiol Neurootol 3, 183–190 (1998).
25. Wenzel EM, Arruda M, Kistler DJ et al. J Acoust Soc Am 94, 111–123 (1993).