The Long-Term Evaluation of Fisherman in a Partial-Attention Environment


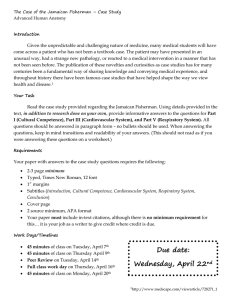

Xiaobin Shen, University of Melbourne, xrshen@unimelb.edu.au
Andrew Vande Moere, University of Sydney, andrew@arch.usyd.edu.au
Peter Eades, National ICT Australia and University of Sydney, peter@it.usyd.edu.au
Seokhee Hong, University of Sydney, seokhee.hong@usyd.edu.au

ABSTRACT

Ambient display is a specific subfield of information visualization that uses only partial visual and cognitive attention of its users. Conducting an evaluation while drawing only partial user attention is a challenging problem. Many standard information visualization evaluation methods, which assume full attention, may not suit the evaluation of ambient displays. Inspired by concepts in the social and behavioral sciences, we categorize the evaluation of ambient displays into two methodologies: intrusive and non-intrusive. The major difference between these two approaches is the level of user involvement, as an intrusive evaluation requires higher user involvement than a non-intrusive evaluation. Based on our long-term (5 months) non-intrusive evaluation of Fisherman presented in [16], this paper provides a detailed discussion of the actual technical and experimental setup for unobtrusively measuring user gaze over a long period by using a face-tracking camera and IR sensors. In addition, this paper demonstrates a solution to the ethical problem of using video cameras to collect data in a semi-public place. Finally, a quantitative measure of “interest” is proposed, and three remarks are drawn from it.

Keywords

Ambient displays, intrusive evaluation, information visualization, human computer interaction

Permission to make digital or hard copies of all or part of this work for personal or classroom use is granted without fee provided that copies are not made or distributed for profit or commercial advantage and that copies bear this notice and the full citation on the first page. To copy otherwise, or republish, to post on servers or to redistribute to lists, requires prior specific permission and/or a fee. BELIV’08, April 5, 2008, Florence, Italy. Copyright 2008 ACM 978-1-60558-016-6/08/0004...$5.00.

1. INTRODUCTION

Ambient displays to some extent originate from the ubiquitous computing ideal, which was first proposed by Weiser [18]. He stated that “the most profound technologies are those that disappear. They weave themselves into the fabric of everyday life until they are indistinguishable from it”. Currently, many terms describe work in this general research direction, such as disappearing computing [3], tangible computing [8], pervasive computing [6], peripheral display [11], ambient display [10], informative art [4], notification system [12] or ambient information system [14]. The qualitative differences between some of these terms are not immediately obvious, although some subtle disparities might exist. In this paper, we use the term “ambient display” generically for the subfield in visualization research that conveys information through the periphery of user attention.

This paper treats ambient display as a specific type of information visualization characterized by two design principles: attention and aesthetics. Information visualization demands full attention (i.e. users explore, zoom and select information mainly in the primary focus of their attention [18]), while ambient display only requires partial attention, so that human attention can also be committed to other tasks at hand. In addition, aesthetics is a secondary consideration in the design of most information visualization applications (versus the focus on effectiveness and functional design [9]). By contrast, aesthetics is a key issue in the development of ambient displays, whose aims are to be visually unobtrusive in the architectural space, to draw user interest by way of curiosity and ambiguity, and to encourage comprehension by providing a positive user experience.

Several ambient display applications [8, 11, 12, 15, 2] have already been designed and developed, but relatively little progress has been made in determining appropriate evaluation strategies. As effective evaluation methods aim to measure and improve a display’s performance, we believe that further research in evaluation methodologies should be a priority for researchers. In particular, it is still an open question whether the user interest in and comprehensibility of an ambient display alter over time, especially when its initial novelty effect wears off.

Following on from our previous paper presentation [16], this paper aims to address more technical and practical aspects related to the question “How can we conduct an evaluation of an information display system for partial user attention?” More specifically, we further discuss the differences between intrusive and non-intrusive evaluation from the participants’ points of view; we detail the actual experimental setup used to collect meaningful usage data with a face-tracking camera and IR sensors; we present a solution to the ethical problem of a video camera intruding on personal privacy; and we draw new conclusions based on these new results.

2. INTRUSIVE AND NON-INTRUSIVE EVALUATION

Our previous paper [16] proposed two evaluation styles: intrusive and non-intrusive evaluation. In an intrusive evaluation the user’s normal behavior is consciously “disrupted” by the evaluation experiment, whereas in a non-intrusive evaluation it is not. A brief discussion of the differences from the participants’ points of view follows.

An intrusive evaluation method is determined by a predefined number of participants in each experimental setup, while because of its unobtrusive and real-world setting, a non-intrusive evaluation cannot predict the size of the participant cohort.
Many intrusive evaluation experiments only require a small number of participants to reveal significant results. For a non-intrusive evaluation, a larger pool of participants is typically required; in our case we consider all the potential participants passing by an ambient display in a semi-public environment. We believe a well-designed long-term non-intrusive evaluation should have enough participants to reveal significant results, in comparison to intrusive evaluation.

Furthermore, for an intrusive evaluation it may be difficult to choose participants with various backgrounds, while a non-intrusive study is less limited in this respect. This is because a non-intrusive evaluation counts all users passing by the display as potential evaluation subjects. In some circumstances this can lead to a broad variety of participants from various backgrounds, which can improve the validity of experimental results.

A non-intrusive evaluation aims to draw less user awareness than an intrusive evaluation. This leads to important privacy issues for non-intrusive evaluations; these issues are quite different from the ethical issues in intrusive studies.

Finally, an intrusive evaluation normally results in a higher cognitive load for the participant, due to the required high level of user involvement, high short-term memory load and typical psycho-physiological stress. This can affect the validity of results [1]. In contrast, a non-intrusive evaluation can potentially have better results because of its lower cognitive load, due to participants not consciously knowing that they are being observed.

3. NON-INTRUSIVE EVALUATION OF FISHERMAN

In this section, a detailed discussion of the non-intrusive evaluation of Fisherman is introduced, partly based on our previous paper [16]. A quantitative measure of “interest” is proposed by combining the total number of participants passing by the display and the total number of participants looking at the display (we assume that the more interest the display holds, the more participants will look at it). Some significant remarks are made in this section.

The general aim of this work is to discover the relationship between comprehension of and “interest” in the ambient display over time. We hypothesize that the comprehension of and subject interest in Fisherman increase with time, on the basis that participants do not keep up their interest in the display unless they understand it.

3.1 The experiment

The actual experimental environment and detailed settings are shown in Figure 1. Specifically, the display was located in a purpose-built frame, which also enclosed an infrared sensor and a video camera¹. The Fisherman metaphor was described on an A4-size sheet of paper on the frame, for all passers-by to see (see Figure 1).

Here, we used a camera and an IR sensor to collect data on user gaze events. The frame was placed in a semi-public area in a research institute. As the actual users of the display are mainly academic researchers, many have some knowledge of the general idea of ambient displays, although none are expert. The study lasted five months, from September 2005 to January 2006. One should note that every single person passing by Fisherman was a subject of this experiment, as it was based on the assumption that an effective evaluation study of a publicly accessible ambient display should be derived from actual “use” of the display in a real-life environment.

¹ Since the system included a sensor and camera in a semi-public place, legal opinion was obtained to ensure that the system complied with privacy legislation.

Figure 1. Implementation of Fisherman

Three questionnaires were scheduled within the five-month time span. The first questionnaire was scheduled in the middle of the second month (17-19 Oct., 2005); the second was scheduled at the end of the fourth month (19-20 Dec., 2005); and the last was scheduled at the end of the last month (26-27 Jan., 2006). About 30 researchers and students regularly passed by Fisherman to enter or leave their offices. Participants for each questionnaire were randomly chosen from this group of frequent users. Participants were not allowed to look at the display while answering questions.

Each questionnaire was specifically designed to measure three attributes: the comprehension, usefulness and aesthetics of Fisherman.

Our comprehension questions [16] were:
CQ1: Does Fisherman convey any information?
CQ2: How many types of information are represented in Fisherman?
CQ3: What kind of information does Fisherman represent?
CQ4: Have you ever noticed changes in Fisherman?

The usefulness questions were:
UQ1: Is Fisherman useful to you?
UQ2: Why?

The aesthetic questions were:
AQ1: Do you think Fisherman is visually appealing?
AQ2: If possible, would you put Fisherman in your home/office?
AQ3: Why?

Because of the unobtrusive setting in an everyday environment, privacy issues become very important. Australia, the state of New South Wales, and local authorities have a number of relevant laws (see, for example, [5]). Further, institutional and professional guidelines need to be respected. It is clear from the legislation that all cameras mounted inside a building can only be used for security purposes, while 11 additional principles are listed to guide actual video capture, recording, access and storage.

To resolve these ethics issues, we used a modified face detection and recognition program based on Intel OpenCV [7], so that our system only recorded the number of faces (versus storing the actual image or video files). The resulting log file was saved to the hard disk every day of the experiment.
Each file only lists two pieces of information: the face detection time and the number of faces detected. This modified OpenCV program ran continuously for 5 months, with regular weekly checks to ensure accuracy. Furthermore, to meet New South Wales state government legislation, a paper notice announcing a continually running web camera was mounted on the frame (see Figure 1).

The function of the camera and IR sensor is as follows:

• The Intel OpenCV face detection program (camera) was used to discover whether subjects that passed by looked at Fisherman or not. The face detection program only identified human faces when subjects looked at the display.

• The Intel OpenCV face recognition program (camera) was used to determine how many different subject faces looked at our display within one day.

• The IR sensor was used to count how many subjects passed by Fisherman.

The purpose of using an IR sensor was to have a more accurate count of the number of subjects passing; this count is more accurate than that given by the face detection program. The IR sensor had a parallel interface (25-pin), which connected to the local PC. A small script written in C++ counted the number of subjects passing by the display within one day and saved the count as an individual file daily.

Two threshold settings of the face detection program were used:

• A participant is only counted if he/she stays in front of our display for more than 10 seconds.

• A participant is counted as making a second visit if he/she has left the display for more than 1 minute.

Also, to meet the ethical requirements, the subjects’ faces were not pre-recorded in a database. The face recognition only distinguishes between different subjects, instead of attempting to recognize the identity of each face.

We did a pilot study that indicated that the performance of the face recognition depended on local environmental conditions (e.g. lighting conditions, camera pose angle, or even different image resolutions). This is a well-known practical problem for face recognition, and it is expected that current research will improve things considerably. In this paper, we simply use the Intel OpenCV face detection program as a tool to collect data; we expect that similar experiments in the future will have more accurate data.

To make a rough estimate of the error rate of the face detection program, its results were calibrated against the IR sensor data. For example, if the face detection program reported a participant passing by the display at 10:30am on 18 Oct., 2005, but there is no corresponding record from the IR sensor, we treat this as an error made by the face detection program. Our pilot study shows that the error rate of the face detection program is about 30% and that the errors occur consistently over time. This error rate sounds high, but it meets our experimental requirements.

3.2 Results

Three parameters were analyzed in the non-intrusive evaluation of Fisherman:

• The Mean Comprehension Rate (MCR), based on the answers from the comprehension questionnaire (CQ1-CQ4). A higher MCR indicates better understanding of the display.

• The Total number of Subjects Passing by Fisherman (TSP) in one day, measured using the IR sensor.

• The Total number of Subjects Looking at Fisherman (TSL) in one day, measured by the face detection system.

It is clear that TSL ≤ TSP, as TSP also counts subjects passing by Fisherman without looking at the display. In this paper, we propose a quantitative measure of “interest”, defined as follows:

$$ES = \frac{TSL}{TSP} \qquad (1)$$

We hypothesize that the more interest an ambient display can attract, the more participants will be disrupted in their primary tasks, have a look, and engage in the secondary task.

[Figure 2. Total number of participants passing by Fisherman. Vertical axis: total number of subjects passing (0 to 3000); horizontal axis: Sep. 05 to Jan. 06, with one bar per week (Week 1 to Week 4).]

The total number of participants passing by Fisherman within the 5 months was about 28,388. The average number of participants passing by was approximately 5678 per month and 1419 per week. The total number of participants passing by Fisherman in each month is shown in Figure 2.

The total number of participants who looked at the display within a week is shown in Figure 3. The peak number of visits occurred at the beginning of the study. This may be because of the novelty effect, which is a strong factor in drawing the attention of passers-by. Figure 3 also shows that the number of visits decreased dramatically after two weeks and stabilized after about four weeks.

[Figure 3. Total number of subjects who looked at Fisherman. Vertical axis: total number of subjects who looked (0 to 1000); horizontal axis: Sep. 05 to Jan. 06, with one bar per week (Week 1 to Week 4).]

Furthermore, we discovered that the largest number of visits occurred in three separate time periods (see Figure 4): early morning (8:50AM to 10:00AM), lunch time (12:00PM to 1:45PM) and late afternoon (4:50PM to 5:45PM). This reflects arrival at work, lunchtime, and leaving work. Note that subjects seem to have more time at lunchtime than in the morning and afternoon “rush” hours.

Figure 4. The number of visits in November 2005.

Figure 5 shows user performance with respect to comprehension of the display across the three questionnaires. The results in Figure 5 show that the Mean Comprehension Rate (MCR) for each question of the questionnaire increases with time. This result supports our hypothesis that the comprehension of an ambient display such as Fisherman increases over time.

Table 1 shows the mean value of “interest” with its standard deviation for each week (the first value in each cell is the mean value of “interest”; the second value is the standard deviation). From Table 1, it seems that interest decreases at the beginning and then starts to stabilize. This can be explained by the novelty effect: many participants were initially interested enough to take a look. Over time, some participants lost interest. At the same time, some participants appreciated Fisherman so much that they went to check the display a couple of times a day.

| | Week 1 | Week 2 | Week 3 | Week 4 |
|---|---|---|---|---|
| Sep. 05 | 34.8% / 0.1 | 32.9% / 0.2 | 16.9% / 0.12 | 16.7% / 0.1 |
| Oct. 05 | 8.4% / 0.03 | 9.0% / 0.04 | 8.1% / 0.03 | 7.2% / 0.01 |
| Nov. 05 | 7.4% / 0.03 | 6.1% / 0.02 | 5.7% / 0.03 | 5.3% / 0.02 |
| Dec. 05 | 4.3% / 0.02 | 4.7% / 0.01 | 4.1% / 0.01 | Holiday |
| Jan. 06 | Holiday | 4.1% / 0.01 | 3.9% / 0.01 | 4.1% / 0.01 |

Table 1. Mean value of “interest” in each week

Finally, our aesthetic judgment measurement achieved very good results. Table 2 shows the results of aesthetics questions 1 and 2.

| | 1st test | 2nd test | 3rd test |
|---|---|---|---|
| AQ1 | 100% | 100% | 100% |
| AQ2 | 56% | 70% | 74% |

Table 2. Results of aesthetics questions

Table 2 shows that 100% of participants found Fisherman visually appealing in all three tests (AQ1: Do you think Fisherman is visually appealing?). Table 2 also shows that the percentage of participants who would want Fisherman in their home or office increased over time (AQ2: If possible, would you put Fisherman in your home/office?).

3.3 Remarks

The results of the evaluation study of Fisherman show that “interest” in Fisherman peaked at the beginning of the experiment and stabilized after one month. This can be partially explained by the novelty of the display, which easily drew the interest of passers-by at first, although many later stopped visiting Fisherman for various reasons. Our post-questionnaire and informal feedback suggest two possible reasons for this observation:

• The data source does not interest users.

• Lack of reference in the visual metaphor. For example, a typical comment from a subject was: “I notice the color, the number of trees and the position of the boat changing but I can’t get precise information from this change.
Also I can’t tell the difference between small percentages of change in these three metaphors. There is a lack of reference for the difference between heaviest and heavier fog.”

In addition, by combining comprehension and “interest” in a further analysis, we conclude that user comprehension increases over time, and that some participants’ interest in Fisherman stabilized after a period of time. This leads us to the following new conclusion:

Remark 1. An effective ambient display can be understood over time, but should also retain its interest over time.

Figure 5. Results of Mean Comprehension Rate

A question in many evaluations is: “When should an evaluation study be conducted?” Our case study shows that to measure the true value of an ambient display such as Fisherman, one should wait until the value of “interest” stabilizes over time. This is because a stable “interest” value means that the display itself has integrated into the environment and will not draw unusual attention from users. As a result, testing an ambient display immediately after installation might significantly skew the results due to the novelty effect.

Remark 2. A non-intrusive evaluation should not be conducted until the display has integrated into the environment and the value of “interest” has sufficiently stabilized.

After conducting this experiment, we also found that the boundary between intrusive and non-intrusive evaluation methodologies is not necessarily well defined. Our previously described categorization is closer to the extreme endpoints of a continuous range than to separate buckets. Thus, it is possible that an experiment planned as a non-intrusive evaluation becomes intrusive in some way. For example, our questionnaire interviews can result in unintended attention being paid to the Fisherman experiment itself, potentially even influencing the results or renewing interest in the display.

The major difference between these two evaluation methodologies is the level of user involvement, with intrusive evaluation having higher user involvement than non-intrusive evaluation. Intrusive evaluation seems to be ideal for the quantitative measurement of parameters. As commonly applied intrusive evaluations are task-oriented and often occur in well-controlled laboratory environments, most existing evaluation methods in information visualization fall into the intrusive evaluation category. In contrast, a non-intrusive evaluation relies on tracing users by video/image processing or alternative, unobtrusive sensors to collect more candid results (requiring less or no user interruption). Many of these techniques are still under development (e.g. it is still difficult to robustly distinguish two different faces under varying environmental conditions).

Remark 3. Non-intrusive evaluation is a better way to evaluate ambient displays, but it may be limited by current sensor technologies and important privacy concerns.

4. CONCLUSION

This paper focused on how to conduct a long-term ambient display evaluation study without requiring focused user attention. Firstly, it briefly discussed the difference between intrusive and non-intrusive evaluations, which was proposed in our previous paper [16]. Secondly, a non-intrusive evaluation case study was presented and its technical implementation was described in detail. In this case study, a quantitative measure of “interest” was proposed to quantify the impact of Fisherman. The results show that user comprehension increases over time, while user interest decreases. Finally, three remarks are drawn based on our new results.

This work is still in progress. Our future plans include further experiments to gain experience in both intrusive and non-intrusive evaluation studies.

5. REFERENCES

[1] Chalmer, A.P., The role of cognitive theory in human-computer interface, Computers in Human Behavior, 2003. 19: 593-607.

[2] Cleveland, W.S. et al., Graphical perception: Theory, experimentation, and application to the development of graphical methods. Journal of the American Statistical Association, 1984. 79(387), 531-546.

[3] Disappearing Computer. Available at: http://www.disappearing-computer.net, accessed on 23 Oct., 2007.

[4] Future Application Lab. Available at: http://www.viktoria.se/fal/, accessed on 23 Oct., 2007.

[5] Human Resource Committee, National statement on ethical conduct in research involving humans: Part 18-Privacy of information, http://www.nhmrc.gov.au/publications/humans/part18.htm, accessed on 23 Oct., 2007.

[6] IBM Pervasive Computing. Available at http://wireless.ibm.com/pvc/cy/, accessed on 23 Oct., 2007.

[7] Intel Open CV. Available at http://www.intel.com/research/, accessed on 23 Oct., 2007.

[8] Ishii, H. et al. Tangible bits: towards seamless interfaces between people, bits and atoms, in Proceedings of CHI’97 (Atlanta, USA), ACM Press, 234-241.

[9] Lau, A., et al. Towards a model of information aesthetics in information visualization. In Proceedings of the 11th international conference information visualization 2007. 87-92.

[10] Mankoff, J., et al., Heuristic evaluation of ambient displays, in Proceedings of CHI’03, ACM Press, 169-176.

[11] Matthews, T., et al., A toolkit for managing user attention in peripheral displays, in Proceedings of UIST’04, p. 247-256.

[12] McCrickard, D.S., et al., A model for notification systems evaluation--Assessing user goals for multitasking activity, ACM Transactions on Computer-Human Interaction, ACM Press, 10(4), 312-338.

[13] Peters, B., Remote testing versus lab testing, http://boltpeters.com/articles/versus.html, accessed on 23 Oct., 2007.

[14] Pousman, Z. et al., A taxonomy of ambient information systems: Four patterns of design. In Proceedings of the AVI’06, ACM Press, 67-74.

[15] Shami et al., Context of use evaluation of peripheral displays. In Proceedings of the INTERACT’05. Springer, 579-587.
[16] Shen, X., et al. Intrusive and Non-intrusive Evaluation of Ambient Displays, in workshop at Pervasive’07: Design and Evaluating Information Systems, 2007. p. 30-36.

[17] Somervell, J., et al. An evaluation of information visualization in attention-limited environments. In Proceedings of the symposium on Data Visualisation 2002. 2002: Barcelona, Spain.

[18] Weiser, M. The computer for the 21st century. Scientific American, 1991. 265(3), 66-75.
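
Supplementary sketch. As an illustration of the “interest” measure from Section 3.2, ES = TSL/TSP, the following minimal Python sketch computes ES for each day and then a weekly mean and standard deviation in the style of Table 1. It is not the authors' implementation. The function names, the choice to average daily ratios, the use of the population standard deviation, and the sample counts are all assumptions made here for illustration; the paper does not specify them.

```python
from statistics import mean, pstdev


def interest(tsl: int, tsp: int) -> float:
    """Return ES = TSL / TSP for one day.

    tsl: subjects who looked at the display (face detection count).
    tsp: subjects who passed the display (IR sensor count).
    """
    if tsp <= 0:
        raise ValueError("TSP must be positive")
    if not 0 <= tsl <= tsp:
        # The paper notes TSL <= TSP; a violation suggests a counting error.
        raise ValueError("expected 0 <= TSL <= TSP")
    return tsl / tsp


def weekly_interest(daily_counts):
    """Return (mean ES, standard deviation of ES) over one week.

    daily_counts: iterable of (tsl, tsp) pairs, one per day.
    Assumption: the weekly value is the mean of the daily ratios, and the
    spread is the population standard deviation.
    """
    values = [interest(tsl, tsp) for tsl, tsp in daily_counts]
    return mean(values), pstdev(values)


# Hypothetical daily counts for one working week (not data from the study).
week = [(30, 100), (20, 100), (10, 100), (20, 100), (20, 100)]
week_mean, week_std = weekly_interest(week)
print(f"{week_mean:.1%} / {week_std:.2f}")
```

Validating TSL ≤ TSP inside `interest` is a design choice for this sketch: since the two counts come from different sensors, a day on which the camera reports more subjects than the IR sensor would otherwise silently produce a ratio above 1.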