Computational

North-Holland

Statistics

& Data Analysis

1.5 (1993) l-11

Bartlett correction factors

in logistic regression models

Lawrence

H. Moulton

*

University of Michigan, Ann Arbor, MI, USA

Lisa A. Weissfeld

University of Pittsburgh, Pittsburgh, PA, USA

Roy T. St. Laurent

**

Unicersity of Michigan, Ann Arbor, MI, USA

Received March 1991

Revised July 1991

Abstract: Bartlett correction

factors for likelihood ratio tests of parameters

in conditional

and

unconditional

logistic regression models are calculated. The resulting tests are compared

to the

Wald, likelihood ratio, and score tests, and a test proposed by Moolgavkar and Venzon in Modern

Statistical Methods in Chronic Disease Epidemiology, (Wiley, New York, 1986).

Keywords: Conditional

logistic regression;

Likelihood

ratio test; Score test; Wald test.

1. Introduction

Epidemiologic

case-control

studies frequently

have small sample sizes due to

limited numbers of cases. It is common in such studies for logistic regression

analyses to be employed, using maximum likelihood estimation

and likelihood

ratio, score, or Wald tests of parameter

effects. However, these procedures

are

only asymptotically

valid, and depend on the accuracy of the large sample

approximations

employed. The Wald test is attractive due to the ease with which

it may be constructed.

However, it is not invariant to parameter transformations.

In addition, as Jennings (1986) illustrates it can exhibit unreliable behavior in

that for a given sample size, parameter

estimates farther from the null value

may be judged less significant than those closer to the null value (see also Hauck

and Donner, 1977).

Correspondence to: Roy St. Laurent, Ph.D., Department

of Biostatistics,

School of Public Health,

University of Michigan, 109 South Observatory,

Ann Arbor, MI 48109-2029, USA.

* Now with the Department

of International

Health at The Johns Hopkins University.

* * Authorship

is randomly ordered.

0167-9473/93/$06.00

0 1993 - Elsevier

Science

Publishers

B.V. All rights reserved

2

L.H. Moulton et al. / Bartlett correction factors

Numerous methods for drawing inferences about the parameters in the

logistic regression model have been developed for use in small sample situations. Cox (1970) has described an exact approach to inference about a single

parameter of the model. This approach has been extended and rendered more

feasible via efficient algorithms for calculating joint and conditional distributions

of the sufficient statistics (Bayer and Cox, 1979; Tritchler, 1984; Kirji, Mehta

and Patel, 1987). However, the method still may take hours of computation, and

current software permits only binary covariates to be included in the regression

model.

Moolgavkar and Venzon (1986) have proposed a small sample correction to

Wald-based confidence regions motivated by differential geometric considerations. These regions, based on transformations of the standard Wald regions,

are obtained by solving a system of quasi-linear differential equations and may

be inverted in the usual fashion to obtain a test of hypothesis.

Another approach to parameter inference is to improve upon the small

sample performance of the likelihood ratio test. Bartlett (1937) proposed multiplying the likelihood ratio test statistic by a correction factor so that its

distribution under the null hypothesis would more closely follow that of a x2

random variable. This approach, generalized by Lawley (1956), consists of

matching the first moment of the test statistic to that of a x2 random variable.

The effect is to bring the moments of the corrected statistic to within O(n-*) of

those of the appropriate chi-squared distribution (Lawley, 1956).

In this paper we give explicit expressions for the Bartlett correction factor in

tests of location parameters in conditional and unconditional logistic regression

models. In addition, we evaluate the performance of the resulting corrected

likelihood ratio test statistic and compare its performance to the usual likelihood ratio, Wald, and score test statistics as well as the Moolgavkar-Venzon

modified Wald procedure.

2. Calculation

of Bartlett correction

factors

2.1. Introduction

Let L(p) denote the log lik_elihood function for the p-vector p based on II

observations, and let L and L, denote the value of the log likelihood evaluated

at the true parameter vector /3, and the maximum likelihood estimate a,

respectively. Lawley (1956) showed that under mild regularity conditions 2E(L,

-L) =p + lp + O(n-*>, where the correction term cp is of order n-l and may

be written (Cordeiro, 1983)

Ep =

KrSKtU(

$Krst,-

Kg;

+ Kzs"'} -

KrsKIUKuW

3

L.H. Moulton et al. / Bartlett correctionfactors

Here all of the indices run from 1 to p and the usual summation

applied. The K’S are defined in terms of L(p) as

convention

is

and so on, where p, is the rth component of p. In this notation, the information

matrix A = { - K,~) and its inverse is denoted A - ’ = ( -Key).

Suppose that PT = (pr,r,. . . , &,

&,

. . . , &_J

and under the null hypothesis p2,r = * ** = &,_, = 0. Let L, be the maximized log likelihood under the

null hypothesis, and lq be the correction term calculated und_er the null model.

Then dividing the usual likelihood ratio test statistic 2(L, - LJ by the Bartlett

factor 1 + (eP - eq)/(p - q) will improve the approximation of its distribution

by a Xi_, random variable under the null model. Generally the correction

factor will be a function of j3 and should be estimated from the null model via

maximum likelihood.

2.2. Unconditional logistic regression

The unconditional

logistic regression model may be written

i=l

logit P( yi = 1 I xi) =x:/3,

7* * * 94

(1)

where xi is the p-vector of covariates for the ith observation, the yi are

independent Bernoulli random variables, and p is a p-dimensional parameter

vector. The log likelihood is then

L(P) = 2 [ Yi log{&/(l

- Q}

+ lo@

- eJ] 9

(2)

i=l

with

Oi= exp( .rTp)/{ 1 + exp( XT@)},

Cordeiro (1983, equation 81, gives an expression for lp applicable

generalized linear model. Applying his result to (21, we have

Ep = +tr( HZ:) + +I,TF(2Z’3’ + 3Z,ZZ,)Fl,,

to any

(3)

where the n x n matrices F, H, Z, and Z, are given by F = V(l,, - 201,

H= 1/(61/-L,), Z =X(XTVX-lXT,

and Z, is a diagonal matrix composed of

the diagonal elements of Z. Here I/= diag{8,(1 - O,)}and 8 = diag{O,} are n x n

matrices and 1, is an n-vector of 1’s.

2.3. Conditional logistic regression

Case-control epidemiologic studies often employ matching or stratification in

order to increase efficiency and reduce bias. In a regression framework, the

preferred analysis of highly stratified data involves conditioning on stratum

4

L.H. Moulton et al. / Bartlett correction factors

membership

(Breslow et al., 1978). The resulting conditional

is then maximized to yield conditional

maximum likelihood

Consider a case-control

study with K matched sets (or

stratum consists of one case and R controls, yt = K( R + 11,

case in each stratum, so that yOk = 1, and yjk = 0 for j #

and j = 0,. . . , R. We consider the logistic model

likelihood function

estimates.

strata), where each

j = 0 will denote the

0. Here i = 1,. . ., K

logit P( yjk = 1 I xjk) = ak +x$p,

where (Ye depends on the values of the matching variables. Conditioning

1: R structure

of the stratum

leads to elimination

of CX~ resulting

conditional

log likelihood function

L(p) = ;

5

Yjk

lOg(Pjk)

k=l j=O

=

2 l”g(POk)’

on the

in the

(4)

k=l

Here pjk depends upon the parameter vector p through the pair of transformations rjk = exp(xiT,p) and pj, = 5-jk/C5-mk (notation of Pregibon,

1984). Here

xjk is the p-vector of covariates for the jth individual in the kth matched set

and rjk is the corresponding

odds ratio. The model depends upon /3 only

through the odds ratio, therefore

xsp need not include an unknown constant,

as it is not estimable.

The conditional

logistic regression model is not a generalized

linear model,

hence the expressions for the correction

term lp developed by Cordeiro (1983)

cannot be applied to (4).

Since pok + . . . +pRk = 1,

R

Xjk = Xjk -

c

pmkxmk,

m=O

can be thought of as ‘centered’ x’s. The Bartlett adjustment for the conditional

model is a function of the covariates only through the ijk’s. For example, the

p X p information

matrix A, may be written A = { -K,,} = CA,,

where A, =

&Z..~~~_?~~ijTk

=J?~W,~k.

Here the (R + 1) xp matrix of centered

covariates is

and

W,

is

an

(R

+

1)

x

(R

+

1)

diagonal

matrix

with ~~~

x, = t&k,. . . , &IT,

matrix can be

on the diagonal, j = 0,. .., R. It follows that the information

written A =zTIV%,

where W is block diagonal with blocks IV,, . . . , W,, and 2?

is the K(R + 1) xp matrix of centered covariates combined over matched sets.

The correction

term for the one-to-R matched design is

where

Tk = tr(A,A-‘1,

and the (R + 1) X (R + 1) matrix

U = cw,<gk

A,12?z)(2)Wk. In addition, P =_f(J?TW%-l%T

and Pd = diag(pii>, where pii is

the ith diagonal element of P, i = 1,. . . , K(R + 1). Pc3) is the matrix whose

elements are the cube of the elements of P. A derivation of this result can be

found in the Appendix. Note that our calculations

are carried out with respect

5

L.H. Moulton et al. / Bartlett correction factors

to the number of strata, K. The moments of the resulting

statistics thus are corrected to order 0(K2> (Lawley, 1956).

It is informative to write (5) as

Ep =

I-2

4P13

likelihood

ratio

++p;,-+p,,

where p:, = lnTpdTWPVT’dl,, pi, = l~WPC3)Wl,,, and p4 = l~P~WPdl, - 2(1:+,

UIR+,) - CT:. The terms p:,, pt, measure the squared skewness, and p4

measures the kurtosis of the score vector (McCullagh and Cox, 1986).

3. Numerical

comparisons

3.1. Study design

To assess the agreement between the nominal and actual level of the adjusted

likelihood ratio test statistic in unconditional and conditional logistic regression,

and to explore the power of the test, a numerical study was conducted comparing the score, likelihood ratio, and Wald tests to the Bartlett corrected likelihood ratio test when testing a single element of p to be equal to zero. In

addition, these results were compared to the confidence interval based test of

Moolgavkar and Venzon (1986).

For each of several sample sizes iz, B replicates of the basic experiment were

performed. For each replicate, an n-vector x of independent pseudo-random

Uniform (0, 1) deviates was generated. For y1I 10, the exact level and power

were calculated eased on a complete enumeration of the possible 2” response

vectors y, each weighted by its likelihood. Computing restrictions required

simulation of the y vectors from the logistic model when y1> 10. These values

were generated from another set of Uniform (0, 1) deviates. The multiplicativecongruential uniform pseudo-random

generator of the GAUSSTM system was

employed. For each sample size, we report the observed level and power as the

mean rejection rate of the null hypothesis over the B replicates.

3.2. Unconditional logistic regression results

Here we used the model XT@= PO + plxi, and compared the level and power of

the five methods for testing the null hypothesis p1 = 0 at nominal level (Y= 0.05.

For this model with p = 2, the Moolgavkar-Venzon

limits for p require the

simultaneous solution of the following equations:

6

L.H. Moulton et al. / Bartlett correction factors

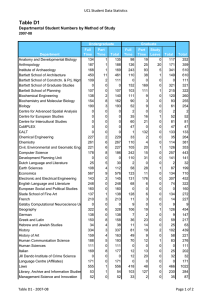

Table 1

Comparison

of average rejection rates for five tests, at nominal level (Y= 0.05, of HO : PI = 0 in an

unconditional

logistic regression model. n = total number of observations.

Columns 3-7, average

rejection rate X 100. Column 8, maximum standard error across the five averages x 100.

Wald

MoolgavkarVenzon

Score

Likelihood

ratio

Bartlett

corrected

Maximum

std. error

PI

n

0

10

20

40

0

3.35

4.56

9.00

7.22

6.15

5.68

5.58

5.31

9.01

6.42

5.73

6.35

5.28

5.14

0.27

0.25

0.15

1

10

20

40

0

4.94

12.52

10.96

12.72

16.22

7.42

9.73

14.31

11.76

11.22

15.37

8.44

9.52

14.21

0.28

1.05

0.70

2

10

20

40

0

6.09

30.24

13.97

24.71

39.45

11.01

15.99

34.77

18.15

19.19

37.41

13.35

16.33

35.25

0.79

3.56

1.45

with p(O) = p^, L(p) and oi defined in (1) and (2). Here Z,,, is the (1 - Lu/2)th

percentage point of the standard normal distribution and sg = - 1, + 1 for the

lower and upper confidence limits respectively. These equations are integrated

from t = 0 to 1. The solution to these equations can be obtained numerically

using the Adams-Gear algorithm (Moolgavkar and Venzon, 1986).

Results are presented for 12= 10, 20, 40, with PO = 0 for all runs. In Table 1

we give the estimates of level, and power for /3r = 1, 2, for each of the five

methods. Table 1 also includes the maximum standard error of the mean over

the five tests, calculated from the B replicates. For y1= 10, B = 25 replicates

were used, while for II = 20 and 40, we took B = 5 replicates of 5000 simulations

each.

We see that the poor performance of the Wald statistic was markedly

improved by the Moolgavkar-Venzon

correction. The score test and the Bartlett

corrected likelihood ratio test both outperform the likelihood ratio test in the

level (pr = 0) runs, the latter being too liberal. Even though the Bartlett

correction factor is designed to improve performance in small samples, at the

smallest sample size (n = 10) it performed slightly worse than the score test.

However, for y1= 20 and 40, rejection rates for the Bartlett corrected likelihood

ratio test were closest to the nominal level.

The likelihood ratio test and Moolgavkar-Venzon

test are both rather liberal,

which translates into greater power, as seen in the & = 1 and & = 2 runs,

where their rejection rates are all greater than those of the score and Bartlett

corrected likelihood ratio test. For these runs, the power for the score and

Bartlett corrected likelihood ratio test are very close.

Considering the relative ease with which the score test may be calculated, and

the similarity between its behavior and that of the Bartlett corrected likelihood

ratio test, based on these results it would appear that the score test is the

preferred method of testing in this situation.

7

L.H. Moulton et al. / Bartlett correction factors

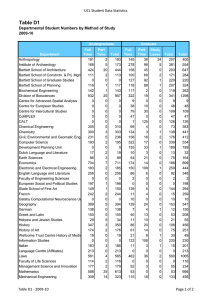

Table 2

Comparison

of average rejection rates for five tests, at nominal level (Y= 0.05, of H,, : p, = 0 in a

conditional

logistic regression model. R = 1, K = number of strata. Columns 3-7, average rejection rate x 100. Column 8, maximum standard error across the five averages X 100.

Pl

0

Wald

K

5

0

6

7

8

9

0

10

20

40

0

MoolgavkarVenzon

Score

Likelihood

ratio

Bartlett

corrected

Maximum

std. error

2.12

3.89

1.00

4.25

6.44

7.63

8.36

8.25

6.97

5.77

1.25

3.00

3.81

3.75

4.06

4.17

4.77

4.82

10.75

9.00

8.19

6.91

6.94

6.71

5.87

5.29

7.50

6.63

6.19

5.44

5.31

5.29

5.13

4.93

0.68

0.64

0.41

0.38

0.32

0.24

0.51

0.14

0

0

0

1

5

6

7

8

9

10

20

40

0

0

0

0

0

0

6.34

21.76

0.96

5.21

8.13

10.23

12.13

12.82

16.23

27.17

1.78

4.33

5.87

6.34

7.08

7.63

12.38

24.71

13.22

11.84

11.48

10.67

11.19

11.34

14.54

25.99

9.43

9.01

8.95

8.70

8.92

9.31

13.14

25.04

0.81

0.77

0.63

0.60

0.44

0.47

1.77

1.44

2

5

6

7

8

9

10

20

40

0

0

0

0

0

0

20.85

65.06

1.16

7.07

12.38

17.60

21.69

24.20

40.18

70.97

3.18

7.96

11.51

13.58

15.51

17.31

33.69

68.38

19.57

19.25

20.16

20.66

22.44

23.64

37.27

69.86

14.45

15.31

16.37

17.54

18.72

20.27

34.95

68.82

1.40

1.40

1.37

1.44

1.19

1.30

6.13

3.20

3.3. Conditional logistic regression results

Here the Moolgavkar-Venzon

related differential

equations.

integrating

confidence

interval is obtained by solving two

For p = 1, the interval endpoints

are found by

from t = 0 to 1, with p(O) = p^ and Tj,(t> = exp{xiT,P(t>}. Numerical

solutions

are obtained using the Adams-Gear

algorithm.

For the conditional

model, the performance

of the five methods was compared in the one-to-one

matched case-control

(R = 1) model with one covariate

via a test of the null hypothesis p, = 0 at nominal level (Y= 0.05.

Results are presented

for K = 5(1)10, 20, 40 matched sets. Table 2 gives the

simulation

estimates of level and power (for p, = 1, 2) for each of the five

8

L.H. Moulton et al. / Bartlett correction factors

methods and the maximum standard error of the mean, across the five tests. For

K I 10, B = 25 replicates were used, while for K = 20 and 40, B = 5 replicates

were run of 5000 simulations each.

In general, the simulation results for the conditional model parallels those of

the unconditional

model. For small sample sizes, the score and Wald tests are

generally too conservative,

while the likelihood ratio test and Bartlett corrected

likelihood

ratio test are too liberal. The Moolgavkar-Venzon

procedure

performs rather erratically (moving from conservative to liberal as K increases) and

poorly overall when compared to most other methods. The rejection rates for

the Bartlett corrected likelihood ratio test, with the exception of the K = 6 run,

are closest to the nominal level.

In the power runs, p, = 1, 2, the Bartlett

corrected

likelihood

ratio test

clearly performed

better than the score test. The Moolgavkar-Venzon

test and

likelihood ratio test exhibited the highest power, but this performance

should be

discounted by their liberal results in the level runs.

4. Discussion

For the conditional

analysis of one-to-one

matched data with a single covariate

(p = l), the Bartlett correction

for the null hypothesis /3i = 0 has a particularly

simple form. Let zjk =xjk -X,k, the stratum mean centered covariate, and let S;

be the variance of xjk within the kth stratum. Then & = & = Tf/K and

skewness and kurtosis

p4 = (f, - 3C*)/K, w h ere q1 and q2 are the standardized

of Zjk (j=O )...) R,k=l,...,

K), respectively,

and C is the coefficient

of

variation of S; (k = 1, . . . , K). Thus pi = (59: - 3q2 + 9C2)/(12K).

In the case of one-to-one

matching (R = 11, q, = 0 and E, may be simplified

to

El

=

1

-

c @Ok_-Xlkj4

2

‘Ok

-

‘lk)*)*

’

where sums in the numerator

and denominator

run over k = 1,. . . , K. This

expression may also be written in terms of deviations xjk -X,k. In either form,

the correction

is essentially a measure of the kurtosis of the distribution

of the

covariates.

If in addition, xjk is a binary covariate, then pi = (2m)-’

where m is the

number of strata in which xok + xlk (0 I m I K), so that the Bartlett factor

becomes

1 + (2m)-‘.

Here the likelihood

ratio test statistic may be written

2m{log2 + r logr + (1 - r) log(1 - r)}, where r is the proportion

of the m strata

for which xok # xlk and xok = 1 (so that 1 - r is the proportion

for which

Xik = 1). Performing

calculations

similar to those of Frydenberg

and Jensen

(1989) for this likelihood, we found the order of the correction in this case to be

less than O(Kp’).

This agrees with their findings that in the case of fully

discrete data, the Bartlett correction

offers little, if any, improvement.

More-

L.H. Moulton et al. / Bartlett correction factors

9

over, for up to at least K = 50 strata, the tail-area probability

of the Bartlett

corrected

likelihood ratio test is the same as for that of the score (McNemar)

test at size (Y= 0.05. This stands in contrast to our results for the continuous

covariate

case (Table 2), where the Bartlett

corrected

likelihood

ratio test

performs better than the score test.

The results of our runs for both the conditional

and unconditional

models

indicate that the computational

burden of obtaining the Moolgavkar-Venzon

interval may not be worth its modest performance

when used for testing. Instead

of trying to improve the Wald test, better results are had by improving the

likelihood ratio test via the relatively simple Bartlett correction.

Many factors may conspire to render even a data set with a large number of

observations

one in which standard large-sample

asymptotics

do not provide

sufficiently accurate approximations.

These include extreme response probabilities, many estimated

parameters

with respect to the number of observations,

sparse corners of the covariate space, and link functions that yield relatively flat

likelihood

functions.

In such situations,

it may be prudent to use a Bartlett

corrected likelihood ratio test. For the logit link case we have studied here, the

score test is also to be preferred

over the Wald and likelihood ratio test.

Appendix: Derivation

of (5)

From the definitions

established:

of pjk and

ijk following

(4), these

identities

are easily

(A *I)

5 /-Q&k = 0,

j=O

a

(A.2)

a

R

rijk=

w

-

C

Pmkxmk’Tmk=

-

m=O

C

P,ki,k’FTmk’

m=O

Taking second partial derivatives

the identities above, gives

of the log likelihood

(A.3)

in (4), and making use of

where ijkr is the rth element of the p-vector +Cjk.As the elements of the second

partial derivative matrix in (A.41 are non-stochastic,

it follows that the right-hand

side of (A.41 is -K,,.

Hence

A=

2

k=l

~~~~~~~~~~

j=O

which also may be written

A = X’JKf.

L.H. Moulton et al. / Bartlett correction factors

From (A.41 it also follows that &) = ~,,t, K:) = ~,,t~,

the expression for the Bartlett correction may be written

Ep =

~K~~K~~K,,~,

-

Kr’KtUKuW{~KrtuKSUW

+

~K,~~K,,~}.

and so on. Therefore

(A.3

Taking partial derivatives of K,~ from (A.41, and employing the identities

(A.lNA.31,

the expression for a mixed third partial derivative of L(p) is

written

a”L(p)

K

K

+

‘St =

a&a&a&

C

k=l

R

C

(A-6)

Pjkijkrijks’j.4t’

j=()

The expression for a mixed fourth partial derivative of L(p) is obtained from

in a similar fashion, yielding

K rSt

K

rStU =

d”L(P)

ap,ap,ap,ap,

= f

5

k=l

-

pjkijkrijksijktijku

j=O

5

k=l

@krsAktu

+AkrtAksu

+AkruAkst),

(A-7)

where Akrs =

Substituting the expressions (A.41, (A.6) and (A.7) into (AS) and employing a

fair amount of matrix algebra inspired by McCullagh (1987, page 2181, and

equation (11) of Cordeiro (19831, we obtain the matrix form for the Bartlett

correction given in (5).

CT=()pjk%jkr%jks.

References

Bartlett, M.S., Properties

of sufficiency and statistical tests, Proceedings of the Royal Statistical

Society A, 160 (1937) 268-282.

Bayer, L., and C. Cox, Algorithm AS142: Exact tests of significance in binary regression models,

Appl. Statist., 28 (1979) 319-324.

Breslow, N.E., N.E. Day, K.T. Halvorsen,

R.L. Prentice and C. Sabai, Estimation

of multiple

relative risk functions

in matched

case-control

studies,

Amer. J. Epidemiol., 108 (1978)

299-307.

Cordeiro, G.M., Improved likelihood ratio statistics for generalized

linear models, J. Roy. Statist.

Sot. B, 45 (1983) 404-413.

Cox, D.R., The Analysis of Binary Data (Methuen,

London, 1970).

Frydenberg,

M., and J.L. Jensen, Is the ‘improved likelihood ratio statistic’ really improved in the

discrete case?, Biometrika, 76 (1989) 655-661.

Hauck, W.W., and A. Donner, Wald’s test as applied to hypotheses in logit analysis, J. Am. Statist.

Assoc., 72 (1977) 851-853.

Jennings, D.E., Judging inference adequacy in logistic regression,

J. Am. Statist. Assoc., 81 (1986)

471-476.

Kirji, K.F., C.R. Mehta and N.R. Patel, Computing distributions

for exact logistic regression,

J.

Amer. Statist. Assoc., 82 (1987) 1110-1117.

L.H. Moulton et al. / Bartlett correction factors

Lawley, D.N., A general method for approximating

to the distribution

11

of likelihood ratio criteria,

Biomettika, 43 (1956) 295-303.

McCullagh, P., Tensor Methods in Statistics (Chapman

and Hall, London, 19871.

McCullagh, P., and D.R. Cox, Invariants and likelihood ratio statistics, Ann. Statist., 14 (1986)

1419-1430.

Moolgavkar, S.H., and D.J. Venzon, Confidence regions for case-control and survival studies with

general relative risk functions, in: S.H. Moolgavkar and R.L. Prentice (Eds.), Modern Statistical

Methods in Chronic Disease Epidemiology (Wiley, New York, 1986).

Pregibon, D., Data analytic methods for matched case-control studies, Biometrics, 40 (1984)

639-651.

Tritchler,

709-711.

D., An algorithm

for exact logistic regression,

J. Amer. Statist. Assoc., 79 (1984)

![School Risk Register [DOCX 19.62KB]](http://s2.studylib.net/store/data/014980461_1-ba10a32430c2d15ad9059905353624b0-300x300.png)