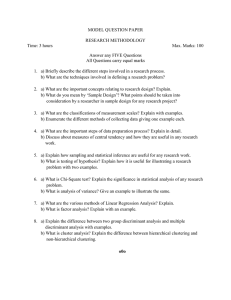

LECTURE 10: Linear Discriminant Analysis


Linear Discriminant Analysis, two classes

Linear Discriminant Analysis, C classes

LDA vs. PCA example

Limitations of LDA

Variants of LDA

Other dimensionality reduction methods

Introduction to Pattern Analysis

Ricardo Gutierrez-Osuna

Texas A&M University


Linear Discriminant Analysis, two-classes (1)

The objective of LDA is to perform dimensionality reduction while preserving as much of the class discriminatory information as possible.

Assume we have a set of D-dimensional samples $\{x^{(1)}, x^{(2)}, \dots, x^{(N)}\}$, $N_1$ of which belong to class $\omega_1$, and $N_2$ to class $\omega_2$. We seek to obtain a scalar $y$ by projecting the samples $x$ onto a line

$$y = w^Tx$$

Of all the possible lines we would like to select the one that maximizes the separability of the scalars.

This is illustrated for the two-dimensional case in the following figures.

[Figures: two panels, each with axes x1 and x2]
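As a minimal sketch of the projection $y = w^Tx$, the NumPy snippet below projects two small classes onto two candidate lines. The data are the two-class samples from this lecture's worked example; the helper `project` and the directions `w_a` and `w_b` are illustrative choices, not part of the original slides.

```python
import numpy as np

# Two-class toy data (the samples used in this lecture's worked example).
X1 = np.array([[4, 1], [2, 4], [2, 3], [3, 6], [4, 4]], dtype=float)
X2 = np.array([[9, 10], [6, 8], [9, 5], [8, 7], [10, 8]], dtype=float)

def project(X, w):
    """Return the scalars y = w^T x for every row x of X."""
    return X @ w

# Hypothetical candidate directions, chosen only for illustration.
w_a = np.array([1.0, 0.0])   # project onto the x1 axis
w_b = np.array([0.0, 1.0])   # project onto the x2 axis

y1_a, y2_a = project(X1, w_a), project(X2, w_a)
y1_b, y2_b = project(X1, w_b), project(X2, w_b)

print("onto w_a:", y1_a, y2_a)
print("onto w_b:", y1_b, y2_b)
```

With these particular samples the projections onto `w_a` do not overlap, while those onto `w_b` do, which is the kind of difference a separability measure has to capture.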

Linear Discriminant Analysis, two-classes (2)

In order to find a good projection vector, we need to define a measure of separation between the projections.

The mean vector of each class in x and y feature space is

$$\mu_i = \frac{1}{N_i}\sum_{x\in\omega_i} x \quad\text{and}\quad \tilde{\mu}_i = \frac{1}{N_i}\sum_{y\in\omega_i} y = \frac{1}{N_i}\sum_{x\in\omega_i} w^Tx = w^T\mu_i$$

We could then choose the distance between the projected means as our objective function

$$J(w) = |\tilde{\mu}_1 - \tilde{\mu}_2| = |w^T(\mu_1 - \mu_2)|$$

However, the distance between the projected means is not a very good measure since it does not take into account the standard deviation within the classes.

[Figure: two classes with means µ1 and µ2 in the (x1, x2) plane. One axis has a larger distance between means; the other axis yields better class separability.]
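The following sketch checks numerically that the projected mean equals $w^T\mu_i$ and illustrates why the mean-distance objective ignores class spread: among unit vectors it is always largest along $\mu_1 - \mu_2$. The data are the samples from this lecture's worked example; the function name `mean_distance` and the direction `w` are assumptions made for illustration.

```python
import numpy as np

X1 = np.array([[4, 1], [2, 4], [2, 3], [3, 6], [4, 4]], dtype=float)
X2 = np.array([[9, 10], [6, 8], [9, 5], [8, 7], [10, 8]], dtype=float)
mu1, mu2 = X1.mean(axis=0), X2.mean(axis=0)

def mean_distance(w):
    """J(w) = |w^T (mu1 - mu2)|, the distance between the projected means."""
    return abs(w @ (mu1 - mu2))

# The mean of the projections equals the projection of the mean.
w = np.array([0.6, 0.8])                 # arbitrary unit vector (assumption)
proj_mean_1 = (X1 @ w).mean()
means_agree = np.isclose(proj_mean_1, w @ mu1)

# Among unit vectors, J(w) peaks along mu1 - mu2 regardless of class spread.
w_means = (mu1 - mu2) / np.linalg.norm(mu1 - mu2)

print(means_agree, mean_distance(w), mean_distance(w_means))
```

Because this objective depends only on the class means, it would pick the same direction however elongated or overlapping the classes are.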

Linear Discriminant Analysis, two-classes (3)

The solution proposed by Fisher is to maximize a function that represents the difference between the means, normalized by a measure of the within-class scatter.

For each class we define the scatter, an equivalent of the variance, as

$$\tilde{s}_i^2 = \sum_{y\in\omega_i} (y - \tilde{\mu}_i)^2$$

where the quantity $(\tilde{s}_1^2 + \tilde{s}_2^2)$ is called the within-class scatter of the projected examples.

The Fisher linear discriminant is defined as the linear function $w^Tx$ that maximizes the criterion function

$$J(w) = \frac{|\tilde{\mu}_1 - \tilde{\mu}_2|^2}{\tilde{s}_1^2 + \tilde{s}_2^2}$$

Therefore, we will be looking for a projection where examples from the same class are projected very close to each other and, at the same time, the projected means are as far apart as possible.

[Figure: two classes with means µ1 and µ2 plotted in the (x1, x2) plane]
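A minimal sketch of the Fisher criterion evaluated directly on projected scalars is given here. It uses the samples from this lecture's worked example; the function name `fisher_criterion_1d` and the two candidate directions are hypothetical choices for illustration.

```python
import numpy as np

X1 = np.array([[4, 1], [2, 4], [2, 3], [3, 6], [4, 4]], dtype=float)
X2 = np.array([[9, 10], [6, 8], [9, 5], [8, 7], [10, 8]], dtype=float)

def fisher_criterion_1d(y1, y2):
    """J = (mu~1 - mu~2)^2 / (s~1^2 + s~2^2) for two sets of projected scalars."""
    m1, m2 = y1.mean(), y2.mean()
    s1_sq = np.sum((y1 - m1) ** 2)   # scatter of class 1 (not divided by N1)
    s2_sq = np.sum((y2 - m2) ** 2)   # scatter of class 2 (not divided by N2)
    return (m1 - m2) ** 2 / (s1_sq + s2_sq)

# Two illustrative directions: the x1 axis and the x2 axis.
w_a = np.array([1.0, 0.0])
w_b = np.array([0.0, 1.0])

J_a = fisher_criterion_1d(X1 @ w_a, X2 @ w_a)
J_b = fisher_criterion_1d(X1 @ w_b, X2 @ w_b)
print(J_a, J_b)
```

For this dataset the x1 axis scores higher than the x2 axis, reflecting both a larger gap between the projected means and a smaller within-class scatter.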

Linear Discriminant Analysis, two-classes (4)

In order to find the optimum projection $w^*$, we need to express $J(w)$ as an explicit function of $w$.

We define a measure of the scatter in the multivariate feature space x, the scatter matrices

$$S_i = \sum_{x\in\omega_i} (x - \mu_i)(x - \mu_i)^T$$

$$S_1 + S_2 = S_W$$

where $S_W$ is called the within-class scatter matrix.

The scatter of the projection y can then be expressed as a function of the scatter matrix in feature space x

$$\tilde{s}_i^2 = \sum_{y\in\omega_i} (y - \tilde{\mu}_i)^2 = \sum_{x\in\omega_i} (w^Tx - w^T\mu_i)^2 = \sum_{x\in\omega_i} w^T(x - \mu_i)(x - \mu_i)^Tw = w^TS_iw$$

$$\tilde{s}_1^2 + \tilde{s}_2^2 = w^TS_Ww$$

Similarly, the difference between the projected means can be expressed in terms of the means in the original feature space

$$(\tilde{\mu}_1 - \tilde{\mu}_2)^2 = (w^T\mu_1 - w^T\mu_2)^2 = w^T\underbrace{(\mu_1 - \mu_2)(\mu_1 - \mu_2)^T}_{S_B}w = w^TS_Bw$$

The matrix $S_B$ is called the between-class scatter. Note that, since $S_B$ is the outer product of two vectors, its rank is at most one.

We can finally express the Fisher criterion in terms of $S_W$ and $S_B$ as

$$J(w) = \frac{w^TS_Bw}{w^TS_Ww}$$

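The identities on this slide can be checked numerically. The sketch below builds $S_1$, $S_2$, $S_W$ and $S_B$ for the samples from this lecture's worked example and confirms, for one arbitrary direction, that the matrix form of $J(w)$ matches the value computed from the projected scalars. The helper names `scatter` and `J`, and the test direction `w`, are assumptions made for illustration.

```python
import numpy as np

X1 = np.array([[4, 1], [2, 4], [2, 3], [3, 6], [4, 4]], dtype=float)
X2 = np.array([[9, 10], [6, 8], [9, 5], [8, 7], [10, 8]], dtype=float)
mu1, mu2 = X1.mean(axis=0), X2.mean(axis=0)

def scatter(X):
    """S_i = sum over samples of (x - mu_i)(x - mu_i)^T."""
    d = X - X.mean(axis=0)
    return d.T @ d

S1, S2 = scatter(X1), scatter(X2)
Sw = S1 + S2                              # within-class scatter matrix
Sb = np.outer(mu1 - mu2, mu1 - mu2)       # between-class scatter matrix

def J(w):
    """Fisher criterion in matrix form: (w^T Sb w) / (w^T Sw w)."""
    return (w @ Sb @ w) / (w @ Sw @ w)

w = np.array([0.3, -1.2])                 # arbitrary direction (assumption)
y1, y2 = X1 @ w, X2 @ w
s1_sq = np.sum((y1 - y1.mean()) ** 2)     # projected scatter of class 1
s2_sq = np.sum((y2 - y2.mean()) ** 2)     # projected scatter of class 2
J_projected = (y1.mean() - y2.mean()) ** 2 / (s1_sq + s2_sq)

print(s1_sq, w @ S1 @ w)                  # the two values should agree
print(J_projected, J(w))                  # the two values should agree
print(np.linalg.matrix_rank(Sb))          # rank of an outer product
```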

Linear Discriminant Analysis, two-classes (5)

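To find the maximum of $J(w)$ we differentiate with respect to $w$ and set the result to zero

$$\frac{d}{dw}[J(w)] = \frac{d}{dw}\left[\frac{w^TS_Bw}{w^TS_Ww}\right] = 0 \Rightarrow$$

$$\Rightarrow [w^TS_Ww]\frac{d[w^TS_Bw]}{dw} - [w^TS_Bw]\frac{d[w^TS_Ww]}{dw} = 0 \Rightarrow$$

$$\Rightarrow [w^TS_Ww]\,2S_Bw - [w^TS_Bw]\,2S_Ww = 0$$

Dividing by $w^TS_Ww$ (and dropping the common factor of 2)

$$\frac{[w^TS_Ww]}{[w^TS_Ww]}S_Bw - \frac{[w^TS_Bw]}{[w^TS_Ww]}S_Ww = 0 \Rightarrow$$

$$\Rightarrow S_Bw - JS_Ww = 0 \Rightarrow$$

$$\Rightarrow S_W^{-1}S_Bw - Jw = 0$$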

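Since $S_Bw = (\mu_1 - \mu_2)(\mu_1 - \mu_2)^Tw$ always points in the direction of $(\mu_1 - \mu_2)$, solving the generalized eigenvalue problem ($S_W^{-1}S_Bw = Jw$) yields, up to a scale factor,

$$w^* = \arg\max_w \left\{\frac{w^TS_Bw}{w^TS_Ww}\right\} = S_W^{-1}(\mu_1 - \mu_2)$$

This is known as Fisher's Linear Discriminant (1936), although it is not a discriminant but rather a specific choice of direction for the projection of the data down to one dimension.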

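As a rough numerical check of the closed-form result, the sketch below computes $w^* = S_W^{-1}(\mu_1 - \mu_2)$ for the samples from this lecture's worked example, verifies that it satisfies $S_Bw = J\,S_Ww$, and compares $J(w^*)$ against randomly drawn directions. The random comparison, the seed and the helper names are assumptions added for illustration; they are not a proof of optimality.

```python
import numpy as np

X1 = np.array([[4, 1], [2, 4], [2, 3], [3, 6], [4, 4]], dtype=float)
X2 = np.array([[9, 10], [6, 8], [9, 5], [8, 7], [10, 8]], dtype=float)
mu1, mu2 = X1.mean(axis=0), X2.mean(axis=0)

def scatter(X):
    """Un-normalized scatter matrix of one class."""
    d = X - X.mean(axis=0)
    return d.T @ d

Sw = scatter(X1) + scatter(X2)
Sb = np.outer(mu1 - mu2, mu1 - mu2)

def J(w):
    """Fisher criterion (w^T Sb w) / (w^T Sw w)."""
    return (w @ Sb @ w) / (w @ Sw @ w)

# Closed-form direction; solve() avoids forming the inverse explicitly.
w_star = np.linalg.solve(Sw, mu1 - mu2)
J_star = J(w_star)

# Stationarity condition from the derivation: Sb w = J Sw w.
residual = Sb @ w_star - J_star * (Sw @ w_star)

# Compare against 1000 random directions (illustrative check only).
rng = np.random.default_rng(0)
J_random = np.array([J(w) for w in rng.normal(size=(1000, 2))])

print(J_star, J_random.max(), np.abs(residual).max())
print(J(3.0 * w_star))   # J does not depend on the length of w
```

Since $J(w)$ is unchanged by rescaling $w$, only the direction of `w_star` matters, which is why the result is often normalized to unit length.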

LDA example

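Compute the Linear Discriminant projection for the following two-dimensional dataset

X1=(x1,x2)={(4,1),(2,4),(2,3),(3,6),(4,4)}

X2=(x1,x2)={(9,10),(6,8),(9,5),(8,7),(10,8)}

[Figure: the two classes in the (x1, x2) plane, both axes from 0 to 10, with the direction wLDA]

SOLUTION (by hand)

The class statistics (with each scatter matrix normalized by $N_i = 5$) are:

$$S_1 = \begin{bmatrix} 0.80 & -0.40 \\ -0.40 & 2.64 \end{bmatrix}; \quad S_2 = \begin{bmatrix} 1.84 & -0.04 \\ -0.04 & 2.64 \end{bmatrix}$$

$$\mu_1 = [3.00 \;\; 3.60]; \quad \mu_2 = [8.40 \;\; 7.60]$$

The within- and between-class scatter are

$$S_W = \begin{bmatrix} 2.64 & -0.44 \\ -0.44 & 5.28 \end{bmatrix}; \quad S_B = \begin{bmatrix} 29.16 & 21.60 \\ 21.60 & 16.00 \end{bmatrix}$$

The LDA projection is then obtained as the solution of the generalized eigenvalue problem

$$S_W^{-1}S_Bv = \lambda v \Rightarrow |S_W^{-1}S_B - \lambda I| = 0 \Rightarrow \begin{vmatrix} 11.89 - \lambda & 8.81 \\ 5.08 & 3.76 - \lambda \end{vmatrix} = 0 \Rightarrow \lambda = 15.65$$

$$\begin{bmatrix} 11.89 & 8.81 \\ 5.08 & 3.76 \end{bmatrix} \begin{bmatrix} v_1 \\ v_2 \end{bmatrix} = 15.65 \begin{bmatrix} v_1 \\ v_2 \end{bmatrix} \Rightarrow \begin{bmatrix} v_1 \\ v_2 \end{bmatrix} = \begin{bmatrix} 0.91 \\ 0.39 \end{bmatrix}$$

Or directly by (after normalizing to unit length)

$$w^* = S_W^{-1}(\mu_1 - \mu_2) = [-0.91 \;\; -0.39]^T$$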

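The hand computation can be reproduced with a few lines of NumPy. This is a sketch under the assumption that the class scatter matrices are normalized by $N_i$ (hence `bias=True` in `np.cov`), which is what the tabulated values imply; the variable names are illustrative. The results should agree with the hand-computed values up to rounding and the sign of the eigenvector.

```python
import numpy as np

X1 = np.array([[4, 1], [2, 4], [2, 3], [3, 6], [4, 4]], dtype=float)
X2 = np.array([[9, 10], [6, 8], [9, 5], [8, 7], [10, 8]], dtype=float)
mu1, mu2 = X1.mean(axis=0), X2.mean(axis=0)

# Class scatter normalized by N_i (bias=True), matching the hand computation.
S1 = np.cov(X1, rowvar=False, bias=True)
S2 = np.cov(X2, rowvar=False, bias=True)
Sw = S1 + S2
Sb = np.outer(mu1 - mu2, mu1 - mu2)

# Route 1: eigen-decomposition of Sw^{-1} Sb, keeping the largest eigenvalue.
eigvals, eigvecs = np.linalg.eig(np.linalg.solve(Sw, Sb))
k = np.argmax(eigvals.real)
lam, v = eigvals.real[k], eigvecs.real[:, k]

# Route 2: closed form, rescaled to unit length for comparison.
w = np.linalg.solve(Sw, mu1 - mu2)
w_unit = w / np.linalg.norm(w)

print("mu1, mu2:", mu1, mu2)
print("Sw:\n", np.round(Sw, 2))
print("Sb:\n", np.round(Sb, 2))
print("lambda:", lam)
print("eigenvector:", v)
print("closed form (unit length):", w_unit)
```

The eigenvector returned by `np.linalg.eig` may come out with either sign; both signs describe the same projection line.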

Linear Discriminant Analysis, C-classes (1)

Fisher's LDA generalizes very gracefully for C-class problems.

Instead of one projection $y$, we will now seek (C-1) projections $[y_1, y_2, \dots, y_{C-1}]$ by means of (C-1) projection vectors $w_i$, which can be arranged by columns into a projection matrix $W = [w_1|w_2|\dots|w_{C-1}]$:

$$y_i = w_i^Tx \Rightarrow y = W^Tx$$

Derivation

The generalization of the within-class scatter is

$$S_W = \sum_{i=1}^{C} S_i \quad\text{where}\quad S_i = \sum_{x\in\omega_i} (x - \mu_i)(x - \mu_i)^T \quad\text{and}\quad \mu_i = \frac{1}{N_i}\sum_{x\in\omega_i} x$$

The generalization for the between-class scatter is

$$S_B = \sum_{i=1}^{C} N_i(\mu_i - \mu)(\mu_i - \mu)^T \quad\text{where}\quad \mu = \frac{1}{N}\sum_{\forall x} x = \frac{1}{N}\sum_{i=1}^{C} N_i\mu_i$$

The matrix $S_T = S_B + S_W$ is called the total scatter matrix.

[Figure: three classes in the (x1, x2) plane with class means µ1, µ2, µ3 and overall mean µ, annotated with the within-class scatters SW1, SW2, SW3 and the between-class scatters SB1, SB2, SB3]
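A minimal sketch of the C-class scatter matrices is shown here. It also checks $S_T = S_B + S_W$, assuming the usual definition of total scatter as $\sum_x (x - \mu)(x - \mu)^T$, which the slides do not spell out. The synthetic three-class data, the seed and the function name `scatter_matrices` are hypothetical.

```python
import numpy as np

def scatter_matrices(X, labels):
    """Return (Sw, Sb, mu) for samples X (N x D) and integer class labels."""
    mu = X.mean(axis=0)                       # overall mean
    D = X.shape[1]
    Sw, Sb = np.zeros((D, D)), np.zeros((D, D))
    for c in np.unique(labels):
        Xc = X[labels == c]
        mu_c = Xc.mean(axis=0)                # class mean mu_i
        Sw += (Xc - mu_c).T @ (Xc - mu_c)     # adds S_i
        Sb += len(Xc) * np.outer(mu_c - mu, mu_c - mu)
    return Sw, Sb, mu

# Synthetic data: C = 3 classes in D = 3 dimensions (hypothetical values).
rng = np.random.default_rng(0)
class_means = np.array([[0.0, 0.0, 0.0], [3.0, 1.0, 0.0], [0.0, 4.0, 1.0]])
sizes = [30, 40, 50]
X = np.vstack([rng.normal(m, 1.0, size=(n, 3)) for m, n in zip(class_means, sizes)])
labels = np.repeat(np.arange(3), sizes)

Sw, Sb, mu = scatter_matrices(X, labels)
St = (X - mu).T @ (X - mu)                    # total scatter (assumed definition)

print(np.allclose(St, Sb + Sw))
```

The class sizes are deliberately unequal so that the weights $N_i$ in $S_B$ and in the overall mean actually matter.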

Linear Discriminant Analysis, C-classes (2)

Similarly, we define the mean vector and scatter matrices for the projected samples as

$$\tilde{\mu}_i = \frac{1}{N_i}\sum_{y\in\omega_i} y \qquad \tilde{S}_W = \sum_{i=1}^{C}\sum_{y\in\omega_i} (y - \tilde{\mu}_i)(y - \tilde{\mu}_i)^T$$

$$\tilde{\mu} = \frac{1}{N}\sum_{\forall y} y \qquad \tilde{S}_B = \sum_{i=1}^{C} N_i(\tilde{\mu}_i - \tilde{\mu})(\tilde{\mu}_i - \tilde{\mu})^T$$

From our derivation for the two-class problem, we can write

$$\tilde{S}_W = W^TS_WW$$

$$\tilde{S}_B = W^TS_BW$$

Recall that we are looking for a projection that maximizes the ratio of between-class to within-class scatter. Since the projection is no longer a scalar (it has C-1 dimensions), we then use the determinant of the scatter matrices to obtain a scalar objective function:

$$J(W) = \frac{|\tilde{S}_B|}{|\tilde{S}_W|} = \frac{|W^TS_BW|}{|W^TS_WW|}$$

And we will seek the projection matrix $W^*$ that maximizes this ratio.

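The relations $\tilde{S}_W = W^TS_WW$ and $\tilde{S}_B = W^TS_BW$ can be checked by computing the scatter matrices twice: once on the projected samples and once from the original-space matrices. The sketch below does this for an arbitrary (not optimal) projection matrix; the synthetic data, the seed and the helper name `scatter_matrices` are assumptions for illustration.

```python
import numpy as np

def scatter_matrices(X, labels):
    """Within-class (Sw) and between-class (Sb) scatter matrices."""
    mu = X.mean(axis=0)
    D = X.shape[1]
    Sw, Sb = np.zeros((D, D)), np.zeros((D, D))
    for c in np.unique(labels):
        Xc = X[labels == c]
        mu_c = Xc.mean(axis=0)
        Sw += (Xc - mu_c).T @ (Xc - mu_c)
        Sb += len(Xc) * np.outer(mu_c - mu, mu_c - mu)
    return Sw, Sb

# Synthetic data: C = 3 classes in D = 3 dimensions (hypothetical values).
rng = np.random.default_rng(0)
class_means = np.array([[0.0, 0.0, 0.0], [3.0, 1.0, 0.0], [0.0, 4.0, 1.0]])
sizes = [30, 40, 50]
X = np.vstack([rng.normal(m, 1.0, size=(n, 3)) for m, n in zip(class_means, sizes)])
labels = np.repeat(np.arange(3), sizes)

Sw, Sb = scatter_matrices(X, labels)

# An arbitrary D x (C-1) projection matrix, used only to test the identities.
W = rng.normal(size=(3, 2))
Y = X @ W                                     # each row is y = W^T x

Sw_proj, Sb_proj = scatter_matrices(Y, labels)

# Determinant criterion computed from projected and original scatter matrices.
J_from_projection = np.linalg.det(Sb_proj) / np.linalg.det(Sw_proj)
J_from_original = np.linalg.det(W.T @ Sb @ W) / np.linalg.det(W.T @ Sw @ W)

print(J_from_projection, J_from_original)
```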

Linear Discriminant Analysis, C-classes (3)

It can be shown that the optimal projection matrix $W^*$ is the one whose columns are the eigenvectors corresponding to the largest eigenvalues of the following generalized eigenvalue problem

$$W^* = [w_1^*|w_2^*|\cdots|w_{C-1}^*] = \arg\max_W \left\{\frac{|W^TS_BW|}{|W^TS_WW|}\right\} \Rightarrow (S_B - \lambda_iS_W)w_i^* = 0$$

NOTES

$S_B$ is the sum of C matrices of rank one or less and the mean vectors are constrained by

$$\frac{1}{C}\sum_{i=1}^{C} \mu_i = \mu$$

(for equal class sizes; in general, $\frac{1}{N}\sum_{i=1}^{C} N_i\mu_i = \mu$).

Therefore, $S_B$ will be of rank (C-1) or less.

This means that at most (C-1) of the eigenvalues $\lambda_i$ will be non-zero.

The projections with maximum class separability information are the eigenvectors corresponding to the largest eigenvalues of $S_W^{-1}S_B$.

LDA can be derived as the Maximum Likelihood method for the case of normal class-conditional densities with equal covariance matrices.

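Putting the C-class recipe together, a minimal sketch might look like the following. It forms $S_W^{-1}S_B$ with `np.linalg.solve` and keeps the (C-1) leading eigenvectors, assuming $S_W$ is non-singular. The function names `scatter_matrices` and `lda_fit`, the synthetic data and the tolerance passed to `matrix_rank` are illustrative assumptions rather than part of the lecture.

```python
import numpy as np

def scatter_matrices(X, labels):
    """Within-class (Sw) and between-class (Sb) scatter matrices."""
    mu = X.mean(axis=0)
    D = X.shape[1]
    Sw, Sb = np.zeros((D, D)), np.zeros((D, D))
    for c in np.unique(labels):
        Xc = X[labels == c]
        mu_c = Xc.mean(axis=0)
        Sw += (Xc - mu_c).T @ (Xc - mu_c)
        Sb += len(Xc) * np.outer(mu_c - mu, mu_c - mu)
    return Sw, Sb

def lda_fit(X, labels):
    """Return eigenvalues (descending) and the D x (C-1) projection matrix."""
    Sw, Sb = scatter_matrices(X, labels)
    # Eigen-decomposition of Sw^{-1} Sb; assumes Sw is non-singular.
    eigvals, eigvecs = np.linalg.eig(np.linalg.solve(Sw, Sb))
    order = np.argsort(eigvals.real)[::-1]
    n_classes = len(np.unique(labels))
    return eigvals.real[order], eigvecs.real[:, order[:n_classes - 1]]

# Synthetic data: C = 3 classes in D = 4 dimensions (hypothetical values).
rng = np.random.default_rng(0)
class_means = np.array([[0.0, 0.0, 0.0, 0.0],
                        [3.0, 1.0, 0.0, 0.0],
                        [0.0, 4.0, 1.0, 0.0]])
sizes = [30, 40, 50]
X = np.vstack([rng.normal(m, 1.0, size=(n, 4)) for m, n in zip(class_means, sizes)])
labels = np.repeat(np.arange(3), sizes)

eigvals, W = lda_fit(X, labels)
Y = X @ W                                      # projected samples, N x (C-1)

Sw, Sb = scatter_matrices(X, labels)
rank_Sb = np.linalg.matrix_rank(Sb, tol=1e-8)  # expected to be at most C-1

print("eigenvalues:", eigvals)
print("rank of Sb:", rank_Sb)
print("projected shape:", Y.shape)
```

With D = 4 and C = 3, only two eigenvalues should be noticeably different from zero, in line with the rank argument in the notes above. For ill-conditioned or singular $S_W$ this direct approach breaks down and some form of regularization or a prior dimensionality reduction would be needed.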