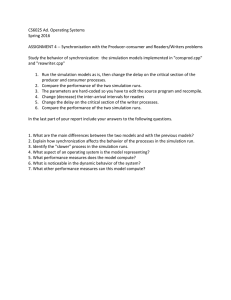

A Survey on Parallel and Distributed Multi-Agent Systems for
