The Trigger Control System and the Common GEM and Silicon

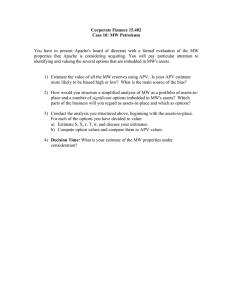
