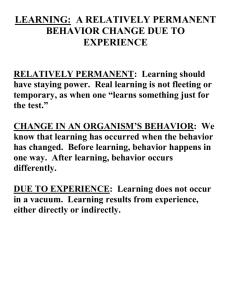

Different mechanisms are responsible for
