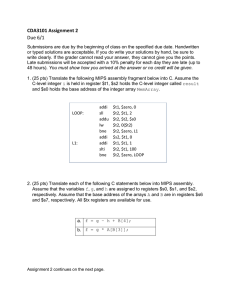

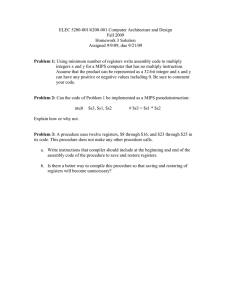

ALU Architecture and ISA Extensions


Lecture notes from MKP, H. H. Lee and S. Yalamanchili

Reading

• Sections 3.2-3.5 (only those elements covered

in class)

• Sections 3.6-3.8

• Appendix B.5

• Practice Problems: 26, 27

• Goal: Understand the

v ISA view of the core microarchitecture

v Organization of functional units and register files into

basic data paths


Overview

• Instruction Set Architectures have a purpose

v Applications dictate what we need

• We only have a fixed number of bits

v Impact on accuracy

• More is not better

v We cannot afford everything we want

• Basic Arithmetic Logic Unit (ALU) Design

v Addition/subtraction, multiplication, division


Reminder: ISA

[Figure: the processor and memory as seen through the ISA. Labels: Register File (Programmer Visible State); Programmer Invisible State (instruction register, program counter, kernel registers); Arithmetic Logic Unit (ALU); Processor Internal Buses; Memory Interface; byte addressed memory; Memory Map with stack, dynamic data, data segment (static), text segment, and reserved regions. Address labels: 0x00, 0x01, 0x02, 0x03, 0x1F, and 0xFFFFFFFF.]

Who sees what?

Arithmetic for Computers

• Operations on integers

v Addition and subtraction

v Multiplication and division

v Dealing with overflow

• Operation on floating-point real numbers

v Representation and operations

• Let us first look at integers


Integer Addition (3.2)

• Example: 7 + 6

• Overflow if result out of range

v Adding +ve and –ve operands, no overflow

v Adding two +ve operands

o Overflow if result sign is 1

v Adding two –ve operands

o Overflow if result sign is 0

Integer Subtraction

• Add negation of second operand

• Example: 7 – 6 = 7 + (–6)

+7: 0000 0000 … 0000 0111
–6: 1111 1111 … 1111 1010
+1: 0000 0000 … 0000 0001

(2’s complement representation)

• Overflow if result out of range

v Subtracting two +ve or two –ve operands, no overflow

v Subtracting +ve from –ve operand

o Overflow if result sign is 0

v Subtracting –ve from +ve operand

o Overflow if result sign is 1
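The sign rules on this slide and on the integer addition slide can be checked mechanically. The following is a minimal C sketch, not part of the original notes. The function names are hypothetical, the wrapping arithmetic is done on unsigned values to mimic what addu and subu compute, and the conversion back to int32_t assumes the usual two's complement behavior.

```c
#include <stdbool.h>
#include <stdint.h>

/* Wrapping 32-bit add, as an instruction that ignores overflow would compute it.
   Assumption: converting uint32_t to int32_t wraps (two's complement). */
int32_t wrap_add(int32_t a, int32_t b) {
    return (int32_t)((uint32_t)a + (uint32_t)b);
}

/* Wrapping 32-bit subtract. */
int32_t wrap_sub(int32_t a, int32_t b) {
    return (int32_t)((uint32_t)a - (uint32_t)b);
}

/* Addition overflows only when both operands have the same sign
   and the sign of the result differs from it. */
bool add_overflows(int32_t a, int32_t b) {
    int32_t sum = wrap_add(a, b);
    return ((a >= 0) == (b >= 0)) && ((sum >= 0) != (a >= 0));
}

/* Subtraction overflows only when the operands have different signs
   and the sign of the result differs from the sign of a. */
bool sub_overflows(int32_t a, int32_t b) {
    int32_t diff = wrap_sub(a, b);
    return ((a >= 0) != (b >= 0)) && ((diff >= 0) != (a >= 0));
}
```

A language that must raise an exception on overflow would test these conditions (or let the add/sub instructions trap); a language that ignores overflow would simply keep the wrapped value.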


ISA Impact

• Some languages (e.g., C) ignore overflow

v Use MIPS addu, addiu, subu instructions

• Other languages (e.g., Ada, Fortran) require

raising an exception

v Use MIPS add, addi, sub instructions

v On overflow, invoke exception handler

o Save PC in exception program counter (EPC) register

o Jump to predefined handler address

o mfc0 (move from coprocessor register) instruction can

retrieve EPC value, to return after corrective action

(more later)

• ALU Design leads to many solutions. We look at

one simple example


Integer ALU (arithmetic logic unit) (B.5)

• Build a 1 bit ALU, and use 32 of them (bit-slice)

[Figure: ALU block with inputs a and b, an operation control input, and a result output.]

Single Bit ALU

Implements only AND and OR operations

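[Figure: an AND gate and an OR gate, both on inputs A and B, feed inputs 0 and 1 of a multiplexer; Operation selects which one drives Result.]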

Adding Functionality

• We can add additional operators (to a point)

• How about addition?

cout = a·b + a·cin + b·cin

sum = a ⊕ b ⊕ cin

[Figure: full adder block with inputs a, b, and CarryIn and outputs Sum and CarryOut.]

• Review full adders from digital design
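The two full adder equations can be written directly as bitwise C, and chaining 32 such slices gives a ripple-carry adder. This is a minimal sketch for illustration only; the type and function names are hypothetical and do not come from the notes.

```c
#include <stdint.h>

/* Outputs of one 1-bit slice; both fields are 0 or 1. */
typedef struct {
    unsigned sum;
    unsigned cout;
} AdderBit;

/* One full adder: sum = a xor b xor cin, cout = ab + a.cin + b.cin. */
AdderBit full_adder(unsigned a, unsigned b, unsigned cin) {
    AdderBit r;
    r.sum = a ^ b ^ cin;
    r.cout = (a & b) | (a & cin) | (b & cin);
    return r;
}

/* 32 slices in a chain: each CarryOut feeds the next CarryIn. */
uint32_t ripple_add(uint32_t a, uint32_t b, unsigned cin, unsigned *cout) {
    uint32_t result = 0;
    unsigned carry = cin & 1u;
    for (int i = 0; i < 32; i++) {
        AdderBit bit = full_adder((a >> i) & 1u, (b >> i) & 1u, carry);
        result |= (uint32_t)bit.sum << i;
        carry = bit.cout;
    }
    if (cout) {
        *cout = carry;  /* carry out of the most significant slice */
    }
    return result;
}
```

The loop makes the cost of this design visible: the carry into slice 31 is not known until all lower slices have settled.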


Building a 32-bit ALU

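[Figure: 32 one-bit ALUs (ALU0 to ALU31) connected as a ripple-carry chain. Slice i takes ai, bi, and CarryIn and produces Resulti and CarryOut; each CarryOut feeds the CarryIn of the next slice, and all slices share the Operation control. Inset: the 1-bit ALU, in which a 3-input multiplexer (0, 1, 2) controlled by Operation selects Result.]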

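Subtraction (a – b)?

• Two's complement approach: just negate b and add 1.

• How do we negate?

• A clever solution: invert each bit of b with a Binvert control, and supply the "add 1" by setting the CarryIn of ALU0 to 1 (the sub signal drives both)

[Figure: ripple-carry ALU (ALU0 to ALU31) with the sub signal wired to Binvert and to the CarryIn of ALU0. Inset: 1-bit ALU in which a multiplexer controlled by Binvert selects b or its complement ahead of the adder, and a 3-input multiplexer (0, 1, 2) controlled by Operation selects Result.]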
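The "negate b and add 1" idea on this slide can be sketched in a few lines of C: one control bit both inverts b and becomes the carry into bit 0. The function name `add_sub` and the single `sub` parameter are assumptions made for illustration.

```c
#include <stdint.h>

/* sub = 0: returns a + b.  sub = 1: returns a - b, computed as a + ~b + 1. */
uint32_t add_sub(uint32_t a, uint32_t b, unsigned sub) {
    unsigned binvert = sub & 1u;        /* same signal drives both uses   */
    uint32_t b_in = binvert ? ~b : b;   /* per-bit inverter plus selector */
    return a + b_in + binvert;          /* CarryIn of bit 0 is binvert    */
}
```

For example, `add_sub(7, 6, 1)` adds 7, the bitwise complement of 6, and a carry-in of 1, which yields 1 after the result wraps to 32 bits.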


Tailoring the ALU to the MIPS

• Need to support the set-on-less-than instruction (slt)

v remember: slt is an arithmetic instruction

v produces a 1 if rs < rt and 0 otherwise

v use subtraction: (a-b) < 0 implies a < b

• Need to support test for equality (beq $t5, $t6, label)

v use subtraction: (a-b) = 0 implies a = b

What is Result31 when (a-b) < 0?

[Figure: 32-bit ALU in which every slice has a Less input. The Set output of ALU31 (the sign of a-b) is routed to the Less input of ALU0; the Less inputs of ALU1 to ALU31 are tied to 0. ALU31 also produces Overflow. Inset: 1-bit ALU with Binvert, CarryIn, and a 4-input multiplexer (0 to 3) whose input 3 is Less.]

Unsigned vs. signed support

Test for equality

• Notice control lines (Bnegate, Operation):

000 = and
001 = or
010 = add
110 = subtract
111 = slt

• Note: zero is a 1 when the result is zero!

• Note test for overflow!

[Figure: the 32-bit ALU (ALU0 to ALU31) with a single Bnegate control, Less inputs of ALU1 to ALU31 tied to 0, Set and Overflow outputs on ALU31, and a Zero output derived from Result0 to Result31.]
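The control-line table above can be exercised with a small behavioral model. This is a sketch under stated assumptions rather than the notes' design: the names `AluOut` and `alu` are hypothetical, the model works on whole 32-bit words instead of bit slices, and the slt case XORs the sign bit with the overflow bit so that the comparison stays correct when a-b overflows, which goes slightly beyond the "use the sign of a-b" rule stated in the notes.

```c
#include <stdint.h>

typedef struct {
    uint32_t result;
    unsigned zero;      /* 1 when result is zero                 */
    unsigned overflow;  /* signed overflow, add and subtract only */
} AluOut;

/* control is the 3-bit value from the table: Bnegate, then 2 Operation bits. */
AluOut alu(unsigned control, uint32_t a, uint32_t b) {
    unsigned bnegate = (control >> 2) & 1u;  /* invert b, carry in 1 */
    unsigned op = control & 3u;              /* multiplexer select   */
    uint32_t b_in = bnegate ? ~b : b;
    uint32_t sum = a + b_in + bnegate;
    /* Overflow: adder inputs agree in sign, sum disagrees. */
    unsigned ovf = (unsigned)((~(a ^ b_in) & (a ^ sum)) >> 31);

    AluOut out = {0u, 0u, 0u};
    switch (op) {
    case 0: out.result = a & b_in; break;                   /* and       */
    case 1: out.result = a | b_in; break;                   /* or        */
    case 2: out.result = sum; out.overflow = ovf; break;    /* add / sub */
    default: out.result = ((sum >> 31) ^ ovf) & 1u; break;  /* slt       */
    }
    out.zero = (out.result == 0u);
    return out;
}
```

A beq would issue control 110 (subtract) and branch when `zero` is 1; slt issues 111 and writes `result` to the destination register.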

ISA View

[Figure: CPU/Core containing registers $0, $1, …, $31 connected to the ALU.]

• Register-to-Register data path

• We want this to be as fast as possible

Multiplication (3.3)

• Long multiplication

      1000    (multiplicand)
    × 1001    (multiplier)
    ------
      1000
     0000
    0000
   1000
   -------
   1001000    (product)

Length of product is the sum of operand lengths
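The long multiplication above is a sequence of shifts and conditional adds, one per multiplier bit. A minimal C sketch of that procedure for unsigned 32-bit operands follows; the function name is hypothetical, and the 64-bit return type reflects the point that the product needs as many bits as both operands combined.

```c
#include <stdint.h>

/* Unsigned shift-and-add multiplication: 32-bit operands, 64-bit product. */
uint64_t shift_add_multiply(uint32_t multiplicand, uint32_t multiplier) {
    uint64_t product = 0;
    uint64_t shifted = multiplicand;  /* multiplicand aligned to the current bit */
    for (int i = 0; i < 32; i++) {
        if (multiplier & 1u) {
            product += shifted;       /* this multiplier bit is 1: add the row */
        }
        shifted <<= 1;                /* next row is shifted one place left */
        multiplier >>= 1;
    }
    return product;
}
```

With the operands from the slide, 1000 (8) times 1001 (9), the two nonzero rows are 8 and 8 shifted left by 3, giving 72, which is 1001000 in binary.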


A Multiplier

• Uses multiple adders

v Cost/performance tradeoff

• Can be pipelined

v Several multiplications performed in parallel

MIPS Multiplication

• Two 32-bit registers for product

v HI: most-significant 32 bits

v LO: least-significant 32 bits

• Instructions

v mult rs, rt / multu rs, rt

o 64-bit product in HI/LO

v mfhi rd / mflo rd

o Move from HI/LO to rd

o Can test HI value to see if product overflows 32 bits

v mul rd, rs, rt

o Least-significant 32 bits of product → rd

Study Exercise: Check out signed and

unsigned multiplication with QtSPIM
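Before trying the exercise in QtSPIM, it can help to see what HI and LO should contain. The following C sketch models the split of a 64-bit product into two 32-bit halves. The struct and function names are hypothetical and merely borrow the instruction mnemonics; the two "fits" helpers illustrate the "test HI" remark and are an assumption about how such a test could be written.

```c
#include <stdint.h>

typedef struct {
    uint32_t hi;  /* most-significant 32 bits of the product  */
    uint32_t lo;  /* least-significant 32 bits of the product */
} HiLo;

/* Unsigned multiply, modeled on multu. */
HiLo multu(uint32_t rs, uint32_t rt) {
    uint64_t p = (uint64_t)rs * rt;
    HiLo r = { (uint32_t)(p >> 32), (uint32_t)p };
    return r;
}

/* Signed multiply, modeled on mult. */
HiLo mult(int32_t rs, int32_t rt) {
    int64_t p = (int64_t)rs * rt;
    uint64_t bits = (uint64_t)p;  /* reinterpret as a 64-bit pattern */
    HiLo r = { (uint32_t)(bits >> 32), (uint32_t)bits };
    return r;
}

/* Unsigned product fits in 32 bits when HI is zero. */
int unsigned_fits_32(HiLo r) {
    return r.hi == 0u;
}

/* Signed product fits in 32 bits when HI is the sign extension of LO. */
int signed_fits_32(HiLo r) {
    uint32_t extension = (r.lo >> 31) ? 0xFFFFFFFFu : 0u;
    return r.hi == extension;
}
```

Note that the same bit patterns give different HI values depending on signedness: 0xFFFFFFFF times 2 leaves HI = 1 when unsigned, but HI = 0xFFFFFFFF when the first operand is read as -1.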


Division (3.4)

            1001      (quotient)
  1000 ) 1001010      (divisor, dividend)
        -1000
            10
            101
            1010
           -1000
              10      (remainder)

n-bit operands yield n-bit quotient and remainder

• Check for 0 divisor

• Long division approach

v If divisor ≤ dividend bits

o 1 bit in quotient, subtract

v Otherwise

o 0 bit in quotient, bring down next dividend bit

• Restoring division

v Do the subtract, and if remainder goes < 0, add divisor back

• Signed division

v Divide using absolute values

v Adjust sign of quotient and remainder as required
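The restoring division steps listed above translate almost line for line into a loop over the dividend bits. This is a minimal unsigned sketch; the names are hypothetical, and the caller is assumed to have already checked for a zero divisor, as the first bullet requires.

```c
#include <stdint.h>

typedef struct {
    uint32_t quotient;
    uint32_t remainder;
} DivResult;

/* Unsigned restoring division. Assumption: divisor != 0 (caller checks). */
DivResult restoring_divide(uint32_t dividend, uint32_t divisor) {
    int64_t rem = 0;   /* signed and wider, so a negative trial result is visible */
    uint32_t quot = 0;
    for (int i = 31; i >= 0; i--) {
        rem = (rem << 1) | ((dividend >> i) & 1u);  /* bring down next bit */
        rem -= divisor;                             /* trial subtract      */
        if (rem < 0) {
            rem += divisor;                         /* restore             */
            quot <<= 1;                             /* quotient bit 0      */
        } else {
            quot = (quot << 1) | 1u;                /* quotient bit 1      */
        }
    }
    DivResult r = { quot, (uint32_t)rem };
    return r;
}
```

For the operands on the slide, 1001010 (74) divided by 1000 (8), the loop produces quotient 1001 (9) and remainder 10 (2). Signed division would wrap this routine: divide the absolute values, then fix the signs.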


Faster Division

• Can’t use parallel hardware as in multiplier

v Subtraction is conditional on sign of remainder

• Faster dividers (e.g. SRT division) generate

multiple quotient bits per step

v Still require multiple steps

• Customized implementations for high

performance, e.g., supercomputers


MIPS Division

• Use HI/LO registers for result

v HI: 32-bit remainder

v LO: 32-bit quotient

• Instructions

v div rs, rt / divu rs, rt

v No overflow or divide-by-0

checking

o Software must perform checks

if required

v Use mfhi, mflo to access result

Study Exercise: Check out signed

and unsigned division with QtSPIM


ISA View

[Figure: CPU/Core containing registers $0, $1, …, $31, the ALU, and a Multiply/Divide unit with its Hi and Lo registers.]

• Additional function units and registers (Hi/Lo)

• Additional instructions to move data to/from

these registers

v mfhi, mflo

• What other instructions would you add? Cost?


Floating Point (3.5)

• Representation for non-integral numbers

v Including very small and very large numbers

• Like scientific notation

v –2.34 × 10^56 (normalized)

v +0.002 × 10^–4 (not normalized)

v +987.02 × 10^9 (not normalized)

• In binary

v ±1.xxxxxxx (base 2) × 2^yyyy

• Types float and double in C

IEEE 754 Floating-point Representation

Single Precision (32-bit)

bit 31: S (sign), 1 bit
bits 30-23: exponent, 8 bits
bits 22-0: significand (fraction), 23 bits

value = (–1)^sign × (1 + fraction) × 2^(exponent – 127)

Double Precision (64-bit)

bit 63: S (sign), 1 bit
bits 62-52: exponent, 11 bits
bits 51-32: significand (fraction), 20 bits
bits 31-0: significand (continued), 32 bits

value = (–1)^sign × (1 + fraction) × 2^(exponent – 1023)
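To make the single precision layout concrete, the sketch below pulls the three fields out of a C float and rebuilds the value from the formula. It is illustrative only: the names are hypothetical, it assumes that float is an IEEE 754 32-bit value on the target machine, and the reconstruction handles normalized numbers only (exponent field 1 to 254), not zero, denormals, infinities, or NaN.

```c
#include <stdint.h>
#include <string.h>

typedef struct {
    unsigned sign;      /* bit 31     */
    unsigned exponent;  /* bits 30-23 */
    uint32_t fraction;  /* bits 22-0  */
} Fp32Fields;

/* Split a float into its sign, exponent, and fraction fields. */
Fp32Fields fp32_fields(float x) {
    uint32_t bits;
    memcpy(&bits, &x, sizeof bits);  /* reinterpret the 32 bits safely */
    Fp32Fields f;
    f.sign = bits >> 31;
    f.exponent = (bits >> 23) & 0xFFu;
    f.fraction = bits & 0x7FFFFFu;
    return f;
}

/* (-1)^sign * (1 + fraction / 2^23) * 2^(exponent - 127), normalized only. */
double fp32_value(Fp32Fields f) {
    double significand = 1.0 + (double)f.fraction / 8388608.0;  /* 2^23 */
    int e = (int)f.exponent - 127;  /* remove the bias */
    double scale = 1.0;
    for (; e > 0; e--) scale *= 2.0;
    for (; e < 0; e++) scale *= 0.5;
    double magnitude = significand * scale;
    return f.sign ? -magnitude : magnitude;
}
```

As a check by hand, -0.75 is -1.1 (base 2) × 2^-1, so the fields are sign 1, exponent 126, and a fraction with only its top bit set (0x400000).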


Floating Point Standard

• Defined by IEEE Std 754-1985

• Developed in response to divergence of

representations

v Portability issues for scientific code

• Now almost universally adopted

• Two representations

v Single precision (32-bit)

v Double precision (64-bit)


FP Adder Hardware

• Much more complex than integer adder

• Doing it in one clock cycle would take too long

v Much longer than integer operations

v Slower clock would penalize all instructions

• FP adder usually takes several cycles

v Can be pipelined

Example: FP Addition


FP Adder Hardware

[Figure: FP adder datapath, annotated with Step 1, Step 2, Step 3, and Step 4.]

FP Arithmetic Hardware

• FP multiplier is of similar complexity to FP

adder

v But uses a multiplier for significands instead of an

adder

• FP arithmetic hardware usually does

v Addition, subtraction, multiplication, division,

reciprocal, square-root

v FP ↔ integer conversion

• Operations usually take several cycles

v Can be pipelined

ISA Impact

• FP hardware is coprocessor 1

v Adjunct processor that extends the ISA

• Separate FP registers

v 32 single-precision: $f0, $f1, … $f31

v Paired for double-precision: $f0/$f1, $f2/$f3, …

o Release 2 of the MIPS ISA supports 32 × 64-bit FP registers

• FP instructions operate only on FP registers

v Programs generally do not perform integer ops on FP

data, or vice versa

v More registers with minimal code-size impact


ISA View: The Co-Processor

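[Figure: three blocks. CPU/Core: registers $0, $1, …, $31, the ALU, and a Multiply/Divide unit with Hi and Lo. Co-Processor 1: registers $0, $1, …, $31 and the FP ALU. Co-Processor 0: BadVaddr, Status, Cause, and EPC registers (covered later).]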

• Floating point operations access a separate set

of 32-bit registers

v Pairs of 32-bit registers are used for double precision


ISA View

• Distinct instructions operate on the floating

point registers (pg. A-73)

v Arithmetic instructions

o add.d fd, fs, ft (double precision) and add.s fd, fs, ft (single precision)

• Data movement to/from floating point coprocessors

v mfc1 rt, fs and mtc1 rt, fs

• Note that the ISA design implementation is

extensible via co-processors

• FP load and store instructions

v lwc1, ldc1, swc1, sdc1

o e.g., ldc1 $f8, 32($sp)

Example: DP Mean


Associativity

• Floating point arithmetic is not associative

• Parallel programs may interleave operations in unexpected orders

v Assumptions of associativity may fail

Example with x = -1.50E+38, y = 1.50E+38, z = 1.0:

(x+y)+z: x + y = 0.00E+00, then adding z gives 1.00E+00
x+(y+z): y + z = 1.50E+38, then adding x gives 0.00E+00

• Need to validate parallel programs under varying degrees of parallelism
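The example values on this slide can be reproduced in a few lines of C. This is a small sketch with hypothetical function names; it assumes the compiler is not allowed to reassociate floating point expressions (for instance, that an option such as -ffast-math is not in use).

```c
/* Left-to-right grouping: (x + y) + z */
float sum_left(float x, float y, float z) {
    return (x + y) + z;
}

/* Right-to-left grouping: x + (y + z) */
float sum_right(float x, float y, float z) {
    return x + (y + z);
}
```

Calling both with x = -1.5e38f, y = 1.5e38f, z = 1.0f gives 1.0 from `sum_left` and 0.0 from `sum_right`: in the second grouping the 1.0 is far below the precision available at magnitude 1.5e38, so y + z rounds back to y.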


Performance Issues

• Latency of instructions

v Integer instructions can take a single cycle

v Floating point instructions can take multiple cycles

v Some (FP Divide) can take hundreds of cycles

• What about energy (we will get to that shortly)

• What other instructions would you like in

hardware?

v Would some applications change your mind?

• How do you decide whether to add new

instructions?


Characterizing Parallelism

[Figure: 2 × 2 grid of Instruction Streams (single, multiple) against Data Streams (single, multiple).]

• SISD: today's serial computing cores (von Neumann model)

• SIMD: single instruction, multiple data stream computing, e.g., SSE

• MISD

• MIMD: today’s multicore

• Characterization due to M. Flynn*

*M. Flynn (September 1972). "Some Computer Organizations and Their Effectiveness". IEEE Transactions on Computers, C-21 (9): 948-960.

Parallelism Categories

From http://en.wikipedia.org/wiki/Flynn%27s_taxonomy


Multimedia (3.6, 3.7, 3.8)

• Lower dynamic range and precision

requirements

v Do not need 32-bits!

• Inherent parallelism in the operations


Vector Computation

• Operate on multiple data elements (vectors) at

a time

• Flexible definition/use of registers

• Registers hold integers, floats (SP), doubles (DP)

[Figure: one 128-bit register viewed as 1 × 128-bit integer, 2 × 64-bit double precision, 4 × 32-bit single precision, or 8 × 16-bit short integers.]

Processing Vectors

[Figure: data moving between memory and vector registers.]

• When is this more efficient?

• When is this not efficient?

• Think of 3D graphics, linear algebra and media processing

Case Study: Intel Streaming SIMD Extensions

• Eight 128-bit XMM registers

v x86-64 adds 8 more registers, XMM8-XMM15

• 8, 16, 32, 64 bit integers (SSE2)

• 32-bit (SP) and 64-bit (DP) floating point

• Signed/unsigned integer operations

• IEEE 754 floating point support

• Reading Assignment:

v http://en.wikipedia.org/wiki/Streaming_SIMD_Extensions

v http://neilkemp.us/src/sse_tutorial/sse_tutorial.html#I


Instruction Categories

• Floating point instructions

v Arithmetic, movement

v Comparison, shuffling

v Type conversion, bit level

• Integer

• Other

v e.g., cache management

• ISA extensions!

• Advanced Vector Extensions (AVX)

v Successor to SSE

[Figure labels: register, memory, register.]

Arithmetic View

• Graphics and media processing operates on

vectors of 8-bit and 16-bit data

v Use 64-bit adder, with partitioned carry chain

o Operate on 8×8-bit, 4×16-bit, or 2×32-bit vectors

v SIMD (single-instruction, multiple-data)

• Saturating operations

v On overflow, result is largest representable value

o cf. 2's-complement modulo arithmetic

v E.g., clipping in audio, saturation in video

[Figure: the 64-bit adder partitioned as 4 × 16-bit and as 2 × 32-bit.]
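Both ideas on the Arithmetic View slide, saturation and a partitioned carry chain, can be imitated in portable C. The sketch below is illustrative only and uses hypothetical names. `sat_add16` clamps at both ends of the signed 16-bit range (the slide mentions only the largest value), and `padd16x4` emulates a 64-bit adder whose carry chain is cut at every 16-bit boundary, with each lane wrapping modulo 2^16.

```c
#include <stdint.h>

/* Saturating signed 16-bit add: clamp instead of wrapping on overflow. */
int16_t sat_add16(int16_t a, int16_t b) {
    int32_t wide = (int32_t)a + (int32_t)b;  /* cannot overflow in 32 bits */
    if (wide > INT16_MAX) return INT16_MAX;
    if (wide < INT16_MIN) return INT16_MIN;
    return (int16_t)wide;
}

/* Four independent 16-bit adds packed in one 64-bit word (modulo per lane). */
uint64_t padd16x4(uint64_t a, uint64_t b) {
    const uint64_t TOP = 0x8000800080008000ULL;  /* top bit of each lane */
    /* Add the low 15 bits of every lane; a carry can reach the lane's own
       top bit but cannot cross into the neighboring lane. */
    uint64_t low = (a & ~TOP) + (b & ~TOP);
    /* Fold in the top bits with XOR, which discards each lane's carry out. */
    return low ^ ((a ^ b) & TOP);
}
```

Hardware gets the same effect by gating the carry between slices rather than by masking, which is why one adder can serve 8 × 8-bit, 4 × 16-bit, or 2 × 32-bit operands.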

SSE Example

// A 16-byte (128-bit) vector struct
struct Vector4
{
    float x, y, z, w;
};

// Add two constant vectors and return the resulting vector
Vector4 SSE_Add ( const Vector4 &Op_A, const Vector4 &Op_B )
{
    Vector4 Ret_Vector;

    __asm
    {
        MOV EAX, Op_A                // Load pointers into CPU regs
        MOV EBX, Op_B

        MOVUPS XMM0, [EAX]           // Move unaligned vectors to SSE regs
        MOVUPS XMM1, [EBX]

        ADDPS XMM0, XMM1             // Add vector elements
        MOVUPS [Ret_Vector], XMM0    // Save the return vector
    }

    return Ret_Vector;
}

From http://neilkemp.us/src/sse_tutorial/sse_tutorial.html#I

More complex example (matrix multiply) in Section 3.8, using AVX

Intel Xeon Phi

[Images from www.anandtech.com and www.techpowerup.com]

Data Parallel vs. Traditional Vector

[Figure: Vector Architecture: Vector Register A and Vector Register B feed a pipelined functional unit that writes Vector Register C. Data Parallel Architecture: registers feed an array of processing elements; process each square in parallel (data parallel computation).]

ISA View

[Figure: CPU/Core with registers $0, $1, …, $31, the ALU, and a Multiply/Divide unit with Hi and Lo, alongside SIMD registers XMM0, XMM1, …, XMM15 feeding a Vector ALU.]

• Separate core data path

• Can be viewed as a co-processor with a distinct

set of instructions


Domain Impact on the ISA: Example

Scientific Computing

• Floats

• Double precision

• Massive data

• Power constrained

Embedded Systems

• Integers

• Lower precision

• Streaming data

• Security support

• Energy constrained

Summary

• ISAs support operations required of application

domains

v Note the differences between embedded and

supercomputers!

v Signed, unsigned, FP, SIMD, etc.

• Bounded precision effects

v Software must be careful about how the hardware is used, e.g., associativity

v Need standards to promote portability

• Avoid “kitchen sink” designs

v There is no free lunch

v Impact on speed and energy → we will get to this later

Study Guide

• Perform 2’s complement addition and subtraction

(review)

• Add a few more instructions to the simple ALU

v Add an XOR instruction

v Add an instruction that returns the max of its inputs

v Make sure all control signals are accounted for

• Convert real numbers to single precision floating

point (review) and extract the value from an

encoded single precision number (review)

• Execute the SPIM programs (class website) that

use floating point numbers. Study the memory/

register contents via single step execution


Study Guide (cont.)

• Write a few simple SPIM programs for

v Multiplication/division of signed and unsigned

numbers

o Use numbers that produce >32-bit results

o Move to/from HI and LO registers (find the instructions for doing so)

v Addition/subtraction of floating point numbers

• Try to write a simple SPIM program that

demonstrates that floating point operations are

not associative (this takes some thought and

review of the range of floating point numbers)

• Look up additional SIMD instruction sets and

compare

v ARM NEON, AltiVec, AMD 3DNow!

Glossary

• Co-processor

• Data parallelism

• Data parallel computation vs. vector computation

• Instruction set extensions

• Overflow

• MIMD

• Precision

• SIMD

• Saturating arithmetic

• Signed arithmetic support

• Unsigned arithmetic support

• Vector processing