
Mirza Abdulla, et al International Journal of Computer and Electronics Research [Volume 5, Issue 3, June 2016]

An $O(n^{4/3})$ Worst Case Time Selection Sort Algorithm

Mirza Abdulla

Computer Science Department, AMA International University, Bahrain

mirza999@hotmail.com

Abstract: Sorting is one of the most important and fundamental problems in Computer Science. Techniques like Insertion sort, Selection sort, or Bubble sort were seen as inefficient due to their $O(n^2)$ worst case time complexity. However, this did not prevent researchers from attempting to improve these bounds; Shell sort, for example, was an improvement over Insertion sort. We present a modified selection sort technique that runs in $O(n^{4/3})$ time in the worst case. Thus we obtain an asymptotic improvement by a factor of $O(n^{2/3})$ over the worst case running time of the classical selection sort technique.

Keywords: Selection sort; time complexity; O notation

1. INTRODUCTION

Ever since the construction of the modern day computer, the problems of sorting and searching have been fundamental to the solution of most problems. This stems from the fact that we need to access data as fast as possible, and some arrangements or permutations of data can improve the access time substantially. Indeed, sorting accounts for about half of the processing and data manipulation performed in commercial applications.

Sorting is a permutation of data. More formally, given a set of $n$ elements $a_1, a_2, a_3, \ldots, a_n$, we need to permute the set into $a_{\pi(1)}, a_{\pi(2)}, a_{\pi(3)}, \ldots, a_{\pi(n)}$. Usually, we want to reorder the data into ascending or descending sequence. In this case we have $a_{\pi(i)} \le a_{\pi(j)}$ for $i < j$ when the ordering is to be ascending, and $a_{\pi(i)} \ge a_{\pi(j)}$ for $i < j$ when the ordering is to be descending. In comparing sorting algorithms we may also seek other criteria to be satisfied. Some of the criteria usually considered when comparing sorting algorithms are:

Time efficiency of the sorting technique. There are different measures of time, including best, average, and worst case asymptotic performance.

Space efficiency of the sorting technique.

Number of comparisons made.

Number of data movements made.

Stability of the sort technique.

Some of the most well known sorting techniques include Bubble, Insertion, and Selection sort. These sort techniques share several common traits: simplicity of coding, about $n$ passes over the data, gross inefficiency in running time, and suitability as an introduction to sorting in introductory programming courses. The behavior of these techniques with respect to some other properties is presented in Table 1.

©http://ijcer.org

e- ISSN: 2278-5795

Table 1: Properties

| Sort properties | Selection | Insertion | Bubble |
|---|---|---|---|
| Stability | Not stable | Stable | Stable |
| Extra space | $O(1)$ | $O(1)$ | $O(1)$ |
| Comparisons | $\Theta(n^2)$ | $O(n^2)$ | $O(n^2)$ |
| Swaps | $\Theta(n)$ | $O(n^2)$ | $O(n^2)$ |
| Adaptivity | Not adaptive | Adaptive: $O(n)$ time when nearly sorted | Adaptive: $O(n)$ time when nearly sorted |
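As a baseline for the Selection column of Table 1, the following Python sketch (an illustration, not code from the paper) implements classical selection sort and counts comparisons and swaps. For $n$ elements it always makes $n(n-1)/2$ comparisons and at most $n-1$ swaps. The function name `selection_sort` is hypothetical.

```python
def selection_sort(a):
    """Classical selection sort of list `a`, in place.

    Returns (comparisons, swaps) so the counts in Table 1 can be observed.
    """
    comparisons = swaps = 0
    n = len(a)
    for i in range(n - 1):
        m = i
        # Scan the whole unsorted part a[i+1:] for its minimum.
        for j in range(i + 1, n):
            comparisons += 1
            if a[j] < a[m]:
                m = j
        if m != i:
            a[i], a[m] = a[m], a[i]
            swaps += 1
    return comparisons, swaps


data = [5, 2, 9, 1, 5, 6]
print(selection_sort(data), data)  # (15, 4) [1, 2, 5, 5, 6, 9]
```

The inner loop always runs over the entire unsorted part, whatever the input order, which is why the comparison count is $\Theta(n^2)$ and the technique is not adaptive.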

In this paper we seek to convert such grossly inefficient sorting techniques into more efficient ones by identifying areas of inefficiency and attempting to do away with unduly repetitive tasks, with a focus on the Selection sort technique.

Enhancements to the Selection sort technique appear in the literature. For example, [1] considers improving the data movement in the selection sort process. The asymptotic performance remains as bad as it was before; however, in the best case fewer data movements are made. Along the same lines, [2] makes some improvements to data movement, thus reducing the running time of selection sort to some extent, but with no effect on the asymptotic time performance and at the expense of extra space. Other work, such as that of [3-5], does not make any asymptotic improvement in the running time of the sorting algorithm. On the other hand, [9] makes a substantial worst case improvement by marrying the ideas of insertion sort and selection sort to get a sorting technique with $O(n\sqrt{n})$ worst case running time. [11] improves that idea even further so that it applies to selection sort alone, without sacrificing the $O(n\sqrt{n})$ running time or the use of $O(1)$ extra space.

In this paper, we present a selection sort algorithm with improved worst case running time. We prove that it is substantially better than the classical Selection sort technique, with a time improvement by a factor of $O(n^{2/3})$. Section 2 gives a brief background of the improvement to the classical Selection sort obtained by [11] and its worst case running time analysis. In Section 3 we present the concept of Improved Selection Sort (ISS), its pseudocode, and the theoretical time analysis of the algorithm.

p- ISSN: 2320-9348

Page 36

2. $O(n\sqrt{n})$ WORST CASE TIME SELECTION SORT ALGORITHM

In selection sort we have two partitions: one sorted and the other unsorted. Initially the sorted list is empty, and all the elements are in the unsorted list. For an unsorted list that initially has $n$ items, $n-1$ iterations are made on the list, where each time the minimum is found and swapped with the first element of the unsorted list, thus increasing the sorted list by one and decreasing the unsorted list by one. Unfortunately, and despite its simplicity, this technique requires an overall $\Theta(n^2)$ operations in the best, average, and worst case. However, [11] showed that the use of blocks can significantly improve the time for selecting the minimum element of the unsorted list during each iteration of the Selection sort algorithm.

The idea is to treat the array of $n$ elements as composed of $\sqrt{n}$ consecutive blocks, each of $\sqrt{n}$ elements, which were referred to as level 0 or $L_0$ blocks. The method used to achieve this improvement requires that we always keep the minimum of a block in the first location of that block. During each iteration we search only through the unsorted items of the block to which the current item belongs, and through the first element of each of the other blocks in the unsorted list. The minimum item found is thus the minimum of the unsorted list, and it is therefore swapped with the current item. After the swap, the block from which the minimum was taken may no longer contain the minimum of that block in its first position; therefore another search for the minimum of that block is conducted, and the minimum found is swapped with the first item of that block. This action guarantees that all right blocks contain the minimum of their elements in their first position prior to the start of each iteration of the improved selection sort algorithm.

The $O(n\sqrt{n})$ time algorithm

Step 0: Find the minimum element in each block and interchange it with the first element of that block. This step ensures that, prior to Step 1, every block in the array contains the minimum of that block in its first position.

Step 1: Iterate over Steps 2 to 6 until the whole array is sorted. This is the main loop of the selection sort algorithm.

Step 2: Find the minimum element in the current block. Note that the first position of a block contains the minimum element of the block. However, when any of the other elements in the current block becomes the current element, that position may not initially hold the minimum of the block, and therefore a search over the rest of that block is needed.

Step 3: Find the minimum element in the entire unsorted list. The loop need only inspect the first element of each block of the unsorted list and compare it with the minimum found so far.

Step 4: Find the number of the block where the minimum of the unsorted list was found. This value can easily be obtained by dividing the location by the size of a block. The quotient may be incremented by one if block numbering starts from 1.

Step 5: We now have the location of the minimum element of the entire unsorted list, and therefore we can swap it with the current element.

Step 6: Find the minimum of the block where the minimum of the entire unsorted list was found (Step 4) and put it in the first position of that block. This operation is needed because the minimum of the entire unsorted list was swapped with the current element, so the block that contained that element before the swap may not have the minimum of the block in its first position. Therefore, we may need to find the minimum of the block and interchange it with the first element of the block.

[11] proved that each of Steps 2 to 6 can be performed in $O(\sqrt{n})$ time. Step 1 iterates over the entire list of size $n$, and hence Steps 1 to 6 can be performed in $O(n\sqrt{n})$ time. Step 0 can be performed in $O(n)$ time. Therefore, the entire algorithm can be performed in $O(n\sqrt{n})$ time in the worst case.
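A minimal Python sketch of the one-level scheme, assuming 0-based indexing and right-aligned blocks of size $\lfloor\sqrt{n}\rfloor$ with a shorter partial block at the front (the exact layout used in [11] may differ). The names `block_selection_sort` and `fix_block` are hypothetical; the comments map the code to Steps 0 to 6.

```python
from math import isqrt


def block_selection_sort(a):
    """Sort list `a` in place with one level of blocks; returns `a`.

    Sketch only: blocks of size b = isqrt(n) are right aligned, so the
    first n % b positions form a shorter partial block (an assumption).
    """
    n = len(a)
    if n < 2:
        return a
    b = isqrt(n)
    p = n % b  # size of the partial block at the front

    def fix_block(start):
        # Move the minimum of the full block beginning at `start` to `start`.
        m = start
        for j in range(start + 1, start + b):
            if a[j] < a[m]:
                m = j
        a[start], a[m] = a[m], a[start]

    # Step 0: every full block gets its minimum in its first position.
    for s in range(p, n, b):
        fix_block(s)

    # Step 1: main loop; a[:i] is sorted at the start of each pass.
    for i in range(n):
        # First position of the next full block to the right of i.
        nxt = p if i < p else p + ((i - p) // b + 1) * b
        m = i
        # Step 2: scan the unsorted rest of the current block.
        for j in range(i + 1, nxt):
            if a[j] < a[m]:
                m = j
        # Step 3: only the first element of each right block is inspected.
        for j in range(nxt, n, b):
            if a[j] < a[m]:
                m = j
        if m != i:
            # Step 5: move the minimum of the unsorted list into place.
            a[i], a[m] = a[m], a[i]
            # Steps 4 and 6: a minimum taken from a right block was that
            # block's first element, so m is also where the block starts.
            if m >= nxt:
                fix_block(m)
    return a


print(block_selection_sort([3, 1, 4, 1, 5, 9, 2, 6, 5, 3]))
# prints [1, 1, 2, 3, 3, 4, 5, 5, 6, 9]
```

Each pass touches at most $b$ elements of the current block, about $n/b$ block heads, and one block repair of $b$ elements, which gives the $O(\sqrt{n})$ per-iteration cost quoted above.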

3. IMPROVED SELECTION SORT

The introduction of $L_0$ blocks in [11] led to the better bound of $O(n\sqrt{n})$ for selection sort. This idea may not be improved merely by increasing the number of blocks or the size of a block. However, the technique can be enhanced further by introducing another level of blocks: the $L_1$ blocks.
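A worked sketch of why one level stops at $\sqrt{n}$ and what a second level buys (this accounting is ours, using the block sizes of the algorithm below). With a single level of blocks of size $b$, one iteration scans the rest of the current block, the heads of the right blocks, and possibly repairs one block:

$$T_{\text{iter}}(b) = O\!\left(b + \frac{n}{b} + b\right), \qquad b + \frac{n}{b} \ge 2\sqrt{n}, \text{ with equality at } b = \sqrt{n}.$$

With two levels of sizes $L_0 = n^{1/3}$ and $L_1 = n^{2/3}$, every term becomes $O(n^{1/3})$:

$$T_{\text{iter}} = O\Big(\underbrace{L_0}_{\text{current } L_0 \text{ block}} + \underbrace{L_1/L_0}_{\text{right } L_0 \text{ heads}} + \underbrace{n/L_1}_{\text{right } L_1 \text{ heads}} + \underbrace{2L_0 + L_1/L_0}_{\text{repairs}}\Big) = O(n^{1/3}).$$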

Definition: Given an array $A[1..n]$ holding $n$ elements:

1. We assume that the sorted list is built starting from the lower indexed items and going towards the higher indexed items. We will refer to the higher indexed elements as appearing to the right of the lower indexed elements of the array.

2. Let $u = \lfloor n^{1/3} \rfloor$. We shall refer to each run of $u$ consecutive locations in the array, with the runs right aligned so that the last one ends at location $n$, as a level 0 (or $L_0$) block. Thus there are $\lfloor n/u \rfloor = O(u^2)$ such blocks. In other words, the blocks are right aligned in the array when the lower indexed elements start from the left.

3. The leftmost $P_0 = n \bmod u < u$ locations, which are too few to form a complete $L_0$ block, constitute a partial $L_0$ block (or $P_0$ block).

4. Similarly, with $v = u^2$, we shall refer to each run of $v$ consecutive locations, right aligned in the same way, as a level 1 (or $L_1$) block; the leftmost $P_1 = n \bmod v$ locations constitute a partial $L_1$ block. Thus each $L_1$ block contains $u$ $L_0$ blocks, and there are $\lfloor n/v \rfloor = O(u)$ such $L_1$ blocks.

5. A block ($L_0$ or $L_1$) that has the minimum of that block in its first position is called block rule compliant.

6. The first element after all the elements of the sorted list in the selection sort algorithm, that is, the leftmost element of the unsorted list, is referred to as the current element, and the $L_0$ or $L_1$ block to which it belongs is called the current $L_0$ or $L_1$ block, respectively.

7. All elements in the current $L_0$ or $L_1$ block that are not yet in the sorted list are referred to as right elements of the current element. All $L_0$ blocks to the right of the current element but in the same $L_1$ block are referred to as right $L_0$ blocks. All $L_1$ blocks to the right of the current $L_1$ block are referred to as right $L_1$ blocks.

8. The index of the minimum of the unsorted list is referred to as the minIndex, and the block in which the minimum resides is referred to as the min block. Accordingly, the $L_0$ or $L_1$ block to which the min item belongs is called the min $L_0$ or min $L_1$ block, respectively.
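The block geometry can be computed directly. This sketch follows the pseudocode's convention $P_1 = n \bmod L_1$, $P_0 = P_1 \bmod L_0$, assumes $L_0 = \lfloor n^{1/3} \rfloor$ and $L_1 = L_0^2$ (so $L_1$ is a multiple of $L_0$), and uses the paper's 1-based locations. The helpers `icbrt`, `ceil_div`, `layout`, and `next_block_starts` are hypothetical names. For $n = 31$ the $L_0$ blocks start at locations 2, 5, 8 and so on, and the $L_1$ blocks at 5, 14, and 23, so location 7 lies in the $L_0$ block 5..7 and the $L_1$ block 5..13.

```python
def icbrt(n):
    """Largest integer u with u**3 <= n, for n >= 0."""
    u = round(n ** (1 / 3))
    # Correct any floating-point rounding in either direction.
    while u ** 3 > n:
        u -= 1
    while (u + 1) ** 3 <= n:
        u += 1
    return u


def ceil_div(x, y):
    """Ceiling of x / y for integers with y > 0."""
    return -(-x // y)


def layout(n):
    """Return (L0, L1, P0, P1) for an array of n >= 1 elements."""
    L0 = icbrt(n)   # L0 block size
    L1 = L0 * L0    # L1 block size: each L1 block holds L0 blocks of size L0
    P1 = n % L1     # size of the partial L1 block at the front
    P0 = P1 % L0    # size of the partial L0 block at the front
    return L0, L1, P0, P1


def next_block_starts(i, L0, L1, P0, P1):
    """1-based starts S0, S1 of the next L0 and L1 blocks right of location i."""
    S0 = 1 + P0 if i <= P0 else 1 + ceil_div(i - P0, L0) * L0 + P0
    S1 = 1 + P1 if i <= P1 else 1 + ceil_div(i - P1, L1) * L1 + P1
    return S0, S1


print(layout(31))                          # (3, 9, 1, 4)
print(next_block_starts(7, *layout(31)))   # (8, 14)
```

Because $P_1 \equiv P_0 \pmod{L_0}$ and $L_1$ is a multiple of $L_0$, every $L_1$ boundary is also an $L_0$ boundary, so $S_0 \le S_1$ always holds.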

𝑃0 = 𝑃1 𝑚𝑜𝑑𝑛1/3

//size of partial 𝐿0 block

0. for i = 1+𝑃1 to n step 𝐿1 { //make all 𝐿1 blocks

contain min in 1st position

min = i;

forj = i to 𝒊 + 𝐿1 − 1

if a[j] < a[min] then j=min;

swap a[i] and a[j];

}

fori = 1+𝑃0 to n step 𝐿0 { //make all 𝐿0 blocks

contain min in 1st position

min = i;

forj = i to 𝒊 + 𝐿0 − 1

if a[j] < a[min] then j=min;

swap a[i] and a[j];

}

1. for i = 1 to n

{

2. ifi< = 𝑃0 then 𝑆0 = 1 + 𝑃0

//location of next

𝐿0 block to the right.

else𝑆0 = 1 + ⌈

3.

4.

5.

6.

7.

8.

9.

10.

11.

12.

13.

14.

17.

Improved Algorithm:

𝑃1 = 𝑛𝑚𝑜𝑑𝑛2/3

partial 𝐿1 block

©http://ijcer.org

𝐿0 = 𝑛1/3

𝐿1 = 𝑛2/3

//size

of

e- ISSN: 2278-5795

𝐿0

⌉ ∗ 𝐿0 + 𝑃1

ifi< = 𝑃1 then 𝑆1 = 1 + 𝑃1

𝐿1 block to the right.

else𝑆1 = 1 + ⌈

15.

16.

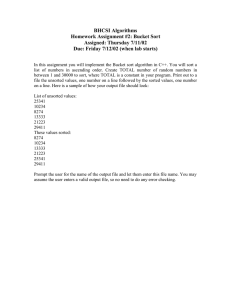

Figure 1: Level 1 blocks contain level 0 blocks which contain

the elements of the array.

(𝑖−𝑃1)

18.

19.

20.

21.

22.

23.

24.

//location of next

(𝑖−𝑃1)

𝐿1

⌉ ∗ 𝐿1 + 𝑃1

min= i

for j=1+ i to 𝑆0 − 1

if a[j] <min then

min = j;

for j=𝑆0 to 𝑆1 − 1 step 𝐿0

if a[j] <min then

min = j;

for j=𝑆1 to 𝑛 step 𝐿1

if a[j] <min then

min = j;

if i ≠ min then swap a[i] and a[min];

else{ if 𝑆0 <min<𝑆1 then {

// min is in one of

𝐿0 blocks in current 𝐿1 block

min1 = min;

for j=minto 𝑆0 − 1 if a[j] <min1 then min1 =

j;

if min1≠ min then swap a[min1] and a[min];

}

If min ≥ 𝑆1 then

// min is in one of

𝐿1 to the right of current block

min1 = min;

for j=minto min +𝐿0 − 1

if

a[j]

<min1 then min1 = j;

for j=min +𝐿0 to min +𝐿1 − 1 step 𝐿0

if a[j] <min1 then min1 = j;

if min1≠ min then

swap a[min1] and a[min];

If min1>min+𝐿0 − 1then {

min2 = min1;

for j= min2 to min2 +𝐿0 − 1 if a[j] <min2 then

min2 = j;

swap a[min1] and a[min2];

}

p- ISSN: 2320-9348

Page 38

Mirza Abdulla, et al International Journal of Computer and Electronics Research [Volume 5, Issue 3, June 2016]

25.

make that 𝐿0 block rule compliant.

This action is performed in these

steps.

}

Explanation of the pseudo code:

Variables: 𝑳𝟎 is the size of 𝐿0 block, 𝑳𝟏 is the size of

𝐿1 block, 𝑷𝟎 is the size of partial 𝐿0 block, and 𝑷𝟏 is

the size of partial 𝐿1 block

Step 0: This step is necessary to make the array block

rule compliant. The idea is to repeat thru the array in

steps equal to the size of 𝐿1 find the minimum in each

block and swap it with the first location of that block.

We repeat again thru the array in steps equal to the

size of 𝐿0 by finding the minimum in each such block

and swap it with the first location of that block.

Step 1: We iterate thru the unsorted list, and each

time:

o Steps 2 and 3: The location of the first 𝐿0

block to the right of the current item (𝑆0 ), and

the location of the first 𝐿1 block to the right

of the current item (𝐿1 ), are found.

o Steps 4 and 5: The minimum of the current

partial𝐿0 block is found.

o Step 6: The minimum of the current partial

𝐿0 block is found, and becomes the minimum

so far

o Step 7: The minimum of the right 𝐿1 blocks

is found and becomes the minimum.

o Step 8: if the current item is not the

minimum of the unsorted list the minimum

found is swapped with the current item. This

way the minimum is found and is added to

the sorted list.

o Step 9: if the location of the minimum was

in the current partial 𝐿1 block but not in the

current partial 𝐿0 block, then the 𝐿0 block

from which it was obtained may not be min

block compliant. So

Steps 10 and 11: The minimum of

that 𝐿0 block is found, and

Step 12: we swap the first item of

that 𝐿0 block with the minimum of

that block.

o Step 14: on the other hand if the minimum

of the unsorted list was obtained from an 𝐿1

block to the right of the current 𝐿1 block:

Steps 15and 16: Find the minimum

of the first 𝐿0 block in that 𝐿1

block, and

Step 17: Find the minimum of the

remaining 𝐿0 blocks in that 𝐿1

block.

Steps 18 and 19: the minimum

found in step 17 is the minimum of

the 𝐿1 and is swapped with the first

item in that 𝐿1 block.

Steps 20 and 23: if the minimum

found in step 17 was obtained from

a block other than the first 𝐿0 block

in that 𝐿1 block, we would have to

©http://ijcer.org

e- ISSN: 2278-5795
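For experimentation, here is a hedged 0-based Python transcription of the pseudocode above (location $k$ in the paper is `a[k-1]` here). It assumes $L_0 = \lfloor n^{1/3} \rfloor$ and $L_1 = L_0^2$, compares values rather than indices in every test, and runs the repairs of Steps 9 to 23 only when Step 8 has made a swap. `improved_selection_sort`, `icbrt`, and `min_index` are illustrative names, not the author's code.

```python
def icbrt(n):
    """Largest integer u with u**3 <= n, for n >= 0."""
    u = round(n ** (1 / 3))
    while u ** 3 > n:
        u -= 1
    while (u + 1) ** 3 <= n:
        u += 1
    return u


def improved_selection_sort(a):
    """Two-level block selection sort of list `a`, in place; returns `a`."""
    n = len(a)
    if n < 2:
        return a
    L0 = icbrt(n)   # L0 block size
    L1 = L0 * L0    # L1 block size (a multiple of L0)
    P1 = n % L1     # size of the partial L1 block at the front
    P0 = P1 % L0    # size of the partial L0 block at the front

    def min_index(m, indices):
        # Index of the smallest among a[m] and a[j] for j in indices.
        for j in indices:
            if a[j] < a[m]:
                m = j
        return m

    # Step 0: make every L1 block, then every L0 block, rule compliant.
    for s in range(P1, n, L1):
        k = min_index(s, range(s + 1, s + L1))
        a[s], a[k] = a[k], a[s]
    for s in range(P0, n, L0):
        k = min_index(s, range(s + 1, s + L0))
        a[s], a[k] = a[k], a[s]

    for i in range(n):                                          # step 1
        S0 = P0 if i < P0 else P0 + ((i - P0) // L0 + 1) * L0   # step 2
        S1 = P1 if i < P1 else P1 + ((i - P1) // L1 + 1) * L1   # step 3
        m = min_index(i, range(i + 1, S0))      # steps 4-5: rest of current L0 block
        m = min_index(m, range(S0, S1, L0))     # step 6: heads of right L0 blocks
        m = min_index(m, range(S1, n, L1))      # step 7: heads of right L1 blocks
        if m == i:
            continue
        a[i], a[m] = a[m], a[i]                 # step 8
        if S0 <= m < S1:
            # Steps 9-12: the L0 block starting at m lost its minimum.
            k = min_index(m, range(m + 1, m + L0))
            a[m], a[k] = a[k], a[m]
        elif m >= S1:
            # Steps 14-17: the new minimum of the L1 block starting at m is in
            # its first L0 block or at the head of one of its other L0 blocks.
            k = min_index(m, range(m + 1, m + L0))
            k = min_index(k, range(m + L0, m + L1, L0))
            if k != m:
                a[m], a[k] = a[k], a[m]         # steps 18-19
                if k >= m + L0:
                    # Steps 20-23: that other L0 block lost its minimum.
                    k2 = min_index(k, range(k + 1, k + L0))
                    a[k], a[k2] = a[k2], a[k]
    return a


print(improved_selection_sort([9, 4, 7, 1, 8, 2, 6, 3, 5, 0]))
# prints [0, 1, 2, 3, 4, 5, 6, 7, 8, 9]
```

For $n < 8$ we get $L_0 = L_1 = 1$, so every element is its own block and the sketch degenerates to classical selection sort, which is still correct.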

Correctness of algorithm

Proposition 1. The first element of an $L_1$ block is also the first element of the first $L_0$ block within that $L_1$ block, and, when the blocks are block rule compliant, it is the minimum of both blocks.

Proof. Follows from the definition of the blocks and of block rule compliance.

Proposition 2. Prior to any iteration of Step 1 of the algorithm, the minimum element of the unsorted list is one of the unsorted elements of the current $L_0$ block, the first elements of the right $L_0$ blocks in the current $L_1$ block, or the first elements of the right $L_1$ blocks.

Proof. Prior to any iteration of Step 1, the minimum element of the unsorted list is one of the unsorted elements of the current $L_1$ block or of the right $L_1$ blocks. However, the first position of the current $L_1$ block may already have been inspected and added to the sorted list, so it should not be considered in the search for the minimum of the unsorted list. Nevertheless, each of the right $L_0$ blocks in the current $L_1$ block contains the minimum of that block in its first position. Therefore, these first positions and the not yet sorted elements of the current $L_0$ block must between them hold the minimum of the unsorted elements of the current $L_1$ block. Since each right $L_1$ block also holds its minimum in its first position, comparing this minimum with the first elements of the right $L_1$ blocks yields the overall minimum of the unsorted list.

It follows, therefore, that when a swap is made between the minimum element of the unsorted list and the current item, the current position will contain the minimum of the unsorted list, and the swapped item may end up in:

1. the current $L_0$ block, or
2. the first position of one of the right $L_0$ blocks within the current $L_1$ block, or
3. the first position of one of the right $L_1$ blocks.

In the first case, there is no violation of our restriction that the first element of a block hold the minimum of that block: the current position now contains the minimum of the unsorted list, and the rest of the current $L_0$ block is scanned in full in every iteration anyway.

In the second and third cases, the rule that the first position of a block contains the minimum of that block is violated, and this needs to be dealt with. In the second case, all that needs to be done is to find the minimum of the affected $L_0$ block and swap it with the item in its first position, after which the min rule holds for the entire array.

Similarly, in the third case we find the minimum of the $L_1$ block to which the swapped item was moved, by scanning all the elements of its first $L_0$ block together with the first elements of its remaining $L_0$ blocks, and swap it into the first position of that $L_1$ block. If that minimum came from one of the other $L_0$ blocks, that $L_0$ block is then made rule compliant as in the second case. Again the min rule is maintained after this operation, and this is exactly what Steps 9 to 23 of the algorithm perform.

Therefore, we have:

Proposition 3. At the end of each iteration, and prior to the start of a new iteration, the current position contains the minimum of the unsorted list and the min rule is maintained.

Lemma 1. The algorithm correctly sorts the entire list.

Proof. Prior to the start of the main loop of the algorithm, a scan is made over the entire array to make sure that the min rule holds. The algorithm then iterates over all elements of the array and, by Proposition 3, each time finds the minimum of the unsorted list, places it in the current position, and makes sure that the min rule holds prior to the start of the next iteration.
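To test Proposition 3 empirically, one could check its invariant at the start of each iteration. The hypothetical helper below (0-based, with $L_0$, $L_1$, $P_0$, $P_1$ as in the pseudocode) returns whether the sorted prefix is correct and every full block that starts at or after position $i$ is rule compliant. It is a checking aid under these assumptions, not part of the algorithm.

```python
def invariant_holds(a, i, L0, L1, P0, P1):
    """Check the Proposition 3 invariant before 0-based iteration i.

    Hypothetical helper: a[:i] is sorted, nothing in a[:i] exceeds anything
    in a[i:], and every full L0 or L1 block starting at or after i holds
    its minimum in its first position.
    """
    n = len(a)
    # The sorted prefix must be non-decreasing.
    if any(a[k] > a[k + 1] for k in range(i - 1)):
        return False
    # Its last element must not exceed anything still unsorted.
    if 0 < i < n and a[i - 1] > min(a[i:]):
        return False
    # Block rule for every full block that has not been entered yet.
    for size, first in ((L0, P0), (L1, P1)):
        for s in range(first, n, size):
            if s >= i and a[s] != min(a[s:s + size]):
                return False
    return True


# n = 8 gives L0 = 2, L1 = 4 and no partial blocks (P0 = P1 = 0).
print(invariant_holds([1, 2, 3, 5, 4, 8, 6, 7], 3, 2, 4, 0, 0))  # True
print(invariant_holds([1, 2, 3, 5, 8, 4, 6, 7], 3, 2, 4, 0, 0))  # False
```

In the second call the $L_0$ block at positions 4 and 5 holds 8 before 4, so it is not rule compliant.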

Time Complexity Analysis of algorithm.

In Step 0, prior to the start of the main loop of the algorithm, a scan is made over the array to make it block rule compliant. The scan is performed in $O(n)$ time.

Step 1 is repeated $n$ times, and during each pass:

Steps 2 to 4 take $O(1)$, or constant, time.

Step 5 is repeated for the remainder of the current $L_0$ block and thus performs $O(|L_0|)$ operations at most.

Step 6 is repeated at most $O(|L_0|)$ times, since there are at most that many $L_0$ blocks in any $L_1$ block.

Step 7 is repeated at most $O(|L_0|)$ times, since the number of $L_1$ blocks in the array is $O(|L_0|)$.

Steps 8 to 10 require $O(1)$ time.

Step 11 can be performed in at most $O(|L_0|)$ time, since the loop makes that many iterations.

Steps 12 to 15 require $O(1)$ time.

Step 16 can be performed in at most $O(|L_0|)$ time, since the loop makes that many iterations.

Step 17 is repeated at most $O(|L_0|)$ times, since there are at most that many $L_0$ blocks in any $L_1$ block.

Steps 18 to 21 require $O(1)$ time.

Step 22 can be performed in at most $O(|L_0|)$ time, since the loop makes that many iterations.

Step 23 requires $O(1)$ time.

It follows, therefore, that the body of the main loop performs $O(|L_0|)$ operations at most, from which it follows that the improved selection sort algorithm runs in $O(n|L_0|) = O(n \cdot n^{1/3})$ time in the worst case.
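Summing the per-iteration bound over the $n$ passes of Step 1 and adding Step 0 makes the total explicit; $c_0$ and $c_1$ are unspecified constants introduced only for this sketch:

$$T(n) \le c_0\, n + \sum_{i=1}^{n} c_1 |L_0| = c_0\, n + c_1\, n \lfloor n^{1/3} \rfloor = O(n^{4/3}), \qquad \frac{n^2}{n^{4/3}} = n^{2/3}.$$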

Theorem 1. The ISS2 algorithm runs in $O(n \cdot n^{1/3}) = O(n^{4/3})$ time.

4. CONCLUSIONS

In this paper we presented a selection sort variant with $O(n \cdot n^{1/3})$ worst case running time, which is an improvement by a factor of $O(n^{2/3})$ over the classical selection sort technique. This substantial improvement in the asymptotic performance of selection sort was obtained by restricting the search for the minimum item in each pass of the sort to a small subset of the unsorted list. Thus we avoid making unnecessary comparisons with most of the elements in the unsorted list.

REFERENCES

[1] Jehad Alnihoud and Rami Mansi, "An Enhancement of Major Sorting Algorithms", The International Arab Journal of Information Technology, Vol. 7, No. 1, January 2010, pp. 55-62.

[2] Md. Khairullah, "Enhancing Worst Sorting Algorithms", International Journal of Advanced Science and Technology, Vol. 56, July 2013, pp. 13-26.

[3] Muhammad Farooq Umar, Ehsan Ullah Munir, Shafqat Ali Shad and Muhammad Wasif Nisar, "Enhancement of Selection, Bubble and Insertion Sorting Algorithm", Research Journal of Applied Sciences, Engineering and Technology, 8(2): 263-271, July 2014. ISSN: 2040-7459; e-ISSN: 2040-7467.

[4] Ankit R. Chadha, Rishikesh Misal, Tanaya Mokashi and Aman Chadha, "ARC Sort: Enhanced and Time Efficient Sorting Algorithm", International Journal of Applied Information Systems (IJAIS), Vol. 7, No. 2, April 2014, Foundation of Computer Science FCS, New York, USA. ISSN: 2249-0868.

[5] Jyoti Dua, "Enhanced Bidirectional Selection Sort", International Journal of Computer, Electrical, Automation, Control and Information Engineering, Vol. 8, No. 7, 2014.

[6] Md. Khairullah, "An Enhanced Selection Sort Algorithm", SUST Journal of Science and Technology, Vol. 21, No. 1, 2014, pp. 9-15.

[7] Surender Lakra and Divya, "Improving the performance of selection sort using a modified double-ended selection sorting", International Journal of Application or Innovation in Engineering & Management (IJAIEM), Vol. 2, Issue 5, May 2013. ISSN: 2319-4847.

[8] P. Sumathi and V. V. Karthikeyan, "A New Approach for Selection Sorting", International Journal of Advanced Research in Computer Engineering & Technology (IJARCET), Vol. 2, Issue 10, October 2013.

[9] Mirza Abdulla, "Marrying Inefficient Sorting Techniques Can Give Birth to a Substantially More Efficient Algorithm", International Journal of Computer Science and Mobile Applications, Vol. 3, Issue 12, December 2015, pp. 15-21.

[10] Mirza Abdulla, "Optimal Time and Space Sorting Algorithm on Hierarchical Memory with Block Transfer", International Journal of Electrical & Computer Sciences IJECS-IJENS, Vol. 16, No. 01, February 2016, pp. 10-13.

[11] Mirza Abdulla, "Selection Sort with improved asymptotic time bounds", The International Journal of Engineering and Science (THE IJES), to appear, May 2016.

[12] D. Knuth, "The Art of Computer Programming, Vol. 3: Sorting and Searching", 2nd edition, Addison-Wesley, 1998.


[13] J. L. Bentley and R. Sedgewick, "Fast Algorithms for Sorting and Searching Strings", ACM-SIAM SODA '97, 1997, pp. 360-369.

[14] T. H. Cormen, C. E. Leiserson, R. L. Rivest and C. Stein, "Introduction to Algorithms", 2nd edition, MIT Press, Cambridge, MA, 2001.

[15] I. Flores, "Analysis of Internal Computer Sorting", J. ACM 8, January 1961, pp. 41-80.


[16] V. Estivill-Castro and D. Wood, "A Survey of Adaptive Sorting Algorithms", Computing Surveys, 24, 1992, pp. 441-476.

[17] "Cocktail Sort Algorithm or Shaker Sort Algorithm", http://www.codingunit.com/cocktail-sort-algorithm-orshaker-sortalgorithm.
