Lev Manovich, The Language of New Media. Copyright MIT Press.

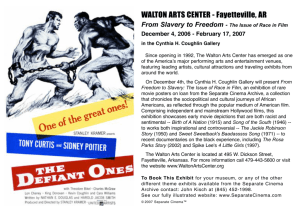
