Spoken Knowledge Organization by Semantic

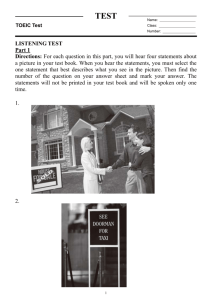
