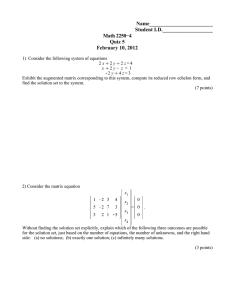

Matrices III

advertisement

FACULTY OF MATHEMATICAL STUDIES

MATHEMATICS FOR PART I ENGINEERING

Lectures

MODULE 18

MATRICES III

1. Rank of a matrix

2. General systems of linear equations

3. Eigenvalues and eigenvectors

1. Rank of a matrix

Sets of n simultaneous linear equations in n unknowns (in the form AX = b) were considered in module

17. When the determinant of A is non-zero (i.e. |A| =

6 0) it proved easy to obtain solutions. However,

when |A| = 0 it is much harder, and to clarify the position it is helpful to introduce the concept of the

rank of a matrix.

Consider the system AX = b . To solve this system using the elimination method discussed in module 17

you reduce the matrix A to upper triangular form with non-zero elements along the principal diagonal.

This procedure is possible when |A| =

6 0 . However, the rearrangement fails when |A| = 0 , since in this

case the matrix can only be reduced to echelon form. An example of a matrix in echelon form is shown

below, with the dots denoting any non-zero quantities whereas the letters denote quantities which can be

zero or non-zero:

· a b c d

0 0 · e f

.

0 0 0 0 ·

0 0 0 0 0

Observe that in this echelon form the first non-zero elements in each row do not all lie on the principal

diagonal, as they do when the matrix is in upper triangular form.

Def.

The number of non-zero rows in the echelon form of a matrix A equals the rank of A .

In the above example the rank of the matrix is 3. It is important to point out that rank is defined for

matrices of all orders, not just square matrices.

The rank of a matrix gives the number of essentially different rows in the matrix (i.e. rows which cannot

be written as linear combinations of some or all of the other rows). Understanding this point is important

in understanding the different types of solution that are possible for general sets of equations of the form

AX = b .

[The usual mathematical definition of rank is that it is the number of rows in the largest square sub-matrix

with non-zero determinant. A square sub-matrix is found from A by deleting a number of rows and columns

to obtain a square matrix. It is possible to show that the two definitions of rank are identical. Calculating

the rank using sub-matrices can be tricky when the matrix is large, and in practice it is usually better to

adopt the echelon approach.]

For a system AX = b , define the augmented matrix (A : b) as the matrix A with the column vector

b added to it as an extra column. With this definition we can state the following result.

Result

If rank A 6= rank (A : b) then AX = b has NO solution (equations are inconsistent);

if rank A = rank (A : b) then AX = b has a solution (equations are consistent) and this solution has s

free parameters (where s = number of unknowns − rank A).

1

Ex 1.

1

0

Find the rank of the matrix A = 1

2

2

0

1

1

3

2

−1

1 −1

1 −1

1

0

0

0 .

1 −1

1

0

0

0

We now use the elimination process to reduce the matrix A to echelon form, by considering each column

in turn. Leaving the first row unaltered we first ensure the remaining non-zero elements in the first column

become zero; then leaving the first two rows unaltered we make zero any non-zero elements in the bottom

part of the second column, continuing this process column by column until the matrix is in echelon form.

With this procedure

A

→

1

0

0

0

0

→

1

0

0

0

0

0

1

1

3

2

−1

1

1

3

2

1 −1

−1

1

−1

1,

−3

3

−2

2

row 3 − row 1

row 4 − 2 × row 1

row 5 − 2 × row 1

0

1

0

0

0

−1

1 −1

1 −1

1

0

0

0,

0

0

0

0

0

0

row 3 − row 2

row 4 − 3 × row 2

row 5 − 2 × row 2

The matrix is now in echelon form and the rank is the number of non-zero rows. Hence, it follows that

rank A = 2.

2. General systems of equations

In real situations the systems will contain a large number of equations, but to illustrate the ideas let us

consider very small systems.

(a) Under-specified sets of equations (number of equations less than number of unknowns)

x

1

1 1 1

.

y =

Case (i)

Solve

2

1 2 3

z

This set is under-specified because when the matrices are multiplied out we have two equations in three

unknowns. Forming the augmented matrix, and then reducing it to echelon form gives

1 1

1 2

1 1

3 2

→

1 1

0 1

1

2

1

1

,

row 2 − row 1 .

The rank of the augmented matrix, the number of non-zero rows in the full reduced matrix above, is clearly

2. Ignoring the final column gives the matrix A and the number of non-zero rows in the reduced form of

this is again 2. Since the ranks are equal, the set of equations is consistent.

The reduced set of equations can be read off from the reduced form of the augmented matrix, the first three

columns giving the coefficients of the unknowns on the LHS, whereas the final column provides the new

column vector on the RHS. Thus the reduced system is

x + y + z = 1,

y + 2z = 1.

2

Put z = C, a constant, then the second equation implies y = 1 − 2C . Substituting these quantities into

the first of the reduced equations then yields x = 1 − y − z = 1 − (1 − 2C) − C = C . Thus the solution is

x

C

y = 1 − 2C ,

z

C

for any constant C .

[Note that in the above example the number of unknowns in the original set of equations = 3, rank A = 2

and so the general result stated earlier implies there is one free parameter, namely the constant C in the

above solution.]

x

1 1 1

1

Case (ii)

y =

.

Solve

2 2 2

1

z

Here again there are two equations in three unknowns. Reducing the augmented matrix gives

1 1

2 2

1

2

1

1

→

1

0

1 1

0 0

1

−1

,

row 2 − 2 × row 1 .

In this case the rank of the augmented matrix is 2, but the rank of the matrix A is 1. These values are

not the same and so the system does NOT have a solution – the set of equations is inconsistent (which is

obvious, of course, from the original equations).

(b) Over-specified sets of equations (more equations than unknowns)

−1

1 1 x

= 0 .

Solve 1 2

Case (iii)

y

1

1 3

The system has three equations in two unknowns. Reduction of the augmented matrix leads to

1 1

1 2

1 3

−1

0

1

→

→

1

0

0

1

0

0

1 −1

1

1 ,

2

2

1 −1

1

1 ,

0

0

row 2 - row 1

row 3 - row 1

row 3 − 2 × row 2 .

Hence rank A = 2 and rank (A : b) = 2 , the same, and so the equations are consistent. The reduced

system is

x + y = −1,

y = 1,

0=

0.

Hence, it follows that y = 1 and x = −1 − y = −1 − 1 = −2 , and the solution is

−2

x

.

=

1

y

[Number of original unknowns =2, rank A = 2 , so there are 2 − 2 = 0 free parameters in the above solution.]

In all cases the rank of the augmented matrix gives the number of independent equations in the given system,

i.e. equations which cannot be obtained as combinations of other equations in the system.

3

0

1 1 x

= 1 .

Case (iv)

Solve 1 2

y

−2

1 3

Again there are three equations in two unknowns.

to

1

1 1

0

1 2

1 → 0

0

1 3 −2

1

→ 0

0

In this case manipulation of the augmented matrix leads

1

0

row 2 − row 1

1

1 ,

row 3 − row 1

2 −2

1

0

1

1 ,

row 3 − 2 × row 2 .

0 −4

Thus rank A = 2 and rank (A : b) = 3 , which are different. The equations are inconsistent with no

solution.

For the above four systems of equations the types of solution are easily spotted. However, the situation is

much less obvious if you consider larger systems (say 10 equations in 9 unknowns). The procedure discussed

above provides a framework for determining the appropriate solution for systems of all sizes.

3. Eigenvalues and eigenvectors

The theory of eigenvalues is of importance in many physical problems, e.g. considering the stability of

numerical methods for solving differential equations and determining the normal modes of oscillation of

vibrating systems.

(a) 2 × 2 matrix

Let us illustrate the method with a 2 × 2 matrix A. Suppose

x1

a11 a12

,

X=

,

A=

a21 a22

x2

then consider the eigenvalue equation

AX = λX,

(1)

where λ is a scalar (a number). Equation (1) can be written

AX − λX = 0,

→

AX − λIX = (A − λI)X = 0,

(2)

where I is the unit 2 × 2 matrix. Written in full (2) is a set of two simultaneous linear equations in two

unknowns:

a12 x2 = 0,

(a11 − λ) x1 +

(3)

a21 x1 + (a22 − λ) x2 = 0.

Obviously the two equations have the trivial solution x1 = x2 = 0 (i.e. X = 0) but this solution is usually

of no physical interest. However, for particular values of λ non-zero solutions for x1 and x2 may exist and

these often do have physical significance. Equations (3) can be written BX = 0, where B = A−λI, and you

know from module 17 that for this set of equations to have a non-zero solution it is necessary that |B| = 0,

i.e. |A − λI| = 0.

For the 2 × 2 matrix A , condition (4) becomes

a11 − λ

a21

a12 = 0,

a22 − λ i.e. (a11 − λ)(a22 − λ) − a12 a21 = 0,

4

(4)

→

λ2 − (a11 + a22 )λ + a11 a22 − a12 a21 = 0.

(5)

This is a quadratic equation in λ, known as the characteristic equation of A, and the left-hand side

is called the characteristic polynomial of A. The two solutions of equation (5), λ1 , λ2 say (normally

distinct), are called the eigenvalues of A. (In some texts the word eigenvalue is replaced by characteristic

value or latent root). When the solution λ = λ1 is substituted back into the system (3), the resulting

equations can be solved to produce non-zero solutions for x1 and x2 , called “the eigenvector corresponding

to the eigenvalue λ1 ”. In a similar way you obtain the eigenvector corresponding to the eigenvalue λ2 .

Let us now illustrate the above procedure for a particular 2 × 2 matrix.

1 2

.

Find the eigenvalues and eigenvectors of the matrix A =

Ex 2.

0 3

We need to solve the matrix equation AX =λX, or (A − λI)X = 0. Non-zero solutions for λ exist only if

|A − λI| = 0,

1− λ

2 = 0,

i.e. 0

3 − λ

(1 − λ)(3 − λ) − 0(2) = 0,

(1 − λ)(3 − λ) = 0,

yielding λ = 1, 3

(these are the eigenvalues).

We now calculate in turn the eigenvector corresponding to each eigenvalue. For λ = 1 it is necessary to solve

(A−1I)X = 0,

x1

1−1

2

0

,

=

0

3−1

0

x2

0 2

x1

0

=

,

0 2

x2

0

which gives the two equations 2x2 = 0 and 2x2 = 0. These are obviously identical and both are satisfied

when x2 = 0. There

restriction

on x1 , however, which can have any value, = C say. Hence the

isno 1

C

x1

=C

=

, for any constant C.

eigenvector is

0

0

x2

For the other eigenvalue λ = 3, a similar procedure is adopted. The system (A−3 I)X = 0 corresponds to

1−3

2

x1

0

=

,

x2

0

3−3

0

x1

−2 2

0

,

=

0 0

0

x2

leading to −2x1 + 2x2 = 0,

0. Here

choosex2= D, an arbitrary constant, then it follows that x1 = D.

0=

D

1

x1

=

=D

, for any constant D.

Hence the eigenvector is

x2

D

1

Two comments should be made about the above solution. Firstly, eigenvectors always contain at least one

free parameter, similar to C and D in the above example. If your answer does not possess this feature you

have made a mistake.

Secondly, you can always check that the answers satisfy the eigenvalue equation, AX = λX. In the above

example, for instance, the eigenvector corresponding to the eigenvalue 3 satisfies

1 2

D

D + 2D

3D

D

AX =

=

=

=3

= 3X = λX.

0 3

D

0 + 3D

3D

D

Similarly, for the other eigenvector you obtain

C

C

C+0

C

1 2

.

=1

=

=

0

0

0+0

0

0 3

5

(b) n × n matrix The theory presented above for a 2 × 2 matrix can easily be extended to a general n × n

matrix. Let A be an n × n matrix and X be an n × 1 column matrix defined by

a1n

a2n

..

.

a11

a21

A=

...

a12

a22

..

.

...

...

..

.

an1

an2

. . . ann

and

x1

x2

X=

... .

xn

Then the equation AX = λX, where λ is a number, becomes (A − λI) X = 0, where I is now the unit

n × n matrix. Written out in full we have a set of n simultaneous homogeneous equations in the n variables

x1 , x2 , . . . , xn :

a12 x2 + . . . +

a1n xn = 0

(a11 − λ)x1 +

a2n xn = 0

a21 x1 + (a22 − λ)x2 + . . . +

(6)

..

..

..

..

.

.

.

.

an1 x1

+

an2 x2

+

...

+

(ann − λ)xn

=

0

The above system always has the trivial solution x1 = x2 = . . . = 0 (i.e. X = 0) but non-zero solutions can

exist for values of λ which satisfy:

a12

...

a1n a11 − λ

a22 − λ . . .

a2n a21

= 0.

|A − λI| = ..

..

..

..

.

.

.

.

an1

an2

. . . ann − λ

(7)

Condition (7) again leads to the characteristic equation of A, which is now a polynomial equation of

degree n in λ. The n roots λi (i = 1, 2 . . . n) of (7) (which are not necessarily all distinct) are called the

eigenvalues of A. If a particular eigenvalue λi is substituted back into (6) the resulting equations can be

solved for x1 , x2 , . . . , xn to give the eigenvector (always non-zero) corresponding to the eigenvalue λi .

Let us work through an example for a 3 × 3 matrix.

0

2

3

Ex 3. Find the eigenvalues and eigenvectors of the matrix A = 1 −2 −1 .

2

1

1

Need to solve |A − λI| = 0 ,

i.e.

−λ

2

3 1 −2 − λ

−1 = 0 .

2

1

1 − λ

We know the characteristic equation here will be cubic (since A is a 3 × 3 matrix) and there are two ways

to proceed. The determinant can be expanded directly but in order to find the eigenvalues it will then

be necessary to determine the roots of the third order polynomial. This is usually not too difficult, but it

usually involves spotting factors and can be tricky. The alternative approach is to manipulate the elements

in the determinant before the evaluation in order to simplify the calculations and pull out common factors

(involving λ ). Both methods will be given below.

Direct way Expanding the determinant by the first row

−λ {(−2 − λ)(1 − λ) − (−1)1} − 2 {1(1 − λ) − (−1)2} + 3 {1(1) − (−2 − λ)2} = 0,

−λ{λ2 + λ − 2 + 1} − 2{1 − λ + 2} + 3{1 + 4 + 2λ} = 0,

−λ(λ2 + λ − 1) − 2(3 − λ) + 3(2λ + 5) = 0,

i.e.

− λ3 − λ2 + λ − 6 + 2λ + 6λ + 15 = 0,

λ3 + λ2 − 9λ − 9 = 0.

6

This cubic must now be factorised, so it is usually necessary to spot a value of λ which satisfies the equation,

say λ = a , then use the remainder theorem which states that λ − a is then a factor of the cubic. Here you

could spot that λ = −1 satisfies the cubic, but it is easier to observe that the cubic can be rearranged to

display a common factor:

λ3 + λ2 − 9λ − 9 = λ2 (λ + 1) − 9(λ + 1) = (λ + 1)(λ2 − 9)

therefore

(λ + 1)(λ2 − 9) = 0

(λ + 1)(λ − 3)(λ + 3) = 0

i.e.

λ = −1, 3, −3 (three eigenvalues)

Alternative method We spot that adding together the first and third columns of |A − λI| produces a zero

element and two others with a common factor dependent on λ, so

3−λ

2

3 col.1 + col.3

−2 − λ

−1 ,

|A − λI| = 0

3−λ

1

1 − λ

0

1

1

2

3 −1 = (3 − λ) 0 −2 − λ

= (3 − λ) 0 −2 − λ

1

1

1

1

1 − λ

2 + λ −1 , row 1-row 3.

1 − λ

Notice that we have carefully recorded the row and column operations we have carried out–very useful if you

need to check your working subsequently!

Only one element now remains in the first column and a common factor has already been extracted, hence

|A − λI| = (3 − λ)(−1)3+1 1(−1) − (2 + λ)(−2 − λ) = (3 − λ) − 1 + (2 + λ)2

= (3 − λ)(−1 + 4 + 4λ + λ2 ) = (3 − λ)(3 + 4λ + λ2 )

= (3 − λ)(1 + λ)(3 + λ) ,

and the same eigenvalues are derived as using the direct method.

Whichever way you have found the eigenvalues, the eigenvectors must be calculated by solving the matrix

equation (A − λI)X = 0.

λ = −3 Need to solve (A − (−3)I)X = 0, which can be written (A + 3I)X = 0. The set of equations to

be solved, found by adding 3 to the diagonal elements, is

3x1 + 2x2 + 3x3 = 0,

x1 + x2 − x3 = 0,

2x1 + x2 + 4x3 = 0.

It is convenient to interchange the first and second equations giving

x1 + x2 − x3 = 0,

3x1 + 2x2 + 3x3 = 0,

2x1 + x2 + 4x3 = 0.

Then using (eqn.2 – 3 × eqn.1) and (eqn.3 – 2 × eqn.1) the system becomes

x1 + x2 − x3 = 0,

− x2 + 6x3 = 0,

− x2 + 6x3 = 0.

7

Clearly the second and third equations are identical, so the last one can be ignored. If we choose x3 = C,

where C is any non-zero constant, then the second equation implies x2 = 6C and, after substitution into the

first equation, x1 = −5C. Hence the eigenvector corresponding to λ = −3 is

−5C

x1

x2 = 6C .

C

x3

λ = −1

Solve (A − (−1)I)X = 0, or (A + I)X = 0, equivalent to

x1 + 2x2 + 3x3 = 0,

x1 − x2 − x3 = 0,

2x1 + x2 + 2x3 = 0.

Subtract the first equation from the second equation, and 2× eqn.1 from the third equation, to give

x1 + 2x2 + 3x3 = 0,

− 3x2 − 4x3 = 0,

− 3x2 − 4x3 = 0.

Again the last two equations are identical. On putting x3 = D we find that x2 = − 34 D, and then x1 = − 13 D,

so that the eigenvector corresponding to λ = −1 is

x1

− D/3

x2 = −4D/3 ,

x3

D

for any D.

λ = 3 In this case we must solve (A−3I)X = 0. For the two previous eigenvalues we found the eigenvectors

by manipulating equations, but we could easily work with a matrix representation. We shall illustrate the

matrix method for this third eigenvalue. Thus, we need to find the solution of

0

−3

2

3

x1

1 −5 −1 x2 = 0 ,

x3

0

2

1 −2

and interchanging the first and second rows (equivalent to interchanging the first and second equations) gives

0

1 −5 −1

x1

−3

2

3 x2 = 0 .

x3

0

2

1 −2

Simplifying using the elimination method, by calculating (row 2 + 3 × row 1) and (row 3 - 2 × row 1), leads

to

0

1 −5 −1

x1

0 −13

0 x2 = 0 .

x3

0

0

11

0

This is equivalent to the reduced system

x1 − 5x2 − x3 = 0

− 13x2

+ 11x2

8

=0

= 0.

The last pair of equations imply x2 = 0, and hence using the first equation x1 = x3 . Choosing x3 = E we

obtain

E

x1

x2 = 0 , for any E .

E

x3

It is important to note that there is more than one way in which to write the eigenvectors. For instance,

in finding the eigenvector corresponding to λ = −3 in the above Example we could have chosen x2 = F ,

in which case it follows that x3 = F/6 and hence x1 = −5F/6. In this case it is easily seen that the new

solution is identical to the previous one after a redefinition of the arbitrary constant (F = 6C).

Concluding comments Before finishing the module two further points are briefly mentioned.

(i) It can be shown that if A is symmetric, a situation which occurs in many applications, then the

eigenvalues are real and the eigenvectors corresponding to different eigenvalues are perpendicular.

(ii) In all the cases considered in this module the roots of the characteristic equation are real and distinct.

Clearly this is not always the case. When some, or all, of the roots are equal then the calculation of the

eigenvectors is more complicated. This more difficult case is not considered in this module.

rec/00lm3

9