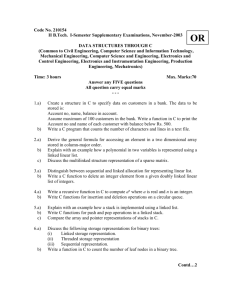

Data Structure - SS Publications

SYLLABUS

ADVANCED CONCEPTS OF C AND INTRODUCTION TO DATA STRUCTURES

DATA TYPES, ARRAYS, POINTERS, RELATION BETWEEN POINTERS AND ARRAYS, SCOPE

RULES AND STORAGE CLASSES, DYNAMIC ALLOCATION AND DE-ALLOCATION OF

MEMORY,DANGLING POINTER PROBLEM, STRUCTURES, ENUMERATED CONSTANTS ,

UNIONS

COMPLEXITY OF ALGORITHMS

PROGRAM ANALYSIS, PERFORMANCE ISSUES, GROWTH OF FUNCTIONS, ASYMPTOTIC

NOTATIONS, TIME-SPACE TRADE OFFS, SPACE USAGE, SIMPLICITY, OPTIMALITY

INTRODUCTION TO DATA AND FILE STRUCTURE

INTRODUCTION, PRIMITIVE AND SIMPLE STRUCTURES, LINEAR AND NONLINEAR

STRUCTURES, FILE ORGANIZATIONS

ARRAYS

SEQUENTIAL ALLOCATION, MULTIDIMENSIONAL ARRAYS , ADDRESS CALCULATIONS ,

GENERAL MULTIDIMENSIONAL ARRAYS , SPARSE ARRAYS

STRINGS

INTRODUCTION , STRING FUNCTIONS , STRING LENGTH , STRING COPY, STRING COMPARE ,

STRING CONCATENATION

ELEMENTARY DATA STRUCTURES

STACK , OPERATIONS ON STACK, IMPLEMENTATION OF STACKS, RECURSION AND STACKS,

EVALUATION OF EXPRESSIONS USING STACKS, QUEUE, ARRAY IMPLEMENTATION OF

QUEUES, CIRCULAR QUEUE , DEQUES , PRIORITY QUEUES

LINKED LISTS

SINGLY LINKED LISTS, IMPLEMENTATION OF LINKED LIST, CONCATENATION OF LINKED

LISTS , MERGING OF LINKED LISTS, REVERSING OF LINKED LIST, DOUBLY LINKED LIST,

IMPLEMENTATION OF DOUBLY LINKED LIST, CIRCULAR LINKED LIST, APPLICATIONS OF THE

LINKED LISTS

GRAPHS

ADJACENCY MATRIX AND ADJACENCY LISTS , GRAPH TRAVERSAL, IMPLEMENTATION,

SHORTEST PATH PROBLEM , MINIMAL SPANNING TREE,

OTHER TASKS

TREES

INTRODUCTION, PROPERTIES OF A TREE , BINARY TREES, IMPLEMENTATION, TRAVERSALS

OF A BINARY TREE, BINARY SEARCH TREES (BST), INSERTION IN BST , DELETION OF A

NODE, SEARCH FOR A KEY IN BST, HEIGHT BALANCED TREE, B-TREE, INSERTION,

DELETION

FILE ORGANIZATION

INTRODUCTION, TERMINOLOGY , FILE ORGANISATION, SEQUENTIAL

FILES, DIRECT FILE ORGANIZATION , DIVISION-REMAINDER HASHING, INDEXED

SEQUENTIAL FILE ORGANIZATION

SEARCHING

INTRODUCTION, SEARCHING TECHNIQUES, SEQUENTIAL SEARCH, BINARY SEARCH,

HASHING, HASH FUNCTIONS, COLLISION RESOLUTION

SORTING

INTRODUCTION, INSERTION SORT, BUBBLE SORT, SELECTION SORT, RADIX SORT, QUICK

SORT, 2-WAY MERGE SORT, HEAP SORT, HEAPSORT VS. QUICKSORT

S S PUBLICATIONS

D.NO: 10-13-36, sistla vari street, Repalle-522265, Guntur (Dt), A.P, INDIA

Email: mdsspublications@gmail.com , Web-site: www.sspublications.co.in

UNIT 1 ADVANCED CONCEPTS OF C AND INTRODUCTION TO DATA STRUCTURES

1.1 INTRODUCTION

1.2 DATA TYPES

1.3 ARRAYS

1.3.1 HANDLING ARRAYS

1.3.2 INITIALIZING THE ARRAYS

1.4 MULTIDIMENSIONAL ARRAYS

1.4.1 INITIALIZATION OF TWO DIMENSIONAL ARRAY

1.5 POINTERS

1.5.1 ADVANTAGES AND DISADVANTAGES OF POINTERS

1.5.2 DECLARING AND INITIALIZING POINTERS

1.5.3 POINTER ARITHMETIC

1.6 ARRAY OF POINTERS

1.7 PASSING PARAMETERS TO THE FUNCTIONS

1.8 RELATION BETWEEN POINTERS AND ARRAYS

1.9 SCOPE RULES AND STORAGE CLASSES

1.9.1 AUTOMATIC VARIABLES

1.9.2 STATIC VARIABLES

1.9.3 EXTERNAL VARIABLES

1.9.4 REGISTER VARIABLE

1.10 DYNAMIC ALLOCATION AND DE-ALLOCATION OF MEMORY

1.10.1 FUNCTION MALLOC(SIZE)

1.10.2 FUNCTION CALLOC(N,SIZE)

1.10.3 FUNCTION FREE(BLOCK)

1.11 DANGLING POINTER PROBLEM.

1.12 STRUCTURES.

1.13 ENUMERATED CONSTANTS

1.14 UNIONS

UNIT 2

COMPLEXITY OF ALGORITHMS

2.1. PROGRAM ANALYSIS

2.2. PERFORMANCE ISSUES

2.3. GROWTH OF FUNCTIONS

2.4. ASYMPTOTIC NOTATIONS

2.4.1. BIG-O NOTATION (O)

2.4.2. BIG-OMEGA NOTATION (Ω)

2.4.3. BIG-THETA NOTATION (Θ)

2.5. TIME-SPACE TRADE OFFS

2.6. SPACE USAGE

2.7. SIMPLICITY

2.8. OPTIMALITY

UNIT 3 INTRODUCTION TO DATA AND FILE STRUCTURE

3.1 INTRODUCTION

3.2 PRIMITIVE AND SIMPLE STRUCTURES

3.3 LINEAR AND NONLINEAR STRUCTURES

3.4 FILE ORGANIZATIONS

UNIT 4 ARRAYS

4.1 INTRODUCTION

4.1.1. SEQUENTIAL ALLOCATION

4.1.2. MULTIDIMENSIONAL ARRAYS

4.2. ADDRESS CALCULATIONS

4.3. GENERAL MULTIDIMENSIONAL ARRAYS

4.4. SPARSE ARRAYS

UNIT 5 STRINGS

5.1 INTRODUCTION

5.2 STRING FUNCTIONS

5.3 STRING LENGTH

5.3.1 USING ARRAY

5.3.2 USING POINTERS

5.4 STRING COPY

5.4.1 USING ARRAY

5.4.2 USING POINTERS

5.5 STRING COMPARE

5.5.1 USING ARRAY

5.6 STRING CONCATENATION

UNIT 6 ELEMENTARY DATA STRUCTURES

6.1 INTRODUCTION

6.2 STACK

6.2.1 DEFINITION

6.2.2 OPERATIONS ON STACK

6.2.3 IMPLEMENTATION OF STACKS USING ARRAYS

6.2.3.1 FUNCTION TO INSERT AN ELEMENT INTO THE STACK

6.2.3.2 FUNCTION TO DELETE AN ELEMENT FROM THE STACK

6.2.3.3 FUNCTION TO DISPLAY THE ITEMS

6.3 RECURSION AND STACKS

6.4 EVALUATION OF EXPRESSIONS USING STACKS

6.4.1 POSTFIX EXPRESSIONS

6.4.2 PREFIX EXPRESSION

6.5 QUEUE

6.5.1 INTRODUCTION

6.5.2 ARRAY IMPLEMENTATION OF QUEUES

6.5.2.1 FUNCTION TO INSERT AN ELEMENT INTO THE QUEUE

6.5.2.2 FUNCTION TO DELETE AN ELEMENT FROM THE QUEUE

6.6 CIRCULAR QUEUE

6.6.1 FUNCTION TO INSERT AN ELEMENT INTO THE QUEUE

6.6.2 FUNCTION FOR DELETION FROM CIRCULAR QUEUE

6.6.3 CIRCULAR QUEUE WITH ARRAY IMPLEMENTATION

6.7 DEQUES

6.8 PRIORITY QUEUES

UNIT 7 LINKED LISTS

7.1. INTRODUCTION

7.2. SINGLY LINKED LISTS.

7.2.1. IMPLEMENTATION OF LINKED LIST

7.2.1.1. INSERTION OF A NODE AT THE BEGINNING

7.2.1.2. INSERTION OF A NODE AT THE END

7.2.1.3. INSERTION OF A NODE AFTER A SPECIFIED NODE

7.2.1.4. TRAVERSING THE ENTIRE LINKED LIST

7.2.1.5. DELETION OF A NODE FROM LINKED LIST

7.3. CONCATENATION OF LINKED LISTS

7.4. MERGING OF LINKED LISTS

7.5. REVERSING OF LINKED LIST

7.6. DOUBLY LINKED LIST.

7.6.1. IMPLEMENTATION OF DOUBLY LINKED LIST

7.7. CIRCULAR LINKED LIST

7.8. APPLICATIONS OF THE LINKED LISTS

UNIT 8 GRAPHS

8.1 INTRODUCTION

8.2 ADJACENCY MATRIX AND ADJACENCY LISTS

8.3 GRAPH TRAVERSAL

8.3.1 DEPTH FIRST SEARCH (DFS)

8.3.1.1 IMPLEMENTATION

8.3.2 BREADTH FIRST SEARCH (BFS)

8.3.2.1 IMPLEMENTATION

8.4 SHORTEST PATH PROBLEM

8.5 MINIMAL SPANNING TREE

8.6 OTHER TASKS

UNIT 9 TREES

9.1. INTRODUCTION

9.1.1. OBJECTIVES

9.1.2. BASIC TERMINOLOGY

9.1.3. PROPERTIES OF A TREE

9.2. BINARY TREES

9.2.1. PROPERTIES OF BINARY TREES

9.2.2. IMPLEMENTATION

9.2.3. TRAVERSALS OF A BINARY TREE

9.2.3.1. IN ORDER TRAVERSAL

9.2.3.2. POST ORDER TRAVERSAL

9.2.3.3. PREORDER TRAVERSAL

9.3. BINARY SEARCH TREES (BST)

9.3.1. INSERTION IN BST

9.3.2. DELETION OF A NODE

9.3.3. SEARCH FOR A KEY IN BST

9.4. HEIGHT BALANCED TREE

9.5. B-TREE

9.5.1. INSERTION

9.5.2. DELETION

UNIT 10 FILE ORGANIZATION

10.1. INTRODUCTION

10.2. TERMINOLOGY

10.3. FILE ORGANISATION

10.3.1. SEQUENTIAL FILES

10.3.1.1. BASIC OPERATIONS

10.3.1.2. DISADVANTAGES

10.3.2. DIRECT FILE ORGANIZATION

10.3.2.1. DIVISION-REMAINDER HASHING

10.3.3. INDEXED SEQUENTIAL FILE ORGANIZATION

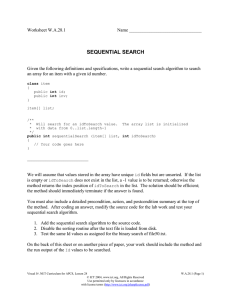

UNIT 11 SEARCHING

11.1. INTRODUCTION

11.2. SEARCHING TECHNIQUES

11.2.1. SEQUENTIAL SEARCH

11.2.1.1. ANALYSIS

11.2.2. BINARY SEARCH

11.2.2.1. ANALYSIS

11.3. HASHING

11.3.1. HASH FUNCTIONS

11.4. COLLISION RESOLUTION

UNIT 12 SORTING

12.1. INTRODUCTION

12.2. INSERTION SORT

12.2.1. ANALYSIS

12.3. BUBBLE SORT

12.3.1. ANALYSIS

12.4. SELECTION SORT

12.4.1. ANALYSIS

12.5. RADIX SORT

12.5.1. ANALYSIS

12.6. QUICK SORT

12.6.1. ANALYSIS

12.7. 2-WAY MERGE SORT

12.8. HEAP SORT

12.9. HEAPSORT VS. QUICKSORT

UNIT 1 ADVANCED CONCEPTS OF C AND INTRODUCTION TO DATA STRUCTURES

1.1. INTRODUCTION

This chapter familiarizes you with arrays, pointers, and dynamic memory allocation and de-allocation techniques. We also briefly discuss the types of data structures and algorithms. Let us start the discussion with data types.

1.2. DATA TYPES

Data given to a program must be stored and later referred back to. This is done with the help of variables. The memory a variable requires depends on the type it belongs to. The built-in types in C include int, float (real numbers), char, double, long and short.

We often come across many data items of the same type that are related. Giving them different variable names and remembering them later is a tedious process. It would be easier if we could give a single parent name to all the items of the same type. A particular value is then referred to using an index, which indicates whether the value is the first, second or tenth under that parent name.

An index can be used for reference because the values occupy successive memory locations. We simply remember one name (the starting address) and can then refer to any value using its index. Such a facility is known as an ARRAY.

1.3. ARRAYS

An array can be defined as a collection of sequential memory locations that are referred to by a single name together with a number, known as the index, to access a particular element.

When we declare an array, we have to specify its type as well as its size.

e.g.

When we want to store 10 integer values, we can use the following declaration.

int A[10];

This declares A to be an array containing 10 integer values in all. When A is allocated, 10 locations of sizeof(int) bytes each are reserved. On the 16-bit machine assumed in this text an integer occupies 2 bytes, so 20 bytes are allocated, and the name A refers to the starting address of this block. When we say A[0] we are referring to the first integer value in A.

|----------------- Array -----------------|

A[0] A[1] ... A[9]

fig(1). Array representation

Hence, to refer to the ith value in the array we write A[i-1]. When we declare an array of SIZE elements, where SIZE is a constant chosen by the programmer, the index ranges from 0 to SIZE-1.

Here it should be remembered that the size of such an array is 'always a constant' and not a variable. This is because a fixed amount of memory is allocated to the array before execution of the program. This method of allocating memory is known as 'static allocation' of memory.
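The storage arithmetic described above can be checked with a small sketch. The function names (array_bytes, array_length, ith_value) are hypothetical and only illustrate the idea; the byte count depends on sizeof(int) on the machine at hand, so it is 20 only where an int occupies 2 bytes.

```c
#include <stddef.h>

#define SIZE 10   /* array size fixed at compile time (illustrative value) */

/* Total bytes reserved for an int array of SIZE elements. */
size_t array_bytes(void)
{
    int A[SIZE];
    return sizeof A;               /* SIZE * sizeof(int) */
}

/* Number of elements, recovered from the array's own size. */
size_t array_length(void)
{
    int A[SIZE];
    return sizeof A / sizeof A[0]; /* always SIZE */
}

/* Value of the ith element (counting from 1), which lives at index i-1. */
int ith_value(const int *arr, int i)
{
    return arr[i - 1];
}
```

Calling ith_value(A, 1) reads A[0], which matches the rule that the ith value is stored at index i-1.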

1.3.1 HANDLING ARRAYS

Normally the following procedure is followed so that, by changing only one #define statement, we can run the program for arrays of different sizes.

#define SIZE 10

int a[SIZE], b[SIZE];

Now if we want this program to run for an array of 200 elements, we need to change just the #define statement.

1.3.2 INITIALIZING THE ARRAYS

One method of initializing the array members is by using a 'for' loop. The following for loop initializes 10 elements with the value of their index.

#define SIZE 10

int main(void)
{
    int arr[SIZE], i;

    for (i = 0; i < SIZE; i++)
    {
        arr[i] = i;    /* each element gets the value of its index */
    }
    return 0;
}

An array can also be initialized directly as follows.

int arr[3] = {0,1,2};

An explicitly initialized array need not specify its size, but if a size is specified, the number of elements provided must not exceed it. If the size is given and some elements are not explicitly initialized, they are set to zero.

e.g.

int arr[] = {0,1,2};
int arr1[5] = {0,1,2}; /* Initialized as {0,1,2,0,0} */
const char a_arr3[6] = "Daniel"; /* "Daniel" needs 7 elements: 6 letters and a \0. C++ rejects this as an error; C accepts it but stores no terminating \0, so a_arr3 is not a valid string. */

To copy one array to another, each element has to be copied individually, for example with a for loop.

Any expression that evaluates to an integral value can be used as an index into an array.

e.g.

arr[get_value()] = somevalue;
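The two rules above, zero-filling of missing initializers and element-by-element copying, can be sketched as follows. The helper names copy_ints and partial_init_value are assumptions made for illustration only.

```c
#include <stddef.h>

/* Copy n ints from src to dst, one element at a time. */
void copy_ints(int *dst, const int *src, size_t n)
{
    size_t i;
    for (i = 0; i < n; i++)
    {
        dst[i] = src[i];
    }
}

/* Return element idx of an array declared as int arr1[5] = {0,1,2}. */
int partial_init_value(size_t idx)
{
    int arr1[5] = {0, 1, 2};   /* elements 3 and 4 are set to zero */
    return arr1[idx];          /* caller must keep idx in 0..4 */
}
```

A plain assignment such as b = a does not compile for arrays, which is why a loop like the one in copy_ints is needed.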

1.4 MULTIDIMENSIONAL ARRAYS

An array whose elements are referred to by two indices is called a two-dimensional array or a "matrix"; if its elements are referred to using more than two indices, it is a multidimensional array.

e.g.

int arr[4][3];

This is a two-dimensional array with 4 as the row dimension and 3 as the column dimension.

1.4.1 INITIALIZATION OF TWO DIMENSIONAL ARRAY

Just like one-dimensional arrays, members of matrices can be initialized in two ways: using a 'for' loop and directly. Initialization using nested loops is shown below.

e.g.

int arr[10][10];

for (int i = 0; i < 10; i++)
{
    for (int j = 0; j < 10; j++)
    {
        arr[i][j] = i + j;
    }
}

Now let us see how members of matrices are initialized directly.

e.g.

int arr[4][3] = {{0,1,2},{3,4,5},{6,7,8},{9,10,11}};

The nested braces are optional.
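As a small sketch of why the inner braces can be left out: the initializer values fill the array row by row, so the value at position [i][j] of a 4 x 3 array is the (i * 3 + j)th value in the list. The names ROWS, COLS, flat_init_value and fill_sum are hypothetical.

```c
#include <stddef.h>

#define ROWS 4
#define COLS 3

/* Element [i][j] of a 4 x 3 array initialized WITHOUT nested braces.
   The values fill the array row by row. */
int flat_init_value(size_t i, size_t j)
{
    int arr[ROWS][COLS] = {0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11};
    return arr[i][j];              /* equals i * COLS + j */
}

/* Fill a ROWS x COLS array so that arr[i][j] = i + j. */
void fill_sum(int arr[ROWS][COLS])
{
    size_t i, j;
    for (i = 0; i < ROWS; i++)
        for (j = 0; j < COLS; j++)
            arr[i][j] = (int)(i + j);
}
```

Some compilers warn about the missing braces; the nested form is usually easier to read and is the safer habit.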

1.5 POINTERS

The computer memory is a collection of storage cells. These locations are numbered sequentially, and the numbers are called addresses. A pointer is the address of a memory location, and any variable that contains an address is a pointer variable. Pointer variables store the address of an object, allowing indirect manipulation of that object. They are used in the creation and management of objects that are created dynamically during program execution.

1.5.1 ADVANTAGES AND DISADVANTAGES OF POINTERS

Pointers are very effective when

- The data in one function has to be modified by another function, which is done by passing the address.
- Memory has to be allocated while running the program and released back if it is not required thereafter.
- Data has to be accessed quickly through direct addressing.

The main disadvantage of pointers is that, if they are not understood and used properly, they can introduce bugs into the program.

1.5.2 DECLARING AND INITIALIZING POINTERS

Pointers are declared using the (*) operator. The general format is:

data_type *ptrname;

where data_type can be any of the basic data types such as int, float etc., or any user-defined data type. ptrname becomes a pointer to that data type.

e.g.

int *iptr;
char *cptr;
float *fptr;

The pointer iptr stores the address of an integer. In other words, it points to an integer, cptr to a character and fptr to a float value.

Once the pointer variable is declared, it can be made to point to a variable with the help of the address (reference) operator (&).

e.g.

int num = 1024;
int *iptr;
iptr = &num; // iptr points to the variable num.

A pointer can hold the value 0 (NULL), indicating that it points to no object at present. A pointer should never be assigned a non-address value.

e.g.

iptr = ival; // invalid, ival is an int value, not an address.

A pointer of one type cannot be assigned the address of an object of another type without an explicit cast.

e.g.

double dval, *dptr = &dval; // allowed
iptr = &dval; // not allowed

1.5.3 POINTER ARITHMETIC

The pointer value stored in a pointer variable can be altered using arithmetic operators. You can increment or decrement pointers, subtract one pointer from another, and add integers to or subtract integers from pointers. Two pointers cannot be added, because the sum of two addresses is not a meaningful address. No arithmetic operations other than the ones discussed here are allowed on pointers. Consider a program that demonstrates pointer arithmetic.

e.g.

#include <stdio.h>

int main(void)
{
    int a[] = {10, 20, 30, 40, 50};
    int *ptr;
    int i;

    ptr = a;                     /* ptr holds the address of a[0] */
    for (i = 0; i < 5; i++)
    {
        printf("%d ", *ptr++);   /* print the current element, then advance ptr */
    }
    return 0;
}

Memory layout of the array 'a' (addresses are illustrative):

ptr --> F000    10
        F002    20
        F004    30
        F006    40
        F008    50

Output:

10 20 30 40 50

The addresses of the memory locations for the array 'a' are shown from F000 to F008. The initial address F000 is assigned to 'ptr'. Then, by incrementing the pointer, the next values are obtained. Here each increment advances the pointer by 2 bytes because the size of an integer is 2 bytes on this machine. The sizes of the various data types on a 16-bit machine are shown below. They may vary from system to system.

Data type    Size
char         1 byte
int          2 bytes
float        4 bytes
long int     4 bytes
double       8 bytes
short int    2 bytes
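The point that pointer arithmetic counts in elements rather than bytes can be sketched as follows. The function names are hypothetical, and the stride is reported as sizeof(int), which is 2 on the 16-bit machine of the text and commonly 4 on current systems.

```c
#include <stddef.h>

/* Number of elements between two pointers into the same int array. */
ptrdiff_t elements_between(const int *first, const int *last)
{
    return last - first;           /* counted in elements, not bytes */
}

/* Number of bytes the address changes when an int pointer is incremented once. */
size_t int_stride_bytes(void)
{
    int pair[2] = {0, 0};
    const char *lo = (const char *)&pair[0];
    const char *hi = (const char *)(&pair[0] + 1);
    return (size_t)(hi - lo);      /* equals sizeof(int) */
}

/* Value reached after advancing k elements from the start. */
int value_at(const int *start, size_t k)
{
    return *(start + k);           /* same as start[k] */
}
```

Subtracting two pointers is only meaningful when both point into the same array, as they do in elements_between(a, a + 5).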

1.6 ARRAY OF POINTERS

Consider the declaration shown below:

char *A[3] = {"a", "b", "Text Book"};

The example declares 'A' as an array of character pointers. Each location in the array points to a string of characters of varying length. Here A[0] points to the first character of the first string and A[1] points to the first character of the second string, both of which contain only one character. However, A[2] points to the first character of the third string, which contains 9 characters.
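A minimal sketch of working with such an array of character pointers is given below. The function longest_index is a hypothetical helper, not part of any library; it relies only on the standard strlen.

```c
#include <stddef.h>
#include <string.h>

/* Index of the longest string in an array of character pointers.
   On ties (or when n is 0) the lowest index, 0, is returned. */
size_t longest_index(const char *items[], size_t n)
{
    size_t i, best = 0;
    for (i = 1; i < n; i++)
    {
        if (strlen(items[i]) > strlen(items[best]))
            best = i;
    }
    return best;
}
```

Each element of the array is only a pointer, so strings of different lengths can be held without padding every entry to the longest length.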

1.7 PASSING PARAMETERS TO THE FUNCTIONS

The different ways of passing parameters to a function are:

- Pass by value (call by value)
- Pass by address/pointer (call by reference)

In pass by value, the actual argument is copied into the formal argument declared in the function definition. Therefore any changes made to the formal arguments are not reflected back to the calling program.

In pass by address, we use pointer variables as arguments. The function receives the addresses of the caller's variables, so the changes made in the called function are reflected back in the calling function. The following program uses a classic problem in programming, swapping the values of two variables.

#include <stdio.h>

void val_swap(int x, int y) // Call by Value
{
    int t;

    t = x;
    x = y;
    y = t;
}

void add_swap(int *x, int *y) // Call by Address
{
    int t;

    t = *x;
    *x = *y;
    *y = t;
}

int main(void)
{
    int n1 = 25, n2 = 50;

    printf("\n Before call by value : ");
    printf("\n n1 = %d n2 = %d", n1, n2);
    val_swap(n1, n2);
    printf("\n After call by value : ");
    printf("\n n1 = %d n2 = %d", n1, n2);

    printf("\n Before call by address : ");
    printf("\n n1 = %d n2 = %d", n1, n2);
    add_swap(&n1, &n2);
    printf("\n After call by address : ");
    printf("\n n1 = %d n2 = %d", n1, n2);
    return 0;
}

Output:

Before call by value : n1 = 25 n2 = 50
After call by value : n1 = 25 n2 = 50 // inside val_swap, x = 50, y = 25
Before call by address : n1 = 25 n2 = 50
After call by address : n1 = 50 n2 = 25 // inside add_swap, *x = 50, *y = 25

1.8 RELATION BETWEEN POINTERS AND ARRAYS

Pointers and arrays are closely related. Any program written with array subscripts can also be written with pointers. Consider the following:

int arr[] = {0,1,2,3,4,5,6,7,8,9};

To access the values we can write,

arr[0] or *arr;
arr[1] or *(arr+1);

Since '*' is used both to declare a pointer and to dereference it, and since it binds more tightly than '+', you have to know the difference between the following two expressions thoroughly.

*(arr+1) means the address arr is advanced by one element and then the contents are fetched.

*arr+1 means the contents are fetched from address arr and one is added to the contents.

Now we have understood the relation between an array and a pointer. The traversal of an array can be made either through subscripting or by direct pointer manipulation.

e.g.

#include <stdio.h>

void print(int *arr_beg, int *arr_end)
{
    while (arr_beg != arr_end)
    {
        printf("%i ", *arr_beg);
        ++arr_beg;
    }
}

int main(void)
{
    int arr[] = {0,1,2,3,4,5,6,7,8,9};

    print(arr, arr + 10);    /* arr + 10 is one past the last element */
    return 0;
}

arr_end points to the position one past the end of the array, so that we can iterate through all the elements of the array. Because print() takes int pointers, it works only with arrays of integers.
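The equivalence of the two traversal styles can be sketched with a pair of hypothetical functions that compute the same sum, one with a begin/end pointer pair and one with subscripts.

```c
/* Sum the ints in the half-open range [beg, end). */
long sum_range(const int *beg, const int *end)
{
    long total = 0;
    while (beg != end)
    {
        total += *beg;
        ++beg;
    }
    return total;
}

/* The same sum written with subscripts. */
long sum_indexed(const int arr[], int n)
{
    long total = 0;
    int i;
    for (i = 0; i < n; i++)
        total += arr[i];
    return total;
}
```

For an array of 10 elements, sum_range(arr, arr + 10) and sum_indexed(arr, 10) visit exactly the same elements; the end pointer is never dereferenced.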

1.9 SCOPE RULES AND STORAGE CLASSES

Since changes made to formal arguments are not reflected back to the calling program, it becomes important to understand the scope and lifetime of variables.

The storage class of a variable determines its lifetime (duration) and its scope. There are four storage classes:

- automatic

- static

- external

- register

1.9.1 AUTOMATIC VARIABLES

Automatic variables are defined within functions. They lose their value when the function terminates, and they can be accessed only in that function. All variables declared within a function are, by default, 'automatic'. However, we can declare them explicitly by using the keyword 'auto'.

e.g.

#include <stdio.h>

void print(void)
{
    auto int i = 0;

    printf("\n Value of i before incrementing is %d", i);
    i = i + 10;
    printf("\n Value of i after incrementing is %d", i);
}

int main(void)
{
    print();
    print();
    print();
    return 0;
}

Output:

Value of i before incrementing is 0
Value of i after incrementing is 10
Value of i before incrementing is 0
Value of i after incrementing is 10
Value of i before incrementing is 0
Value of i after incrementing is 10

1.9.2. STATIC VARIABLES

Static variables have the same scope as automatic variables but, unlike automatic variables, they retain their values across function calls. A static variable is initialized only once and remains in existence until the program terminates. Static variables are declared with the keyword static.

e.g.

#include <stdio.h>

void print(void)
{
    static int i = 0;

    printf("\n Value of i before incrementing is %d", i);
    i = i + 10;
    printf("\n Value of i after incrementing is %d", i);
}

int main(void)
{
    print();
    print();
    print();
    return 0;
}

Output:

Value of i before incrementing is 0
Value of i after incrementing is 10
Value of i before incrementing is 10
Value of i after incrementing is 20
Value of i before incrementing is 20
Value of i after incrementing is 30

It can be seen from the above example that the value of the variable is retained when the function is called again. Memory is allocated to it and it is initialized only once.
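A typical use of this behaviour is a function that counts its own calls. The sketch below contrasts a static counter with an automatic one; both function names are hypothetical.

```c
/* Counts how many times it has been called.
   The static variable keeps its value between calls. */
int next_ticket(void)
{
    static int count = 0;   /* initialized once, not on every call */
    count = count + 1;
    return count;
}

/* Same body with an automatic variable: always returns 1. */
int next_ticket_auto(void)
{
    int count = 0;          /* re-created and reset on every call */
    count = count + 1;
    return count;
}
```

The only difference between the two functions is the keyword static, yet successive calls to next_ticket() return increasing values while next_ticket_auto() starts over each time.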

1.9.3. EXTERNAL VARIABLES

Different functions of the same program can be written in different source files and compiled together. The scope of a global variable is not limited to any one function, but extends to all the functions that are defined after it is declared. However, the scope of a global variable is limited to the functions in the same file. If we want to use a variable defined in another file, we can declare it with extern.

e.g.

// FILE 1 - g is global and can be used in main() and fn1().

int g = 0;

int main(void)
{
    :
    :
}

void fn1()
{
    :
    :
}

// FILE 2 - If the variable defined in file 1 is required in file 2, it is to be
// declared as extern. The declaration carries no initializer; with one, it would
// become a second definition of g.

extern int g;

void fn2()
{
    :
    :
}

void fn3()
{
    :
}

1.9.4. REGISTER VARIABLE

Computers have internal registers, which are used to store data temporarily before any operation can be performed. Intermediate results of calculations are also stored in registers. Operations can be performed on data stored in registers more quickly than on data stored in memory, because the registers are a part of the processor itself. If a particular variable is used often, for instance the control variable of a loop, it can be assigned a register rather than a memory location. This is requested using the keyword register. However, the request is only a hint: the compiler assigns a register only if one is free, otherwise the variable is treated as automatic. Also, global variables cannot be register variables.

e.g.

void loopfn()
{
    register int i;

    for (i = 0; i < 100; i++)
    {
        printf("%d", i);
    }
}

1.10 DYNAMIC ALLOCATION AND DE-ALLOCATION OF MEMORY

The memory for ordinary declared variables and arrays is fixed at compilation time. The size of these variables cannot be varied at run time. These are called 'static data structures'.

The disadvantage of these data structures is that they require a fixed amount of storage. Once the storage is fixed, if the program uses only a small part of it, the remaining locations are wasted. If we try to use more memory than was declared, overflow occurs.

If the storage requirement is unpredictable, sequential allocation is not recommended. The process of allocating memory at run time is called 'dynamic allocation'. Here, the required amount of memory is obtained from the free memory, called the 'heap', available to the user. This free memory is maintained as a list called the 'availability list'. Getting a block of memory and returning it to the availability list can be done by using functions like:

- malloc()
- calloc()
- free()

1.10.1 FUNCTION MALLOC(SIZE)

This function is declared in the standard header <stdlib.h> (and in <alloc.h> on some older compilers such as Turbo C). It allocates a block of 'size' bytes from the heap or availability list. On success it returns a pointer of type void * to the allocated memory. This pointer is converted to the pointer type we require, such as int * or float *; the cast shown below is optional in C but required in C++. If the required space does not exist, it returns NULL.

Syntax:

ptr = (data_type*) malloc(size);

where

- ptr is a pointer variable of type data_type*.
- data_type can be any basic, user-defined or derived data type.
- size is the number of bytes required.

e.g.

ptr = (int*)malloc(sizeof(int)*n);

allocates memory for n integers, depending on the value of the variable n.

#include <stdio.h>
#include <string.h>
#include <stdlib.h>

int main(void)
{
    char *str;

    if ((str = (char*)malloc(10)) == NULL)  /* allocate memory for string */
    {
        printf("\n OUT OF MEMORY");
        exit(1);                            /* terminate the program */
    }
    strcpy(str, "Hello");                   /* copy Hello into str */
    printf("\n str= %s ", str);             /* display str */
    free(str);                              /* free memory */
    return 0;
}

In the above program, if memory is allocated to str, the string Hello is copied into it. Then str is displayed. When it is no longer needed, the memory occupied by it is released back to the heap.
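The same pattern (allocate, check for NULL, use, and leave freeing to the caller) can be sketched for an array whose size is known only at run time. The function make_sequence is a hypothetical helper; only malloc and free are standard.

```c
#include <stdlib.h>

/* Allocate an array of n ints holding 0, 1, ..., n-1.
   Returns NULL if n is 0 or the allocation fails; the caller frees the result. */
int *make_sequence(size_t n)
{
    int *p;
    size_t i;

    if (n == 0)
        return NULL;
    p = malloc(n * sizeof *p);   /* n elements of sizeof(int) bytes each */
    if (p == NULL)
        return NULL;
    for (i = 0; i < n; i++)
        p[i] = (int)i;
    return p;
}
```

Writing the size as n * sizeof *p keeps the request correct even if the element type of p is changed later.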

1.10.2 FUNCTION CALLOC(N,SIZE)

This function is declared in the standard header <stdlib.h> (and in <alloc.h> on some older compilers). It allocates memory for n blocks of size bytes each from the heap or availability list and sets every byte of it to zero. If the required space does not exist it returns NULL; if n or size is zero, the result depends on the implementation and may be NULL.

Syntax:

ptr = (data_type*) calloc(n,size);

where

- ptr is a pointer variable of type data_type*.
- data_type can be any basic, user-defined or derived data type.
- size is the number of bytes in each block.
- n is the number of blocks of size bytes to be allocated.

A pointer to the first byte of the allocated region is returned.

e.g.

#include <stdio.h>
#include <string.h>
#include <stdlib.h>

int main(void)
{
    char *str = NULL;

    str = (char*)calloc(10, sizeof(char));  /* allocate memory for string */
    if (str == NULL)
    {
        printf("\n OUT OF MEMORY");
        exit(1);                            /* terminate the program */
    }
    strcpy(str, "Hello");                   /* copy Hello into str */
    printf("\n str= %s ", str);             /* display str */
    free(str);                              /* free memory */
    return 0;
}
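One practical difference from malloc() is that calloc() hands back zero-filled memory. A minimal sketch of this, using the hypothetical helpers make_zeroed and count_nonzero:

```c
#include <stdlib.h>

/* Allocate n ints with calloc; every element starts at zero. */
int *make_zeroed(size_t n)
{
    return calloc(n, sizeof(int));
}

/* Count the nonzero elements in the first n ints of p. */
size_t count_nonzero(const int *p, size_t n)
{
    size_t i, count = 0;
    for (i = 0; i < n; i++)
        if (p[i] != 0)
            count++;
    return count;
}
```

Memory obtained from malloc() carries no such guarantee, so its contents must be assigned before they are read.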

1.10.3 FUNCTION FREE(BLOCK)

This function frees a block of memory that was allocated using malloc() or calloc(). The programmer can use it to de-allocate memory that is no longer required. It does not return any value.

1.11 DANGLING POINTER PROBLEM.

We can allocate memory to the same pointer variable more than once. The compiler will not raise any error, but it could lead to bugs in the program. We can understand this problem with the following example.

#include <stdio.h>
#include <stdlib.h>

int main(void)
{
    int *a;

    a = (int*)malloc(sizeof(int));
    *a = 10;                          /* a ----> 10 */
    a = (int*)malloc(sizeof(int));
    *a = 20;                          /* a ----> 20; the block holding 10 is lost */
    return 0;
}

In this program segment, memory allocation for the variable 'a' is done twice. The variable now contains the address of the most recently allocated memory, thereby making the earlier allocated memory inaccessible. So the memory location where the value 10 is stored is inaccessible to the application, and it is not possible to free it so that it can be reused. Memory lost in this way is commonly called a memory leak.

To see another problem, consider the next program segment:

int main(void)
{
    int *a;

    a = (int*)malloc(sizeof(int));
    *a = 10;                          /* a ----> 10 */
    free(a);                          /* a ----> ? */
    return 0;
}

Here, when we de-allocate the memory for the variable 'a' using free(a), the memory location pointed to by 'a' is returned to the memory pool. The pointer 'a' still holds the old address, but that address is no longer valid, so we call 'a' a 'dangling pointer'. It must not be dereferenced in this state. If we want to reuse this pointer, we can allocate memory for it again.
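One common way to guard against both problems is sketched below, under the assumption that the pointer is always handled through two small helpers (release_int and store_int are hypothetical names): the old block is freed before the pointer is overwritten, and the pointer is reset to NULL after free().

```c
#include <stdlib.h>

/* Free the int that *pp points to and reset the caller's pointer to NULL. */
void release_int(int **pp)
{
    if (pp != NULL)
    {
        free(*pp);      /* free(NULL) is a harmless no-op */
        *pp = NULL;     /* the caller's pointer no longer dangles */
    }
}

/* Replace the value held by *pp with a fresh allocation,
   freeing the old block first. Returns 1 on success, 0 on failure. */
int store_int(int **pp, int value)
{
    int *fresh = malloc(sizeof *fresh);
    if (fresh == NULL)
        return 0;       /* allocation failed; *pp is left unchanged */
    *fresh = value;
    free(*pp);          /* release the earlier block so it is not leaked */
    *pp = fresh;
    return 1;
}
```

A pointer set to NULL can be tested before use, whereas a dangling pointer cannot be distinguished from a valid one by inspection. The helpers assume the pointer starts out as NULL or as the result of an earlier allocation.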

1.12 STRUCTURES

A structure is a derived data type. It is a combination of logically related data items. Unlike arrays, which are collections of items of the same data type, structures can contain members of different data types. The data items in a structure generally belong to the same entity, like the information about an employee, a player etc.

The general format of a structure declaration is:

struct tag
{
    type member1;
    type member2;
    type member3;
    :
    :
} variables;

We can omit the variable declaration in the structure declaration and define the variable separately as follows:

struct tag variable;

e.g.

struct Account

{

int accnum;

char acctype;

char name[25];

float balance;

};

We can declare structure variables as:

struct Account oldcust, newcust;

We can refer to the member variables of a structure by using the dot operator (.).

e.g.

newcust.balance = 100.0;
printf("%s", oldcust.name);

We can initialize the members as follows:

e.g.

struct Account customer = {100, 'w', "David", 6500.00};

We can copy one structure variable into another of the same type with the assignment operator, which copies every member. Structures cannot, however, be compared with ==; a comparison has to be done member-wise.

We can also have nested structures as shown in the following example:

struct Date

{

int dd, mm, yy;

};

struct Account

{

int accnum;

char acctype;

char name[25];

float balance;

struct Date d1;

};

Now if we have to access the members of Date, we use the following method.

struct Account c1;
c1.d1.dd = 21;

We can pass structures to functions and return them from functions. The whole structure is copied into the formal variable.

We can also have an array of structures. An array of Account structures is declared as

struct Account a[10];

Everything is the same as for a single element, except that a subscript is required in order to know which structure we are referring to.

We can also declare pointers to structures. To access member variables through a pointer we use the pointer operator -> instead of the dot operator.

struct Account *aptr = &c1;
printf("%s", aptr->name);

A structure can contain a pointer to itself as one of its members; such structures are called self-referential structures.

e.g.

struct info
{
    int i, j, k;
    struct info *next;
};

In short, we can list the uses of structures as:

- Related data items of dissimilar data types can be logically grouped under a common name.
- They can be used to pass parameters so as to minimize the number of function arguments.
- They are useful when more than one data item has to be returned from a function.
- They make the program more readable.
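The points above can be sketched with the Account structure from this section. The functions deposit and open_account, and the account-type code 's', are hypothetical and serve only to show a structure being updated through a pointer and returned by value.

```c
#include <string.h>

struct Account
{
    int accnum;
    char acctype;
    char name[25];
    float balance;
};

/* Add amount to the balance through a pointer to the structure. */
void deposit(struct Account *acc, float amount)
{
    acc->balance += amount;
}

/* Return a new Account by value; the whole structure is copied to the caller. */
struct Account open_account(int accnum, const char *name)
{
    struct Account acc;
    acc.accnum = accnum;
    acc.acctype = 's';                            /* hypothetical code for "savings" */
    strncpy(acc.name, name, sizeof acc.name - 1);
    acc.name[sizeof acc.name - 1] = '\0';         /* strncpy may not terminate */
    acc.balance = 0.0f;
    return acc;
}
```

Passing a pointer, as in deposit(&a, 100.0f), avoids copying the whole structure and lets the function change the caller's variable; returning by value, as in open_account(), gives the caller an independent copy.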

1.13 ENUMERATED CONSTANTS

Enumerated constants enable the creation of new types and then define variables of these types

so that their values are restricted to a set of possible values. There syntax is:

where

enum identifier {c1,c2,...}[var_list];

-

e.g.

enum is the keyword.

identifier is the user defined enumerated data type, which can be used to declare

the variables in the program.

{c1,c2,...} are the names of constants and are called enumeration constants.

var_list is an optional list of variables.

enum Colour{RED, BLUE, GREEN, WHITE, BLACK};

Colour is the name of an enumerated data type. It makes RED a symbolic constant with the value

0, BLUE a symbolic constant with the value 1 and soon.

Every enumerated constant has an integer value. If the program doesn‟t specify otherwise, the

first constant will have the value 0, the remaining constants will count up by 1 as compared to

their predecessors.

Any of the enumerated constant can be initialised to have a particular value, however, those that

are not initialised will count upwards from the value of previous variables.

e.g.

enum Colour{RED = 100, BLUE, GREEN = 500, WHITE, BLACK = 1000};

The values assigned will be RED = 100,BLUE = 101,GREEEN = 500,WHITE = 501,BLACK = 1000

You can define variables of type Colour, but they can hold only one of the enumerated values. In

our case RED, BLUE, GREEEN, WHITE, BLACK .

You can declare objects of enum types.

e.g.

enum Days{SUN, MON, TUE, WED, THU, FRI, SAT};

Days day;

Day = SUN;

Day = 3; // error int and day are of different types

Day = hello;

// hello is not a member of Days.

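Enum symbolic constants are internally of type int. Even so, a C++ compiler does not let a variable of an enumeration type be incremented, so it cannot be used directly for iteration there; a C compiler accepts i++ on such a variable, provided the variable is declared with its full type, enum Days i.

e.g.

enum Days{SUN, MON, TUE, WED, THU, FRI, SAT};

for(enum i = SUN; i<SAT; i++) //not allowed: enum i names no type, and C++ also rejects i++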

There is no support for moving backward or forward from one enumerator to another. However

whenever necessary, an enumeration is automatically promoted to arithmetic type.

e.g.

if( MON > 0)
{
    printf(" Monday is greater");
}

int num = 2*MON;
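The numbering rules described above can be checked with a short C sketch. It reuses the Colour declaration with explicit values from the text; the helper names colour_value and print_colours are assumptions added for illustration only.

```c
#include <stdio.h>

/* Enumeration from the text: constants without an initialiser
   continue from the value of the previous constant. */
enum Colour { RED = 100, BLUE, GREEN = 500, WHITE, BLACK = 1000 };

/* Hypothetical helper: returns the integer value behind a constant. */
int colour_value(enum Colour c)
{
    return (int)c;
}

/* Prints every constant together with its integer value. */
void print_colours(void)
{
    printf("RED=%d BLUE=%d GREEN=%d WHITE=%d BLACK=%d\n",
           colour_value(RED), colour_value(BLUE), colour_value(GREEN),
           colour_value(WHITE), colour_value(BLACK));
}
```

Calling print_colours() from main prints RED=100 BLUE=101 GREEN=500 WHITE=501 BLACK=1000, which matches the values listed in the text.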

1.14 UNIONS

A union is like a structure, except that only one member of the union is stored in the allocated memory at a time. It is a collection of mutually exclusive variables, which means that all of its member variables share the same physical storage and only one of them holds a meaningful value at a time. The size of a union is at least the size of its largest member. A union is defined as follows:

union tag
{
    type memvar1;
    type memvar2;
    type memvar3;
    :
    :
};

A union variable of this data type can be declared as follows,

union tag variable_name;

e.g.

union utag
{
    int num;
    char ch;
};

union utag ff;

The above union is allocated as much storage as its largest member, num, needs: two bytes on a compiler with a 16-bit int, four bytes on most current compilers. The variable num can be accessed as ff.num and ch is accessed as ff.ch. At any time, only one of these two variables can be referred to. Any change made to one variable affects the other.

Thus unions use memory efficiently by using the same memory to store all the variables, which

may be of different types, which exist at mutually exclusive times and are to be used in the

program only once.
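The shared storage of a union can be observed with a small C sketch. It uses the same members as union utag above; the function names utag_size and overwrite_demo are hypothetical, and the exact size reported depends on the compiler.

```c
#include <stddef.h>

/* Same members as the union in the text. */
union utag {
    int  num;
    char ch;
};

/* Size of the union: at least the size of its largest member (num). */
size_t utag_size(void)
{
    return sizeof(union utag);
}

/* Writes through num, then overwrites the shared storage through ch,
   and returns the value written last. */
char overwrite_demo(void)
{
    union utag ff;
    ff.num = 1000;   /* num is the member currently in use */
    ff.ch  = 'A';    /* ch reuses the same storage; num is no longer meaningful */
    return ff.ch;
}
```

After the second assignment only ff.ch should be read, because writing ch has changed part of the bytes that held num.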

In this chapter we have studied some advanced features of C. We have seen how the flexibility of the language allows us to define a user-defined variable that is a combination of different types of variables belonging to some entity. We also studied arrays and pointers in detail. These are very useful in the study of various data structures.

UNIT 2

COMPLEXITY OF ALGORITHMS

2.1 PROGRAM ANALYSIS

2.2 PERFORMANCE ISSUES

2.3 GROWTH OF FUNCTIONS

2.4 ASYMPTOTIC NOTATIONS

2.4.1 BIG-O NOTATION (O)

2.4.2 BIG-OMEGA NOTATION (Ω)

2.4.3 BIG-THETA NOTATION (Θ)

2.5 TIME-SPACE TRADE OFFS

2.6 SPACE USAGE

2.7 SIMPLICITY

2.8 OPTIMALITY

2.1 PROGRAM ANALYSIS

Program analysis is the study of what happens when a program is executed: the sequence of actions executed and the changes in the program state that occur during a run.

There are many ways of analysing a program, for instance:

(i) verifying that it satisfies the requirements.

(ii) proving that it runs correctly without any logic errors.

(iii) determining if it is readable.

(iv) checking that modifications can be made easily, without introducing new errors.

(v) analysing its execution time and the storage complexity associated with it, i.e. how fast the program runs and how much storage it requires.

Another related question can be : how big must its data structure be and how many steps will be

required to execute its algorithm?

Since this course concerns data representation and writing programs, we shall analyse programs

in terms of storage and time complexity.

2.2 PERFORMANCE ISSUES

In considering the performance of a program, we are primarily interested in

(i) how fast does it run?

(ii) how much storage does it use?

Generally we need to analyze efficiencies, when we need to compare alternative algorithms and

data representations for the same problem or when we deal with very large programs.

We often find that we can trade time efficiency for space efficiency, or vice-versa. For finding any

of these i.e. time or space efficiency, we need to have some estimate of the problem size.

2.3 GROWTH OF FUNCTIONS

Informally, an algorithm can be defined as a finite sequence of steps designed to solve a computationally solvable problem. By this definition, it is immaterial how much time an algorithm takes to solve a problem of a given size. But it is impractical to choose an algorithm that takes a very long time to find the solution of a particular problem. Fortunately, it is usually possible to estimate the computation time of an algorithm. The computation time can be formulated in terms of a function f(n), where n is the size of the input instance. An example makes this concrete. Below is a C function isprime(int) that returns 1 (true) if the integer n is prime and 0 (false) otherwise.

int isprime(int n)
{
    int divisor=2;

    if(n < 2)
        return 0; /* 0, 1 and negative numbers are not prime */

    while(divisor<=n/2)
    {
        if(n % divisor == 0)
            return 0;
        divisor++;
    }
    return 1;
}

Figure 1

The while loop in Figure 1 makes about n/2 comparisons in the worst case, which occurs when the input n is a prime number, because every candidate divisor from 2 to n/2 is tried. If n is not prime, the loop stops at the smallest divisor of n and makes fewer comparisons. In the worst case, then, the number of comparisons is directly proportional to the value of n (alternatively, it depends upon the number of bits required to represent the number n). That is, f(n) = n/2 in the worst case. However, it would have been better if the while loop looked like

while( divisor * divisor <= n ) {………}

Doing so, whether or not the number is prime, the loop stops once divisor exceeds the square root of n, so the worst case is about √n comparisons.

Similar things can be done for different algorithms devised to solve an entirely different problem.
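The comparison counts discussed above can be measured directly. The sketch below instruments the loop of Figure 1 and, as an assumption of this sketch, a variant that stops at the square root of n. The function names comparisons_half and comparisons_sqrt are illustrative and do not appear in the original text.

```c
/* Counts how many times the test n % divisor == 0 is evaluated by the
   loop of Figure 1, whose bound is n/2. */
long comparisons_half(int n)
{
    long count = 0;
    int divisor = 2;
    while (divisor <= n / 2) {
        count++;
        if (n % divisor == 0)
            return count;      /* a divisor was found: n is not prime */
        divisor++;
    }
    return count;              /* no divisor found */
}

/* Same count when the loop stops once divisor * divisor exceeds n,
   i.e. at the square root of n. */
long comparisons_sqrt(int n)
{
    long count = 0;
    int divisor = 2;
    while ((long)divisor * divisor <= n) {
        count++;
        if (n % divisor == 0)
            return count;
        divisor++;
    }
    return count;
}
```

For the prime 97 the first function reports 47 comparisons and the second reports 8; for 100 both stop after a single comparison, because 2 divides 100.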

Many a time we are interested in finding out facts like:

1) What is the longest time that a particular algorithm can take to complete, over all inputs of a given size?

2) What is the shortest time that a given algorithm can take to solve any input instance?

Since there may be many possible input instances, we can only estimate the running time of the algorithm. This estimation is called computing the order of the run time of an algorithm.

2.4 ASYMPTOTIC NOTATIONS

2.4.1. BIG-O NOTATION(O)

Let f and g be functions from the set of integers or the set of real numbers to the set of real numbers. We say that f(x) is O(g(x)) if there are constants C and k such that $f(x) \le C\,g(x)$ whenever x > k. For example, when we say the running time T(n) of some program is $O(n^2)$, read “big oh of n squared” or just “oh of n squared,” we mean that there are positive constants c and $n_0$ such that for n equal to or greater than $n_0$, we have $T(n) \le c\,n^2$. A few examples follow to show the effect of this definition.

Example 1: Analyze the running time of factorial(x). The input size is the value of x. Given n as input, factorial(n) recursively makes n+1 calls to factorial. Each call to factorial performs a few constant-time operations such as a multiplication, an if test, and a return. Let the time taken for each call be the constant k. Therefore, the total time taken for factorial(n) is k*(n+1). This is O(n) time. In other words, factorial(x) has O(x), i.e. linear, running time.

int factorial(int n)
{
    if(n==0)
        return 1;
    else
        return (n * factorial(n-1));
}

Figure 2
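The claim that factorial(n) makes n+1 calls can be checked by counting invocations. The sketch below is an instrumented copy of Figure 2; the names call_count, counted_factorial and calls_for are assumptions introduced for this illustration.

```c
/* Number of calls made so far; reset before each measurement. */
static int call_count = 0;

/* factorial from Figure 2, instrumented to count its invocations. */
int counted_factorial(int n)
{
    call_count++;
    if (n == 0)
        return 1;
    else
        return n * counted_factorial(n - 1);
}

/* Returns how many calls are made in total for input n (n >= 0),
   including the first call. */
int calls_for(int n)
{
    call_count = 0;
    counted_factorial(n);
    return call_count;
}
```

calls_for(5) returns 6 and calls_for(10) returns 11, in line with the k*(n+1) estimate of Example 1.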

Example 2: Let $f(x) = x^2 + 2x + 1$ be a function in x. Now $x < x^2$ and $1 < x^2$ for every x > 1. Therefore, for every x > 1 we have $x^2 + 2x + 1 \le x^2 + 2x^2 + x^2$, that is, $x^2 + 2x + 1 \le 4x^2$. Comparing the situation with $f(x) \le C\,g(x)$, we get C = 4 and k = 1, so the function $f(x) = x^2 + 2x + 1$ is $O(x^2)$.

Figure 3

Since the Big-O notation gives an upper limit on the growth of a function, it is also called the upper bound of a function or an algorithm.

2.4.2 BIG-OMEGA NOTATION (Ω)

Let f and g be functions from the set of integers or the set of real numbers to the set of real numbers. We say that f(x) is Ω(g(x)) if there are positive constants C and k such that $f(x) \ge C\,g(x)$ whenever x > k.

In terms of limits, provided the limit exists or is infinite,

$$\lim_{x \to \infty} \frac{g(x)}{f(x)} = \text{a constant (possibly 0)} \quad \text{iff} \quad f(x) = \Omega(g(x)).$$

Example 3: Let $f(x) = 8x^3 + 5x^2 + 7$. Then $f(x) \ge 8x^3$ for $x \ge 0$.

Therefore, $f(x) = \Omega(x^3)$.

2.4.3. BIG-THETA NOTATION (Θ)

For functions f and g as in the above two definitions, we say that f(x) is Θ(g(x)) if there are positive constants $C_1$, $C_2$ and k such that

$$0 \le C_1\,g(x) \le f(x) \le C_2\,g(x) \quad \text{whenever } x > k.$$

Since Θ(g(x)) bounds a function from both the upper and lower sides, it is also called a tight bound for the function f(x).

Mathematically, when the limit exists,

$$\lim_{x \to \infty} \frac{g(x)}{f(x)} = \text{a nonzero constant}.$$

In other words, f(x) = Θ(g(x)) iff f(x) and g(x) have the same leading terms, except for possibly different constant factors.

Example 4: $f(x) = 3x^2 + 8x \log x$ is $\Theta(x^2)$.
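Example 4 can be worked through against the definition. The derivation below assumes that log denotes the natural or base-2 logarithm, so that $0 < \log x \le x$ for x > 1. The constants chosen are one possible choice, not the only one.

```latex
\begin{aligned}
3x^2 \;&\le\; 3x^2 + 8x\log x && \text{since } \log x > 0 \text{ for } x > 1,\\
3x^2 + 8x\log x \;&\le\; 3x^2 + 8x^2 = 11x^2 && \text{since } \log x \le x .
\end{aligned}
```

Hence the definition is satisfied with $C_1 = 3$, $C_2 = 11$ and k = 1.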

2.5. TIME-SPACE TRADE OFFS

In over 50 years of research on algorithms for problem areas such as decision-making and optimization, scientists have concentrated on the computational hardness of different solution methods. The study of time-space trade-offs, i.e., formulae that relate the most

fundamental complexity measures, time and space, was initiated by Cobham, who studied

problems like recognizing the set of palindromes on Turing machines. There are two main lines of

motivation for such studies: one is the lower bound perspective, where restricting space allows

you to prove general lower bounds for decision problems; the other line is the upper bound

perspective where one attempts to find time efficient algorithms that are also space efficient (or

vice versa). Also, upper bounds are interesting for finding, in conjunction with lower bounds, the

computational complexity of fundamental problems such as sorting. So, mainly algorithms are

constrained under two resources i.e. time and space. However, there are certain problems for

which it is difficult or even impossible to find such a solution that is both time and space efficient.

In such cases, the algorithms are written for a specific resource configuration, also taking into consideration the nature of the input instance. Fundamentally, we measure both space and time complexity as functions of the size of the input instance. However, they are also likely to depend upon the nature of the input. So, let us define the time and space complexity as below:

Time Complexity T(n): An algorithm A for a problem P is said to have time complexity T(n) if the number of steps required to complete its run for an input of size n is always less than or equal to T(n).

Space Complexity S(n): An algorithm A for a problem P is said to have space complexity S(n) if the number of bits required to complete its run for an input of size n is always less than or equal to S(n).

If we consider both time and space requirements, the general approach is to use the quantity (time * space) for a given algorithm. T(n)*S(n) is therefore quite handy for estimating the overall efficiency of an algorithm in general*. The amount of space can easily be estimated directly for most algorithms, and the run time can be measured either mathematically or by testing; together they tell us the efficiency of the algorithm. From here we can establish the range of this T*S term that we can afford. All algorithms that lie in this range are then acceptable to us.
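A small C sketch may make the trade between time and space concrete. It counts the 1 bits in a byte in two ways: one uses no extra storage and loops on every call, the other spends 256 bytes on a table so that each later query is a single array access. The example and all names in it (bits_by_loop, bits_by_table, bit_table, build_bit_table) are assumptions chosen for illustration and are not taken from the text; 8-bit bytes are assumed.

```c
/* Space-lean version: no table, up to 8 loop passes on every call. */
int bits_by_loop(unsigned char b)
{
    int count = 0;
    while (b != 0) {
        count += b & 1;
        b >>= 1;
    }
    return count;
}

/* Time-lean version: 256 extra bytes hold every answer in advance
   (assumes 8-bit bytes, so every unsigned char value is below 256). */
static unsigned char bit_table[256];
static int table_ready = 0;

/* One-time cost: fill the table using the slow version. */
void build_bit_table(void)
{
    int i;
    for (i = 0; i < 256; i++)
        bit_table[i] = (unsigned char)bits_by_loop((unsigned char)i);
    table_ready = 1;
}

/* After the table is built, each query is a single array access. */
int bits_by_table(unsigned char b)
{
    if (!table_ready)
        build_bit_table();
    return bit_table[b];
}
```

Both functions return the same answers; which one is preferable depends on how many queries are made and how much memory can be spared, which is the kind of decision the T*S measure is meant to support.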

Many a time algorithms need to do certain implicit tasks that are not actually part of the run time of the algorithm. These implicit tasks are, most of the time, one-time investments; for example, sorting a list so that binary search can be done on randomly accessible storage containing the list of elements. So, in order to evaluate the importance of each operation of an algorithm over a data structure, and the complexity of the overall algorithm, it is desirable to find the time required to perform a sequence of operations averaged over all the operations performed. This computation is called amortized analysis. Its usefulness shows when one has to establish that an operation under investigation, although costly in a local perspective, minimizes the overall cost of the algorithm and is therefore very useful.
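A standard illustration of amortized analysis, which is not taken from this text, is an array that doubles its capacity whenever it becomes full. A single append that triggers a resize is costly, because every stored element is copied, yet the total number of copies over n appends stays below 2n, so the cost averaged over all appends is constant. The sketch below only simulates the bookkeeping; the function name copies_for_appends is hypothetical.

```c
/* Counts the element copies caused by n appends to an array that starts
   with capacity 1 and doubles its capacity whenever it is full.
   No real memory is allocated; only the bookkeeping is simulated. */
long copies_for_appends(long n)
{
    long capacity = 1;
    long size = 0;
    long copies = 0;
    long i;

    for (i = 0; i < n; i++) {
        if (size == capacity) {
            copies += size;    /* costly step: move every element to the new block */
            capacity *= 2;
        }
        size++;                /* cheap step: store the new element */
    }
    return copies;
}
```

For 1000 appends the function returns 1023 copies in total, about one copy per append on average, even though the most expensive single append copies 512 elements.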

A simple algorithm may consist of some initialization instructions and a loop. The number of

passes made through the body of the loop is a fairly good indication of the work done by such an

algorithm. Of course, the amount of work done in one pass through a loop may be much more

than the amount done in another pass, and one algorithm may have longer loop bodies than

another algorithm, but we are narrowing in on a good measure of work. Though some loops may

have, say, five steps and some nine, for large inputs the number of passes through the loops will

generally be large compared to the loop sizes. Thus counting the passes through all the loops in

the algorithm is a good idea.

In many cases, to analyze an algorithm we can isolate a particular operation fundamental to the

problem under study (or to the types of algorithms being considered), ignore initialization, loop

control, and other bookkeeping, and just count the chosen, or basic, operations performed by the

algorithm. For many algorithms, exactly one of these operations is performed on each pass through the main loops of the algorithm, so this measure is similar to the one described in the previous paragraph.

The function T(n) can be compared with another function f(n) to find the order of T(n), and hence the bounds of the algorithm can be evaluated.

* Generalization of efficiency is required because efficiency also depends on the nature of the input. For example, an algorithm may be efficient for one sequence of elements to be sorted and not for another, the size being the same.

Here are some examples of reasonable choices of basic operations for several problems:

Problem                                           Operation

Find x in an array of names                       Comparison of x with an entry in the array

Multiply two matrices with real entries           Multiplication of two real numbers (or multiplication and addition of real numbers)

Sort an array of numbers                          Comparison of two array entries

Traverse a binary tree                            Traversing an edge

Any non-iterative procedure, including recursive  Procedure invocation

So long as the basic operations are chosen well and the total number of operations performed is

roughly proportional to the number of basic operations, we have a good measure of the work done

by an algorithm and a good criterion for comparing several algorithms. This is the measure we

use in this chapter and in several other chapters in this book. You may not yet be entirely

convinced that this is a good choice; we will add more justification for it in the next section. For

now, we simply make a few points.

First, in some situations, we may be intrinsically interested in the basic operation: It might be a

very expensive operation compared to the others, or it might be of some theoretical interest.

Second, we are often interested in the rate of growth of the time required for the algorithm, as the

inputs get larger. So long as the total number of operations is roughly proportional to the basic

operations, just counting the latter can give us a pretty clear idea of how feasible it is to use the

algorithm on large inputs.

Finally, this choice of the measure of work allows a great deal of flexibility. Though we will often

try to choose one, or at most two, specific operations to count, we could include some overhead

operations, and, in the extreme, we could choose as the basic operations the set of machine

instructions for a particular computer. At the other extreme, we could consider “one pass

through a loop” as the basic operation. Thus by varying the choice of basic operations, we can

vary the degree of precision and abstraction in our analysis to fit our needs.

What if we choose a basic operation for a problem and then find that the total number of

operations performed by an algorithm is not proportional to the number of basic operations?

What if it is substantially higher? In the extreme case, we might choose a basic operation for a

certain problem and then discover that some algorithms for the problem use such different

methods that they do not do any of the operations we are counting. In such a situation, we have

two choices. We could abandon our focus on the particular operation and revert to counting

passes through loops. Or, if we are especially interested in the particular operation chosen, we

could restrict our study to a particular class of algorithms, one for which the chosen operation is

appropriate. Algorithms that use other techniques for which a different choice of basic operation

is appropriate could be studied separately. A class of algorithms for a problem is usually defined

by specifying the operations that may be performed on the data. (The degree of formality of the

specifications will vary; usually informal descriptions will suffice in this book).

Throughout this section, we have often used the phrase “the amount of work done by an

algorithm.” It could be replaced by the term “the complexity of an algorithm.” Complexity means

the amount of work done, measured by some specified complexity measure, which in many of our

examples is the number of specified basic operations performed. Note that, in this sense,

complexity has nothing to do with how complicated or tricky an algorithm is; a very complicated

algorithm may have low complexity. We will use the terms “complexity,” “amount of work done,” and “number of basic operations done” almost interchangeably in this book.

Average and Worst-Case Analysis

Now that we have a general approach to analyzing the amount of work done by an algorithm, we

need a way to present the results of the analysis concisely. A single number cannot describe the

amount of work done because the number of steps performed is not the same for all inputs. We

observe first that the amount of work done usually depends on the size of the input. For example,

alphabetizing an array of 1000 names usually requires more operations than alphabetizing an

array of 100 names, using the same algorithm. Solving a system of 12 linear equations in 12

unknowns generally takes more work than solving a system of 2 linear equations in 2 unknowns.

We observe, secondly, that even if we consider inputs of only one size, the number of operations

performed by an algorithm may depend on the particular input. An algorithm for alphabetizing

an array of names may do very little work if only a few of the names are out of order, but it may

have to do much more work on an array that is very scrambled. Solving a system of 12 linear

equations may not require much work if most of the coefficients are zero.

The first observation indicates that we need a measure of the size of the input for a problem. It is

usually easy to choose a reasonable measure of size. Here are some examples:

Problem                                  Size of input

Find x in an array of names              The number of names in the array

Multiply two matrices                    The dimensions of the matrices

Sort an array of numbers                 The number of entries in the array

Traverse a binary tree                   The number of nodes in the tree

Solve a system of linear equations       The number of equations, or the number of unknowns, or both

Solve a problem concerning a graph       The number of nodes in the graph, or the number of edges, or both

Even if the input size is fixed at, say, n, the number of operations performed may depend on the particular input.

How, then, are the results of the analysis of an algorithm to be expressed? Most often we describe the behavior of an algorithm by stating its worst-case complexity.

Worst-case complexity

Let $D_n$ be the set of inputs of size n for the problem under consideration, and let I be an element of $D_n$. Let t(I) be the number of basic operations performed by the algorithm on input I. We define the function W by

$$W(n) = \max\{\, t(I) \mid I \in D_n \,\}$$

The function W(n) is called the worst-case complexity of the algorithm. W(n) is the maximum

number of basic operations performed by the algorithm on any input of size n.

It is often not very difficult to compute W(n). The worst-case complexity is valuable because it

gives an upper bound on the work done by the algorithm. The worst-case analysis could be used

to help form an algorithm. We will do worst-case analysis for most of the algorithms presented in

this book. Unless otherwise stated, whenever we refer to the amount of work done by an

algorithm, we mean the amount of work done in the worst case.

It may seem that a more useful and natural way to describe the behavior of an algorithm is to tell

how much work it does on the average; that is, to compute the number of operations performed

for each input of size n and then take the average. In practice some inputs might occur much

more frequently than others so a weighted average is more meaningful.

Average complexity

Let Pr(I) be the probability that input I occurs. Then the average behavior of the algorithm is defined as

$$A(n) = \sum_{I \in D_n} \Pr(I)\, t(I).$$

We determine t(I) by analyzing the algorithm, but Pr(I) cannot be computed analytically. The

function Pr(I) is determined from experience and/or special information about the application for

which the algorithm is to be used, or making some simplifying assumption (e.g., that all inputs of

size n are equally likely to occur). If Pr(I) is complicated, the computation of average behavior is

difficult. Also, of course, if Pr(I) depends on a particular application of the algorithm, the function

A describes the average behavior of the algorithm for only that application. The following examples

illustrate worst-case and average analysis.

Example

Problem: Let E be an array containing n entries (called keys), E[0], ..., E[n-1], in no particular order. Find an index of a specified key K, if K is in the array; return -1 as the answer if K is not in the array.

Strategy: Compare K to each entry in turn until a match is found or the array is exhausted. If K is not in the array, the algorithm returns -1 as its answer.

There is a large class of procedures similar to this one, and we call these procedures generalized

searching routines. Often they occur as subroutines of more complex procedures.

Generalized searching routine

A generalized searching routine is a procedure that processes an indefinite amount of data until it

either exhausts the data or achieves its goal. It follows this high-level outline:

If there is no more data to examine:
    Fail.
Else
    Examine one datum.
    If this datum is what we want:
        Succeed.
    Else
        Keep searching in remaining data.

The scheme is called generalized searching because the routine often performs some other simple

operations as it searches, such as moving data elements, adding to or deleting from a data

structure, and so on.
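The outline above can be rendered almost line for line in C. The sketch below is one possible rendering for an array of integers; the function name search_from and its parameter first are assumptions made for this illustration.

```c
/* Recursive rendering of the outline: examine E[first], then continue
   with the remaining entries E[first+1..n-1].
   Returns the index of K, or -1 if K is not found. */
int search_from(const int E[], int n, int first, int K)
{
    if (first >= n)
        return -1;                              /* no more data to examine: fail */
    if (E[first] == K)
        return first;                           /* this datum is what we want: succeed */
    return search_from(E, n, first + 1, K);     /* keep searching in remaining data */
}
```

A search of the whole array starts with first set to 0, for example search_from(E, n, 0, K).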

Sequential Search, Unordered

Input: E, n, K, where E is an array with n entries (indexed 0, ..., n-1), and K is the item sought. For simplicity, we assume that K and the entries of E are integers, as is n.

Output: Returns 'ans', the location of K in E (-1 if K is not found).

int seqSearch(int E[], int n, int K)
{
    int ans, index;                       // line 1
    ans = -1;                             // line 2: assume failure
    for (index = 0; index < n; index++)   // line 3
    {
        if (K == E[index])                // line 4
        {
            ans = index;                  // line 5: success!
            break;                        // line 6: take the rest of the afternoon off
        }
        // Continue loop.
    }
    return ans;                           // line 7
}

Basic Operation: Comparison of K with an array entry.

Worst-Case Analysis: Clearly W(n)=n. The worst cases occur when K appears only in the last

position in the array and when K is not in the array at all. In both of these cases K is compared

to all n entries.

Average Behavior Analysis: We will first make several simplifying assumptions to do an easy example, and then do a slightly more complicated analysis with different assumptions. We assume that the elements in the array are distinct and that if K is in the array, then it is equally likely to be in any particular position.

For our first case, we assume that K is in the array, and we denote this event by “succ,” in accordance with the terminology of probabilities. The inputs can be categorized according to where in the array K appears, so there are n inputs to consider. For $0 \le i < n$, let $I_i$ represent the event that K appears in the ith position in the array. Then, let t(I) be the number of comparisons done (the number of times the condition in line 4 is tested) by the algorithm on input I. Clearly, for $0 \le i < n$, $t(I_i) = i + 1$. Thus

$$A_{succ}(n) = \sum_{i=0}^{n-1} \Pr(I_i \mid succ)\, t(I_i) = \sum_{i=0}^{n-1} \frac{1}{n}(i+1) = \frac{1}{n} \cdot \frac{n(n+1)}{2} = \frac{n+1}{2}.$$

The subscript “succ” denotes that we are assuming a successful search in this computation. The result should satisfy our intuition that on the average, about half the array will be searched. Now, let us consider the event that K is not in the array at all, which we call “fail”. There is only one input for this case, which we call $I_{fail}$. The number of comparisons in this case is $t(I_{fail}) = n$, so $A_{fail}(n) = n$. Finally, we combine the cases in which K is in the array and is not in the array. Let q be the probability that K is in the array. By the law of conditional expectations

$$A(n) = \Pr(succ)\,A_{succ}(n) + \Pr(fail)\,A_{fail}(n) = q\left(\tfrac{1}{2}(n+1)\right) + (1-q)\,n = n\left(1 - \tfrac{1}{2}q\right) + \tfrac{1}{2}q.$$

If q = 1, that is, if K is always in the array, then A(n) = (n+1)/2, as before. If q = 1/2, that is, if there is a 50-50 chance that K is not in the array, then A(n) = 3n/4 + 1/4; roughly three-fourths of the entries are examined.
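The averages derived above can be checked by counting comparisons in code. The sketch below assumes distinct keys and equally likely positions, as in the analysis; the function names comparisons_used, average_successful and average_formula are hypothetical and introduced only for this check.

```c
/* Number of comparisons K == E[index] made by sequential search. */
int comparisons_used(const int E[], int n, int K)
{
    int index, count = 0;
    for (index = 0; index < n; index++) {
        count++;
        if (K == E[index])
            break;
    }
    return count;
}

/* Average number of comparisons over the n successful inputs (n >= 1),
   assuming distinct keys and every position equally likely. */
double average_successful(const int E[], int n)
{
    long total = 0;
    int i;
    for (i = 0; i < n; i++)
        total += comparisons_used(E, n, E[i]);
    return (double)total / n;
}

/* Closed form A(n) = q(n+1)/2 + (1-q)n from the analysis. */
double average_formula(int n, double q)
{
    return q * (n + 1) / 2.0 + (1.0 - q) * n;
}
```

For an array of five distinct keys, average_successful returns 3.0, which is (5+1)/2, and an unsuccessful search uses all 5 comparisons. With n = 4 and q = 1/2, average_formula returns 3.25, which agrees with 3n/4 + 1/4.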

The example above illustrates how we should interpret $D_n$, the set of inputs of size n. Rather than consider all possible arrays of names, numbers, or whatever, that could occur as inputs, we identify the properties of the inputs that affect the behavior of the algorithm; in this case, whether K is in the array at all and, if so, where it appears. An element I in $D_n$ may be thought of as a set (or equivalence class) of all arrays and values for K such that K occurs in the specified place in the array (or not at all). Then t(I) is the number of operations done for any one of the inputs in I.

Observe also that the input for which an algorithm behaves worst depends on the particular algorithm, not on the problem. For the sequential search above, a worst case occurs when the only position in the array containing K is the last. For an algorithm that searched the array backwards (i.e., beginning with index=n-1), a worst case would occur if K appeared only in position 0. (Another worst case would again be when K is not in the array at all).

The example also illustrates an assumption we often make when doing average analysis of sorting and searching algorithms: that the elements are distinct. The average analysis for the case of distinct elements gives a fair approximation for the average behavior in cases with few duplicates. If there might be many duplicates, it is harder to make reasonable assumptions about the probability that K's first appearance in the array occurs at any particular position.

2.6 SPACE USAGE

The number of memory cells used by a program, like the number of seconds required to execute a program, depends on the particular implementation. However, some conclusions about space usage can be drawn just by examining an algorithm. A program will require storage space for the instructions, the constants and variables used by the program, and the input data. It may also use some workspace for manipulating the data and storing information needed to carry out its computations. The input data itself may be representable in several forms, some of which require more space than others.

If the input data have one natural form (say, an array of numbers or a matrix), then we analyze

the amount of extra space used, aside from the program and the input. If the amount of extra

space is constant with respect to the input size, the algorithm is said to work in place. This term

is used especially in reference to sorting algorithms. (A relaxed definition of in place is often used

when the extra space is not constant, but is only a logarithmic function of the input size, because

the log function grows so slowly; we will clarify any cases in which we use the relaxed definition).

If the input can be represented in various forms, then we will consider the space required for the

input itself as well as any extra space used. In general, we will refer to the number of “cells” used

without precisely defining cells. You may think of a cell as being large enough to hold one

number or one object. If the amount of space used depends on the particular input, worst-case

and average-case analysis can be done.
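The difference between working in place and using extra space can be seen in a small C sketch. Reversing an array is used here purely as an assumed example; the function names reverse_in_place and reversed_copy are hypothetical.

```c
#include <stdlib.h>

/* Reverses a[0..n-1] in place: the only extra storage is one temporary
   and two indices, independent of n. */
void reverse_in_place(int a[], int n)
{
    int i, j;
    for (i = 0, j = n - 1; i < j; i++, j--) {
        int tmp = a[i];
        a[i] = a[j];
        a[j] = tmp;
    }
}

/* Returns a newly allocated reversed copy: the extra space grows
   linearly with n. The caller must free() the result.
   Returns NULL if n <= 0 or if the allocation fails. */
int *reversed_copy(const int a[], int n)
{
    int *out;
    int i;
    if (n <= 0)
        return NULL;
    out = malloc((size_t)n * sizeof *out);
    if (out == NULL)
        return NULL;
    for (i = 0; i < n; i++)
        out[i] = a[n - 1 - i];
    return out;
}
```

The first function uses a constant number of extra cells whatever the value of n, so it works in place in the sense defined above; the second needs n extra cells.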

2.7 SIMPLICITY

It is often, though not always, the case that the simplest and most straightforward way to solve a problem is not the most efficient. Yet simplicity in an algorithm is a desirable feature. It may make verifying the correctness of the algorithm easier, and it makes writing, debugging, and modifying a program easier. The time needed to produce a debugged program

should be considered when choosing an algorithm, but if the program is to be used very often, its

efficiency will probably be the determining factor in the choice.

2.8 OPTIMALITY

No matter how clever we are, we can't improve an algorithm for a problem beyond a certain point.

Each problem has inherent complexity; that is, there is some minimum amount of work required

to solve it. To analyze the complexity of a problem, as opposed to that of a specific algorithm, we

choose a class of algorithms (often by specifying the types of operations the algorithms will be

permitted to perform) and a measure of complexity, for example, the basic operation(s) to be

counted. Then we may ask how many operations are actually needed to solve the problem. We

say that an algorithm is optimal (in the worst case) if there is no algorithm in the class under

study that performs fewer basic operations (in the worst case). Note that when we speak of

algorithms in the class under study, we don't mean only those algorithms that people have thought of. We mean all possible algorithms, including those not yet discovered. “Optimal” doesn't mean “the best known”; it means “the best possible.”
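A standard illustration of optimality, which is not taken from this text, is finding the largest entry of an array when the basic operation is a comparison of two entries. Every entry other than the largest must lose at least one comparison, so no algorithm in this class can use fewer than n-1 comparisons in the worst case. The sketch below reaches that count; the function name find_max and its comparisons parameter are assumptions made for this illustration.

```c
/* Finds the largest of E[0..n-1] (n >= 1) and reports, through
   *comparisons, how many comparisons of array entries were made. */
int find_max(const int E[], int n, int *comparisons)
{
    int max = E[0];
    int i;
    *comparisons = 0;
    for (i = 1; i < n; i++) {
        (*comparisons)++;
        if (E[i] > max)
            max = E[i];
    }
    return max;
}
```

For an array of five entries the function always makes 4 comparisons, so under the stated measure it is optimal in the worst case.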

SUMMARY:

- If the problem size doubles and the algorithm takes one more step, we relate the number of steps to the problem size by O(log2N). It is read as order of log2N.

- If the problem size doubles and the algorithm takes twice as many steps, the number of steps is related to the problem size by O(N), i.e. order of N; the number of steps is directly proportional to N.

- If the problem size doubles and the algorithm takes more than twice as many steps, i.e. the number of steps required grows faster than the problem size, we use the expression O(N log2 N). You may notice that the number of steps more than doubles when the problem size doubles, but it does not grow a lot faster.

- If the number of steps used is proportional to the square of the problem size, we say the complexity is of the order of N^2 or O(N^2).

- If the algorithm is independent of problem size, the complexity is constant in time and space, i.e. O(1).

The notation being used, i.e. a capital O(), is called Big-Oh notation.
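The doubling rules in the summary can be observed by counting steps in three simple loops. The sketch below is only an illustration; the function names halving_steps, linear_steps and quadratic_steps are hypothetical.

```c
/* Steps taken by a loop that halves the problem size on each pass:
   grows like log2 N. */
int halving_steps(long n)
{
    int steps = 0;
    while (n > 1) {
        n /= 2;
        steps++;
    }
    return steps;
}

/* Steps taken by a single pass over the data: grows like N. */
long linear_steps(long n)
{
    long steps = 0, i;
    for (i = 0; i < n; i++)
        steps++;
    return steps;
}

/* Steps taken by two nested passes: grows like N squared. */
long quadratic_steps(long n)
{
    long steps = 0, i, j;
    for (i = 0; i < n; i++)
        for (j = 0; j < n; j++)
            steps++;
    return steps;
}
```

Doubling N from 1024 to 2048 raises halving_steps from 10 to 11, doubles linear_steps, and multiplies quadratic_steps by four.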

*************************************************************************************************************

UNIT 3

INTRODUCTION TO DATA AND FILE STRUCTURE

3.1 INTRODUCTION

3.2 PRIMITIVE AND SIMPLE STRUCTURES

3.3 LINEAR AND NONLINEAR STRUCTURES

3.4 FILE ORGANIZATIONS

3.1 INTRODUCTION

Data structures are very important in computer systems. In a program, every variable is of some explicitly or implicitly defined data structure, which determines the set of operations that are legal upon that variable. Knowledge of data structures is required for people who design and develop computer programs of any kind: systems software or applications software. The data structures that are discussed here are termed logical data structures. There may be several different physical organizations on storage possible for each logical data structure. The logical or mathematical model of a particular organization of data is called a data structure. Often the different data values are related to each other. To enable programs to make use of these relationships, these data values must be in an organised form. The organised collection of data is called a data structure. The programs have to follow certain rules to access and process the structured data. We may, therefore, say that:

Data Structure = Organised Data + Allowed Operations.

If you recall, this is an extension of the concept of data type. We had defined a data type as:

Data Type = Permitted Data Values + Operations
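As an illustration of "organised data + allowed operations", here is a minimal sketch in C. It is not from the original text: the stack is chosen only because it is discussed later, and the names int_stack, STACK_CAPACITY and the stack_* functions are hypothetical.

```c
#include <assert.h>
#include <stdbool.h>

#define STACK_CAPACITY 8   /* illustrative size, not from the text */

/* Organised data: the values plus the bookkeeping that relates them. */
struct int_stack {
    int items[STACK_CAPACITY];
    int count;              /* number of values currently stored */
};

/* Allowed operations: the only ways a program should touch the data. */
void stack_init(struct int_stack *s)
{
    s->count = 0;
}

bool stack_is_empty(const struct int_stack *s)
{
    return s->count == 0;
}

/* Returns false (and stores nothing) when the stack is full. */
bool stack_push(struct int_stack *s, int value)
{
    if (s->count == STACK_CAPACITY)
        return false;
    s->items[s->count++] = value;
    return true;
}

/* Removes the most recently pushed value; the stack must not be empty. */
int stack_pop(struct int_stack *s)
{
    assert(!stack_is_empty(s));
    return s->items[--s->count];
}
```

The structure holds the organised data, and the four functions are the rules a program follows to access it. Pushing 10 and then 20 and popping twice returns 20 first and 10 second.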

The choice of a particular data model depends on two considerations:

- To identify and develop useful mathematical entities and operations.

- To determine representations for those entities and to implement operations on these concrete representations. The representation should be simple enough that one can effectively process the data when necessary.

Data structures can be categorized as shown in Figure 1.1.

Data Structure
    Primitive Data Structure
        - integer
        - float
        - character
    Simple Data Structure
        - String
        - Array
        - Record
        - Sets
    Compound Data Structure
        Linear
            - Linked List
            - Stack
            - Queue
        Non Linear
            Binary
                - Binary Tree
                - Binary Search Tree
            N-ary
                - Graph
                - General Tree
                - B-Tree
                - B+Tree
    File Structure
        File organization
            - Sequential
            - Indexed Sequential

Figure 1.1: Characterisation of Data Structure

3.2 PRIMITIVE AND SIMPLE STRUCTURES

Primitive data structures are those that are not composed of other data structures. We will consider briefly examples of three primitives: integers, Booleans, and characters. Other data structures can be constructed from one or more primitives. The simple data structures built from primitives are strings, arrays, sets, and records (or structures in some programming languages). Many programming languages support these data structures. In other words, primitive data structures are those that can be manipulated directly by machine instructions. Integer, real, character, etc. are some primitive data structures. In C, the different primitive data structures are int, float, char and double.

Example of primitive Data Structure

The primitive data structures are also known as data types in computer languages. Examples of different data items are:

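integers: 10, 20, 5, -15, etc., i.e., a subset of the integers. In C language an integer is declared as:

int x;

Each integer occupies 2 bytes of memory space on compilers with a 16-bit int, as assumed in this text. The C language leaves the exact size to the implementation, and on most current compilers an int occupies 4 bytes.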

float: 6.2, 7.215162, 62.5, etc., i.e., a subset of the real numbers. In C language a float variable is declared as:

float y;

Each float number typically occupies 4 bytes of memory space.

character: Any character enclosed within single quotes is treated as character data, e.g., 'a', '1', '?', '*', etc. are character data. Characters are declared as:

char c;

Each character occupies 1 byte of memory space.
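The sizes quoted above depend on the compiler, so it can help to ask the compiler itself. The following is a small sketch using the standard sizeof operator; the function name print_primitive_sizes is an illustrative assumption.

```c
#include <assert.h>
#include <stdio.h>

/* Prints the storage size, in bytes, of the primitive types named in
   the text, as reported by the compiler actually in use. */
void print_primitive_sizes(void)
{
    /* sizeof(char) is 1 by definition in C. */
    assert(sizeof(char) == 1);

    printf("char   : %lu byte(s)\n", (unsigned long)sizeof(char));
    printf("int    : %lu byte(s)\n", (unsigned long)sizeof(int));
    printf("float  : %lu byte(s)\n", (unsigned long)sizeof(float));
    printf("double : %lu byte(s)\n", (unsigned long)sizeof(double));
}
```

Calling print_primitive_sizes() from main on a compiler with a 16-bit int reports 2 for int; most current desktop compilers report 4.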

Example of Simple Data Structure

The simple data structures are composed of primitive data structures.

Array: An array is a collection of data items of the same type under the same name, e.g.

int x[20];

declares a collection of 20 integers under the same name x.

Records: Records are also known as structures in the C/C++ languages. They are collections of different data items under the same name, e.g., in C language the declaration

struct student {
    char name[30];
    char fname[30];
    int roll;
    char class[5];
} y;

defines a structure describing the record of a student. This structure contains different types of data items. The size of a structure depends on its constituents. In this example the size of the structure is 30 + 30 + 2 + 5 = 67 bytes, assuming a 2-byte int and no padding added by the compiler.
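The hand-computed sum of the member sizes can be compared with what a compiler actually allocates. The sketch below is an illustration under stated assumptions: the member class is renamed class_code (because class is a reserved word in C++), and the helper names student_member_total and print_student_layout are hypothetical.

```c
#include <assert.h>
#include <stddef.h>
#include <stdio.h>

/* Same layout as the student record in the text, except that the last
   member is called class_code here instead of class. */
struct student {
    char name[30];
    char fname[30];
    int  roll;
    char class_code[5];
};

/* Sum of the member sizes: the figure computed by hand in the text. */
size_t student_member_total(void)
{
    return sizeof(char[30]) + sizeof(char[30]) + sizeof(int) + sizeof(char[5]);
}

/* Prints where each member starts and how big the whole record is. */
void print_student_layout(void)
{
    /* The compiler may add padding, but it never drops a member. */
    assert(sizeof(struct student) >= student_member_total());

    printf("name       at offset %lu\n", (unsigned long)offsetof(struct student, name));
    printf("fname      at offset %lu\n", (unsigned long)offsetof(struct student, fname));
    printf("roll       at offset %lu\n", (unsigned long)offsetof(struct student, roll));
    printf("class_code at offset %lu\n", (unsigned long)offsetof(struct student, class_code));
    printf("sum of members = %lu\n", (unsigned long)student_member_total());
    printf("sizeof(struct) = %lu\n", (unsigned long)sizeof(struct student));
}
```

The two totals need not agree. With a 4-byte int aligned on a 4-byte boundary, for example, the members total 69 bytes and the structure is typically padded to 72.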

3.3 LINEAR AND NONLINEAR STRUCTURES

Simple data structures can be combined in various ways to form more complex structures. The two fundamental kinds of more complex data structures are linear and nonlinear, depending on the complexity of the logical relationships they represent. The linear data structures that we will discuss include stacks, queues, and linear lists. The nonlinear data structures include trees and graphs. We will find that there are many types of tree structures that are useful in information systems. In other words, these data structures cannot be manipulated directly by machine instructions. Arrays, linked lists, trees, etc. are some non-primitive data structures. These data structures can be further classified into 'linear' and 'non-linear' data structures.

The data structures that show the relationship of logical adjacency between the elements are called linear data structures. Otherwise they are called non-linear data structures.

Different linear data structures are the stack, the queue, and linear linked lists such as the singly linked list, the doubly linked list, etc.

Trees, graphs, etc. are non-linear data structures.
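The difference between the two kinds shows up directly in how a node refers to its neighbours. The following is a minimal sketch with hypothetical type and function names (list_node, tree_node, list_link, list_length, tree_size); it is meant only to contrast the shapes, not to serve as a full implementation of either structure.

```c
#include <assert.h>
#include <stddef.h>

/* Linear: each element has at most one logical successor. */
struct list_node {
    int value;
    struct list_node *next;
};

/* Non-linear: each element may lead to more than one other element. */
struct tree_node {
    int value;
    struct tree_node *left;
    struct tree_node *right;
};

/* Makes b the logical successor of a. */
void list_link(struct list_node *a, struct list_node *b)
{
    assert(a != NULL);
    a->next = b;
}

/* Walks the single chain of successors and counts the elements. */
size_t list_length(const struct list_node *head)
{
    size_t n = 0;
    for (; head != NULL; head = head->next)
        n++;
    return n;
}

/* Counts the elements by following both branches of every node. */
size_t tree_size(const struct tree_node *root)
{
    if (root == NULL)
        return 0;
    return 1 + tree_size(root->left) + tree_size(root->right);
}
```

A list can be traversed with one loop because there is only one way forward from each element. The tree needs a recursive (or stack-based) traversal because each node branches.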

3.4 FILE ORGANIZATIONS

The data structuring techniques applied to collections of data that are managed as “black boxes”

by operating systems are commonly called file organizations. A file carries a name, contents, a

location where it is kept, and some administrative information, for example, who owns it and how

big it is. The four basic kinds of file organization that we will discuss are sequential, relative,

indexed sequential, and multi-key file organizations. These organizations determine how the

contents of files are structured. They are built on the data structuring techniques.

************************************************************************************************************

UNIT 4 ARRAYS

4.1 INTRODUCTION

4.4.1. SEQUENTIAL ALLOCATION

4.4.2. MULTIDIMENSIONAL ARRAYS

4.5. ADDRESS CALCULATIONS

4.6. GENERAL MULTIDIMENSIONAL ARRAYS

4.7. SPARSE ARRAYS

4.1 INTRODUCTION

The simplest type of data structure is a linear Array.

A linear array is a list of a finite number n of homogeneous data elements (i.e., data elements of the same type) such that:

(a) The elements of the array are referenced respectively by an index set consisting of n consecutive numbers.

(b) The elements of the array are stored respectively in successive memory locations.

The number n of elements is called the length or size of the array. If not explicitly stated, we will assume the index set consists of the integers 0, 1, …, n-1. In general, the length or the number of data elements of the array can be obtained from the index set by the formula

Length = UB - LB + 1        (2.1)

where UB is the largest index, called the upper bound, and LB is the smallest index, called the lower bound, of the array. Note that Length = UB when LB = 1. With the default index set 0, 1, …, n-1 we have LB = 0 and UB = n-1, so Length = n.
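Formula (2.1) translates directly into code. This is a small sketch; the function name array_length and its parameter names are illustrative assumptions.

```c
#include <assert.h>

/* Number of elements in an array whose indices run from lb to ub
   inclusive: Length = UB - LB + 1 (formula 2.1). */
int array_length(int lb, int ub)
{
    assert(ub >= lb);
    return ub - lb + 1;
}
```

For the index set 0, 1, …, 5 the call array_length(0, 5) gives 6, and for an index set starting at 1 the call array_length(1, 10) gives 10, which equals the upper bound.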

The elements of an array A may be denoted by the subscript notation

A₀, A₁, A₂, A₃, …, Aₙ₋₁

or by the parentheses notation (used in FORTRAN, PL/1, and BASIC)

A(0), A(1), A(2), …, A(n-1)

or by the bracket notation (used in Pascal)

A[0], A[1], A[2], A[3], …, A[n-1]

We will usually use the subscript notation or the bracket notation. Regardless of the notation, the number i in A[i] is called a subscript or an index, and A[i] is called a subscripted variable. Note that subscripts allow any element of A to be referenced by its relative position in A.

Example 1

(a) Let DATA be a 6-element linear array of integers such that

DATA[0] = 247, DATA[1] = 56, DATA[2] = 429, DATA[3] = 135, DATA[4] = 87, DATA[5] = 156

Sometimes we denote such an array by simply writing

DATA: 247, 56, 429, 135, 87, 156
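In C, which also uses the bracket notation with indices starting at 0, the array of this example could be written as follows. This is a sketch only: the helper functions data_length and data_byte_offset are hypothetical and are included to show properties (a) and (b) of a linear array.

```c
#include <assert.h>
#include <stddef.h>

/* The 6-element array DATA of Example 1, with index set 0..5. */
int DATA[6] = { 247, 56, 429, 135, 87, 156 };

/* Number of elements, computed from the storage the array occupies. */
size_t data_length(void)
{
    return sizeof DATA / sizeof DATA[0];
}

/* Distance in bytes from DATA[0] to DATA[i]. Because the elements sit
   in successive memory locations, this is always i * sizeof(int). */
size_t data_byte_offset(size_t i)
{
    assert(i < data_length());
    return (size_t)((const char *)&DATA[i] - (const char *)&DATA[0]);
}
```

Here DATA[2] is 429, data_length() is 6, and data_byte_offset(3) equals 3 * sizeof(int), whatever size the compiler uses for int.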

The array DATA is frequently pictured as in Fig. 2.1(a) or Fig. 2.1(b)

DATA

Index   Value
0       247
1       56
2       429
3       135
4       87
5       156