
Submitted for publication. Please do not distribute.

Made available to support ICSE14 submission.

Comparing Pairwise Testing in Software Product Lines: A Framework Assessment

Roberto E. Lopez-Herrejon¹, Javier Ferrer², Evelyn Nicole Haslinger¹, Francisco Chicano², Alexander Egyed¹, and Enrique Alba²

¹ Systems Engineering and Automation, Johannes Kepler University, Linz, Austria

{roberto.lopez, evelyn.haslinger, alexander.egyed}@jku.at

² University of Malaga, Malaga, Spain

{ferrer, chicano, eat}@lcc.uma.es

Abstract. Software Product Lines (SPLs) are families of related software systems, which provide different feature combinations. In the past few years many SPL testing approaches have been proposed, among them those that support pairwise testing. Recently a comparison framework for pairwise testing in SPLs has been proposed; however, it has only been applied to two algorithms, neither of them search based, and only on a limited number of case studies. In this paper we address these two limitations by comparing two search based approaches with a leading greedy alternative on 181 case studies taken from two different sources. We make a critical assessment of this framework based on an analysis of how useful it is for selecting the best algorithm among the existing approaches and an examination of the correlation we found between its metrics.

Keywords: Feature Models, Genetic Algorithms, Simulated Annealing,

Software Product Lines, Pairwise Testing

1 Introduction

A Software Product Line (SPL) is a family of related software systems, which

provide different feature combinations [1]. The effective management and realization of variability – the capacity of software artifacts to vary [2] – can lead to

substantial benefits such as increased software reuse, faster product customization, and reduced time to market.

Systems are being built, more and more frequently, as SPLs rather than

individual products because of several technological and marketing trends. This

fact has created an increasing need for testing approaches that are capable of

coping with large numbers of feature combinations that characterize SPLs. Many

testing alternatives have been put forward [3–6]. Salient among them are those

that support pairwise testing [7–13]. With all these pairwise testing approaches

available the question now is: how do they compare? To answer this question,

Perrouin et al. have recently proposed a comparison framework [14]. However,

this framework has only been applied to two algorithms, neither of them search

based, and only on a limited number of case studies. In this paper we address

these two limitations by comparing two search based approaches with a leading

greedy alternative on 181 case studies taken from two different sources.

Our interest (and our contribution) is in answering these two questions:

– RQ1: Is there any correlation between the framework’s metrics?

– RQ2: Is this framework useful to select the best one among the testing

algorithms?

Based on the obtained answers, we make a critical assessment of the framework

and suggest open avenues for further research.

The organization of the paper is as follows. In Section 2 we provide some

background on the Feature Models used in Software Product Lines. Section 3

describes Combinatorial Interaction Testing and how it is applied to test SPLs

and Section 4 presents the algorithms used in our experimental section. In Section 5 the comparison framework of Perrouin et al. is presented and it is evaluated

in Section 6. Some related work is highlighted in Section 7 and we conclude the

paper and outline future work in Section 8.

2 Feature Models and Running Example

Feature models have become a de facto standard for modelling the common and

variable features of an SPL and their relationships collectively forming a tree-like

structure. The nodes of the tree are the features which are depicted as labelled

boxes, and the edges represent the relationships among them. Thus, a feature

model denotes the set of feature combinations that the products of an SPL can

have [15].

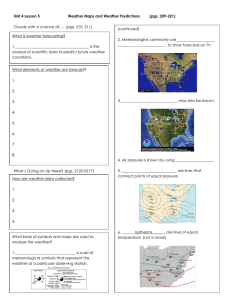

Figure 1 shows the feature model of our running example, the Graph Product

Line (GPL), a standard SPL of basic graph algorithms that has been extensively

used as a case study in the product line community [16]. A product has feature

GPL (the root of the feature model) which contains its core functionality, a driver

program (Driver) that sets up the graph examples (Benchmark) to which a

combination of graph algorithms (Algorithms) are applied. The types of graphs

(GraphType) can be either directed (Directed) or undirected (Undirected), and

can optionally have weights (Weight). Two graph traversal algorithms (Search)

are available: either Depth First Search (DFS) or Breadth First Search (BFS).

A product must provide at least one of the following algorithms: numbering of

nodes in the traversal order (Num), connected components (CC), strongly connected components (SCC), cycle checking (Cycle), shortest path (Shortest),

minimum spanning trees with Prim’s algorithm (Prim) or Kruskal’s algorithm

(Kruskal).

In a feature model, each feature (except the root) has one parent feature

and can have a set of child features. Notice here that a child feature can only

be included in a feature combination of a valid product if its parent is included

as well. The root feature is always included. There are four kinds of feature

relationships:

Fig. 1. Graph Product Line Feature Model

– Mandatory features are depicted with a filled circle. A mandatory feature

is selected whenever its respective parent feature is selected. For example,

features Driver and GraphType.

– Optional features are depicted with an empty circle. An optional feature may

or may not be selected if its respective parent feature is selected. An example

is feature Weight.

– Exclusive-or relations are depicted as empty arcs crossing over a set of lines

connecting a parent feature with its child features. They indicate that exactly

one of the features in the exclusive-or group must be selected whenever the

parent feature is selected. For example, if feature Search is selected, then

either feature DFS or feature BFS must be selected.

– Inclusive-or relations are depicted as filled arcs crossing over a set of lines

connecting a parent feature with its child features. They indicate that at

least one of the features in the inclusive-or group must be selected if the

parent is selected. If for instance, feature Algorithms is selected then at

least one of the features Num, CC, SCC, Cycle, Shortest, Prim, and Kruskal

must be selected.

Besides the parent-child relations, features can also relate across different branches of the feature model through so-called Cross-Tree Constraints (CTCs). Figure 1 shows some of the CTCs of our feature model³. For instance, Cycle requires DFS means that whenever feature Cycle is selected, feature DFS must also be selected. As another example, Prim excludes Kruskal means that both features cannot be selected at the same time in any product. These constraints, as well as those implied by the hierarchical relations between features, are usually expressed and checked using propositional logic; for further details refer to [18].

Definition 1. Feature List (FL) is the list of features in a feature model.

The FL for the GPL feature model is [GPL, Driver, Benchmark,

GraphType, Directed, Undirected, Weight, Search, DFS, BFS,

Algorithms, Num, CC, SCC, Cycle, Shortest, Prim, Kruskal].

³ In total, the feature model has 13 CTCs; for further details please refer to [16] and [17].

FS GPL Dri Gtp W Se Alg B D U DFS BFS N CC SCC Cyc Sh Prim Kru

fs0 X

X X X

X X

X

X

fs1 X

X X X X X X

XX

X

X

fs2 X

X X

X X XX

X

X

X

fs3 X

X X X X X XX

X X

X

fs4 X

X X X X X XX

X

X

X

X X

fs5 X

X X X X X X

XX

XX

X

X

fs6 X

X X X X X X

X

X XX

X

fs7 X

X X X X X X

XX

XX

X

Driver (Dri), GraphType (Gtp), Weight (W), Search (Se), Algorithms (Alg), Benchmark (B), Directed (D), Undirected (U), Num (N), Cycle (Cyc), Shortest (Sh), Kruskal (Kru).

Table 1. Sample Feature Sets of GPL

Definition 2. A feature set, also called product in SPL, is a 2-tuple [sel, $\overline{sel}$] where sel and $\overline{sel}$ are respectively the set of selected and not-selected features of a member product⁴. Let FL be a feature list, thus $sel, \overline{sel} \subseteq FL$, $sel \cap \overline{sel} = \emptyset$, and $sel \cup \overline{sel} = FL$. The terms p.sel and p.$\overline{sel}$ respectively refer to the set of selected and not-selected features of product p.

Definition 3. A feature set fs is valid in feature model fm, i.e. valid(fs, fm) holds, iff fs does not contradict any of the constraints introduced by fm. We denote by FS the set of valid feature sets for a feature model (we omit the feature model to simplify the notation).

Definition 4. A feature f is a core feature if it is selected in all the valid feature sets of a feature model fm, and is a variant feature if it is selected in some, but not all, of the valid feature sets.

For example, the feature set fs0=[{GPL, Driver, GraphType, Weight,

Algorithms, Benchmark, Undirected, Prim}, {Search, Directed, DFS,

BFS, Num, CC, SCC, Cycle, Shortest, Kruskal}] is valid. As another

example, a feature set with features DFS and BFS would not be valid because it

violates the constraint of the exclusive-or relation which establishes that these

two features cannot appear selected together in the same feature set. In our

running example, the feature model denotes 73 valid feature sets. Some of them

are depicted in Table 1, where selected features are ticked (X) and unselected

features are empty. For the sake of space, in this table we use as column labels

shortened names for some features (e.g. Dri for feature Driver). Notice that

features GPL, Driver, Benchmark, GraphType and Algorithms are core features

and the remaining features are variant features.
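The validity examples above can be sketched in code. The following is a minimal illustration, not a full encoding of GPL: it checks only the constraints stated in the text (core features, the two exclusive-or groups, the inclusive-or group of algorithms, and the two CTCs mentioned), so it is a necessary condition for validity rather than a complete check. The function name and the reading of GraphType as an exclusive-or group are our assumptions.

```python
# Partial encoding of the GPL feature model: only the constraints stated in
# the text are checked; the remaining cross-tree constraints are NOT modelled.
CORE = {"GPL", "Driver", "Benchmark", "GraphType", "Algorithms"}
ALGORITHMS = {"Num", "CC", "SCC", "Cycle", "Shortest", "Prim", "Kruskal"}

# Selected features of fs0, as listed in the text.
FS0 = CORE | {"Weight", "Undirected", "Prim"}


def satisfies_stated_constraints(selected):
    """Return True if the selected features meet the constraints listed above."""
    def has(feature):
        return feature in selected

    return all([
        CORE <= selected,                                   # core features always present
        has("Directed") != has("Undirected"),               # exclusive-or (assumed) under GraphType
        has("DFS") + has("BFS") == (1 if has("Search") else 0),  # exclusive-or under Search
        bool(ALGORITHMS & selected),                        # inclusive-or under Algorithms
        (not has("Cycle")) or has("DFS"),                   # Cycle requires DFS
        not (has("Prim") and has("Kruskal")),               # Prim excludes Kruskal
    ])


print(satisfies_stated_constraints(FS0))
print(satisfies_stated_constraints(FS0 | {"Search", "DFS", "BFS"}))
```

A feature set that passes this check may still violate one of the CTCs that are not listed in the text, so a complete implementation would encode all of them, typically as a propositional formula handed to a SAT solver.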

⁴ Definition based on [18].

3 Combinatorial Interaction Testing in SPLs

Combinatorial Interaction Testing (CIT) is a testing approach that constructs

samples to drive the systematic testing of software system configurations [19].

When applied to SPL testing, the idea is to select a representative subset of

products where interaction errors are more likely to occur rather than testing

the complete product family [19]. In this section we provide the basic terminology

of CIT within the context of SPLs.

Definition 5. A t-set ts is a 2-tuple [sel, $\overline{sel}$] representing a partially configured product, defining the selection of t features of the feature list FL, i.e. $ts.sel \cup ts.\overline{sel} \subseteq FL \land ts.sel \cap ts.\overline{sel} = \emptyset \land |ts.sel \cup ts.\overline{sel}| = t$. We say t-set ts is covered by feature set fs iff $ts.sel \subseteq fs.sel \land ts.\overline{sel} \subseteq fs.\overline{sel}$.

Definition 6. A t-set ts is valid in a feature model fm if there exists a valid feature set fs that covers ts. The set of all valid t-sets for a feature model is denoted by TS (we also omit here the feature model in the notation for the sake of clarity).

Definition 7. A t-wise covering array tCA for a feature model fm is a set of valid feature sets that covers all valid t-sets denoted by fm⁵. We also use the term test suite in this paper to refer to a covering array.

Let us illustrate these concepts for pairwise testing, meaning t=2. From the

feature model in Figure 1, a valid 2-set is [{Driver},{Prim}]. It is valid because

the selection of feature Driver and the non-selection of feature Prim do not

violate any constraints. As another example, the 2-set [{Kruskal,DFS}, ∅] is

valid because there is at least one feature set, for instance fs1 in Table 1, where

both features are selected. The 2-set [∅, {SCC,CC}] is also valid because there

are valid feature sets that do not have any of these features selected, for instance

feature sets fs0, fs2, and fs3. Notice however that the 2-set [∅,{Directed,

Undirected}] is not valid. This is because feature GraphType is present in all the

feature sets (mandatory children of the root) so either Directed or Undirected

must be selected. In total, our running example has 418 valid 2-sets.

Based on Table 1, the three valid 2-sets just mentioned above are covered

as follows. The 2-set [{Driver},{Prim}] is covered by feature sets fs1, fs2,

fs3, fs4, fs6, and fs7. Similarly, the 2-set [{Kruskal,DFS}, ∅] is covered by

feature set fs1, and [∅, {SCC,CC}] is covered by feature sets fs0, fs2, and fs3.
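The coverage relation of Definition 5 can be sketched as follows. This is an illustrative sketch only: it uses the four feature sets whose contents are spelled out in the text (fs0, fs1, fs2 and fs7, the latter three as listed for the similarity examples in Section 5, reading Connected as CC and Number as Num), and the helper names `feature_set`, `covers`, `covered_t_sets` and `covering` are our own.

```python
from itertools import combinations

FL = ["GPL", "Driver", "Benchmark", "GraphType", "Directed", "Undirected",
      "Weight", "Search", "DFS", "BFS", "Algorithms", "Num", "CC", "SCC",
      "Cycle", "Shortest", "Prim", "Kruskal"]
CORE = {"GPL", "Driver", "Benchmark", "GraphType", "Algorithms"}


def feature_set(variable_selected):
    """Build the 2-tuple (sel, unsel) from the selected non-core features."""
    sel = frozenset(CORE) | frozenset(variable_selected)
    return sel, frozenset(FL) - sel


def covers(fs, ts):
    """Definition 5: ts.sel is within fs.sel and ts.unsel is within fs.unsel."""
    (fs_sel, fs_unsel), (ts_sel, ts_unsel) = fs, ts
    return ts_sel <= fs_sel and ts_unsel <= fs_unsel


def covered_t_sets(fs, t=2):
    """All t-sets covered by fs: one per choice of t features from FL."""
    sel, _ = fs
    return {(frozenset(c) & sel, frozenset(c) - sel)
            for c in combinations(FL, t)}


SAMPLE = {
    "fs0": feature_set({"Undirected", "Weight", "Prim"}),
    "fs1": feature_set({"Undirected", "Weight", "Search", "DFS", "CC", "Kruskal"}),
    "fs2": feature_set({"Directed", "Search", "DFS", "Num", "Cycle"}),
    "fs7": feature_set({"Undirected", "Weight", "Search", "DFS", "CC", "Num", "Cycle"}),
}


def covering(ts_sel, ts_unsel):
    """Names of the sample feature sets that cover the given t-set."""
    ts = (frozenset(ts_sel), frozenset(ts_unsel))
    return sorted(name for name, fs in SAMPLE.items() if covers(fs, ts))


print(covering({"Driver"}, {"Prim"}))
print(covering({"Kruskal", "DFS"}, set()))
print(covering(set(), {"SCC", "CC"}))
```

Restricted to these four feature sets, the results agree with the coverage listed above. Note also that every feature set covers exactly one 2-set per pair of features, that is 153 of them for the 18 features of GPL.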

4 Algorithms Overview

In this section we briefly sketch the three testing algorithms we analyse in this

paper: CASA, PGS, and ICPL.

⁵ Definition based on [20].

4.1 CASA Algorithm

CASA is a simulated annealing algorithm that was designed to generate n-wise

covering arrays for SPLs [9]. CASA relies on three nested search strategies. The

outermost search performs one-sided narrowing, pruning the potential size of the

test suite to be generated by only decreasing the upper bound. The mid-level

search performs a binary search for the test suite size. The innermost search

strategy is the actual simulated annealing procedure, which tries to find a pairwise test suite of size N for feature model FM. For more details on CASA please

refer to [9].

4.2 Prioritized Genetic Solver

The Prioritized Genetic Solver (PGS) is an evolutionary approach proposed

by Ferrer et al. [21] that constructs a test suite taking into account priorities

during the generation. PGS is a constructive genetic algorithm that adds one new

product to the partial solution in each iteration until all pairwise combinations

are covered. In each iteration the algorithm tries to find the product that adds

the most coverage to the partial solution. This paper extends and adapts PGS

for SPL testing. PGS has been implemented using jMetal [22], a Java framework

aimed at the development, experimentation, and study of metaheuristics for

solving optimization problems. For further details on PGS, please refer to [21].

4.3 ICPL

ICPL is a greedy approach to generate n-wise test suites for SPLs, which was introduced by Johansen et al. [20]⁶. It is basically an adaptation of Chvátal’s algorithm for the set cover problem. First, the set TS of all valid t-sets that need to be covered is generated. Next, the first feature set (product) fs is produced by greedily selecting a subset of t-sets in TS that constitute a valid product in the input feature model, and it is added to the (initially empty) test suite tCA. Then all t-sets that are covered by product fs are removed from TS. ICPL proceeds to generate products and add them to the test suite tCA until TS is empty, i.e. all valid t-sets are covered by at least one product. To increase ICPL’s performance, Johansen et al. made several enhancements to the algorithm; for instance, they parallelized the data-independent processing steps. For further details on ICPL please refer to [20].
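The greedy covering loop that ICPL adapts can be sketched as the textbook greedy heuristic for set cover. This is not the ICPL implementation: ICPL constructs each product from the remaining t-sets under the feature model constraints, whereas the sketch below assumes a hypothetical, explicitly enumerated pool of candidate products, each mapped to the set of t-sets it covers. The function name and the toy data are ours.

```python
def greedy_cover(universe, candidates):
    """Textbook greedy set cover.

    universe   -- the elements to cover (here: the valid t-sets, TS)
    candidates -- dict mapping a candidate name to the set of elements it covers
    Returns the chosen candidate names in selection order.
    """
    uncovered = set(universe)
    chosen = []
    while uncovered:
        # Pick the candidate that covers the most still-uncovered elements.
        best = max(candidates, key=lambda name: len(candidates[name] & uncovered))
        gain = candidates[best] & uncovered
        if not gain:
            raise ValueError("remaining elements cannot be covered")
        chosen.append(best)
        uncovered -= gain
    return chosen


# Toy data: integers stand in for t-sets, p1..p4 for candidate products.
toy_universe = {1, 2, 3, 4, 5}
toy_candidates = {"p1": {1, 2, 3}, "p2": {2, 4}, "p3": {3, 4}, "p4": {4, 5}}
print(greedy_cover(toy_universe, toy_candidates))
```

The loop mirrors the description above: TS plays the role of `uncovered`, and it shrinks each time a product is added to the test suite until nothing remains to be covered.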

5 Comparison Framework

In this section we present the four metrics that constitute the framework proposed by Perrouin et al. for the comparison of pairwise testing approaches for

SPLs [14]. We define them based on the terminology presented in Section 2 and

Section 3.

⁶ ICPL stands for “ICPL Covering array generation algorithm for Product Lines”.

For the following metric definitions, let tCA be a t-wise covering array of

feature model fm.

Metric 1. Test Suite Size is the number of feature sets selected in a covering

array for a feature model, namely n = |tCA|.

Metric 2. Performance is the time required for an algorithm to compute a test suite (covering array).

Metric 3. Test Suite Similarity. This metric is defined based on Jaccard’s similarity index and applied to variable features. Let FM be the set of all possible feature models, fs and gs be two feature sets in FS, and var : FS × FM → FL be an auxiliary function that returns from the selected features of a feature set those features that are variable according to a feature model. The similarity index of two feature sets is thus defined as follows:

$$
Sim(fs, gs, fm) =
\begin{cases}
\dfrac{|var(fs, fm) \cap var(gs, fm)|}{|var(fs, fm) \cup var(gs, fm)|} & \text{if } var(fs, fm) \cup var(gs, fm) \neq \emptyset \\[1ex]
0 & \text{otherwise}
\end{cases}
\tag{1}
$$

And the similarity value for the entire covering array is defined as:

$$
TestSuiteSim(tCA, fm) = \frac{\sum_{fs_i \in tCA} \sum_{fs_j \in tCA} Sim(fs_i, fs_j, fm)}{|tCA|^2}
\tag{2}
$$

It should be noted here that the second case of the similarity index, when neither feature set has any variable feature selected, is not part of the originally proposed comparison framework [14]. We added it because we found several feature sets formed with only core features in the feature models analysed in Section 6.3.

Metric 4. Tuple Frequency. Let $occurrence : TS \times 2^{FS} \to \mathbb{N}$ be an auxiliary function that counts the occurrence of a t-set in all the feature sets of a covering array of a single feature model. The metric is thus defined as:

$$
TupleFrequency(ts, tCA) = \frac{occurrence(ts, tCA)}{|tCA|}
\tag{3}
$$

The first two metrics are the standard measurements used for comparison between different testing algorithms, not only within the SPL domain. To the best of our understanding, the intuition behind Test Suite Similarity is that the more dissimilar the feature sets are (i.e. the closer the value is to 0), the higher the chances of detecting faulty behaviour when the corresponding t-wise tests are instrumented and performed. Along the same lines, the rationale behind Tuple Frequency is that the lower this number, the less often the execution of the same t-wise tests is repeated.

Let us provide some examples for the latter two metrics. Consider, for instance, feature sets fs0, fs1, fs2 and fs7 from Table 1. The variable features in those feature sets are:

var(fs0, gpl) = {Undirected, Weight, Prim}

var(fs1, gpl) = {Undirected, Weight, Search, DFS, Connected, Kruskal}

var(fs2, gpl) = {Directed, Search, DFS, Number, Cycle}

var(fs7, gpl) = {Undirected, Weight, Search, DFS, Connected, Number, Cycle}

An example is the similarity value between feature sets fs0 and fs2, that is Sim(fs0, fs2, gpl) = 0/8 = 0.0. The value is zero because those two feature sets do not have any selected variable features in common. Now consider Sim(fs1, fs7, gpl) = 5/8 = 0.625, which yields a high value because those feature sets have the majority of their selected features in common.

To illustrate the Tuple Frequency metric, let us assume that the set of feature sets in Table 1 is a 2-wise covering array of GPL denoted as $tCA_{gpl}$⁷. For example, the 2-set ts0 = [{Driver},{Prim}] is covered by feature sets fs1, fs2, fs3, fs4, fs6, and fs7. Thus, its frequency is equal to $occurrence(ts0, tCA_{gpl})/8 = 6/8 = 0.75$. As another example, the 2-set ts1 = [{Kruskal,DFS}, ∅] is covered by feature set fs1. Thus its frequency is equal to $occurrence(ts1, tCA_{gpl})/8 = 1/8 = 0.125$.
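Equation (3) can be sketched in the same way. The example below is illustrative and deliberately smaller than the one in the text: it uses a hypothetical suite made of only the four feature sets whose contents are listed explicitly (fs0, fs1, fs2 and fs7, reading Connected as CC and Number as Num), so the denominators differ from the 8-product example above. Feature sets are represented by their selected features only, and the function names are ours.

```python
CORE = frozenset({"GPL", "Driver", "Benchmark", "GraphType", "Algorithms"})

# Hypothetical four-product suite; each entry is the set of SELECTED features.
SUITE = [
    CORE | {"Undirected", "Weight", "Prim"},                                    # fs0
    CORE | {"Undirected", "Weight", "Search", "DFS", "CC", "Kruskal"},          # fs1
    CORE | {"Directed", "Search", "DFS", "Num", "Cycle"},                       # fs2
    CORE | {"Undirected", "Weight", "Search", "DFS", "CC", "Num", "Cycle"},     # fs7
]


def occurrence(ts_sel, ts_unsel, suite):
    """Number of feature sets in the suite that cover the t-set."""
    return sum(1 for selected in suite
               if ts_sel <= selected and ts_unsel.isdisjoint(selected))


def tuple_frequency(ts_sel, ts_unsel, suite):
    """Equation (3): occurrences of the t-set divided by the suite size."""
    return occurrence(ts_sel, ts_unsel, suite) / len(suite)


print(tuple_frequency({"Driver"}, {"Prim"}, SUITE))          # 3 of 4 products
print(tuple_frequency({"Kruskal", "DFS"}, set(), SUITE))     # 1 of 4 products
```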

6 Evaluation

For the comparison of CASA, PGS and ICPL we created two groups of feature

models based on their provenance. In this section, we describe the salient characteristics of both groups, their evaluation with the comparison framework of

Section 5, and a thorough comparison analysis of the three algorithms. All the

data and code used in our analysis are available at [17].

6.1 Feature Model Selection

Open Source Projects. The first group of feature models comes from existing

SPLs whose feature models and code implementations are readily available as

open source projects. Some of them were reverse engineered from single systems

and some were developed as SPLs from the outset. We selected the feature models

for this group from the SPL Conqueror project [23]. The goal of this project is

to provide reliable estimates of measurable non-functional properties such as

performance, main memory consumption, and footprint. Table 2 summarizes

the 13 feature models of this group. The rationale behind the creation of this

group is that they actually represent real case studies, used both in academic

and industrial environments.

Feature Models Repository. The second group of selected feature models

comes from the SPLOT repository [24]. This repository contains a large number

⁷ There are 24 2-wise pairs, out of the 418 pairs that GPL contains, which are not covered.

| SPL Name | NF | NP | Description |
|---|---|---|---|
| Apache | 10 | 256 | web server |
| BerkeleyDBF | 9 | 256 | database |
| BerkeleyDBM | 9 | 3840 | database |
| BerkeleyDBP | 27 | 1440 | database |
| Curl | 14 | 1024 | command line |
| LinkedList | 26 | 1440 | data structures |
| LLVM | 12 | 1024 | compiler tool |
| PKJab | 12 | 72 | messenger |
| Prevayler | 6 | 32 | persistence |
| SensorNetwork | 27 | 16704 | simulation |
| Wget | 17 | 8192 | file retrieval |
| x264 | 17 | 2048 | video encoding |
| ZipMe | 8 | 64 | compression library |

NF: Number of Features, NP: Number of Products, Description: SPL domain.

Table 2. Open SPL Projects Characteristics.

of feature models that are not necessarily associated with open source projects

and that have been made available to the product line community carrying out

research on formal analysis and reasoning of feature models. From this source,

we selected 168 feature models with: i) number of features ranging from 10 to

67, with a median of 16.5 and an interquartile range of 10, and ii) number of

products between 14 and 73,728 with a median of 131.5 and an interquartile

range of 535.25. The rationale behind the creation of this group was to assess

the framework on a larger and more diverse set of feature models.

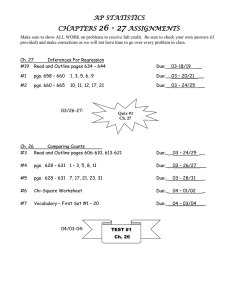

Figure 2 depicts the feature models selected based on their number of features and products. Notice that the filled circles correspond to the open source

projects.

[Figure: scatter plot of Number of Products (logarithmic axis) against Number of Features for the SPLOT and SPLConquerorExamples feature models.]

Fig. 2. Summary of analysed feature models.

6.2 Experimental Set Up

The three algorithms, CASA, PGS and ICPL, are non-deterministic. For this reason we performed 30 independent runs for a meaningful statistical analysis. All the executions were run on a cluster of 16 machines with Intel Core2 Quad Q9400 processors (4 cores per processor) at 2.66 GHz and 4 GB of memory, running Ubuntu 12.04.1 LTS and managed by the HTCondor 7.8.4 cluster manager. Since we have 3 algorithms and 181 feature models, the total number of independent runs is 3 · 181 · 30 = 16,290. Once we obtained the resulting test suites, we applied the metrics defined in Section 5, and we report summary statistics of these metrics. In order to check whether the differences between the algorithms are statistically significant or just a matter of chance, we applied the Wilcoxon rank-sum test [25] and highlight in the tables the differences that are statistically significant.

6.3 Analysis

The first thing we noticed is the inadequacy of the Tuple Frequency metric for our comparison purposes. Its definition, as shown in Equation (3), applies on a per-tuple basis. In other words, given a test suite, we should compute this metric for each tuple and provide the histogram of the tuple frequencies. This is what the authors of [14] do. This means we should show 16,290 histograms, one per test suite. Obviously, this is not viable, so we considered alternatives to summarize all this information. The first idea, also present in [14], is to compute the average of the tuple frequencies in a test suite taking into account all the tuples. Unfortunately, we found that this average says nothing about the test suite. We can prove using counting arguments that this average depends only on the number of features and the number of valid tuples of the model, so it is the same for all the test suites associated with a feature model. The reader can find the formal proof in the paper’s additional resources [17]. In the following we omit any information related to the Tuple Frequency metric and defer to future work the issue of how to summarize it. We computed the remaining three metrics on all the independent runs of the three algorithms.
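The counting argument can be sketched as follows; this is our reading of the claim for illustration, not the formal proof referenced in [17]. It assumes that the average is taken over all valid t-sets in TS and writes n = |FL| for the number of features. Every feature set assigns each feature to either sel or $\overline{sel}$, so it covers exactly one t-set per choice of t features, that is $\binom{n}{t}$ t-sets, all of them valid because a valid feature set covers them. Summing the occurrences over TS therefore yields $|tCA| \binom{n}{t}$, and:

$$
\frac{1}{|TS|} \sum_{ts \in TS} TupleFrequency(ts, tCA)
= \frac{1}{|TS|} \cdot \frac{\sum_{ts \in TS} occurrence(ts, tCA)}{|tCA|}
= \frac{1}{|TS|} \cdot \frac{|tCA| \binom{n}{t}}{|tCA|}
= \frac{\binom{n}{t}}{|TS|}
$$

For GPL with t = 2, n = 18 and |TS| = 418, this gives 153/418 ≈ 0.366 for any test suite, whatever its size or composition.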

In order to answer RQ1 we calculated Spearman’s rank correlation coefficient for each pair of metrics. Table 3 shows the results obtained, plus the correlation values with the number of products and the number of features of the feature models (first two columns and first two rows of the table).

| | Products | Features | Size | Performance | Similarity |
|---|---|---|---|---|---|
| Products | 1 | 0.591 | 0.593 | 0.229 | -0.127 |
| Features | 0.591 | 1 | 0.545 | 0.283 | -0.159 |
| Size | 0.593 | 0.545 | 1 | 0.278 | -0.371 |
| Performance | 0.229 | 0.283 | 0.278 | 1 | 0.168 |
| Similarity | -0.127 | -0.159 | -0.371 | 0.168 | 1 |

Table 3. Spearman’s correlation coefficients of all models and algorithms.
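For readers unfamiliar with the coefficient, Spearman's rank correlation is the Pearson correlation computed on the ranks of the observations, with tied values receiving the average of their ranks. The sketch below is a plain-Python illustration of that definition; it is not the code used in our analysis, and the sample values are made up for the example.

```python
def average_ranks(values):
    """1-based ranks; tied values share the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        rank = (i + j) / 2 + 1  # average of positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = rank
        i = j + 1
    return ranks


def pearson(xs, ys):
    """Pearson correlation; assumes both inputs have non-zero variance."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sd_x = sum((x - mean_x) ** 2 for x in xs) ** 0.5
    sd_y = sum((y - mean_y) ** 2 for y in ys) ** 0.5
    return cov / (sd_x * sd_y)


def spearman(xs, ys):
    """Spearman's rank correlation coefficient."""
    return pearson(average_ranks(xs), average_ranks(ys))


# Made-up values: a monotone but non-linear relation still yields rho = 1.
hypothetical_features = [10, 12, 17, 26, 27]
hypothetical_sizes = [6, 7, 9, 14, 30]
print(spearman(hypothetical_features, hypothetical_sizes))
```

Because only ranks matter, the coefficient captures monotone relations between two metrics even when they are far from linear, which suits measures with very different scales such as test suite size and computation time.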

We can observe a positive and relatively high correlation among the number

of products, features and test suite size. This is somewhat expected because

the number of valid products is expected to increase when more features are

added to a feature model. Likewise, more features usually imply more

combinations of features that must be covered by the test suite and this usually

means that more test cases must be added.

Regarding performance, we expect the algorithms to take more time to generate the test suites for larger models. The positive correlation between the

performance and the three previous size-related measures (products, features

and size) supports this idea. However, the value is too low (less than 0.3) to

clearly claim that larger models require more computation time.

The correlation coefficients between the similarity metric and the rest of the metrics are low and their signs differ, which means that there is no clear correlation. We would expect larger test suites to contain more similar products (positive correlation with size), but this is not the case in general. In our opinion, the justification for this somewhat unexpected result lies in the definition of the similarity metric. In Equation (1) the similarity between two products does not take into account the features that are absent from both products, which we would argue is also a source of similarity. In other words, two products are similar not only due to the selected features they share, but also due to their shared unselected features. Thus, our conclusion regarding this metric is that it is not well defined and that the intuition on how it should behave does not hold, as evidenced in Table 3.

Let us now analyse the metrics results grouped by algorithms to address

RQ2. In Table 4 we show the results for the models in the SPLOT repository

and in Table 5 we show the results for the 13 open source models. In these two

tables we highlight with dark gray the results that are better, with statistically

significant difference, than the other two algorithms, and with a light gray the

results that are better than only one of the other algorithms.

| Algorithm | Size | Performance | Similarity |
|---|---|---|---|
| CASA | 9.31 | 3617.26 | 0.3581 |
| ICPL | 11.06 | 304.11 | 0.3309 |
| PGS | 10.88 | 77245.21 | 0.3635 |

Table 4. Average of the metrics computed on the test suites generated by the three algorithms for the models in SPLOT.

The results for the SPLOT models reveal that CASA is the best algorithm regarding the size of the test suite (with a statistically significant difference) and PGS is the second best. If we focus on computation time, ICPL is the clear winner, followed by CASA. PGS is outperformed by CASA in both test suite size and computation time. Regarding the similarity metric, ICPL is the algorithm providing the most dissimilar products and CASA is the second one. This is somewhat counterintuitive, since ICPL is also the algorithm that produces the largest test suites. We would expect a solution with more products to have more similar products, because the number of features in a feature model is finite; that is, we would expect higher similarity between the products of larger test suites. The results of ICPL, however, contradict this idea. In our opinion the reason is, again, that the similarity measure is not well defined: it considers only the features that are selected in at least one of the two products and ignores those that are unselected in both, even though the t-tuples with unselected features must all be covered as well.

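| Model | Algorithm | Size | Performance | Similarity |
|---|---|---|---|---|
| Apache | CASA | 6 | 550.00 | 0.3614 |
| Apache | ICPL | 8.00 | 217.70 | 0.3044 |
| Apache | PGS | 8.07 | 20139.17 | 0.3482 |
| BerkeleyDBF | CASA | 6.00 | 583.33 | 0.3660 |
| BerkeleyDBF | ICPL | 7.00 | 169.63 | 0.3232 |
| BerkeleyDBF | PGS | 8.13 | 20007.07 | 0.3485 |
| BerkeleyDBM | CASA | 30.00 | 7600.00 | 0.3456 |
| BerkeleyDBM | ICPL | 31.00 | 511.70 | 0.2684 |
| BerkeleyDBM | PGS | 30.87 | 188908.70 | 0.3731 |
| BerkeleyDBP | CASA | 9.80 | 3500.00 | 0.3904 |
| BerkeleyDBP | ICPL | 10.00 | 365.83 | 0.3576 |
| BerkeleyDBP | PGS | 11.37 | 51854.07 | 0.3796 |
| SensorNetwork | CASA | 10.67 | 1650.00 | 0.3748 |
| SensorNetwork | ICPL | 13.00 | 431.43 | 0.3630 |
| SensorNetwork | PGS | 13.00 | 69371.53 | 0.3806 |
| Prevayler | CASA | 6.00 | 533.33 | 0.3518 |
| Prevayler | ICPL | 8.00 | 153.10 | 0.2677 |
| Prevayler | PGS | 6.70 | 20138.13 | 0.3690 |
| LinkedList | CASA | 12.03 | 2533.33 | 0.4055 |
| LinkedList | ICPL | 14.00 | 451.43 | 0.3996 |
| LinkedList | PGS | 14.87 | 75813.10 | 0.4081 |
| Curl | CASA | 8.00 | 933.33 | 0.3527 |
| Curl | ICPL | 12.00 | 276.37 | 0.2634 |
| Curl | PGS | 12.07 | 42065.53 | 0.3541 |
| LLVM | CASA | 6.00 | 583.33 | 0.3620 |
| LLVM | ICPL | 9.00 | 228.77 | 0.2320 |
| LLVM | PGS | 8.80 | 26453.40 | 0.3622 |
| PKJab | CASA | 6.00 | 616.67 | 0.3726 |
| PKJab | ICPL | 7.00 | 195.80 | 0.3439 |
| PKJab | PGS | 7.80 | 24700.60 | 0.3675 |
| Wget | CASA | 9.00 | 733.33 | 0.3560 |
| Wget | ICPL | 12.00 | 343.33 | 0.2685 |
| Wget | PGS | 12.40 | 51234.40 | 0.3499 |
| x264 | CASA | 16.00 | 2966.67 | 0.3510 |
| x264 | ICPL | 17.00 | 343.20 | 0.2568 |
| x264 | PGS | 16.90 | 70243.93 | 0.3668 |
| ZipMe | CASA | 6.00 | 533.33 | 0.3654 |
| ZipMe | ICPL | 7.00 | 160.90 | 0.3428 |
| ZipMe | PGS | 7.33 | 23574.37 | 0.3781 |

Table 5. Average of the metrics computed on the test suites generated by the three algorithms for the 13 open source models.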

Let us finally analyse the results of the algorithms for the open source models.

Regarding the test suite size we again observe that CASA obtains the best results

(smaller test suites). The second best in this case is usually ICPL (with the only

exception of Prevayler). Regarding the computation time, ICPL is the fastest

algorithm followed by CASA in all the models. PGS is the slowest algorithm.

If we observe the similarity metric the trend is also clear: ICPL obtains the

lowest similarity measures in all the models. In summary, the results obtained

for the open source models support the same conclusions obtained for the SPLOT

models: CASA obtains the smallest test suites, ICPL is the fastest algorithm and

it also obtains the most dissimilar products.

7 Related Work

In the area of Search-Based Software Engineering a major research focus has

been software testing [26, 27]. A recent overview by McMinn highlights the major achievements made in the area and some of the open questions and challenges

that remain [28]. There exists substantial literature on SPL testing [3–6]; however, except for a few efforts [9,13], the application of search based techniques to

SPL remains largely unexplored. A related work on computing covering arrays

with a simulated annealing approach is presented by Torres-Jimenez et al. [29].

They propose improvements of the state-of-the-art techniques for Constrained

Combinatorial Interaction Testing (CCIT), an extension to CIT that also considers constraints on the inputs.

In the search based literature, there exists a plethora of articles that compare testing algorithms using different metrics. For example, Mansour et al. [30],

where the authors compare five algorithms for regression testing using eight different metrics (including quantitative and qualitative criteria). Similarly, Uyar

et al. [31] compare different metrics implemented as fitness functions to solve the

problem of test input generation in software testing. To the best of our knowledge, in the literature on test case generation there is no well-known comparison

framework for the research and practitioner community to use. Researchers usually apply their methods to open source programs and compute some metrics

directly, such as the success rate, the number of test cases, and performance. The

closest thing to a common comparison framework can be traced back to the work

of Rothermel and Harrold [32], who propose a framework for evaluating regression

test selection techniques.

8 Conclusions and Future Work

In this paper, we assess the comparison framework for pairwise testing of SPLs proposed by Perrouin et al. [14] on two search based algorithms

(CASA and PGS) and one greedy approach (ICPL). We used a total of 181

feature models from two different sources. We identified two shortcomings of the two novel metrics of this framework: similarity does not consider

features that are not selected in a product, and tuple frequency is applicable on

a per tuple basis only. Overall, CASA obtains the smallest test suites, ICPL is

the fastest algorithm and it also obtains the most dissimilar products.
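
To illustrate the first shortcoming, the following is a minimal sketch, assuming that similarity is a Jaccard-style ratio computed over the selected features of two products. The function names, the six-feature model, and the alternative measure that also counts jointly unselected features are hypothetical and serve only as an illustration; they are not part of the assessed framework.

```python
def similarity(p, q):
    """Jaccard-style similarity over the *selected* features of two products.

    Assumption: products are represented as sets of selected feature names.
    """
    union = p | q
    if not union:
        return 1.0  # two empty products are treated as identical
    return len(p & q) / len(union)


def similarity_with_unselected(p, q, all_features):
    """Hypothetical variant: a feature counts as agreement when both
    products select it or both products leave it unselected."""
    if not all_features:
        return 1.0
    agree = sum(1 for f in all_features if (f in p) == (f in q))
    return agree / len(all_features)


# Hypothetical feature model with six features and two products.
features = {"A", "B", "C", "D", "E", "F"}
p1 = {"A", "B"}
p2 = {"A", "C"}

# One shared feature (A) out of three in the union (A, B, C).
print(similarity(p1, p2))

# The products agree on A (both selected) and on D, E, F (both unselected).
print(similarity_with_unselected(p1, p2, features))
```

In this example the two products agree on four of the six features, yet the selected-only ratio reports one third, because the three features that both products leave unselected do not contribute to it.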

We plan to expand our work by considering other testing algorithms and larger

feature models and, most importantly, by researching other metrics that could

provide more useful and discerning criteria for algorithm selection. For this latter goal we will follow the guidelines for metrics selection suggested in [33]. In

addition, we want to study how to enhance the effectiveness of covering arrays

by considering information from the associated source code, along the lines of

Shi et al. [34].

Acknowledgments

This research is partially funded by the Austrian Science Fund (FWF) project

P21321-N15 and Lise Meitner Fellowship M1421-N15, and by the Spanish Ministry

of Economy and Competitiveness and FEDER under contract TIN2011-28194

(roadME project).

References

1. Pohl, K., Böckle, G., van der Linden, F.J.: Software Product Line Engineering:

Foundations, Principles and Techniques. Springer (2005)

2. Svahnberg, M., van Gurp, J., Bosch, J.: A taxonomy of variability realization

techniques. Softw., Pract. Exper. 35(8) (2005) 705–754

3. Engström, E., Runeson, P.: Software product line testing - a systematic mapping

study. Information & Software Technology 53(1) (2011) 2–13

4. da Mota Silveira Neto, P.A., do Carmo Machado, I., McGregor, J.D., de Almeida,

E.S., de Lemos Meira, S.R.: A systematic mapping study of software product lines

testing. Information & Software Technology 53(5) (2011) 407–423

5. Lee, J., Kang, S., Lee, D.: A survey on software product line testing. In: 16th

International Software Product Line Conference. (2012) 31–40

6. do Carmo Machado, I., McGregor, J.D., de Almeida, E.S.: Strategies for testing

products in software product lines. ACM SIGSOFT Software Engineering Notes

37(6) (2012) 1–8

7. Perrouin, G., Sen, S., Klein, J., Baudry, B., Traon, Y.L.: Automated and scalable

t-wise test case generation strategies for software product lines. In: ICST, IEEE

Computer Society (2010) 459–468

8. Oster, S., Markert, F., Ritter, P.: Automated incremental pairwise testing of software product lines. In Bosch, J., Lee, J., eds.: SPLC. Volume 6287 of Lecture

Notes in Computer Science., Springer (2010) 196–210

9. Garvin, B.J., Cohen, M.B., Dwyer, M.B.: Evaluating improvements to a metaheuristic search for constrained interaction testing. Empirical Software Engineering

16(1) (2011) 61–102

10. Hervieu, A., Baudry, B., Gotlieb, A.: Pacogen: Automatic generation of pairwise

test configurations from feature models. In Dohi, T., Cukic, B., eds.: ISSRE, IEEE

(2011) 120–129

11. Lochau, M., Oster, S., Goltz, U., Schürr, A.: Model-based pairwise testing for

feature interaction coverage in software product line engineering. Software Quality

Journal 20(3-4) (2012) 567–604

12. Cichos, H., Oster, S., Lochau, M., Schürr, A.: Model-based coverage-driven test

suite generation for software product lines. In: Proceedings of Model Driven Engineering Languages and Systems. (2011) 425–439

13. Ensan, F., Bagheri, E., Gasevic, D.: Evolutionary search-based test generation

for software product line feature models. In Ralyté, J., Franch, X., Brinkkemper,

S., Wrycza, S., eds.: CAiSE. Volume 7328 of Lecture Notes in Computer Science.,

Springer (2012) 613–628

14. Perrouin, G., Oster, S., Sen, S., Klein, J., Baudry, B., Traon, Y.L.: Pairwise testing

for software product lines: comparison of two approaches. Software Quality Journal

20(3-4) (2012) 605–643

15. Kang, K., Cohen, S., Hess, J., Novak, W., Peterson, A.: Feature-Oriented Domain Analysis (FODA) Feasibility Study. Technical Report CMU/SEI-90-TR-21,

Software Engineering Institute, Carnegie Mellon University (1990)

16. Lopez-Herrejon, R.E., Batory, D.S.: A standard problem for evaluating product-line methodologies. In Bosch, J., ed.: GCSE. Volume 2186 of Lecture Notes in

Computer Science., Springer (2001) 10–24

17. Lopez-Herrejon, R.E., Ferrer, J., Haslinger, E.N., Chicano, F., Egyed, A., Alba, E.:

Paper code and data repository (2013) http://neo.lcc.uma.es/staff/javi/resources.html.

18. Benavides, D., Segura, S., Cortés, A.R.: Automated analysis of feature models 20

years later: A literature review. Inf. Syst. 35(6) (2010) 615–636

19. Cohen, M.B., Dwyer, M.B., Shi, J.: Constructing interaction test suites for highly-configurable systems in the presence of constraints: A greedy approach. IEEE

Trans. Software Eng. 34(5) (2008) 633–650

20. Johansen, M.F., Haugen, Ø., Fleurey, F.: An algorithm for generating t-wise covering arrays from large feature models. In: 16th International Software Product

Line Conference. (2012) 46–55

21. Ferrer, J., Kruse, P.M., Chicano, J.F., Alba, E.: Evolutionary algorithm for prioritized pairwise test data generation. In Soule, T., Moore, J.H., eds.: GECCO,

ACM (2012) 1213–1220

22. Durillo, J.J., Nebro, A.J.: jMetal: A Java framework for multi-objective optimization. Advances in Engineering Software 42(10) (2011) 760–771

23. Siegmund, N., Rosenmüller, M., Kästner, C., Giarrusso, P.G., Apel, S., Kolesnikov,

S.S.: Scalable prediction of non-functional properties in software product lines:

Footprint and memory consumption. Information & Software Technology 55(3)

(2013) 491–507

24. SPLOT: Software Product Line Online Tools (2013) http://www.splotresearch.org.

25. Sheskin, D.J.: Handbook of Parametric and Nonparametric Statistical Procedures.

Chapman & Hall/CRC; 4th edition (2007)

26. Harman, M., Mansouri, S.A., Zhang, Y.: Search-based software engineering:

Trends, techniques and applications. ACM Comput. Surv. 45(1) (2012) 11

27. de Freitas, F.G., de Souza, J.T.: Ten years of search based software engineering: A

bibliometric analysis. In Cohen, M.B., Cinnéide, M.Ó., eds.: SSBSE. Volume 6956

of Lecture Notes in Computer Science., Springer (2011) 18–32

28. McMinn, P.: Search-based software testing: Past, present and future. In: ICST

Workshops, IEEE Computer Society (2011) 153–163

29. Torres-Jimenez, J., Rodriguez-Tello, E.: New bounds for binary covering arrays

using simulated annealing. Information Sciences 185 (2012) 137–152

30. Mansour, N., Bahsoon, R., Baradhi, G.: Empirical comparison of regression test

selection algorithms. Journal of Systems and Software 57 (2001) 79–90

31. Uyar, H.T., Uyar, A.S., Harmanci, E.: Pairwise sequence comparison for fitness

evaluation in evolutionary structural software testing. In: Genetic and Evolutionary

Computation Conference. (2006) 1959–1960

32. Rothermel, G., Harrold, M.J.: A framework for evaluating regression test selection techniques. In: Proceedings of the 16th International Conference on Software

Engineering. (1994) 201–210

33. Meneely, A., Smith, B.H., Williams, L.: Validating software metrics: A spectrum

of philosophies. ACM Trans. Softw. Eng. Methodol. 21(4) (2012) 24

34. Shi, J., Cohen, M.B., Dwyer, M.B.: Integration testing of software product lines

using compositional symbolic execution. In de Lara, J., Zisman, A., eds.: FASE.

Volume 7212 of Lecture Notes in Computer Science., Springer (2012) 270–284

35. de Almeida, E.S., Schwanninger, C., Benavides, D., eds.: 16th International Software Product Line Conference, SPLC ’12, Salvador, Brazil - September 2-7, 2012,

Volume 1. ACM (2012)

36. Whittle, J., Clark, T., Kühne, T., eds.: Model Driven Engineering Languages and

Systems, 14th International Conference, MODELS 2011, Wellington, New Zealand,

October 16-21, 2011. Proceedings. Volume 6981 of Lecture Notes in Computer Science., Springer (2011)