An Electricity Primer for Energy Economists: Basic - Econ

An Electricity Primer for Energy Economists:

Basic EE to LMP Calculation

Richard M. Benjamin

Round Table Group

January 23, 2013

Chapter 1

Basic EE and PTDFs

1.1

Introduction

This manuscript provides a technical primer for the energy economist wishing to study the optimal power flow problem and locational marginal pricing (LMPP), but, who like this paper’s author, finds it difficult to simply plow through Schweppe et al. (1988). This work aims to provide the reader with sufficient technical background to calculate power transfer distribution factors (PTDFs, or network shift factors) for a more complicated model than

the simple 3-node model common to energy economics studies.

The point of departure for this work is basic electrical engineering fundamentals. We start off Section 1.2 with a simple direct current (DC) circuit analysis, demonstrating Ohms law and Kirchhoffs laws.

As these are captured by very basic equations, we quickly move on to alternating current

(AC) analysis. Here, we spend quite a bit of effort manipulating fairly straightforward

(i.e. sinusoidal) AC voltage equations to derive equations for average voltage, current, and power in an AC circuit. We delve next into phase angles, showing how electrical components such as inductors and capacitors bring voltage and current “out of phase.” We then demonstrate both graphically and algebraically the effect of non-zero phase angles on resistance and power relations in an AC circuit. The goal of Section 1.2 is to familiarize the reader with concepts that economists and operations researchers generally take for granted, but are quite foreign to those of us who are self-taught.

Getting this background under our belts, we move on to DC network analysis, taking this intermediate step to demonstrate circuit concepts to the reader in the simple setting of a DC network. We then introduce the reader to the linearized version of the AC network model in Section 1.3. In this section we demonstrate a methodology for linearizing the real-power

1

See e.g.

Bushnell and Stoft (1997) for a detailed analysis of the three-node model.

1

AC network equations (the latter of which we derive in Appendix A). After linearizing the AC network equations, we quickly demonstrate DC sensitivity analysis in section 1.4.

We conclude Chapter 1 by demonstrating the calculation of the PTDFs for the linearized

(DC) version of the AC network in section 1.5. Sections 1.2, 1.3, and 1.5 of this chapter aim to provide the reader with sufficient technical background to understand basic power equations, as presented in energy economics papers, along with deriving PTDFs. Section

1.4 offers a conceptual preview of locational marginal price calculation, as demonstrated in chapter 3.

In Chapter 2, we present the AC power flow model and a powerful method for solving the

AC power flow problem, the Newton-Rhapson Method. Chapter 2 serves two purposes.

First, it familiarizes the energy economist with the type of problems setup that one sees in electricity papers written by operations research analysts.

The economist spending any time at all in the study of electricity will almost certainly run into such, and this chapter provides some nice background material. Second, the method we use to solve the DC optimal power flow algorithm corresponds to a single run of the Newton-Rhapson algorithm for solving nonlinear equations. Thus, this serves as valuable material for the economist wishing to place our solution method in its proper context.

In Chapter 3 we demonstrate LMP calculations. Fortunately, chapters 1 and 2 do most of the ”heavy lifting” of AC optimal power flow setup, allowing the reader to focus on the specifics of LMP derivation, while simply reviewing OPF principles.

1.2

Electrical Engineering Fundamentals

We begin our power flow analysis with a DC series circuit.

In a basic series circuit, a source of electromagnetic force (emf) is connected to one or more sources of resistance

(e.g. light bulbs), which form a circuit, as shown below:

− +

Battery

R b

R a

Figure 1.1: A Basic DC Circuit

2

Other DC circuits include paralled circuits, where current flow splits at junctures into parallel paths.

2

In a DC circuit current flows in one direction only: from negative to positive (as opposed to AC, where current alternates direction several times per second). Thus, current flows in a counterclockwise direction in the example above. The mathematics of this circuit are governed by three laws: Ohm’s Law, Kirchhoff’s Current Law (KCL), and Kirchhoff’s

Voltage Law (KVL). These three laws are as follows: Ohm’s law: current, resistance and voltage in a circuit are related as follows:

V = I × R.

(1.1) where:

• V is voltage, or emf, measured in volts,

• I is current, measured in amperes, and

• R is resistance, measured in ohms.

Applied to our analysis, Ohm’s law reveals that the voltage “drop” across resistors a and b is equal to the resistor’s resistance value times the current flowing through the resistor.

Kirchhoff’s voltage law (KVL): The directed sum of the electrical potential differences (voltage) around any closed circuit is zero.

Kirchhoff’s current law (KCL): At any node in an electrical circuit, the sum of currents flowing into that node is equal to the sum of currents flowing out of that node.

Kirchhoff’s laws are both conservation laws.

KVL states that the sum of the voltage dropped across the two resistors is equal to the voltage produced by the current source

(battery). KCL is likewise the law of conservation of current. For a circuit with only one path, KCL implies that current is constant throughout the circuit. Given these three laws and sufficient values for the circuit, we may solve for the remaining unknown values.

Assume that we have a 9-volt battery, the value of the first resistor, R a

, is 3 ohms(Ω), and an ammeter yields a measurement of 2 amps. We are tasked with finding the remaining values. The easiest, most organized, answer is to fill in these numbers on a table, as shown below:

Table1.1: V , I , and R Calculations

R a

R b

V 6 3

Total

9

I 2 2 2

R 3 1.5

4.5

(numbers in bold are given)

3

By KCL, current at all points in the circuit is equal to 2 amps.

Since we are given

R a

= 3Ω , Ohm’s law allows us to solve for the voltage drop across this resistor as 6 volts.

That is, running 2 amps through a 3 ohm resistor produces 6 volts of force. Next, KVL tells us that the 9 volts produced by the battery dissipate across the two resistors. Since the first resistor dissipates 6 volts of force, the second one must dissipate 3 volts, or V b

= 3.

Further application of Ohm’s law reveals that the second resistor is a 3 / 2 = 1 .

5Ω resistor.

Ohm’s law also applies to the circuit as a whole. So, if a 9-volt battery produces 2 amps of current, total resistance in the circuit is 9 / 2 = 4 .

5Ω. This reveals another law of series

circuits. Resistance, as well as voltage, is additive.

While batteries are a DC voltage source, most electrical devices run on AC. An AC generator, or alternator, produces alternating current as a magnetic field rotates around a set of stationary wire coils. When the magnet starts its rotation, at the reference angle of zero degrees, it is completely out of alignment with the coil. It moves closer into alignment until it reaches an angle of 90

◦

, and moves further out of alignment again. Because the electromagnetic force, or voltage, created in this operation varies directly with the degree of alignment between the magnet and the coil, we may (for the present) express instantaneous voltage mathematically as:

(1.2) ν ( t ) = V max sin( ωt ) , where:

• V max is maximum instantaneous voltage, or voltage amplitude,

• ω

is angular frequency, in radians per second, 5

and

• t is time, in seconds.

Multiplying ω (radians per second) times t , (seconds), gives us the angle covered by the magnet in t

seconds. Thus, we may write (1.2) as either

ν ( t ) = V max sin( ωt ), or

ν ( t ) = V max sin( θ ) , (1.3) where θ is the familiar symbol for measuring angles (e.g.: An alternator with an amplitude of 100 volts, produced by a magnet turning one radian per second, has an instantaneous voltage of ν (1) = 100 sin(1) = 84 .

15 volts at t = 1 second). Note that voltage is

3

When we look at multiple lines across which current flows (known as parallel circuits), this relation will no longer hold because current will split along parallel paths. KCL then implies that the sum of the currents along parallel paths is equal to current running through a single path (so that current is preserved).

4

This rule does not hold for parallel circuits (although it does hold for parallel components in series).

See, e.g. Dale (1995).

5

For those of us who have long since forgotten, there are 2 π radians in a circle, so a radian equals approximately 57.3. Thus we may also write ν ( t ) = V max sin(2 πf t ) , where f is frequency, in rotations per second.

4

important in the study of optimal power flow because it is a determining factor of the power a generator produces, that is,

P = I × V.

(1.4) where:

P

From Ohm’s law, we can substitute for either V or I , giving us two alternative expressions for power:

P = I

2

R and P =

V

2

.

R

As resistance is a many-headed beast, for now we will focus on the derivation of power given

by (1.4). The most basic resistance source (the resistor) has a fixed resistance. Ohm’s law

tells us that in a purely resistive circuit, voltage and current will vary only by a constant of proportionality (the fixed resistance). Therefore, since the formula for voltage is given

by (1.2), the formula for current is given by:

i ( t ) = I max sin( ωt ) , (1.5) where:

I max is maximum current, or current amplitude.

Using (1.4), we combine (1.2) and (1.5) to yield the formula for instantaneous AC power:

p ( t ) = V max sin( ωt ) · I max sin( ωt ) .

(1.6)

Using trigonometric identities, we may write sin

2

( x ) as

1

2

(1 − cos 2 x ). Thus we rewrite p ( t ) =

1

2

V max

I max

−

1

2

V max

I max

(cos 2 ωt ) .

(1.7)

Of course, when examining a generator’s power output, we are not concerned with its instantaneous value, which varies continuously, but rather its average value.

Looking

at (1.7), we see that instantaneous power has both a fixed (

( − 1

2

V max

I max

1

2

V max

I max

) and a variable cos(2 ωt )) component. Since, like that of sin x , the average value of cos x is zero over one full cycle, the average value of power reduces to the first component:

P avg

= −

1

2

V max

I max

.

6

We get the much more familiar expression, P = I × E , by denoting voltage as E rather than V

(1.8)

5

Alternately, we may calculate the average value of power directly from the formulas for average values of voltage and current, which we do below. By inspection, we will not get

very far using either (1.2) or (1.5) directly, because we run into the same phenomenon of

zero average value for a sinusoidal signal. This is unimportant, however, as the sign of the

voltage or current in question is altogether arbitrary.

Intuitively, one will feel the same shock whether a voltmeter registers − 50 V or +50 V (don’t try this at home). We get around the sign problem for voltage and current by squaring the instantaneous values of each and

integrating to arrive at their respective averages. Starting with (1.3),

ν ( t ) = V max sin( θ ), we are reminded that the magnet travels 2 π radians per complete rotation. Therefore, the average value for voltage-squared over one complete cycle is:

V

2 max

2 π

Z

2 π sin

2

θ d θ =

0

V

2 max

2 π

Z

2 π

0

1 − cos (2 θ )

2 d θ.

Since the integral of cos(2 plete cycle is V

2 max

/ 2.

x ) is

1

2 sin(2 x ), the average value of voltage-squared over a com-

Taking the square root of this value, we find that the average value for voltage is:

V avg

= | V | =

V

√

2

Going through the same steps, we find that the average value for current is:

(1.9)

I avg

= | I | =

I

√

2

.

Multiplying (1.9) and (1.10), we again find that

P avg

(1.10)

If electrical systems contained only voltage sources, wires, and resistance sources (e.g., resistors and load), the world would be a happy place for the energy economist, with current and voltage always and everywhere in phase. Unfortunately (for us) though, electrical devices contain capacitors and inductors, casting us out of the Eden of synchronous voltage

Let us begin by demonstrating how inductors bring voltage and current out of phase, thus creating a non-zero phase angle. Inductors (typically an inductor is a conducting wire shaped as a coil) oppose changes in current, as expressed by the formula:

V = L d I d t

= L I max cos( ωt ) , (1.11)

7

In a DC circuit, for example, a voltmeter may register a positive or negative value, depending on the reference point.

8

See, e.g. Mittle and Mittal (2006), Section 6.4.1 6.4.2.

10 Inductors and capacitors, of course, do serve useful purposes, such as storing electrical energy and filtering out specific signal frequencies, but that is beside the point.

6

where:

L is inductance, measured in Henrys.

(1.11) states that the voltage drop across an inductor is equal to the inductors inductance

times the rate of change of current over time. To see how this creates a phase shift between

current and voltage, we must refer to the latter two’s sinusoidal nature.

V, I

Instantaneous voltage

Instantaneous current time

Figure 1.2: Instantaneous AC Current and Voltage Perfectly in Phase

Since d I

at a maximum or a minimum, from (1.11) instantaneous voltage,

ν ( t ), is equal to d t zero when I = ( I max

, − I max

). Thus, voltage and current are 90

◦ out of phase for an purely inductive current, as shown below:

V, I

Instantaneous voltage

Instantaneous current time

Figure 1.3: Instantaneous AC Current and Voltage in a Purely Inductive Circuit

Note that in this case we have set R = 1Ω, simply for aesthetics. Note further that we may demonstrate the phase shift for an inductor by recognizing that instantaneous voltage is

11

Note that V > I

in Figure(1.2). Therefore, from (1.1), it must be the case that

R > 1.

7

at its maximum or minimum synchronously with d I d t

.

of inflection, which occur at i ( t ) = 0.

d I d t reaches its extrema at its points

While we may still write i ( t ) = I max sin θ , it is no longer the case that v ( t ) = V max sin θ .

Taking advantage of the fact that cos θ = sin θ + 90

◦

, we write: i ( t ) = I max sin θ, v ( t ) = V max sin( θ + 90

◦

) .

In this instance we say that voltage leads current by 90

◦

, or equivalently, current lags voltage by 90

◦

. More generally, we write: v ( t ) = V max sin( θ + δ ) , where δ ∈ (0

◦

, 90

◦

] is commonly known as the phase angle between voltage and current.

Like inductors, capacitors also introduce phase angles between current and voltage. A capacitor contains two conductors separated by an insulator.

Current flow through a capacitor is directly related to the derivative of voltage with respect to time, as follows: where: i ( t ) = C d V

, d t

C is capacitance, measured in Farads.

(1.12)

Capacitance will depend on the materials composing the insulator. From (1.12), we see

that instantaneous current will be zero when voltage is at its maximum or minimum values, and will reach its maximum and minimum values at the inflection points for voltage.

Graphically, the relationship between voltage and current in a capacitor is as shown below:

V, I

Instantaneous voltage

Instantaneous current time

Figure 1.4: Instantaneous AC Current and Voltage in a Purely Capacitive Circuit

8

In a capacitor, current lags voltage by 90

◦

, and thus we may write:

ν ( t ) = V max sin( θ − 90

◦

) .

Note the difference between a resistor versus an inductor or capacitor. A resistor simply opposes the flow of current, like sand opposes the flow of water. An inductor or capacitor, however, does not oppose a constant flow of current. Inductors oppose changes in current flow , while capacitors oppose changes in voltage , both at angles of | 90 | ◦

. The name for the opposition exerted created by inductors and capacitors is reactance, denoted by the symbol X and measured in ohms. For an inductor, we denote reactance by a vector | 90 |

◦ out of phase with current, creating a | 90 | ◦ phase angle between voltage and current, as shown in Figure 1.5.

Rectance

V

90

◦

I

Figure 1.5: Geometric Representation of Reactance

In general, electrical circuits will not simply contain inductors or capacitors or resistors, but some combination of the three. Therefore, real-world electricity systems will usually be characterized by phase angles of other than 0

◦ or 90

◦

. To determine the angles for such systems, we add the vectors for reactance and resistance, arriving at a quantity called impedance, denoted by Z and measured in ohms. We demonstrate a circuit with a 4 ohm resistor and a 3 ohm inductor, in Figure 1.6, below:

9

Z = 5Ω

X = 3Ω

θ

R = 4Ω

Figure 1.6: Geometric Representation of Impedance

Impedance is the vector sum of resistance and reactance. A circuit with a 4Ω resistor and a 3Ω inductor will thus have total impedance equal to 5Ω.

While we have correctly calculated impedance as 5Ω, it is more common to denote impedance not only by its magnitude, but also its direction as well. We can do this by referring to either polar or rectangular notation. Polar notation uses the vector compass to express the variable of interests quantity in terms of magnitude and direction (that is, phase angle).

To compute the phase angle for impedance, we simply calculate the angle corresponding to this quantity. We express impedance in this case as:

Z = p

4 2 + 3 2 Ω at phase angle δ, or Z = 5Ω at [cos

− 1

(0 .

8)]

◦

= 5Ω at 36 .

87

◦

.

Thus, we would express this example’s impedance in polar notation as:

Z = 5Ω ∠ 36 .

87

◦

(1.13)

(alternatively, the combined effect of the resistor and inductor is to produce an impedance of 5Ω at 36 .

87

◦

).

Notice that no information is lost in switching to polar notation. We may go the other way to calculate the resistive and reactive components of impedance as follows:

Resistance = 5 cos(36 .

87

◦

) = 4Ω ,

Reactance = 5 sin(36 .

87

◦

) = 3Ω .

This yields a second way to express impedance: in terms of resistance plus reactance. We do this using rectangular notation. To switch from polar to rectangular notation graphically, we switch from using the vector compass to representing the horizontal axis as real units, and the vertical axis as imaginary units. In doing so, we place resistance (to real power) on the horizontal axis and reactance on the vertical, as shown below:

10

Imaginary

Axis

Z = 5Ω

X = 3 j Ω

R = 4Ω Real Axis

Figure 1.7: Impedance in Rectangular Form

Thus, we write Z = 4 + 3 j

Having introduced impedance, we may compute power in a mixed resistive/reactive circuit.

Let us then draw a circuit with a 4-ohm resistor ( R ) and a 3-ohm inductor ( L ) (alternatively, reactor):

R = 4Ω ∠ 0

◦

Resistor

L = 3Ω ∠ 90

Inductor

◦

∼

Figure 1.8: AC Circuit with Resistance and Reactance

The symbol,

∼

, represents an AC voltage source. As is standard, we will assign a phase angle of 0

◦ to the voltage source, with the phase angle and magnitude of impedance given

by (1.13). Given total voltage and impedance, we apply Ohm’s law as applied to AC

12 In electrical engineering,

√

− 1 is commonly denoted as j , instead of i . The change in axes accounts for the (initially) confusing switch in the formulas for voltage and current from v ( t ) = V max sin θ to v ( t ) =

V max cos θ . The real quantity is now the adjacent, not the opposite.

11

circuits ( V = IZ

I =

V

I

=

20V ∠ 0

◦

5Ω ∠ 36 .

87 ◦

= 4 A ∠ − 36 .

87

◦

From here, calculating the remaining voltages is straightforward (answers in Table 1.2, below).

Z

Table 1.2: V , I , and Z Calculations

R

V 16 ∠ − 36 .

87

◦

I 4 ∠ − 36 .

87

◦

4 ∠ 0

◦

L

12 ∠ + 53 .

13

◦

4 ∠ − 36 .

87

◦

3 ∠ 90

◦

Total

20 ∠ 0

◦

4 ∠ − 36 .

87

◦

5 ∠ − 36 .

87

◦

Finally, let us move on to AC power calculation. Real power is the rate at which energy is expended. According to Grainer and Stevenson (1994), reactive, or imaginary power,

“expresses the flow of energy alternately toward the load and away from the load.” 14

Intuitively, we can divide a power generator’s (or simply, “generator’s”) output into power capable of doing work (real power), and power not capable of doing work (reactive power).

Reactive power is the result of current moving out of phase with voltage. The greater the phase angle between voltage and current, the less efficient is power output in terms of capability to do work, and the greater is reactive power. Apparent, or complex, power is the (geometric) sum of real and reactive power. Thus, we derive the “power triangle” in the same manner as we derived the relationship between resistance, reactance, and impedance:

13

Again, since there is only one pathway for current, the amount amperage is constant across the entire circuit. The angle, − 36 .

87

◦

, is the amount by which current lags voltage.

14 p. 8. The exact nature of reactive power is not well understood.

12

Imaginary

Axis

Complex Power S = P + jQ

Reactive Power Q = 0 + jQ

Real Power P = P + j 0 Real Axis

Figure 1.9: Complex Power (the “Power Triangle”)

Calculating complex power in rectangular notation is straightforward: Simply take real power plus reactive power equals complex power, or S = P + jQ .

Calculating complex power in polar form is a bit more complicated. Taking the phasors for voltage and current as V = | V | ∠ α and I = | I | ∠ β the formula for complex power is:

V I

∗

= | V I | ∠ α − β, where

∗ denotes the complex conjugate (as we will demonstrate shortly). Note that the angle, α − β , is once again the phase angle between voltage and current, as may be verified by designating voltage as the reference phasor.

To see why we take the complex conjugate of current (i.e., we switch the sign on the angle for current), let us turn to Appendix A eqs.(1A.5) and (1A.6) for reactive and complex power:

Q = | I |

2

× X,

S = | I |

2

× Z.

Dividing (1A.5) by (1A.6):

Q

S

=

Q

.

Z

That is, the cosine of the phase angle for impedance is equal to that of the power triangle, and thus the phase angles themselves are equal. Since the phase angle for impedance is

α − β , the phase angle for the power triangle is this quantity as well. But we have to switch the sign on the current phasor to maintain this result.

13

Euler’s identity: e jθ

= cos θ + j sin θ offers further insight into both rectangular and polar representation of electrical quantities such as impedance and power. Resistance is equal to | Z | cos θ , and reactance is equal to | Z | sin θ . If we denote the horizontal axis as the real number line, and the vertical axis as the imaginary number line, we may express impedance as:

Z = | Z | cos θ + j | Z | sin θ = | Z | e jθ

, and complex power as:

S = | I | 2 | Z | e jθ

.

1.3

DC Network Calculations

1.3.1

Nodal Voltages and Current Flows

Having demonstrated the basics of voltage, current, resistance, and power, we may demonstrate the calculation of these values in circuit analysis. We start with a DC circuit, then move on to its AC counterpart. First let us specify the network itself. We will examine an

elementary circuit, called a ladder network, as shown below:

R b

R d

R f

I

1

R a

R c

R e

R g

I

4

0

Figure 1.10: A Five-node Ladder Network

A network is composed of transmission lines, or branches. The junctions formed when two or more transmission lines (more generally, circuit elements) are connected with each other

15

Taken from Baldick (2006, p. 163 et. seq.

)

14

are called nodes (e.g., in the figure above, line 12 connects node 1 with node 2). In the above example, then, there are seven transmission lines [12, 23, 34, 01, 02, 03, and 04] connecting five nodes [0, 1, 2, 3, 4].

Denote:

N = Number of nodes in a transmission system, k = Number of lines in a transmission system,

R ij

= Resistance of transmission line connecting nodes i and j; or

R

`

= Resistance of transmission line ` ,

Y

`

= Admittance of transmission line ` ,

I j

= Current source, located at node j,

I ij

V j

= Current flowing over line ij,

= Voltage at node j,

V ij

= Voltage differential between nodes i and j

(also known as the “voltage drop”” across line ij ).

Note that all of the above network’s N = 5 nodes are interconnected, directly or indirectly, by transmission lines and are subject to Kirchhoff’s laws. Since the (directional) voltage drops across the system must sum to zero, satisfaction of KVL takes away one degree of freedom in the system. Thus we need write voltage equations for only N − 1 nodes to fully identify an N -node network. KVL also allows us to choose one node as the datum (or ground) node, and set voltage at this node equal to zero. The researcher is free to choose any node as datum. To minimize computational cost, one generally chooses as datum the node with the most transmission lines connected to it. It is customary to denote the datum node as node 0.

There are two current sources in this circuit, I

1 and I

4

. The current sources are generators located at nodes 1 and 4. These generators are connected to the transmission grid by lines 01 and 04, or, alternatively, lines a and g . These lines are generally denoted “limited

In the electrical engineering literature, such lines are known

The ladder network above might represent, say, a transmission

16 Generally, limited interconnection facilities’ sole purpose is to interconnect power plants to the grid.

Such facilities are not required to provide open access to customers wishing to transmit power over the former. However, the distinction between a limited interconnection facility and a transmission line which must provide open access (that is, a facility which must submit an Open Access Transmission Tariff to the FERC) can become blurry when the “limited interconnection facility” is, say, a 40+ mile long line.

This was the case with the Sagebrush line (see, e.g. the case filings before the Federal Energy Regulatory

Commission in docket numbers ER09-666-000 and its progeny (e.g. ER09-666-001). Two relevant orders in these cases are found in 127 FERC ¶ 61,243 (2009) and 130 FERC ¶ 61,093 (2010).

17

See, e.g.

, Glover et al. (2012), section 4.11, and Grainger and Stevenson (1994), chapter 6.

15

line stretching across New York State, where node 1 represents Buffalo, node 2 represents

Rochester, node 3 represents Syracuse, and node 4 represents Albany. Node 0 then, actually represents four physically distinct locations, where generators at or around these four cities are connected to the transmission grid by limited transmission interconnection facilities.

Again, in standard electrical engineering parlance, node 0 is the ground “node.”

Note that one may label the resistance associated with a particular transmission line by naming the nodes the line connects, or simply assigning the line its own, alternative, subscript. Resistance in transmission lines is the opposition to current as current bumps into the material composing the transmission lines. Good conductors, or materials that offer relatively little resistance, are ideal candidates for transmission lines. Metals with free electrons, like copper and aluminum, make good conductors as they provide little resistance.

Before we analyze the equations corresponding to Kirchhoffs Laws, let us introduce the concept of admittance. Admittance measures the ease with which electrons flow through a

circuit’s elements, and is the inverse of impedance.

Since there are no reactive components in our example, though, impedance and resistance are equivalent. We thus loosen our terminology and let admittance denote the inverse of resistance. Labeling admittance by

1

Y , we thus have Y = .

Ohm’s Law is then:

R

I =

1

R

V = Y × V.

Ohm’s Law allows us to write current flow along a particular line as a product of the voltage drop between the two nodes that the line connects and the admittance of the line.

It thus yields the flow of current across the k = 7 transmission lines in our example. To start, we write current flow along line 10, I

10

, as:

I

10

= V

10

Y

10

= ( V

1

− V

0

) Y

10

= V

1

Y

10

(1.14)

(since V

0

= 0). We write current flow across the other six lines analogously:

I

12

= ( V

1

− V

2

) Y

12

,

I

20

I

23

= V

2

Y

20

,

= ( V

2

− V

3

) Y

23

,

I

30

= V

3

Y

30

,

I

34

= ( V

3

− V

4

) Y

34

,

I

40

= V

4

Y

40

.

18 The inverse of resistance is actually conductance.

(1.15)

(1.16)

(1.17)

(1.18)

(1.19)

(1.20)

16

KCL expresses the conservation of current at the ( N − 1) = 4 nodes. That is, KCL states that the sum of the currents entering a node equals the sum of currents exiting that node.

We will use the convention that only current sources (generators) produce current entering a node, while all transmission lines carry current away from that node. Let us begin with node 1. Current I

1 enters node 1, while transmission lines 10 and 12 carry current “away” from this node. Thus KCL implies that I

1

= I

10

+ I

12

. Note that I ij denotes flow of current from node i to node j . If the actual current flow is in the opposite direction i.e.

, from node j to node i , we write I ij

< 0. Otherwise, I ij

≥ 0. The important point is that we specify the assumed direction of current flow by the ordering of the subscripts.

Because we do not incorporate load in this example,

we will have I

1

≥ 0.

I

1

= 0 iff the current source is not currently operational, as when a generator is shut down for maintenance.

I

10 and I

12 may be either positive or negative, depending on the direction

of current flow. Substituting (1.14) and (1.15) for

I

10 and I

12

, respectively, yields:

I

1

= V

1

· Y

10

+ ( V

1

− V

2

) Y

12

.

Grouping the voltage terms yields:

I

1

= ( Y

10

+ Y

12

) V

1

− Y

12

V

2

.

(1.21)

There is no current source at node 2, simply three transmission lines which, by convention, carry current away from this node. Our three current equations for lines 21, 20, and 23 are then, respectively:

Y

21

( V

2

− V

1

) = I

21

,

Y

20

( V

2

) = I

20

,

Y

23

( V

2

− V

3

) = I

23

.

Let us remark at this point that Y

Applying KCL to node 2 yields I

21 ij

+

= Y ji

, i.e.

, admittance is not a directional value.

I

20

+ I

23

or:

− Y

21

V

1

+ ( Y

21

+ Y

20

+ Y

23

) V

2

− Y

23

V

3

= 0 .

(1.22)

The alert reader will notice that node 3 is similar to node 2, in that there is no current source and three branches emanating there. Thus KCL generates:

− Y

32

V

2

+ ( Y

32

+ Y

30

+ Y

34

) V

3

− Y

43

V

4

= 0 .

(1.23)

19 If there were a load located at node 1, then current flow from node 1 would be negative (i.e., net current would flow to node 1, rather than away from it) whenever node 1 load is greater than node 1 generation.

20

This is a likely explanation for the single-subscript notation for resistance and admittance common in

EE texts.

21

Note that this equation does not imply that no current flows through node 2. It states that current traveling toward node two, which takes on a negative sign, equals the amount of current flowing out of node

2 (which has a positive sign), and thus the principle of conservation of current.

17

Finally, our treatment of node 4 is symmetric to node 1, producing:

− Y

43

V

3

+ ( Y

43

+ Y

40

) V

4

= I

1

.

(1.24)

Note that (1.21) — (1.24) form a system of four equations in four unknowns, which we

express in matrix form below (after switching to single subscript notation for line admittances, for expositional ease):

Y a

+ Y b

− Y b

0

0

− Y b

Y b

+ Y c

+ Y d

− Y d

0

0

Y d

− Y d

+ Y e

+ Y f

− Y f

0 V

1

0

Y f

− Y f

+ Y g

V

2

V

3

V

4

=

I

1

0

0

.

I

4

(1.25)

The LHS matrix is known as the admittance matrix. The (square) admittance matrix is usually denoted as A ∈

R n × n

, with generic element A ij

.

V ∈

R n and I ∈

R n are the vectors of unknown voltages and known current injections at each of the networks nodes, respectively.

Given values for admittances and current injections, we may solve for nodal voltages and current flows over each line. As the simplest example, consider resistances of one unit for each resistor in the circuit and current injections of one unit at both nodes 1 and 4. In this case,

Y i

=

1

R i

= 1 ∀ i = a, b, . . . , g.

The system to be solved is given in matrix form below:

2 − 1 0 0 V

1

− 1 3 − 1 0

0 − 1 3 − 1

V

2

V

3

0 0 − 1 2 V

4

1

=

0

0

,

1

(1.26) yielding V

∗

0

=

"

2

3

1

3

1

3

2

#

.

3

18

We may now solve for current flows across all lines in the network, using (1.14) – (1.20).

I

12

I

23

=

I

I

10

Y b

20

=

( V

1

=

Y a

− V

Y c

V

2

V

1

) =

2

=

=

2

3

,

1

3

,

1

3

,

= Y d

( V

2

− V

3

) = 0 ,

I

I

30

40

=

=

Y

Y e g

V

V

3

4

=

=

1

3

,

I

34

= Y f

( V

3

− V

4

) = −

1

3

,

2

3

.

(all currents in amps). The negative sign on I

34 means that current is flowing in the opposite direction than we assumed (i.e., current is flowing from node 4 to node 3, not from node 3 to node 4). One may easily check that KCL is indeed satisfied at the four nodes.

1.4

A Quick DC Sensitivity

Sensitivity analysis is the study of how the value of a problem’s solution, or some function of that solution, changes as we tweak the value(s) of either the solution (vector) or some other model parameter. As a quick introduction to sensitivity analysis, we examine the sensitivity of the vector of voltages in the ladder circuit problem when we change the current injected at a single node. We do this analysis to demonstrate a basic method for calculating sensitivities ( e.g.

a locational marginal price is an example of a sensitivity).

To calculate the sensitivity of a solution to a change in a variable , we start with a square system of equations Ax = b (in our example, A ∈

R

4 × 4

, x ∈

R

4 × 1

, and b ∈

R

4 × 1

Since we are examining the sensitivity of the solution of this system, we assume such a solution exists, and denote it as x

∗

( 0 ), or the “base case” solution. We denote the amount by which we wish to change a given variable as χ ∈

R

1

. At the base case solution, none of the problem inputs have changed at all. Therefore, the base case corresponds to χ = 0

A non-zero value for χ will refer to a “change case.” For example, we may change an

22

We assume the reader is familiar with matrix algebra, so we do not go into details such as conditions for matrix invertibility.

23

The notation is, admittedly, a bit squirrelly, since we change only one variable when performing a

19

admittance from Y i to Y i

+ χ , or change a current source from I j to I j

+ χ . Let us denote the change-case equation as:

A ( χ ) x

∗

( χ ) = b ( χ ) .

(1.27)

That is to say, the coefficient matrix and right hand side vector are now dependent on (or functions of) χ .

We calculate a sensitivity for x

∗

(0) as the partial derivative of the original solution vector

( i.e.

, the base case) to a change in a specific variable, the latter of which we denote as χ j

.

First, since the base case corresponds to no change in any variable, we will denote the base case equation as:

A ( 0 ) x

∗

( 0 ) = b ( 0 ) .

To calculate the sensitivity of the original solution to a change in a variable, solve for the derivative of the base-case solution with respect to the variable in question. To evaluate

this derivative, start by totally differentiating (1.27):

∂ A ( 0 ) x

∗

( 0 ) d χ j

+ A ( 0 )

∂χ j

∂ x

∗

( 0 )

∂χ j d χ j

=

∂ b ( 0 )

∂χ j d χ j

.

Since the d χ j terms all cancel, we solve the remaining equation for

∂ x

∗

( 0 )

, obtaining:

∂χ j

∂ x

∗

( 0 ) = [ A ( 0 )]

− 1

∂χ j

∂ b

∂χ j

∂ A

( 0 ) −

∂χ j

( 0 ) x

∗

.

Let us return to the example shown in (1.25) - the ladder network with current injections

I

1 and I

4 equal to one amp each, and admittances Y a

— Y g wish to calculate the sensitivities of the voltages obtained, ( x equal to one unit each. We

∗

)

0

=

"

2

3

1

3

1

3

2

3

#

, to a change in the node 1 current source from below:

I

1 to I

1

+ χ j

. We show this change in (1.28),

Y a

+ Y b

− Y b

0

0

Y b

− Y

+ Y c

0 b

− Y d

+ Y d

Y d

0

− Y d

+ Y e

+ Y f

− Y f

0 V

1

0

− Y f

Y f

+ Y g

V

2

V

3

V

4

=

I

1

+ χ j

0

0

.

I

4

(1.28) sensitivity analysis. And yet we alternatively speak of “ A ( 0 )” and“ A ( χ ).” Perhaps the best explanation is that as soon as we perturb one variable, designated as χ , the original solution vector no longer holds.

20

Notice that

∂ A

∂χ

· = 0 and

∂ b

∂χ j

0

· = [1 0 0 0]. Finally, inverting the matrix, as found

on the lhs of (1.26), and multiplying, we obtain:

∂ x

∗

( 0 ) = [ A ( 0 )]

− 1

∂χ j

∂ b

∂χ j

( 0 ) =

0 .

6191

0 .

2381

0 .

0952

.

0 .

0476

1.5

The DC Approximation to the AC Network: Calculating

PTDFs

We started with DC network calculations to familiarize the reader with concepts used in the analysis of an AC Network. Electrical engineers, intrepid folks that they are, will actually

set up and solve AC power flow problems as systems on non-linear equations.

Energy economists generally study linearized versions of the AC power equations, though. The obvious advantage of linearizing these systems is that one need not run iterative algorithms to find the critical points for systems of non-linear equations.

This section focuses on only one element of linearized AC analysis, the calculation of

PTDFs. Doing so, we will present the reader important concepts such as the Jacobian of power balance equations (as derived in Appendix A), noting the similarity between this matrix and the admittance matrix of the linearized AC system (derived in Appendix B).

We believe that the advantage of this approach is that it is incremental. We introduce the reader to these important concepts in the context of the evaluation of a single system of equations, rather than throwing the reader the several derivations necessary to solve the optimal power flow problem all at once.

1.5.1

Linearizing AC Power Equations

We derive the equations for power balance and power flow across transmission lines in

Appendix A. (1A.8) and (1A.9), shown below:

P

Q

`

`

=

= n

X u

` u k

[ G

`k cos( θ

`

− θ k

) + B

`k sin( θ

`

− θ k

)] , k =1 n

X u

` u k

[ G

`k sin( θ

`

− θ k

) − B

`k cos( θ

`

− θ k

)] , k =1

24

Well, perhaps they are not that intrepid, since available computer packages solve the problems for them!

21

are called power flow equality constraints,

and must be satisfied at each bus ` in order for

the power system to maintain a constant frequency.

(1A.12) and (1A.13) display power flow across transmission lines as:

˜

`k

= ( µ

`

)

2

G

``

+ u

` u k

[ G

`k cos( θ

`

− θ k

) + B

`k sin( θ

`

− θ k

)] , q

`k

= − ( µ

`

)

2

B

``

+ u

` u k

[ G

`k sin( θ

`

− θ k

) − B

`k cos( θ

`

− θ k

)] .

The linearized AC model is generally known as the DC approximation to the AC network.

Thus, in this model terms such as reactive power and impedance are assumed away. We make two simplifying assumptions to transform the complex admittance matrix to its DC approximation: we set its real terms, G

`k

, equal to zero and ignore the shunt elements

( i.e.

, the terms corresponding to node 0, or the ground). This reduces (1A.8) and (1A.12) to:

P

` p

`k

= n

X u

` u k

[ B

`k sin( θ

`

− θ k

)]

k =2

= u

` u k

[ B

`k sin( θ

`

− θ k

)] .

(1.29)

(1.30)

We finish linearizing these equations by assuming that the base-case solution (the point of reference for our derivatives) involves zero net power flow at nodes 2 — n , and all voltage magnitudes equal to one per unit, so that u ( 0 ) = 1

. This reduces (1.29) and (1.30) to:

P

`

= n

X

B

`k sin( θ

`

− θ k

) , k =2

˜

`k

= B

`k sin( θ

`

− θ k

) .

(1.31)

(1.32)

At this point we deviate from the standard methodology by linearizing these equations about the base-case solution for (real) power. As per Baldick (2006, p. 343), we define a flat start as the state of the system corresponding to phase angles equal to zero at all nodes and all voltage magnitudes equal to one (which, in fact, is the base-case solution to the

problem when we ignore the circuits shunt elements).

As we will see shortly, the model

∂sinθ is now linear at the base-case solution, because = 1.

∂θ

θ =0

25 The power flow equality constraints express the relationship that complex power generated at bus ` is equal to voltage times (the complex conjugate of) current.

26 into a generic node, ` , as the sum of its real P

` and reactive Q

`

√

− 1 components.

28

The traditional interpretation of DC power flow emphasizes small angle approximation to sine and cosine ( i.e.

cos( θ i

− θ k

) ≈ 1 , sin( θ i

− θ k

) ≈ ( θ i

− θ k

)), and the solution of DC power flow being the same as the solution of an analogous DC circuit with current sources specified by the power injections and voltages specified by the angles (see Schweppe et al .(1988), Appendix D). While our presentation differs from the standard, the reader should be able to digest the traditional method once they have solved ours.

22

1.5.2

Calculating PTDFs

The term PTDF, or “shift factor,” means the change in the flow of power across a particular transmission line, `k , induced by an incremental increase in power output at a given node.

The alert reader will note that there exist N shift factor matrices, each one corresponding to the change in power flow across all lines in the system as the result of an increase in power generation at one of the system’s N nodes. Mathematically, we calculate a realpower shift factor as

∂ ˜

`k

∂P j

. We denote this matrix as L j

=

∂ ˜

`k

. This is the matrix

∂P

( m ) j of incremental line flows across all of a systems lines due to an injection of power at node j and withdrawal of that power at node m , for each of the systems other nodes. Power injected and withdrawn at the same node has PTDF

( j ) j

= 0, trivially.

We cannot take the desired partial derivative directly, because there is no explicit term for

P j

∂ ˜

`k

∂P j

=

∂ ˜

`k

∂θ i

∂θ i

∂P j

.

We obtain the second term, of course, by inverting the Jacobian corresponding to

∂P j

.

∂θ i

Differentiating these equations is now fairly straightforward. First, we shorthand equation

(1.31) to express net power flow from node

` as a function of phase angles and voltage injections, and the vector of net power flows as:

P

`

= p

`

θ

µ

,

P = p

θ

,

µ respectively. As alluded to earlier, there are ( n − 1) degrees of freedom in the power balance equations, because power balance indicates that one cannot specify the power balance equation at the n th node independently of the power balance in the rest of the system.

Therefore, we subtract a row and column of the power-balance Jacobian. It is customary to denote the node corresponding to the omitted row and column as the “reference” node.

As shown below, we calculate shift factors by treating the reference node as the node at which power is withdrawn. As is also customary, we denote the reference node as node 0.

29 the only drawback of this approach is that we have already denoted the ground node as node 0, and the ground node and the reference node are not the same thing. However, since we have assumed away the shunt elements, we are not examining the ground node any longer. Thus, there should be no further confusion regarding denoting the reference node as node 0

23

We will demonstrate the calculation of two different Jacobians for expositional clarity.

Denote the power-balance Jacobian (nodal Jacobian) for all of the system’s nodes (the “full” nodal Jacobian) as J pθ

, and the Jacobian for all the system’s nodes, excepting the reference

J pθ

, respectively. The term pθ indicates that we are taking the derivatives of real power flows with respect to phase angles. When solving for PTDFs, we will use the reduced nodal Jacobian because the power-balance equations have ( n − 1) degrees of freedom. Remembering that we are evaluating the linearized power balance equations, we write the reduced nodal Jacobian for our calculation of network shift factors as:

J

ˆ (0) pθ

J pθ

0

1

=

∂ ˆ

∂θ

0

1

(1.33)

(that is, we evaluate this Jacobian at a flat start).

Next we examine the Jacobian for the equations of real-power flow over the transmission lines (branch Jacobian): K ∈

R k × n − 1

. This matrix has k rows, one for each of the equations for the systems transmission lines (ignoring shunt elements). Again, because the system behaves differently when power is withdrawn at each of the system’s non-reference nodes, there are ( n − 1) of these matrices, one every node at which we may withdraw power when power is injected at a set node. We denote the branch Jacobian, evaluated at the base-case of a flat start, as:

K

(0)

= K

0

1

=

∂ p ˜

∂θ

0

1

(1.34)

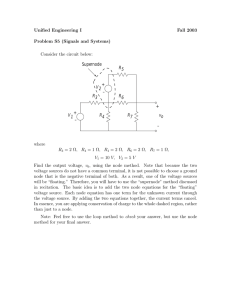

For expositional simplicity, let us now solve (1.33) and (1.34) and the resulting shift fac-

tors for a sample network before introducing the general notation corresponding to these equations. Consider the 5-node model shown below. The value shown between each node pair is the impedance of the transmission line connecting the two nodes. Let us choose node 1 as the reference node, so that we will replace the notation “1” with “0” whenever we refer to this node.

24

Node 4 0 + j 0 .

004 Node 2

0 + j 0 .

001

0 + j 0 .

001 0 + j 0 .

001 Node 0

0 + j 0 .

001

Node 5 0 + j 0 .

004 Node 3

Figure 1.10: A Sample Network

Each line, `k , has R

`k

= 0. That is, the real part of impedance ( i.e.

resistance) is zero for all lines. This corresponds to the assumption that the real component of conductance

( i.e.

the inverse of impedance) is zero as well. The complex component of impedance

( i.e.

reactance) is given by X

`k

− 1. Invert each lines impedance to derive its admittance, denoted Y

`k

:

Y

02

= Y

03

= Y

23

= Y

45

=

1

0 + j 0 .

001

= j 1 , 000 ,

Y

24

Note that

= Y

Y

`k

35

=

1

0 + j 0 .

004

= B

`k

√

− 1.

= j 250 .

For any node ` , we write sin( θ

`

− θ j

), for all nodes j connected to node ` by a transmission

line. We may thus express (1.31) for each of the five nodes in this example as:

P

0

= 1 , 000 sin( θ

0

− θ

2

) + 1 , 000 sin( θ

0

− θ

3

) ,

P

2

= 1 , 000 sin( θ

2

− θ

0

) + 1 , 000 sin( θ

2

− θ

3

) + 250 sin( θ

2

− θ

4

) ,

P

P

P

3

4

5

= 1 , 000 sin( θ

3

− θ

0

) + 1 , 000 sin( θ

3

− θ

2

) + 250 sin( θ

3

− θ

5

) ,

= 250 sin( θ

4

− θ

2

) + 1 , 000 sin( θ

4

− θ

5

) ,

= 250 sin( θ

5

− θ

3

) + 1 , 000 sin( θ

5

− θ

4

) .

Deriving the full nodal Jacobian for this example is straightforward, nonetheless, we show

25

explicitly the elements corresponding to the first row of this matrix:

J

(0)

11

=

J

(0)

12

=

J

(0)

13

=

∂P

0

∂θ

0 θ =0

∂P

0

∂θ

2 θ =0

∂P

0

∂θ

3 θ =0

= 1 , 000 cos( θ

0

− θ

2

) + 1 , 000 cos( θ

0

− θ

3

) = 2 , 000 ,

= 1 , 000 cos( θ

0

− θ

2

)( − 1) = − 1 , 000 ,

= 1 , 000 cos( θ

0

− θ

3

)( − 1) = − 1 , 000 .

Since neither θ

4 nor θ

5 are arguments of P

1

, their corresponding terms are obviously zero.

Taking the remaining partial derivatives yields the matrix:

2 , 000 − 1 , 000 − 1 , 000

0

0 0

− 1 , 000 2 , 250 − 1 , 000 − 250 0

− 1 , 000 − 1 , 000 2 , 250

0 − 250

0 − 250

0 1 , 250 − 1 , 000

.

0 − 250 − 1 , 000 1 , 250

A couple of characteristics of this matrix stand out immediately. First, notice the diagonal terms are equal to the sum of the off-diagonal elements times minus one. Recognizing that the off-diagonal term, J

(0)

`k as:

= − B

`k

, this allows us to shorthand the terms of this Jacobian

∂P

`

0

∂θ k

1

=

P

j ∈

J ( ` )

− B

`k

0

B

`j if k = `, if k ∈

J

( ` ) otherwise.

,

Second, notice that the full nodal Jacobian is symmetric (this fact makes calculation of the full AC model less computationally expensive).

As mentioned above, we omit the power flow equality constraint and partial derivatives corresponding to the base node in order to derive the reduced nodal Jacobian. Thus, the reduced system of equations is:

P

2

P

3

= 1 , 000 sin( θ

2

− θ

0

) + 1 , 000 sin( θ

2

− θ

3

) + 250 sin( θ

2

− θ

4

) ,

= 1 , 000 sin( θ

3

− θ

0

) + 1 , 000 sin( θ

3

− θ

2

P

4

= 250 sin( θ

4

− θ

2

) + 1 , 000 sin( θ

4

− θ

5

)

P

5

= 250 sin( θ

5

− θ

3

) + 1 , 000 sin( θ

5

− θ

4

) .

,

) + 250 sin( θ

3

− θ

5

) ,

32 We could really use some different subscript/superscript/parenthetical notation for the entries in this

Jabobian, but what we have will have to do for now.

26

We take the derivatives of these equations with respect to θ

2

, θ

3

, θ

4 and θ

5

, obtaining:

J

ˆ (0)

=

2 , 250 − 1 , 000 − 250 0

− 1 , 000 2 , 250

− 250

0 − 250

0 1 , 250 − 1 , 000

.

0 − 250 − 1 , 000 1 , 250 with inverse:

J

ˆ (0)

− 1

=

0 .

000655 0 .

000345 0 .

000517 0 .

000483

0 .

000345 0 .

000655 0 .

000483 0 .

000517

0 .

000517 0 .

000483 0 .

002724 0 .

002276

.

0 .

000483 0 .

000517 0 .

002276 0 .

002724

Notice that we do not remove the terms in power flow equality constraints P

2

— P

5 corresponding to the base node, because these terms capture the physical characteristics of the network ( i.e.

the transmission lines between the node in question and the base node).

To calculate the branch Jacobian, K , remember that this matrix is of dimension k × ( n − 1).

Accordingly, we will take derivatives of flows on the k = 6 transmission lines with respect to the N − 1 nodes at which power is injected.

Before taking these derivatives, however, we must determine the (reference) direction of power flow across all k lines. That is, we know that the equation for power flow across a p

`k

= B

`k sin( θ

`

− θ k

), where this equation indicates that power flows from node ` to node k . We just have to figure out whether, for any given node pair, power flows from node k to ` , or vice-versa. There is no one right answer to this question, though, because the direction of power flow will change whenever one changes the node at which power flows into the network ( e.g.

, the direction of flow across line 45 will be different if one injects power at node 2, versus injecting it at node 3).

Fortunately, this observation leads us to the correct method for assigning power flow direction. We simply designate both the node at which power is withdrawn and the node at which power is injected. Since we withdraw power at the reference node, we need only choose an additional, arbitrary, node at which to inject power. From here, we assign power flows such that no physical laws (First Law of Thermodynamics, Kirchhoffs laws) are violated. So, let us arbitrarily designate node 2 as the “injection” node. Having specified the injection node, one may follow two shortcuts to power flow direction:

1. Power flows to the reference node along the lines directly connected to it;

2. Power flows away from the injection node along the lines directly

33 These rules of thumb no longer hold with multiple points of injection and withdrawl, but that observation is not relevant to the present analysis.

27

Following these two shortcuts, we may assign directional power flows as follows:

Node 4 Node 2

Node 0

Node 5 Node 3

Figure 1.11: Directional Power Flows

The first observation tells us that power flows from nodes 2 and 3 to node 0. Load at node 0 draws power along lines 20 and 30. The second observation tells us that power flows away from node 2, to nodes 0, 3, and 4. All that remains is to determine directional flows along the lines connected to node 5. Kirchhoff’s Current Law tells us that the sum of currents entering a node equals the sum of currents exiting that node. Since no current

(or the power corresponding to it) is being withdrawn at node 4, all of the power entering node 4 will flow to node 5, indicating the direction of flow on this line. The same reasoning applies at node 5, so power flows from node 5 to node 3. Note that KCL also tells us that the current flowing from node 3 to node 0 is equal to the sum of currents flowing from nodes 2 and 5 to node 3.

Using the directional power flows indicated above, we write the six equations for power flow as:

˜

20

= 1 , 000( θ

2

− θ

0

) ,

˜

30

= 1 , 000( θ

3

− θ

0

) ,

˜

23

= 1 , 000( θ

2

− θ

3

) , p

24

= 250( θ

2

− θ

4

) , p p

53

45

= 250( θ

5

− θ

3

) ,

= 1 , 000( θ

4

− θ

5

) .

28

Taking the required derivatives across all equations yields:

K

0

1

=

1 , 000 0 0 0

0 1 , 000 0 0

250

0 −

0 − 250

250

0

0 250

.

0 0 1 , 000 1 , 000

We shorthand this matrix as:

K

( ij ) `

=

(

B ij if ` = i,

− B ij if ` = j.

Multiplying the two matrices yields the shift factor matrix ( H ) below:

P

2

P

3

P

4

P

5

H

0

1

= line 20 line 30 line 31 line 24 line 35 line 45

0 .

6552 0 .

3448 0 .

5172 0 .

4828

0 .

3448 0 .

6552 0 .

4828 0 .

5127

0 .

3103 − 0 .

3103 0 .

0345 − 0 .

0345

0 .

0345 − 0 .

0345 − 0 .

5517 − 0 .

4483

0 .

0345 − 0 .

0345 0 .

4483 0 .

5517

.

0 .

0345 − 0 .

0345 0 .

4483 − 0 .

4483

To interpret this matrix, observe that column values indicate the source of the power, that is, the node at which power is generated (as well at the phase angle corresponding to this

The row value corresponds to the line over which this power flows. Therefore, the columns of the matrix yield the PTDFs for every line in the network when power is injected at the node corresponding to that column and withdrawn at the reference node.

Reading down the first column, then, 65.52 percent of the power generated at node 2 flows directly to node 0. The remaining 34.48 percent flows in a more circuitous fashion along the networks other lines. 31.03 percent flows from node 2 through node 3 to node 0, while

3.45 percent flows around the horn, from node 2 to node 4 to node 5 to node 3 and on to node 1. As mentioned above, the amount exiting node 3, 34.48 percent of the increment of power generated, is equal to the sum of the flows entering that node: 31.03 percent, plus

3.45 percent.

34 Even though we assume that no power is generated at nodes 1-3, we may, in principle, calculate shift factors for any node in a transmission system.

29

1.A

Power Equation Derivation

When current and voltage are in phase, an electrical system is producing its maximum efficiency of (real) power output. A graphical analysis is illustrative. Consider first relationship between power, current, and voltage for a purely resistive system.

P, I, E

Instantaneous Voltage

Instantaneous Current

Instantaneous Power time

Figure 1A.1: Current, Voltage, and Power in a Purely Resistive System

As shown in Figure 1A.1, above, when current and voltage are in phase, power is strictly nonnegative because voltage is never positive when current is negative, and vice-versa.

As voltage and current move out of phase, though, this synchronicity vanishes. Consider the inductor example from Figure 1A.2, with instantaneous power added to instantaneous current and voltage.

P, I, E

Instantaneous Voltage

Instantaneous Current

Instantaneous Power time

Figure 1A.2: Current, Voltage, and Power in a Purely Inductive System

Since voltage and current are 90

◦ out of phase for a purely inductive current, half of the time voltage and current will be of the same sign, while they will be of opposite sign the other half of the time. This causes power in this circuit to alternate in cycles of positive and negative values. Positive power means that load in the system is receiving (absorbing)

30

power from the generation source(s), while negative power means that power is actually being returned to the generation source. This means that reactive components (inductors and capacitors) dissipate zero power, as they equally absorb power from, and return power

Adding capacitance into the picture, of course, means that the circuit’s phase angle will decrease, moving voltage and current closer into phase and allowing for positive power output, as shown graphically in Figure 1A.3 below:

P, I, E Instantaneous Voltage

Instantaneous Current

Instantaneous Power time

Figure 1A.3: Current, Voltage, and Power in a Resistive/Reactive Circuit

In a circuit characterized by both resistance and reactance, voltage and current are out of phase by less than | 90

◦

| , and power cycles between positive and negative values, but net power output is positive.

When calculating power in circuits with reactive and resistive elements, we apply Ohm’s law. Once again denoting reactance by X , resistance by R , impedance by Z , current by I , and voltage by E , we have the following three expressions for Ohms law:

E = I × R (circuit with resistive elements only) ,

E = I × X (circuit with reactive elements only) ,

E = I × Z (circuit with resistive and reactive elements).

Note that the basic relationship, voltage equals current times resistance, is unchanged. The point is simply that the nature of resistance varies with the different resistance sources.

Since the basic relationship for power is unchanged as well, power for resistive, reactive,

35

For further reading, see Rau(2003), Appendix A, and Mittle and Mittal, Ch. 6 - 8.

31

and resistive plus reactive circuits is, respectively:

P = | I |

2

× R,

Q = | I |

2

× X,

S = | I |

2

× Z, where:

• P is real power, measured in Watts,

• Q is reactive power, measured in volt-amperes reactive (VAR), and

• S is apparent power, measured in volt-amperes (VA).

(1A.1)

Just as we expressed the relationship between resistance, reactance, and impedance with a right triangle, we express the relationships between different power elements with the power triangle . Consider a circuit containing a 60 volt power source, a capacitor rated at

30 farads, and a 20Ω resistor. In order to calculate P , Q , and S for this circuit, we must calculate the current flowing through it. Impedance is simply:

Z = p

R 2 + X 2 = 36 .

06Ω .

Therefore, current is:

E

I =

Z

Real Power is:

= 1 .

66 amps .

P = I

2

R = 55 .

1 watts , reactive power is:

Q = I

2

X = 82 .

7 VAR , and complex power is:

S = I

2

Z = 99 .

4VA .

The power triangle is shown graphically as:

S

Q

P

Figure 1A.4: The Power Triangle

32

It is straightforward to verify that P/S = R/Z , and so one may derive the phase angle either through either power or impedance manipulations. The former expression is commonly known as the power factor . The ability to reduce a systems phase angle is known as reactive power supply . Only generators with this ability may compete in the reactive power market.

Like we calculated instantaneous power for purely resistive circuits in (1A.1), we may also calculate instantaneous power for a purely inductive circuit, a purely capacitive circuit, and a combined resistive-reactive circuit. For a purely inductive load, current lags voltage by 90

◦

. Therefore, we have: i ( t ) = I max cos( θ − 90) , v ( t ) = V max cos θ.

Instantaneous power is then: p ( t ) = V max

I max cos θ cos( θ − 90) ,

= V max

I max cos θ sin θ,

= | V || I | sin(2 θ ) .

Integrating over [0 , 2 π ], we find that instantaneous power for a purely inductive circuit does indeed have an average value of zero, as argued above. One may also show that average power for a purely capacitive circuit is zero, as found by integrating −| V || I | sin(2 θ ). Finally, for mixed resistive/reactive circuits, we may write: p ( t ) = V max

I max cos θ cos( θ − δ ) ,

=

V max

I max

[cos

2

2

θ cos δ − sin

2

θ cos θ + cos δ + 2 sin θ cos θ sin δ ] .

The first three terms inside the parentheses reduce to (cos θ )(1 + cos 2 θ ), while the fourth reduces to (sin θ )( sin 2 θ ). Thus, we may express instantaneous power in a single-phase AC circuit as: s ( t ) =

V max

I max

(cos δ )(1 + cos 2 θ ) +

2

V max

2

I max

(sin δ )(sin 2 δ

Alternatively, since | V | = V max

/

√

2 and | I | = I max

/

√

2, we have

) .

s ( t ) = | V || I | (cos δ )(1 + cos 2 θ ) + | V || I | (sin δ )(sin 2 θ ) .

(1A.2)

The first term is once again real power, while the second term is reactive power. Note that when the phase angle is equal to zero, reactive power is equal to zero, as expected. These

33

equations give us an alternative method for calculating average real, reactive, and complex power in AC circuits. We calculate average real power as:

1

2 π

Z

2 π

| V || I | (cos δ )(1 + cos 2 θ )d θ = | V || I | cos δ.

0

(1A.3)

By inspection, (1A.3) is at a maximum when δ = 0. Notice that since k

R

2 θ

0 the average value of reactive power from (1A.2) is always zero.

sin 2 θ d θ = 0 ,

We will also find it useful to express power in terms of the systems admittance matrix. To do this, we note that complex power injected into the network at bus ` is given by:

S

`

= V

`

I

`

∗

.

(1A.4)

Post-multiplying the admittance matrix, given by Eq. (36), by the voltage vector V once again yields AX = I , with individual elements:

I

`

= A

``

V

`

X k ∈

J ( ` )

A

`k

V k

.

(1A.5)

Substituting (1A.5) into (1A.4) yields

S

`

= V

`

"

A

``

V

`

+

X k ∈

J ( ` )

A k

V k

#

∗

= | V

`

|

2

A

∗

``

+

X k ∈

J ( ` )

A

∗

`k

V

`

V k

.

We may break down complex power into real and reactive power, starting by dividing the admittance terms into their real and imaginary components. Let us start with the expression for admittance as the inverse of impedance. That is, given Z

`k

= R

`k

+ jX

`k

,

Y

`k

=

1

Z

`k

=

1

R

`k

+ jX

`k

=

1

R

`k

+ jX

`k

×

R

`k

− jX

`k

R

`k

− jX

`k

=

R

`k

( R

`k

) 2

− jX

`k

+ ( X

`k

) 2

.

(1A.6)

Using Eq. (1A.6), we define the real and imaginary parts of resistance, G

`k respectively, as follows: and B

`k

,

G

`k

=

B

`k

=

( R

`k

) 2

R

`k

+ ( X

`k

) 2

( R

`k

) 2

X

`k

+ ( X

`k

) 2

.

Therefore,

Y

`k

= G

`k

− jB

`k

.

(1A.7)

34

When writing the equations for complex power, the notation V max gets cumbersome. So at this point we replace V max with u

We employ Eulers identity to write complex voltage at node ` as:

V

`

= u

` e jθ

` , and complex power as:

P

`

+ jQ

`

=

=

X u

` u k

( G

`k

− jB

`k

) e j ( θ

`

− θ k k ∈

J

( ` ) ∪ `

X u

` u k

G

`k cos( θ

`

− θ k

) + B

`k sin θ

`

− θ k

) k ∈

J

( ` ) ∪ `

+ ju

` u k

G

`k sin( θ

`

− θ k

) − B

`k cos θ

`

− θ k

) , where:

P

`

=

Q

`

=

X u

` u k

G

`k cos( θ

`

− θ k

) + B

`k sin θ

`

− θ k

) , k ∈

J

( ` ) ∪ `

X u

` u k k ∈

J

( ` ) ∪ `

G

`k sin( θ

`

− θ k

) − B

`k cos θ

`

− θ k

) .

(1A.8)

(1A.9)

(1A.8) and (1A.9) are known as power flow equality constraints, and must be satisfied at each bus ` of the power system. Note that the lhs of this equation represents net power flow out of node ` . If real/reactive power generation at this node is greater than real/reactive power consumption , then P

`

, Q

`

> 0, respectively. The rhs of the equation represents the fact that this net power flow travels across the transmission network, from the given node, ` , to the nodes with which it is connected k ∈

J

( ` ). Summing up all of these positive

(indicating net power flow from node ` to node k) and negative (vice-versa) power flows, we arrive at the net real/reactive power flow out of node ` . Finally, the KCL implies that net power flow out of node ` is equal to the sum of the power flows along each line connected to that node, so we write the power balance constraints as: p

`

( x ) = q

`

( x ) =

X u

` u k

G

`k cos( θ

`

− θ k

) + B

`k sin θ

`

− θ k

) − P

`

= 0 , k ∈

J

( ` ) ∪ `

X u

` u k k ∈

J

( ` ) ∪ `

G

`k sin( θ

`

− θ k

) − B

`k cos θ

`

− θ k

) − Q

`

= 0 .

(1A.10)

(1A.11)

36

We use alternate notations because electrical engineering texts do, and we wish to expose the reader to these stylistic differences. Our exposition is closest to that of Baldick (2006, Ch. 6.2).

35

We also derive the formulas for power flow across a given line `k , connecting buses ` and k, in the direction of ` to k. For a simple circuit with no shunt elements, the flow of power across line `k would be equal to: u

` u k

G

`k cos( θ

`

− θ k

) + B

`k sin( θ

`

− θ k

)

This equation simply refers to the drop in voltage (and thus power flow) moving across nodes in a series circuit. Once we take explicit account of the elements connecting generation to the transmission system (the shunt elements), we have to take into the consideration the power sourcing at these elements. We derive this element of the power flow equation by setting k = ` in equations (1A.10) and (1A.10). Doing so yields the quantities: u

` u k

G

`` cos( θ

`

− θ k

) + B

`` sin( θ

`

− θ k

) = ( u

`

)

2

G

``

, and, in the same manner, − ( u

`

)

2

B

``

.

Therefore, denoting real power flow across line `k in the direction of k as ˜

`k

(and doing so analogously for reactive power flow), we have:

˜

`k

= ( u

`

)

2

G

``

+ u

` u k

G

`k cos( θ

`

− θ k

) + B

`k sin( θ

`

− θ k

) , q

`k

= − ( u

`

)

2

B

``

+ u

` u k

G

`k sin( θ

`

− θ k

) − B

`k cos( θ

`

− θ k

) .

(1A.12)

(1A.13)

Likewise, we may rewrite (1A.10) and (1A.11) as:

P

`

= u

2

`

G

``

+

X u k ∈

J

( ` )

X

Q

`

= − u

2

`

B

``

+

` k ∈

J

( ` ) u k u

`

[ G

`k u k

[ G cos( θ

`

− θ k

) + B

`k sin( θ

`

`k sin( θ

`

− θ k

) − B

`k cos(

− θ k

)] ,

θ

`

− θ k

)] .

(1A.14)

(1A.15)

36

1.B

Admittance Matrix for the DC Approximation to the

AC Circuit

For convenience, we return to the admittance matrix for the ladder circuit from (1.25).

For clarity, however, we now denote the admittances with double subscript notation Y

`k

, to denote the admittance of the line connecting nodes ` and k (e.g., Y a now becomes Y

01

, because line a connects nodes 0 and 1). We may thus express the admittance matrix from

Y

02

+ Y

12

− Y

12

−

0

Y

12

0

Y

12

+ Y

02

+ Y

23

− Y

23

− Y

23

Y

23

+ Y

30

0 0 − Y

34

+ Y

34

− jB

jB

12

0

0

12

0

0

− Y

34

Y

34

+ Y

40

,

From (1A.7) we have Y

`k