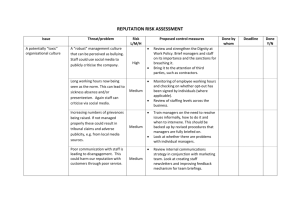

The adequacy of the current sanctions and

advertisement