The ARAR Error Model for Univariate Time Series and Distributed

advertisement

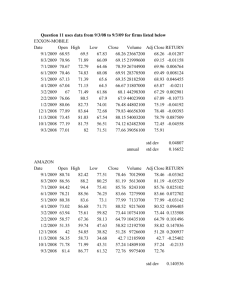

The ARAR Error Model for Univariate Time Series and Distributed Lag Models

R. A. L. Carter, University of Western Ontario and University of Calgary
A. Zellner, University of Chicago

Abstract

We show that the use of prior information derived from former empirical findings and/or subject matter theory regarding the lag structure of the observable variables, together with an AR process for the error terms, can produce univariate and single equation models that are intuitively appealing, simple to implement and work well in practice.

Key words: time series analysis, model formulation.
JEL Classification: C11, C22.

1 Introduction

“None of the previous work should be construed as a demonstration of the inevitability of MA disturbances in econometric models. As Parzen’s proverb[1] reminds us the disturbance term is essentially man-made, and it is up to man to decide if some of his creations are more reasonable than others.” Nicholls, Pagan and Terrell (1975) p.117.

Much theoretical and empirical work in economics features low order autoregressions in endogenous variables, often accompanied by exogenous variables. For example, Caplin and Leahy (1999) and Sargent (1987, Chapter IX) present AR(1) models. AR(2) models for cycles appear in Samuelson (1939a,b), Burns and Mitchell (1946), Goodwin (1947), Cooper and Johri (1999) and Sargent (1987, Chapter IX). Papers by Metzler (1941), Garcia-Ferrer et al. (1987), Geweke (1988), Zellner and Hong (1989), Zellner, Hong and Min (1991) and Zellner and Chen (2001) feature AR(3) models. Gandolfo (1996) provides many examples of models which can be expressed as autoregressions plus exogenous variables, including cobweb models, foreign trade and taxation multipliers, multiplier-accelerator models, market adjustment models and inventory models.

[1] “God made X (the data), man made all the rest (especially, the error term).” Quoted in Nicholls et al. (1975). We add that in many instances humans, not God, construct the data.
Thus, economists building time series models often have quite strong prior beliefs, based on theoretical and applied work, about the lag structure for an observable variable. However, they usually have quite weak prior beliefs about the lag structure for the error in a model. The AR model for the error was popular until the methods of Box and Jenkins (1976) became dominant in univariate time series analysis and introduced the MA model without much theoretical justification. This produced complicated likelihood functions and estimation procedures and implied infinite AR processes for observed variables. We show here how the use of prior information about the lag structure of the observable variables and a return to the AR model for the error is intuitively appealing, yields finite AR processes for observed variables and simplifies inference procedures.

2 Univariate Models

A reasonable starting point for an empirical analysis is to specify that the random variable of interest has been generated by an autoregressive process that reflects prior beliefs about the lag structure. This gives

$$\phi(L)(Y_t - \mu) = U_t, \tag{2.0.1}$$

where φ(L) is a polynomial of degree p in the lag operator L, µ is the origin from which Y_t is measured (the mean if Y_t is stationary) and U_t is a covariance-stationary error with zero mean. Researchers may have quite strong beliefs about the value of p and any restrictions on φ(L) necessary to make Y_t stationary. In such cases the parameters of interest are the coefficients of φ(L), φ_i, or some function of them, such as the roots of φ(L). However, it is rare for researchers to have such strong prior beliefs about the error process and thus a variety of models for U_t may be entertained: see e.g. Fuller and Martin (1961), Zellner et al. (1965) and Zellner and Geisel (1970). One possible model for the error is

$$U_t = \varepsilon_t, \tag{2.0.2}$$

where ε_t is white noise with zero mean and variance σ².
But this rather restrictive model implies that a shock to the subject-matter portion of the model, φ(L)(Y_t − µ), has an impact in only the current period. Of course, if φ(L) is invertible the MA representation of Y_t,

$$Y_t = \mu + \frac{1}{\phi(L)}\varepsilon_t, \tag{2.0.3}$$

has infinite length.

2.1 The ARMA model

A popular alternative model for U_t is the MA model of Box and Jenkins (1976),

$$U_t = \theta(L)\varepsilon_t, \tag{2.1.1}$$

where θ(L) is an invertible polynomial of degree q in L. This model was introduced “To achieve greater flexibility in fitting actual time series, . . . ”[2] rather than from any explicit prior knowledge about the behavior of the error. But this model is only slightly less restrictive than the white noise model because it implies that the effect on φ(L)(Y_t − µ) of a shock ε_t dies out completely after q periods. Also, strong restrictions on the values of the autocorrelations of Y_t are needed for θ(L) to be invertible. Now the infinite MA representation

$$Y_t = \mu + \frac{\theta(L)}{\phi(L)}\varepsilon_t \tag{2.1.2}$$

allows somewhat richer behavior than does the white noise case. However, the AR representation,

$$\frac{\phi(L)}{\theta(L)}(Y_t - \mu) = \varepsilon_t, \tag{2.1.3}$$

is also infinite, which may be undesirable from a subject matter viewpoint and which complicates inference about the φ_i.

Of course, an MA model for U_t is desirable on a priori grounds if the observations on Y_t were the result of temporal aggregation or if the model features unobserved states. However, in many cases an MA model for U_t arises from an examination of sample autocorrelations and is not justified by any prior theory. In these cases the model described in the next section is worthy of consideration.

2.2 The ARAR model

We propose an alternative, AR model for U_t which is parsimonious, allows rich time series behavior and simplifies inference procedures:

$$\omega(L)U_t = \varepsilon_t, \tag{2.2.1}$$

where ω(L) is a polynomial of degree r in L. Assuming ω(L) is invertible, the infinite MA representation of U_t is

$$U_t = \frac{1}{\omega(L)}\varepsilon_t. \tag{2.2.2}$$

Substituting (2.2.2) into (2.0.1) gives the “structural form” of the model, which we label[3] ARAR(p,r), as

$$\phi(L)(Y_t - \mu) = U_t = \frac{1}{\omega(L)}\varepsilon_t. \tag{2.2.3}$$

In (2.2.3) the impact on φ(L)(Y_t − µ) of a shock ε_t may die out very slowly, rather than being cut off abruptly, so our model can produce rich behavior in U_t with values of r as small as two. Also, (2.2.2) is consistent with Wold’s (1938) Decomposition Theorem, in contrast to MA models for U_t which impose a truncation[4] on the Wold representation of U_t. If Y_t is covariance-stationary, then the MA representations of all the above models for Y_t are infinite and, therefore, consistent with Wold’s theorem. However, (2.2.2) has the advantage of being the simplest model that also imposes this consistency on U_t.

The “reduced form” of the model is, from (2.0.1) and (2.2.2),

$$\omega(L)\phi(L)(Y_t - \mu) = \varepsilon_t, \tag{2.2.4}$$

or

$$\alpha(L)Y_t = \alpha_0 + \varepsilon_t, \tag{2.2.5}$$

where α(L) = ω(L)φ(L) is a polynomial in L of degree p + r with restricted coefficients and α_0 = ω(1)φ(1)µ. If φ(L) and ω(L) are invertible the MA representation of Y_t is

$$Y_t = \mu + \frac{1}{\omega(L)\phi(L)}\varepsilon_t. \tag{2.2.6}$$

The restrictions that the ARAR model imposes on the coefficients of α(L) will affect the rate at which the impulse response coefficients in (2.2.6) decline, but that decline will be more rapid than in models with fractional differencing. In contrast to (2.1.3), both (2.0.1) and (2.2.3) are finite AR processes.

[2] Box and Jenkins (1976) page 11.

[3] Parzen (1982) introduces a model with a similar label. His ARARMA model consists of two filters applied sequentially. The first filter is a nonstationary AR with a stationary output which is input to a second ARMA filter with a white noise output. However, his model does not partition the second filter into a portion containing prior information about the dynamics of Y_t and a portion modeling the error dynamics.
Thus the ARAR model is simpler than the ARMA model and it should be assigned higher prior probability according to the “Simplicity Postulate” of Wrinch and Jeffreys (1921). Also, few scientific theories are framed as infinite AR processes, but many are in the form of finite difference or differential equations. Finally, the form of (2.2.4) makes it simpler to estimate than either (2.1.2) or (2.1.3).

To see the parsimony of (2.2.2) as compared to (2.1.1), consider an example in which ω(L) = 1 − ω_1 L − ω_2 L² = (1 − ξ_1 L)(1 − ξ_2 L) with ξ_1 = .7 and ξ_2 = −.5. Then the coefficient of ε_{t−5} in (2.2.2) is .111 so it would take an MA process with at least five parameters to approximate the inverse of the AR with two parameters. Next assume the roots are complex conjugate with ξ_1 = .7 + .5i and ξ_2 = .7 − .5i. Now the coefficient of ε_{t−5} is .242 so, again, an approximating MA would have to have at least five parameters, compared to two in ω(L).

The only behavior that is allowed by the ARMA model but ruled out by the ARAR model is an infinite AR in Y_t; although if p and r are high (2.2.4) will be a very long AR. Seasonal effects are modeled by expressing φ(L) or ω(L) as products of nonseasonal and seasonal polynomials. Because some prior information about φ(L) is assumed to be available, the polynomials φ(L) and ω(L) can be analyzed separately.

[4] Of course, it is possible for the Wold decomposition to yield a finite MA form in some cases. However, we regard a model which requires a finite MA form for every case to be less desirable.

Some of the coefficients of α(L) may be quite small in absolute value, even though no φ_i or ω_i is small. This can result in small and imprecise estimates of these α_i if the restrictions implied by the ARAR structure are ignored, tempting researchers to impose invalid zero restrictions on α(L). As we show below, direct estimation of φ and ω is easy so there is no need to focus solely on (2.2.5).
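The point about small reduced-form coefficients can be illustrated with a short sketch. It multiplies φ(L) and ω(L) to obtain α(L) and α_0 as in (2.2.4) and (2.2.5). The function names and the numerical values (φ = 0.5, ω = −0.5, µ = 10) are hypothetical choices for illustration, not taken from the paper.

```python
import numpy as np

def lag_poly(coefs):
    """Coefficients of 1 - c1*L - c2*L^2 - ... in increasing powers of L."""
    return np.concatenate(([1.0], -np.asarray(coefs, dtype=float)))

def reduced_form(phi, omega, mu=0.0):
    """Return (alpha0, [alpha1, ..., alpha_{p+r}]) for alpha(L) = omega(L) * phi(L)."""
    a = np.convolve(lag_poly(omega), lag_poly(phi))  # polynomial product
    alpha0 = a.sum() * mu        # alpha(1) * mu = omega(1) * phi(1) * mu
    return alpha0, -a[1:]        # sign flip because alpha(L) = 1 - alpha1*L - ...

# Hypothetical ARAR(1,1): neither phi nor omega is small, yet alpha1 = phi + omega = 0.
alpha0, alpha = reduced_form(phi=[0.5], omega=[-0.5], mu=10.0)
print(alpha0, alpha)
```

In this sketch α_1 vanishes although both structural coefficients are sizeable, which is the situation in which an unrestricted AR fit might tempt a researcher to drop the first lag.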
Before considering inferences about φ(L) and ω(L) we note that, without further information, they are not identified. To see this, interchange φ(L) and ω(L) in (2.2.3), which would yield the same unrestricted AR(p + r) as (2.2.5) so the likelihood function based on (2.2.5) would be unchanged. Also, multiplying both sides of (2.2.3) by a nonzero scalar υ_0 would leave (2.2.5) unchanged but it would result in υ_0 φ(L) = υ_0 − υ_0 φ_1 L − . . . − υ_0 φ_p L^p. We avoid this identification problem by adopting the common assumption that the model has been normalized to have the first term in φ(L) equal to 1.0. Identification failure also arises if both sides of (2.2.3) are multiplied by the invertible lag polynomial υ(L) to give

$$\upsilon(L)\phi(L)Y_t = \upsilon(1)\phi(1)\mu + \frac{\upsilon(L)}{\omega(L)}\varepsilon_t, \tag{2.2.7}$$

leaving (2.2.5) and the likelihood function unchanged. This is analogous to model multiplicity in ARMA models containing the products of seasonal and nonseasonal lag polynomials. Following Box and Jenkins (1976), we assume that all common factor polynomials like υ(L) have been canceled out of the model.

Even after normalization and common factor cancellations, an identification problem remains. Write φ(L) and ω(L) in terms of their roots as

$$\phi(L) = (1 - \lambda_1 L)(1 - \lambda_2 L) \cdots (1 - \lambda_p L) \tag{2.2.8}$$

and

$$\omega(L) = (1 - \xi_1 L)(1 - \xi_2 L) \cdots (1 - \xi_r L). \tag{2.2.9}$$

Thus

$$\alpha(L) = \phi(L)\omega(L) = (1 - \lambda_1 L) \cdots (1 - \lambda_p L)(1 - \xi_1 L) \cdots (1 - \xi_r L). \tag{2.2.10}$$

Now assume the λ_i and ξ_i are unknown. Then write

$$\alpha(L) = (1 - \eta_1 L)(1 - \eta_2 L) \cdots (1 - \eta_{p+r} L) \tag{2.2.11}$$

and assume we know the values of the roots η_i, i = 1, 2, . . . , p + r. The identification problem lies in deciding which terms (1 − η_i L) are part of φ(L) and which are part of ω(L). The solution is to use the same prior information that was used to specify φ(L) to make this decision. In many cases φ(L) will be specified to be of degree two giving a damped cycle with a period in some a priori most probable range.
Thus the roots η_i belonging to φ(L) must be a complex conjugate pair with modulus less than one and a period lying in the most probable range. Any roots η_i which are real or complex conjugate with a smaller period must belong to ω(L).

In practice the values of the η_i are unknown. But once we specify p and r we can fit an AR(p + r) by ordinary least squares (OLS) and find the roots of the resulting α̂(L). They can be used to find initial values of the φ̂_i and ω̂_i for use in nonlinear least squares (NLS) estimation of ω(L)φ(L). This idea can even be used on a long AR model produced by someone else. Using their estimated AR coefficients we can obtain estimated roots, moduli and periods to help us judge whether their model is really the reduced form of an ARAR model. Alternatively, a priori plausible initial values of φ_i and ω_i can be used in NLS to obtain estimates ω̂(L) and φ̂(L). Then their roots can be compared to those of α̂(L) from (2.2.5) as a check that the specification is correct.

After ruling out underidentified models, the total number of free parameters in φ(L) and ω(L) may be equal to the number of free parameters in α(L). Such models are just identified so, under normality, ML estimates of the φ_i and ω_i can be derived from OLS estimates of the α_i. Alternatively, if NLS estimates of the φ_i and ω_i are used to derive estimates of the α_i they should equal the OLS estimates. However, if the number of parameters in α(L) is greater than that in φ(L) and ω(L) (e.g. if some of the φ_i or ω_i are specified to be zero without reducing the value of p or r) the model is overidentified so it is not generally possible to solve for unique estimates of the φ_i and ω_i from OLS estimates of the α_i. In such cases it will be necessary to estimate the φ_i and ω_i directly by NLS.
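The root-based assignment described above can be sketched as follows. Given AR coefficients α_1, ..., α_{p+r}, the code finds the roots η_i of (2.2.11), assigns complex roots whose period lies in a prior range to φ(L), and assigns everything else to ω(L). The function names, the tolerance values and the numerical example are hypothetical, and the rule is only one possible reading of the prior information; the resulting values are meant as starting values for NLS, not final estimates.

```python
import numpy as np

def inverse_roots(alpha):
    """Roots eta_i of alpha(L) = prod(1 - eta_i L), given [alpha1, ..., alpha_k]."""
    # The eta_i are the roots of z^k - alpha1*z^(k-1) - ... - alpha_k.
    return np.roots(np.concatenate(([1.0], -np.asarray(alpha, dtype=float))))

def period(eta):
    """Cycle length 2*pi/angle implied by a root; infinite for a positive real root."""
    angle = abs(np.angle(eta))
    return np.inf if angle < 1e-12 else 2 * np.pi / angle

def split_roots(alpha, min_period, max_period):
    """Complex roots with period in [min_period, max_period] go to phi(L), the rest to omega(L)."""
    eta = inverse_roots(alpha)
    in_phi = np.array([abs(e.imag) > 1e-8 and min_period <= period(e) <= max_period
                       for e in eta])
    # np.poly rebuilds z^m + c1*z^(m-1) + ...; drop the leading 1 and flip signs.
    phi = -np.real(np.atleast_1d(np.poly(eta[in_phi])))[1:]
    omega = -np.real(np.atleast_1d(np.poly(eta[~in_phi])))[1:]
    return phi, omega

# Hypothetical ARAR(2,2): phi(L) has roots .8 +/- .4i (period about 13.6), omega(L) has real roots.
phi_true, omega_true = [1.6, -0.8], [0.2, 0.15]
a = np.convolve(np.r_[1.0, -np.array(phi_true)], np.r_[1.0, -np.array(omega_true)])
phi_hat, omega_hat = split_roots(-a[1:], min_period=12, max_period=24)
print(phi_hat, omega_hat)
```

In applied work the input would be OLS estimates of the α_i rather than exact coefficients, so the recovered values would carry sampling error.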
An interesting simple case is obtained by assuming that p and r are both one so that (2.2.4) becomes

$$\omega(L)\phi(L)(Y_t - \mu) = (1 - \omega L)(1 - \phi L)(Y_t - \mu) = (1 - [\omega + \phi]L + \omega\phi L^2)Y_t - (1 - \omega)(1 - \phi)\mu = \varepsilon_t, \tag{2.2.12}$$

from which

$$Y_t = (1 - \omega)(1 - \phi)\mu + (\omega + \phi)Y_{t-1} - \phi\omega Y_{t-2} + \varepsilon_t \tag{2.2.13}$$

$$\phantom{Y_t} = \alpha_0 + \alpha_1 Y_{t-1} + \alpha_2 Y_{t-2} + \varepsilon_t. \tag{2.2.14}$$

We believe this ARAR(1,1) model is a useful alternative to the popular ARMA(1,1) model with the same number of parameters. Imposing stationarity and invertibility on the ARMA(1,1) model results in very strong restrictions on the range of admissible values for the autocorrelations of Y_t at lags 1 and 2, ρ_1 and ρ_2: see Box and Jenkins (1976), Figure 3.10(b). The analogous restrictions on the ARAR(1,1) model result in restrictions on ρ_1 and ρ_2 which are much weaker. Assuming that −1 < φ < 1 and −1 < ω < 1 results in the feasible set of α_1 and α_2 values being a subset of that for an unrestricted AR(2) which excludes the subset associated with complex roots. This is shown in Figure 1, which should be compared with Box and Jenkins (1976), Figure 3.9. These restrictions also restrict the ρ_1, ρ_2 space to a subset of that for an unrestricted AR(2). This space is shown in Figure 2a, which should be compared with Box and Jenkins (1976) Figures 3.3(b) and, especially, 3.10(b), which is reproduced below as Figure 2b. The admissible parameter space shown in Figure 2a is considerably larger than that shown in Figure 2b and negative values of ρ_2 are excluded. This last point should aid in model formulation as sample autocorrelations which are negative at both lags one and two would be evidence against an ARAR(1,1) model being appropriate. We note that for many economic time series the first two sample autocorrelations are positive so that the ARAR model is not ruled out.

Since both φ(L) and ω(L) have been normalized and there are no common factors, all that is needed for identification is some prior information on the roots of α(L), φ and ω.
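The mapping in (2.2.13) and (2.2.14), and its inverse, can be sketched in a few lines. Because α_1 = ω + φ and α_2 = −φω, the pair (φ, ω) are the two roots of z² − α_1 z − α_2 = 0. The function names are hypothetical, and the ordering rule used to label the roots (φ is the absolutely larger one) is an assumed piece of identifying information, not something the data can supply.

```python
import numpy as np

def arar11_to_ar2(phi, omega, mu):
    """Reduced-form (alpha0, alpha1, alpha2) implied by (2.2.13)-(2.2.14)."""
    return (1 - omega) * (1 - phi) * mu, omega + phi, -phi * omega

def ar2_to_arar11(alpha0, alpha1, alpha2):
    """Invert the mapping, labelling the absolutely larger root as phi (assumed rule)."""
    disc = alpha1 ** 2 + 4 * alpha2
    if disc < 0:
        raise ValueError("complex roots: no ARAR(1,1) with real phi and omega")
    r1 = (alpha1 + np.sqrt(disc)) / 2
    r2 = (alpha1 - np.sqrt(disc)) / 2
    phi, omega = (r1, r2) if abs(r1) >= abs(r2) else (r2, r1)
    # mu is recoverable only when neither root equals one.
    mu = alpha0 / ((1 - omega) * (1 - phi))
    return phi, omega, mu

# Hypothetical values: phi = 0.8, omega = -0.6, mu = 5.
a0, a1, a2 = arar11_to_ar2(0.8, -0.6, 5.0)
phi, omega, mu = ar2_to_arar11(a0, a1, a2)
print(a0, a1, a2, phi, omega, mu)
```

The error branch reflects the restriction noted in the text: reduced-form coefficients associated with complex roots lie outside the ARAR(1,1) parameter space.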
For example we might identify φ as the (absolutely) larger root of α(L). Since this model is just identified, we could obtain estimates of φ and ω (plus µ) from estimates of the α_i by OLS, ML, MAD, traditional Bayes and Bayesian Method of Moments (BMOM): see Zellner (1996, 1997), Zellner and Tobias (2001) and Green and Strawderman (1996). The stationarity restrictions imply that −2 < α_1 < 2 and −1 < α_2 < 1. Note that if, for example, φ > 0 and ω < 0, α_1 may be quite small in absolute value but imposing α_1 = 0 would be a specification error. Alternatively, one could estimate φ and ω by NLS and use the results to form estimates of the α_i, which should be the same as the OLS estimates. Posterior densities for φ and ω can be obtained from the unrestricted posterior for α_1 and α_2 by simulation. If the stationarity restriction is to be imposed, draws which violate this restriction would be discarded.

Now assume a quarterly seasonal model for the error, ω(L) = 1 − ω_4 L⁴, with the nonseasonal, subject-matter structure φ(L) = 1 − φL, as before. This gives a restricted AR(5) for the reduced form,

$$\alpha(L) = (1 - \phi L - \omega_4 L^4 + \phi\omega_4 L^5) = (1 - \alpha_1 L - \alpha_4 L^4 - \alpha_5 L^5), \tag{2.2.15}$$

with only three parameters in contrast to an unrestricted AR(5) with five. However, this model is overidentified so it would be necessary to obtain estimates of φ and ω_4 directly using NLS. To ensure that ω(L) is invertible impose the uniform prior, p(ω_4) = .5 over the range −1 < ω_4 < 1. To impose stationarity on Y_t use the prior p(φ) ∝ (1 − φ)^{a−1}(1 + φ)^{b−1} for −1 < φ < 1: see Zellner (1971, p.190). Finally, adopt a uniform prior on log(σ) so that p(σ) ∝ σ^{−1}. Then, given a T × 1 vector y of data and assuming ε_t to be independent normal, σ can be integrated out analytically and the joint posterior density for φ and ω_4 can be analyzed using bivariate numerical integration.

The first step in building a general ARAR model is to select a degree p for the polynomial φ(L) based on prior knowledge.
Data plots, sample autocorrelations and partial autocorrelations may also be useful at this stage, e.g. to confirm the presence of unit roots or cycles which suggest a p ≥ 2. A tentative AR(r) model for the error U_t should now be specified with r believed large enough to make ε_t white noise. This will imply that the degree of α(L) is p + r. The suitability of r can be gauged from the autocorrelations of the residuals from OLS applied to (2.2.5). This procedure differs from the Box and Jenkins (1976) method which bases the lag lengths primarily on the sizes of data autocorrelations and partial autocorrelations. Their procedure may be suitable if the researcher has no prior beliefs about φ(L), although it involves considerable pretesting that can have adverse effects upon subsequent inference; see Judge and Bock (1978). Of course, models initially based on features of a sample can in the future be rationalized by theory and confirmed with new samples.

In Bayesian analysis the choice of p and r should be guided by posterior odds ratios. If the odds analysis leads to several favored models, results regarding parameters and predictions can be averaged over the models, leading to forecasts which are often superior to non-combined forecasts. If the prior odds ratio is set to one, the posterior odds ratio becomes the Bayes factor, B_{1,2}, which can be calculated exactly for small models: see Monahan (1983). For large samples Schwarz (1978) provides the approximation

$$B_{1,2} \approx T^{q/2} e^{lr}, \tag{2.2.16}$$

where the log-likelihood ratio is lr = log[l(Θ̂_1 | y)/l(Θ̂_2 | y)], Θ̂_i is the maximum-likelihood estimate of Θ from model i, q = q_2 − q_1 and q_i is the number of parameters in model i.

We note that the regression equation commonly used to test for the presence of a unit root versus a linear trend can be written as

$$\phi(L)Y_t = \mu_0 + \mu_1 t + [\omega(L)]^{-1}\varepsilon_t, \tag{2.2.17}$$

or as

$$\alpha(L)(Y_t - \mu_0 - \mu_1 t) = \varepsilon_t: \tag{2.2.18}$$

see Schotman and van Dijk (1991) and Zivot (1994).
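Returning to the model-choice step, the Schwarz approximation (2.2.16) is simple enough to sketch directly. The function name and the input values below are hypothetical; the only ingredients are the two maximized log-likelihoods, the two parameter counts and the sample size T.

```python
import math

def schwarz_bayes_factor(loglik1, loglik2, q1, q2, T):
    """Approximate B_{1,2} = T^{(q2 - q1)/2} * exp(loglik1 - loglik2), as in (2.2.16)."""
    return T ** ((q2 - q1) / 2) * math.exp(loglik1 - loglik2)

# Hypothetical inputs: model 1 has two fewer parameters and a slightly lower likelihood.
B12 = schwarz_bayes_factor(loglik1=-101.0, loglik2=-100.0, q1=3, q2=5, T=100)

# With prior odds of one, the posterior probability of model 1 is B12 / (1 + B12).
post_prob_model1 = B12 / (1 + B12)
print(B12, post_prob_model1)
```

The factor T^{q/2} rewards the smaller model, so a modest loss in fit can still leave the odds in its favor.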
Equations (2.2.17) and (2.2.18) are both examples of the ARAR models (2.2.3) and (2.2.4) with µ a linear function of the trend variable t and φ(L) of degree 2 or more. If the unit root is believed to be in φ(L), repeated draws can be made from the joint posterior of its coefficients and the roots calculated for each draw. The proportions of draws for which the roots are complex versus real and with modulus below 1.0 versus 1.0 or more are estimates of the posterior probabilities of these properties of φ(L): see Geweke (1988), Schotman and van Dijk (1991) and Zivot (1994).

2.3 Empirical example: Housing starts

One of the leading indicators published by the U.S. Department of Commerce is the number of permits issued by local authorities for the building of new private housing units. Pankratz (1983) has described it as “an especially challenging series to model” with Box-Jenkins procedures[5]. This makes it an attractive series to use in illustrating the ARAR technique.

[5] Pankratz (1983) p.369. We suspect that the absence of exogenous variables, such as real per capita income, the stock of housing and the real price of housing, from the univariate time series model accounts for much of the difficulty in modeling and, especially, forecasting this series.

First we formed a prior belief about the degree of φ(L). Since this series is thought to lead the business cycle, we believed it should have a cycle. Therefore, we specified[6] p = 2 and we chose a proper prior for the parameters of φ(L) which placed a modest amount of probability on the region corresponding to conjugate complex roots. Because the data are quarterly, we chose an AR(4) as our tentative model for U_t. This allows for imperfections in the seasonal adjustment and for the presence in the error of omitted variables which are seasonally unadjusted. Thus our structural model is

$$(1 - \phi_1 L - \phi_2 L^2)(Y_t - \mu) = U_t, \tag{2.3.1}$$

with

$$(1 - \omega_1 L - \omega_2 L^2 - \omega_3 L^3 - \omega_4 L^4)U_t = \varepsilon_t. \tag{2.3.2}$$

From (2.3.1) and (2.3.2) we obtained the restricted AR(6) reduced form

$$\begin{aligned} Y_t = {} & \mu\phi(1)\omega(1) + (\phi_1 + \omega_1)Y_{t-1} + (\phi_2 + \omega_2 - \omega_1\phi_1)Y_{t-2} + (\omega_3 - \omega_1\phi_2 - \omega_2\phi_1)Y_{t-3} \\ & + (\omega_4 - \omega_2\phi_2 - \omega_3\phi_1)Y_{t-4} - (\omega_3\phi_2 + \omega_4\phi_1)Y_{t-5} - \omega_4\phi_2 Y_{t-6} + \varepsilon_t, \end{aligned} \tag{2.3.3}$$

which is just identified and implies the unrestricted reduced form AR(6)

$$Y_t = \alpha_0 + \alpha_1 Y_{t-1} + \alpha_2 Y_{t-2} + \alpha_3 Y_{t-3} + \alpha_4 Y_{t-4} + \alpha_5 Y_{t-5} + \alpha_6 Y_{t-6} + \varepsilon_t. \tag{2.3.4}$$

Note that if φ(L) has a unit root then φ(1) = 0 and if ω(L) has a unit root then ω(1) = 0. Thus, given a strong prior belief that µ ≠ 0 or a large sample mean for Y_t, an estimate of α_0 close to 0 would lead us to question the stationarity of both Y_t and U_t.

Our sample of 191 observations was obtained from the U.S. Department of Commerce, Bureau of Economic Analysis, Survey of Current Business, October 1995 and January 1996. These data are seasonally adjusted index numbers (based on 1987) extending from January 1948 until October 1995. We converted them to quarterly form by averaging over the months of each quarter: they are plotted in Figure 3. Neither Figure 3 nor the sample autocorrelations and partial autocorrelations[7] in Table 1 display the pattern typical of a unit root but they do show the cyclical pattern rather clearly. These results, and those which follow, used the observations from the third quarter of 1949 until the third quarter of 1990, 165 observations. The earlier observations provided lagged values and the observations from the fourth quarter of 1990 to the third quarter of 1995 were used for post-sample forecasts.

If our specification in (2.3.1) and (2.3.2) is adequate, OLS applied to (2.3.4) should yield serially uncorrelated residuals and the roots of α̂(L) should contain a conjugate complex pair with a modulus less than one and a period between 12 and 24 quarters. These are estimates of the roots of φ(L) giving a damped cycle with a period of three to six years.
There may also be additional conjugate complex pairs of roots with moduli less than one but with shorter periods which model the dynamics of U_t.

The estimated lag polynomial, with standard errors in parentheses, is

$$\hat\alpha(L) = 1 - \underset{(.07685)}{1.189}L - \underset{(.1208)}{.002388}L^2 + \underset{(.1236)}{.4343}L^3 - \underset{(.1228)}{.01077}L^4 - \underset{(.1228)}{.2700}L^5 + \underset{(.07871)}{.2014}L^6. \tag{2.3.5}$$

The sample autocorrelations and partial autocorrelations for the OLS residuals are shown in the second and third columns of Table 1: they indicate a lack of serial correlation. The roots of α̂(L) plus their moduli and periods are given in Table 2. There are three complex conjugate pairs, all of which have moduli less than one. The pair in the first line has a period of about 23 quarters, which corresponds to our prior belief about the period of the business cycle. The other two pairs have shorter periods which we attribute to dynamics of the error. Thus on the basis of these results it appears that our specification is adequate.

Unit root and trend analysis was carried out using (2.2.18) assuming a normal likelihood and α_0 = α(1)µ_0, β_1 = α(1)µ_1 and ρ = φ_1 + φ_2. The results are in Table 3 where the means of the posteriors are denoted by a hat (ˆ) and the standard deviations by “Std Dev”. P_D(ρ ≥ 1) is the posterior probability that there is a root of one or more when a diffuse prior is used while P_J(ρ ≥ 1) is obtained[8] when Jeffreys’ prior is used: see Phillips (1991). These results led us to infer that there is neither a unit root in Y_t nor a linear trend[9]. If the parameters φ_i and ω_i are not of explicit interest and all that is wanted are forecasts of future values of Y_t, they can easily be obtained by both Bayesian and frequentist analysis of the unrestricted AR(6).

[6] In this example we are abstracting from the problem of analyzing multiple cycles, such as long cycles in construction activity.

[7] For all the calculations reported in Tables 1 to 4 we used TSP Version 4.3A for OS/2.
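As a rough check on the root calculations reported in Table 2, the following sketch factors the estimated polynomial in (2.3.5) numerically. The coefficients are the rounded values printed in (2.3.5), so the output should agree with Table 2 only up to rounding. The variable names are illustrative.

```python
import numpy as np

# Coefficients of alpha_hat(L) from (2.3.5), in increasing powers of L.
alpha_hat = np.array([1.0, -1.189, -0.002388, 0.4343, -0.01077, -0.2700, 0.2014])

# Writing alpha(L) = prod(1 - eta_i L), the eta_i are the roots of the polynomial
# whose coefficients, highest power first, are exactly the entries of alpha_hat.
eta = np.roots(alpha_hat)

eta = eta[eta.imag > 0]               # keep one member of each conjugate pair
eta = eta[np.argsort(-np.abs(eta))]   # largest modulus first
moduli = np.abs(eta)
periods = 2 * np.pi / np.angle(eta)   # quarters per cycle

for e, m, per in zip(eta, moduli, periods):
    print(f"{e.real:6.3f} +/- {e.imag:.3f}i   modulus {m:.3f}   period {per:.2f}")
```

The pair with the largest modulus is the one whose period falls in the 12 to 24 quarter range and is therefore attributed to φ(L).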
Our primary interest here is to draw inferences about φ_1 and φ_2 with µ, ω_1, ω_2, ω_3, ω_4 and σ being nuisance parameters. For this purpose we used NLS, which also gives the means and standard deviations of large-sample normal approximations to the posteriors for the φ_i and ω_i, assuming diffuse priors and normal likelihood. The results are in the second column of Table 4. The approximate posterior mode for σ is denoted by σ̂. Since this model is just identified, these estimates could have been derived from those given in (2.3.5). The posterior means of ω_2 and ω_3 are small compared to their standard deviations so we imposed ω_2 ≡ ω_3 ≡ 0, which overidentified the model. Thus NLS was used to obtain the results in the third column of Table 4. The approximation in (2.2.16) gave a Bayes factor in favor of the restricted model against the unrestricted model of 32.5.

[8] These values were calculated using the COINT routines for GAUSS which scale the trend value to range from 1 to 1/T.

[9] We drew the same inference from the results of augmented Dickey-Fuller (Said and Dickey (1984)), Phillips-Perron (1988) and weighted symmetric (Pantula et al. (1994)) tests.

Table 1: Housing Starts; Autocorrelations and Partial Autocorrelations

| Lag | Data: Autocorr. (S.E.) | Data: Partial (S.E.) | AR(6) Residuals: Autocorr. (S.E.) | AR(6) Residuals: Partial (S.E.) |
|---|---|---|---|---|
| 1 | .911 (.0765) | .911 (.0765) | .00213 (.0778) | .00213 (.0778) |
| 2 | .765 (.125) | −.376 (.0765) | −.0273 (.0779) | −.0273 (.0778) |
| 3 | .581 (.150) | −.242 (.0765) | −.0238 (.0779) | −.0237 (.0778) |
| 4 | .397 (.162) | −.0111 (.0765) | .0146 (.0780) | .0139 (.0778) |
| 5 | .230 (.168) | −.0207 (.0765) | .0481 (.0780) | .0468 (.0778) |
| 6 | .0751 (.144) | −.126 (.0765) | −.0628 (.0781) | −.0630 (.0778) |
| 7 | −.0459 (.170) | .0382 (.0765) | .00562 (.0785) | .00920 (.0778) |
| 8 | −.141 (.170) | −.0471 (.0765) | .0232 (.0785) | .0221 (.0778) |
| 9 | −.210 (.171) | −.0548 (.0765) | .0322 (.0785) | .0283 (.0778) |
| 10 | −.267 (.172) | −.106 (.0765) | −.0387 (.0786) | −.0384 (.0778) |

Ljung-Box (1978) portmanteau statistics at lags 5 and 10: Q5: 344, 19.3; Q10: 370, 30.3.
Data source: U.S. Department of Commerce, Survey of Current Business.

Table 2: Housing Starts; Roots of α̂(L) by OLS

| Roots | Modulus | Period |
|---|---|---|
| .860 ± .242i | .893 | 23.0 |
| −.624 ± .382i | .731 | 2.42 |
| .358 ± .586i | .687 | 6.14 |

Table 3: Housing Starts; Posteriors for Unit Root and Trend

| | No Trend | Linear Trend |
|---|---|---|
| α̂_0 | 15.4 | 15.2 |
| Std Dev | 3.39 | 3.43 |
| β̂_1 | | .00568 |
| Std Dev | | .0123 |
| ρ̂ | .836 | .833 |
| Std Dev | .0352 | .0359 |
| P_D(ρ ≥ 1) | .000 | .000 |
| P_J(ρ ≥ 1) | .000 | .000 |

Table 4: Housing Starts; Asymptotic Posterior Moments

| | Unrestricted ARAR(2,4) | Restricted ARAR(2,4) | Unrestricted ARMA(2,2) | Restricted ARMA(2,2) |
|---|---|---|---|---|
| φ̂_1 | 1.72 | 1.63 | 1.04 | 1.22 |
| Std Dev | .0727 | .0628 | .189 | .0771 |
| φ̂_2 | −.798 | −.722 | −.217 | −.371 |
| Std Dev | .0699 | .0623 | .176 | .0741 |
| ω̂_1 | −.531 | −.461 | | |
| Std Dev | .0985 | .0805 | | |
| ω̂_2 | −.114 | | | |
| Std Dev | .118 | | | |
| ω̂_3 | −.206 | | | |
| Std Dev | .110 | | | |
| ω̂_4 | −.252 | −.149 | | |
| Std Dev | .0882 | .0743 | | |
| θ̂_1 | | | −.175 | |
| Std Dev | | | .177 | |
| θ̂_2 | | | −.398 | −.329 |
| Std Dev | | | .0910 | .0845 |
| σ̂ | 7.38 | 7.41 | 7.52 | 7.48 |
| ln likelihood | −560 | −562 | −565 | −565 |
| Q5 | .658 | 4.80 | 2.37 | 3.96 |
| Q10 | 1.89 | 5.79 | 6.96 | 7.39 |

Table 5: Housing Starts; Approx. Posterior Moments, Functions of φ_1 and φ_2

| Dynamic property | Method of approx. | Unrestricted ARAR(2,4): Mean | Std. Dev. | Skew. | Kurt. | Restricted ARAR(2,4): Mean | Std. Dev. | Skew. | Kurt. |
|---|---|---|---|---|---|---|---|---|---|
| cycle, φ_1² + 4φ_2 | Normal | −.233 | .0631 | 0.0 | 3.0 | −.232 | .0815 | 0.0 | 3.0 |
| | Simulated | −.228 | .0648 | .138 | 3.12 | −.227 | .0810 | .0600 | 2.91 |
| modulus, √(−φ_2) | Normal | .893 | .0391 | 0.0 | 3.0 | .850 | .0366 | 0.0 | 3.0 |
| | Simulated | .893 | .0392 | −.120 | 3.03 | .849 | .0365 | −.123 | 2.91 |
| period, 2π/arctan(ϑ) | Normal | 23.0 | 2.75 | 0.0 | 3.0 | 21.9 | 3.41 | 0.0 | 3.0 |
| | Simulated | 24.0 | 4.75 | 7.91 | 175 | 23.7 | 8.87 | 21.0 | 831 |

The results in Table 4 can be used to find approximate posterior probabilities for various aspects of the dynamic behavior of Y_t. The roots of φ(L) are complex, leading to a cycle in Y_t, if φ_1² + 4φ_2 < 0. If a cycle is present, it will be damped if the modulus of the roots, √(−φ_2), is less than 1.0 and its period will be 2π/arctan(ϑ), where ϑ = √(−φ_1² − 4φ_2)/φ_1. One way to approximate the posterior probabilities of these properties is to take the first two terms in a Taylor series expansion of these functions about φ̂_1 and φ̂_2, which have asymptotically normal posterior distributions. The moments of these normal approximations are given in Table 5.

An alternative procedure is to simulate the asymptotic joint posterior for the φ_i and ω_i and at each replication compute the relevant functions of φ_1 and φ_2. Estimates of posterior moments can be calculated from these simulated values and the relative frequency curves are estimates of the posterior densities. An estimate of the posterior probability of the existence of a cycle is the proportion of the φ_1² + 4φ_2 values which are negative, and the proportion of those values for which √(−φ_2) < 1.0 is an estimate of the conditional posterior probability that the cycle is damped. Also, estimates of the conditional posterior probability for interesting ranges of the period can be calculated from the simulated values of 2π/arctan(ϑ).

We performed 5000 replications of these simulations for both the unrestricted and restricted ARAR(2,4) models. The relative frequency curves for φ_1² + 4φ_2 are shown in Figure 4 for the unrestricted model and Figure 7 for the restricted model. Their moments are in the top block of Table 5. Although this is a nonlinear function of φ_1 and φ_2, the two methods of approximation are very close to one another.
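The simulation procedure described above can be sketched as follows, assuming a bivariate normal approximation to the posterior of (φ_1, φ_2). The means and standard deviations are the unrestricted ARAR(2,4) values from Table 4. The posterior correlation is not reported in the text, so the value `rho = -0.98` is purely an assumption for illustration, as are the function and variable names and the random seed; the resulting probabilities are therefore not a reproduction of the paper's figures.

```python
import numpy as np

def cycle_properties(phi1, phi2):
    """Discriminant, modulus and period for 1 - phi1*L - phi2*L^2 (scalars or arrays)."""
    disc = phi1 ** 2 + 4 * phi2
    with np.errstate(invalid="ignore"):          # NaN where the roots are real
        modulus = np.sqrt(-phi2)
        theta = np.sqrt(-disc) / phi1            # formula presumes phi1 > 0
        per = 2 * np.pi / np.arctan(theta)
    return disc, modulus, per

rng = np.random.default_rng(0)
mean = np.array([1.72, -0.798])      # posterior means, Table 4 (unrestricted ARAR)
sd = np.array([0.0727, 0.0699])      # posterior standard deviations, Table 4
rho = -0.98                          # ASSUMED correlation; not given in the text
cov = np.outer(sd, sd) * np.array([[1.0, rho], [rho, 1.0]])

draws = rng.multivariate_normal(mean, cov, size=5000)
disc, modulus, per = cycle_properties(draws[:, 0], draws[:, 1])

is_cycle = disc < 0
p_cycle = is_cycle.mean()                                   # P(cycle)
p_damped = (modulus[is_cycle] < 1.0).mean()                 # P(damped | cycle)
p_period = ((per[is_cycle] >= 16) & (per[is_cycle] <= 30)).mean()  # P(16 <= period <= 30 | cycle)
print(p_cycle, p_damped, p_period)
```

Evaluating `cycle_properties(1.72, -0.798)` at the posterior means gives values close to the "Normal" means in Table 5, which is a quick consistency check on the formulas.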
The estimated posterior probability of the existence of a cycle is greater than .99 for both models. The relative frequency curves for √(−φ_2) are in Figures 5 and 8 and their moments are in the second block of Table 5. The estimate of the conditional posterior probability of the cycles being damped is 1.0 for both models. In this case too, the two methods of approximation gave very similar results. The relative frequency curves for 2π/arctan(ϑ) are in Figures 6 and 9 and their moments are in the bottom block of Table 5. Now the two methods of approximation were very different: the simulated values are positively skewed and leptokurtic. Still, our estimate of the conditional probability of the period being between 16 and 30 quarters is more than .90 for both models.

While the ARAR model is best suited to obtaining inferences about the φ_i, it is also useful to compare its forecasting performance with that of a traditional ARMA model. An ARMA model might be suggested here by the conversion of the data from monthly to quarterly form by averaging. Application of Box-Jenkins model identification techniques to the results in Table 1 led to an ARMA(2,2) model. This is one of the models found by Pankratz[10] to be adequate and it retains the form of φ(L) suggested by our prior beliefs. The NLS results for this model are in the last two columns of Table 4. The restriction θ_1 ≡ 0, imposed to produce the results in the last column, was also imposed by Pankratz. Using a starting point based on the sample autocorrelations, the ARMA routine took 22 iterations to converge. In contrast, the ARAR routine started from 0.0 and took five iterations to converge.
Also, as a vehicle for learning about $\phi_1$ and $\phi_2$ the restricted ARMA(2,2) is less successful than the restricted ARAR(2,4): the $Q_{10}$ is larger for the restricted ARMA(2,2), the absolute ratios of posterior means to standard deviations are larger for the restricted ARAR model and the approximate Bayes factor is 1.38 in favor of the restricted ARAR. The ARMA(2,2) results also have quite different dynamic properties from those of the ARAR(2,4) models: the approximate posterior probability of cycles for the unrestricted ARMA(2,2) is only .276 while that for the restricted ARMA(2,2) is only .401.

We obtained one-step-ahead forecasts from the restricted and unrestricted ARAR(2,4) models and from the restricted and unrestricted ARMA(2,2) models for 20 quarters beyond the end of the sample. For the first forecast the coefficients were set at the sample period posterior means. For subsequent forecasts they were updated each period. Since data for this period had been left out of the sample used in estimation, we were able to calculate the forecast errors for each of the 20 quarters in the forecast period. Summary statistics for these forecast errors, as percentages of the actual future values of $Y_t$, are in Table 6. The main difference between the methods is the size of the mean percentage forecast errors, which were larger for the ARAR models. This made the RMSE for the unrestricted ARAR(2,4) larger than that for the unrestricted ARMA(2,2). However, the standard deviation of the restricted ARAR percentage forecast errors was smaller than for the restricted ARMA, so that in this case ARAR has a smaller RMSE. These forecasts are rather imprecise because, as noted above, obvious exogenous variables have been omitted and no account has been taken of long cycles in building activity.

This example demonstrates several advantages of the ARAR model over the ARMA model. NLS estimates of the ARAR parameters took many fewer iterations than ARMA estimates.
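As a reading aid for the forecast comparison, the three rows of Table 6 are linked by the identity RMSE² = mean² + (standard deviation)². The short check below applies it to the reported figures. It assumes the standard deviation is computed about the sample mean; small discrepancies are to be expected from rounding and from the choice of divisor. The function name is ours.

```python
import math


def rmse_from_mean_sd(mean_err, sd_err):
    """RMSE implied by the mean and standard deviation of forecast errors."""
    return math.sqrt(mean_err ** 2 + sd_err ** 2)


# (mean, std dev, reported RMSE) of percentage forecast errors, from Table 6.
table6 = {
    "Unrestricted ARAR(2,4)": (2.92, 7.25, 7.82),
    "Restricted ARAR(2,4)": (2.52, 6.68, 7.14),
    "Unrestricted ARMA(2,2)": (1.85, 7.25, 7.49),
    "Restricted ARMA(2,2)": (1.86, 6.98, 7.22),
}

implied = {name: rmse_from_mean_sd(m, s) for name, (m, s, _) in table6.items()}
for name, (_, _, reported) in table6.items():
    print(f"{name}: implied {implied[name]:.2f}, reported {reported:.2f}")
```

The decomposition makes the trade-off in the text explicit: the ARAR models have the larger mean error, so the restricted ARAR wins on RMSE only because its error standard deviation is enough smaller.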
There was more serial correlation in the ARMA residuals than in the ARAR residuals and the Bayes factor favored the ARAR model. The estimates of the ARAR parameters and the dynamic properties of the estimated ARAR model were in closer accord with our prior beliefs than were the ARMA results. Finally, the restricted ARAR model had percentage forecast errors with smaller RMSE than did the restricted ARMA model.

¹⁰ The other model which Pankratz found adequate was an ARMA(3,2) with $\phi_2$ set to 0. For our data that model has slightly higher residual autocorrelation, was less parsimonious and had larger forecast errors than did the ARAR(2,2).

Table 6: Housing Starts; Percentage Forecast Error Summary Statistics

| | Unrestricted ARAR(2,4) | Restricted ARAR(2,4) | Unrestricted ARMA(2,2) | Restricted ARMA(2,2) |
|---|---|---|---|---|
| Mean | 2.92 | 2.52 | 1.85 | 1.86 |
| Std Dev | 7.25 | 6.68 | 7.25 | 6.98 |
| RMSE | 7.82 | 7.14 | 7.49 | 7.22 |

Although a sample of size 165 may seem large enough to justify large-sample approximate posteriors, it is still useful to consider the calculation of exact posteriors for the $\phi_i$ and $\omega_i$. We adapted the procedure in Zellner (1971) Chapter IV to obtain exact posteriors for $\phi_1$, $\phi_2$, $\omega_1$ and $\omega_4$ from the restricted ARAR(2,4) model of column 3 in Table 4¹¹. Let $\phi' = [\phi_0, \phi_1, \phi_2]$, where $\phi_0 = \mu\phi(1)$, and $\omega' = [\omega_1, \omega_4]$. Our prior¹² on $\phi$, $\omega$ and $\sigma$ was

$$p(\phi, \omega, \sigma) = p(\phi|\sigma)\,p(\omega|\sigma)\,p(\sigma). \tag{2.3.6}$$

An inverted gamma distribution was used for $p(\sigma)$ with parameters $s^2 = 1.0$ and $v = 1$, giving a mode of .7071. We set $p(\omega|\sigma) \propto 1.0$ to reflect our lack of prior knowledge regarding the behavior of $U_t$. We chose a normal form for $p(\phi|\sigma)$ with $E(\phi|\sigma)' = \bar\phi' = [0, 1.0, -.5]$ and covariance matrix $V(\phi|\sigma) = \sigma^2 W^{-1}$ with

$$W^{-1} = \begin{bmatrix} 400 & 0 & 0 \\ 0 & 16 & -8 \\ 0 & -8 & 16 \end{bmatrix}.$$

This prior is centered in the region corresponding to complex roots for $\phi(L)$ but it is still rather uninformative with respect to $\phi_1$ and $\phi_2$. For example, conditional on $\sigma = .7071$, the prior probability of obtaining complex roots is only about .12.
¹¹ For these calculations the data were measured as deviations from the estimated sample mean divided by the standard deviation of these deviations. This had no effect on $\hat\phi_1$, $\hat\phi_2$, $\hat\omega_1$ or $\hat\omega_4$ but it served to eliminate overflow and underflow errors in the numerical evaluations of the integrals discussed below.

¹² This prior, and the improper prior used in section (3.3), are quite different from the smoothness priors introduced by Shiller (1973) because they assign prior probability to individual parameters while the smoothness prior assigns prior probability only to the differences between parameters.

Let the vector of initial values of $Y_t$ be $y_0' = [y_1, \ldots, y_{p+r}]$ and the vector of the $T$ remaining values be $y' = [y_{p+r+1}, \ldots, y_{p+r+T}]$. For this example $p = 2$, $r = 4$, $T = 165$ and all inferences are conditional on $y_0$. Define $y(\omega)$ as the $T \times 1$ vector with elements $y_t(\omega) = \omega(L)y_t$ and $z(\omega)$ as the $T \times k$ matrix with rows $z_t'(\omega) = [\omega(1), y_{t-1}(\omega), y_{t-2}(\omega)]$, where $k = p + 1$. Assume $\varepsilon_t \sim IN(0, \sigma^2)$. Then the joint posterior, after completing the square on $\phi$, can be written as

$$p(\phi, \omega, \sigma | y, y_0) \propto \sigma^{-(\tilde v + k + 1)} \exp\left\{ -\frac{\tilde v\,\tilde s^2(\omega) + [\phi - \tilde\phi(\omega)]'\,Q(\omega)\,[\phi - \tilde\phi(\omega)]}{2\sigma^2} \right\}$$

where

$$\tilde v = T + v; \qquad \tilde\phi(\omega) = [W + z'(\omega)z(\omega)]^{-1}[W\bar\phi + z'(\omega)y(\omega)],$$

$$\tilde v\,\tilde s^2(\omega) = v s^2 + \bar\phi' W \bar\phi + y'(\omega)y(\omega) - \tilde\phi(\omega)'[W + z'(\omega)z(\omega)]\tilde\phi(\omega)$$

and $Q(\omega) = [W + z'(\omega)z(\omega)]$. The use of the proper prior $p(\phi|\sigma)$ in (2.3.6) ensures that $Q(\omega)$ will be nonsingular even if $\omega(1) = 0$, thus avoiding the need to confine attention to models with $\mu \equiv 0$ or to impose the restriction $\omega(1) \neq 0$: see Zellner (1971) page 89. Integrating out $\sigma^2$ gives

$$p(\phi, \omega | y, y_0) \propto \left[\tilde s^2(\omega)\right]^{-(\tilde v + k)/2} \left\{ \tilde v + [\phi - \tilde\phi(\omega)]'\,H(\omega)\,[\phi - \tilde\phi(\omega)] \right\}^{-(\tilde v + k)/2} \tag{2.3.7}$$

where $H(\omega) = \left[\tilde s^2(\omega)\right]^{-1} Q(\omega)$. Integrating (2.3.7) over $\phi$ gives the joint posterior density for $\omega_1$ and $\omega_4$:

$$p(\omega | y, y_0) \propto \left[\tilde v\,\tilde s^2(\omega)\right]^{-\tilde v/2}\,|W + z'(\omega)z(\omega)|^{-1/2}. \tag{2.3.8}$$

However, the lack of identification mentioned above means that (2.3.8) could also be the posterior density for $\phi_1$ and $\phi_2$. This composite (scaled) posterior is shown in Figure 10. Identification was achieved by imposing our prior belief that $\phi(L)$ has conjugate complex roots leading to cyclical behavior in $y_t$. Thus we identified the higher hill in Figure 10, in the region leading to complex roots, as the joint posterior for $\phi_1$ and $\phi_2$ and the lower hill, in the region leading to real roots, as the joint posterior for $\omega_1$ and $\omega_4$.

Since (2.3.8) is difficult to integrate analytically, the marginal posteriors for $\omega_1$, $\omega_4$, $\phi_1$ and $\phi_2$ were obtained numerically. To obtain the marginal posterior for either $\omega_1$ or $\omega_4$ we numerically integrated¹³ over the other $\omega_i$. Since, conditional on $\omega$, (2.3.7) is multivariate Student-t, the conditional posterior densities of the $\phi_i$ are univariate Student-t and can be obtained analytically. The products of these conditional densities and the joint density for $\omega$ in (2.3.8) are then the joint densities of each $\phi_i$ and $\omega$.

¹³ All the numerical integrations reported here were done by Gaussian quadrature using GAUSS 386 version 3.2.13.

Table 7: Housing Starts; Exact Posterior Moments, Restricted ARAR(2,4)

| Coeff. | $\phi_1$ | $\phi_2$ | $\omega_1$ | $\omega_4$ |
|---|---|---|---|---|
| Mean | 1.62 | -.706 | -.440 | -.137 |
| Std. Dev. | .0680 | .0671 | .0860 | .0768 |
| Skewness | -.0000186 | -.000210 | -.00176 | -.00848 |

The marginal posterior densities of the $\phi_i$ were obtained by integrating these products over $\omega_1$ and $\omega_4$¹⁴. The marginal posteriors for $\omega_1$ and $\omega_4$ are plotted in Figures 11 and 12; those for $\phi_1$ and $\phi_2$ are in Figures 13 and 14. In each figure the exact density is graphed with a solid line while the large-sample normal approximate density is graphed with a dashed line. The exact posterior moments are given in Table 7. The exact and approximate posterior densities differ because they are based on different priors and because the sample is only moderately large.
However, they are quite close in the case of $\phi_1$ and $\phi_2$, which are the parameters of most interest.

To summarize, the exact posterior densities for the ARAR parameters were easily obtained and were close to the asymptotic normal posteriors derived from the NLS estimates. In the next section these techniques will be extended to single equation, distributed lag models.

¹⁴ Gibbs sampling procedures could also have been employed to compute these integrals. Marginal densities for $\phi_1$ or $\phi_2$ can also be obtained by integrating the portion of (2.3.8) which we have identified as the joint posterior of $\phi_1$ and $\phi_2$ over the other $\phi_i$.

3 Distributed Lag Models

3.1 Early distributed lag models

Jorgenson (1966) considered the general distributed lag model

$$Y_t = \mu + \lambda(L)x_t + V_t \tag{3.1.1}$$

where $x_t$ is the value of an exogenous variable $X_t$, the lag polynomial $\lambda(L)$ is infinitely long and the error was modeled as $V_t = \varepsilon_t$. Then $\lambda(L)$ was approximated as $\delta(L)/\phi(L)$ with $\delta(L) = \delta_0 + \delta_1 L + \ldots + \delta_m L^m$ and $\phi(L)$ as in (2.0.1), having no factors in common with $\delta(L)$. Multiplying (3.1.1) through by $\phi(L)$, these assumptions lead to

$$\phi(L)Y_t = \mu_0 + \delta(L)x_t + \phi(L)\varepsilon_t \tag{3.1.2}$$

where $\mu_0 = \phi(1)\mu$. The parameters of interest are the coefficients of the lag polynomials $\phi(L)$ and $\delta(L)$. As in the previous section, the first disadvantage of this model for $V_t$ is that it confines the effect of a shock $\varepsilon_t$ on $Y_t - \mu - \lambda(L)x_t$ to only the current period. Secondly, it implies an MA form for the error term in (3.1.2), leading to complicated inference procedures¹⁵.

¹⁵ These complications are avoided if the error term $\phi(L)\varepsilon_t$ is white noise; see Zellner and Geisel (1970).

A more general model for $V_t$ is the stationary ARMA

$$\pi(L)V_t = \theta(L)\varepsilon_t. \tag{3.1.3}$$

This leads to the Box and Jenkins (1976) transfer function model

$$\pi(L)\phi(L)Y_t = \pi(1)\mu_0 + \pi(L)\delta(L)x_t + \phi(L)\theta(L)\varepsilon_t. \tag{3.1.4}$$

Now it is even more difficult to draw inferences about the $\phi_i$ and $\delta_i$ because of the long, restricted MA structure of the error.
Also it is important not to over parameterize the pairs $\delta(L)$, $\phi(L)$ and $\pi(L)$, $\theta(L)$ to avoid introducing common factors which destroy identification. The ARMAX model can be obtained either by imposing $\pi(L) \equiv \phi(L)$ on (3.1.3) or by adding a distributed lag in $x_t$ to the univariate ARMA model (2.1.2), leading to

$$\phi(L)Y_t = \mu_0 + \delta(L)x_t + \theta(L)\varepsilon_t. \tag{3.1.5}$$

This model is somewhat simpler to analyze than the transfer function model but it retains the inconvenient MA error structure.

3.2 The ARAR distributed lag model (ARDLAR)

Our general distributed lag model is the univariate ARAR model (2.0.1) extended by the addition of a distributed lag in $x_t$ to obtain

$$\phi(L)Y_t = \mu_0 + \delta(L)x_t + U_t. \tag{3.2.1}$$

We assume that the degrees of $\phi(L)$ and $\delta(L)$ can be specified a priori, that $\phi(L)$ is invertible and that it has no factors in common with $\delta(L)$. Then we can write the model as

$$Y_t = \frac{\mu_0}{\phi(1)} + \frac{\delta(L)}{\phi(L)}x_t + \frac{1}{\phi(L)}U_t = \mu + \lambda(L)x_t + V_t. \tag{3.2.2}$$

This model retains the same infinite lag structure on $x_t$ as Jorgenson's model (3.1.1), the transfer function model (3.1.4), and the ARMAX model (3.1.5). It also approximates this infinite lag structure by the ratio of the two finite lag polynomials: $\lambda(L) = \delta(L)/\phi(L)$. However, in contrast to these models, we specify the same stationary AR process for $U_t$ as in the univariate case: $\omega(L)U_t = \varepsilon_t$ and $\phi(L)V_t = U_t$. Then (3.1.1) becomes the structural ARDLAR(p,m,r) model¹⁶

$$Y_t = \mu + \frac{\delta(L)}{\phi(L)}x_t + \frac{1}{\phi(L)\omega(L)}\varepsilon_t. \tag{3.2.3}$$

We can rewrite (3.2.3) as the reduced form

$$\omega(L)\phi(L)Y_t = \alpha_0 + \omega(L)\delta(L)x_t + \varepsilon_t, \tag{3.2.4}$$

where $\alpha_0 = \mu\,\omega(1)\phi(1)$.

¹⁶ This produces the same results as the technique introduced by Fuller and Martin (1961).

Our ARDLAR model is simpler than the transfer function model but it still produces very rich behavior in both $Y_t$ and $V_t$ because both $\lambda(L)$ and $1/(\omega(L)\phi(L))$ are infinite in length. Thus it retains the infinite lag structure on $x_t$ and there is no need to assume that $U_t$ is white noise to facilitate estimation.
Our model is especially convenient because it allows seasonal behavior to be included in $\omega(L)$, which could be the product of seasonal and nonseasonal polynomials.

The ARDLAR model requires the same sort of prior information to identify the $\phi_i$ and $\omega_i$ as does the ARAR model. Write the reduced form (3.2.4) as

$$\alpha(L)Y_t = \alpha_0 + \beta(L)x_t + \varepsilon_t, \tag{3.2.5}$$

where $\alpha(L) = \omega(L)\phi(L)$ and $\beta(L) = \omega(L)\delta(L)$. In contrast to the univariate case, interchanging $\phi(L)$ and $\omega(L)$ in (3.2.4) will change the likelihood function except in the special case that $\phi(L) = \omega(L)$. Similarly, interchanging $\phi(L)$ and $\delta(L)$ will change the likelihood function, unless $\phi(L) = \delta(L)$, and so will interchanging $\omega(L)$ and $\delta(L)$, unless $\omega(L) = \delta(L)$. Thus, so long as $\delta_0 \neq 1.0$, a sufficient condition for identification is that $\phi(L)$ and $\omega(L)$ be of different degrees. Two other sources of identification failure are removed by assuming that $\phi(L)$ has its first term equal to 1.0 and that there are no common factors in $\phi(L)$ and $\delta(L)$.

Now assume that $\alpha(L)$ and $\beta(L)$ are known but $\phi(L)$, $\omega(L)$ and $\delta(L)$ are unknown and write $\alpha(L)$ in terms of its roots, $\eta_i$. Given the values of $\eta_i$ we would use prior information in the same way as with the ARAR model to decide which $(1 - \eta_i L)$ are part of $\phi(L)$ and which are part of $\omega(L)$. Once $\omega(L)$ had been identified in this way it could be factored out of $\beta(L)$ to leave $\delta(L)$. In practice, the roots $\eta_i$ and the polynomial $\beta(L)$ are unknown but estimates of them may provide some guidance as to identification.

In general, the numbers of free parameters in $\alpha(L)$ and $\beta(L)$ are $p + r$ and $r + m + 1$, respectively. Unrestricted OLS applied to (3.2.5) gives $p + m + 2r + 1$ point estimates, which exceeds the number of free parameters in $\phi(L)$, $\omega(L)$ and $\delta(L)$, namely $p + r + m + 1$, by $r$: thus ARDLAR models are generally over identified.

Note that (3.2.5) is the autoregressive-distributed-lag (ARDL) model much favored by Hendry: see inter alia Hendry et al (1986). However, the two models are built in different ways.
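The mapping from the structural polynomials to the reduced form (3.2.5), and the count of over-identifying restrictions, can be illustrated with a small sketch. The numerical coefficients below are arbitrary illustrative values and the helper names are ours; polynomials are stored as coefficient lists in ascending powers of $L$.

```python
def poly_mul(a, b):
    """Multiply two lag polynomials given as coefficient lists (ascending powers of L)."""
    out = [0.0] * (len(a) + len(b) - 1)
    for i, ai in enumerate(a):
        for j, bj in enumerate(b):
            out[i + j] += ai * bj
    return out


def reduced_form(phi, omega, delta):
    """Return alpha(L) = omega(L)phi(L) and beta(L) = omega(L)delta(L)."""
    return poly_mul(omega, phi), poly_mul(omega, delta)


def excess_restrictions(p, m, r):
    """Reduced-form coefficients minus free structural parameters (intercept excluded)."""
    reduced = (p + r) + (r + m + 1)
    structural = p + r + (m + 1)
    return reduced - structural


# Illustrative values: p = 1, r = 2, m = 1.
phi = [1.0, -0.5]            # phi(L) = 1 - 0.5L
omega = [1.0, -0.3, -0.2]    # omega(L) = 1 - 0.3L - 0.2L^2
delta = [2.0, 1.0]           # delta(L) = 2 + L

alpha, beta = reduced_form(phi, omega, delta)
print(alpha)  # coefficients of alpha(L), degree p + r
print(beta)   # coefficients of beta(L), degree m + r
print(excess_restrictions(p=1, m=1, r=2))
```

Here $\alpha(L)$ and $\beta(L)$ each have degree 3, so OLS on (3.2.5) estimates 7 slope coefficients while the structural polynomials contain only 5, leaving $r = 2$ over-identifying restrictions.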
The ARDLAR model begins with fairly precise prior beliefs about the dynamics represented by $\phi(L)$ and $\delta(L)$. It then explicitly introduces the common factor $\omega(L)$ as the model for the error $U_t$. Prior beliefs about $\omega(L)$ are often diffuse. The parameters of interest are the $\phi_i$ and $\delta_i$ while the $\omega_i$ and $\sigma$ are nuisance parameters. In contrast, the ARDL model begins with the unrestricted polynomials $\alpha(L)$ and $\beta(L)$ and tries, through COMFAC analysis, to discover if they contain any common factors, like $\omega(L)$. There are no prior beliefs regarding $\phi(L)$ and $\delta(L)$. Any common factors that are discovered are then put into an AR model for the error. In this procedure the parameters of interest are initially the $\alpha_i$ and $\beta_i$ but if common factors are discovered interest presumably switches to the $\phi_i$ and $\delta_i$. Since this procedure uses the same data over several rounds of testing, it is subject to the pre-test problems mentioned above.

Assuming identification, the common factor $\omega(L)$ in (3.2.4) imposes a restriction linking the parameters in $\alpha(L)$ and $\beta(L)$. To exploit this restriction, first condition on the coefficients of $\omega(L)$ to obtain

$$\phi(L)[\omega(L)Y_t] = \delta(L)[\omega(L)x_t] + \varepsilon_t. \tag{3.2.6}$$

Then condition on the elements of $\phi(L)$ and $\delta(L)$ to obtain

$$\omega(L)[\phi(L)Y_t] = \omega(L)[\delta(L)x_t] + \varepsilon_t. \tag{3.2.7}$$

Equations (3.2.6) and (3.2.7) can be used to generate NLS estimates or to provide the basis for application of the Gibbs sampler.

An interesting simple example assumes that the degrees of $\delta(L)$ and $\phi(L)$ are both one, with $|\phi| < 1$ and $\delta_1/\delta_0 \neq -\phi$, so that

$$Y_t = \mu + \frac{(\delta_0 + \delta_1 L)}{(1 - \phi L)}x_t + V_t. \tag{3.2.8}$$

If observations are taken quarterly without seasonal adjustment, an attractive AR model for $U_t$ is the product of a nonseasonal AR(1) and a seasonal AR(1):

$$\omega(L)U_t = (1 - \omega_1 L)(1 - \omega_4 L^4)U_t = \varepsilon_t \tag{3.2.9}$$

with $|\omega_1| < 1$ and $|\omega_4| < 1$. Thus our model for $U_t$ is a restricted AR(5) with two free parameters and our model for $V_t$ is a restricted AR(6) with three free parameters ($\phi$, $\omega_1$ and $\omega_4$) plus $\sigma^2$.
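One way the two conditioning steps (3.2.6) and (3.2.7) could be turned into an iterative least squares routine is sketched below for the simplest case: $\phi(L) = 1 - \phi L$, $\delta(L) = \delta_0$, $\omega(L) = 1 - \omega L$ and no intercept. This is an illustrative alternating scheme on simulated data, not the NLS routine used for the results in this paper; all function names and parameter values are assumptions made for the example.

```python
import random


def simulate(T, phi, delta0, omega, seed=0):
    """Simulate Y_t = phi*Y_{t-1} + delta0*x_t + U_t with U_t = omega*U_{t-1} + e_t."""
    rng = random.Random(seed)
    y, x = [], []
    y_prev = u_prev = 0.0
    for _ in range(T):
        x_t = rng.gauss(0.0, 1.0)
        u_t = omega * u_prev + rng.gauss(0.0, 1.0)
        y_t = phi * y_prev + delta0 * x_t + u_t
        y.append(y_t)
        x.append(x_t)
        y_prev, u_prev = y_t, u_t
    return y, x


def ols_two_regressors(a, b, c):
    """Least squares of c on (a, b), no intercept, via the 2x2 normal equations."""
    saa = sum(v * v for v in a)
    sbb = sum(v * v for v in b)
    sab = sum(p * q for p, q in zip(a, b))
    sac = sum(p * q for p, q in zip(a, c))
    sbc = sum(p * q for p, q in zip(b, c))
    det = saa * sbb - sab * sab
    return (sac * sbb - sbc * sab) / det, (sbc * saa - sac * sab) / det


def fit_ardlar(y, x, n_iter=50):
    """Alternate between the two conditional regressions, starting from omega = 0."""
    omega = 0.0
    for _ in range(n_iter):
        # Step (3.2.6): condition on omega and filter both series by omega(L).
        yf = [y[t] - omega * y[t - 1] for t in range(1, len(y))]
        xf = [x[t] - omega * x[t - 1] for t in range(1, len(x))]
        phi, delta0 = ols_two_regressors(yf[:-1], xf[1:], yf[1:])
        # Step (3.2.7): condition on phi and delta0, regress U_t on U_{t-1}.
        u = [y[t] - phi * y[t - 1] - delta0 * x[t] for t in range(1, len(y))]
        omega = sum(p * q for p, q in zip(u[1:], u[:-1])) / sum(v * v for v in u[:-1])
    return phi, delta0, omega


y, x = simulate(T=3000, phi=0.6, delta0=1.0, omega=-0.4, seed=1)
phi_hat, delta0_hat, omega_hat = fit_ardlar(y, x)
print(phi_hat, delta0_hat, omega_hat)
```

Each pass solves one linear regression given the other block of parameters, which is the same conditioning structure a Gibbs sampler would exploit.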
Since $\delta(L) \neq \delta_0$ and the degree of $\omega(L)$ is different from that of $\phi(L)$, $\phi$, $\omega_1$ and $\omega_4$ are identified, as are $\delta_0$ and $\delta_1$. The model for $Y_t$ can be written as

$$(1 - \omega_1 L)(1 - \omega_4 L^4)(1 - \phi L)Y_t = \alpha_0 + (1 - \omega_1 L)(1 - \omega_4 L^4)(\delta_0 + \delta_1 L)x_t + \varepsilon_t \tag{3.2.10}$$

or as the unrestricted ARDL

$$Y_t = \alpha_0 + \sum_{j=1}^{6}\alpha_j Y_{t-j} + \sum_{j=0}^{6}\beta_j x_{t-j} + \varepsilon_t. \tag{3.2.11}$$

The over identification of this model is easily seen from the restrictions:

$$\begin{aligned}
\alpha_0 &= \mu\phi(1)\omega(1) & \beta_0 &= \delta_0 \\
\alpha_1 &= \omega_1 + \phi & \beta_1 &= \delta_1 - \omega_1\delta_0 \\
\alpha_2 &= -\omega_1\phi & \beta_2 &= -\omega_1\delta_1 \\
\alpha_3 &= 0 & \beta_3 &= 0 \\
\alpha_4 &= \omega_4 & \beta_4 &= -\omega_4\delta_0 \\
\alpha_5 &= -\omega_4(\omega_1 + \phi) & \beta_5 &= \omega_4(\omega_1\delta_0 - \delta_1) \\
\alpha_6 &= \omega_1\omega_4\phi & \beta_6 &= \omega_1\omega_4\delta_1.
\end{aligned} \tag{3.2.12}$$

To take advantage of the common factors in (3.2.11), first condition on $\omega_1$ and $\omega_4$, then on $\phi$, $\delta_0$ and $\delta_1$ to obtain equations analogous to (3.2.6) and (3.2.7).

The first step in building a general ARDLAR model is to select $p$, the degree of $\phi(L)$, and $m$, the degree of $\delta(L)$. These selections should reflect prior beliefs, based on subject matter knowledge and previous research, about the presence of cycles or trends and about the pattern of the coefficients in the ratio $\lambda(L) = \delta(L)/\phi(L)$. A tentative AR model of degree $r$ for the error $U_t$ should now be chosen. This will imply that $\alpha(L)$ is of degree $p + r$ and $\beta(L)$ is of degree $m + r$. The adequacy of the choice of $r$ can be checked by examining the residuals from the OLS fit of (3.2.5). If the aim of the model is only to produce forecasts one may wish to stop at this point. However, if the aim is to gain knowledge about the parameters of $\phi(L)$ and $\delta(L)$, the procedures discussed above and illustrated below should be followed.

Error correction models, partial adjustment models and adaptive expectations models can all be written as ARDLAR models. A summary of these models is given in Table 14 below.

3.3 Empirical example: growth of real GDP

The AR(3) model has often been used to forecast real GDP or its rate of growth, as in Geweke (1988).
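The restrictions in (3.2.12) can be checked mechanically by expanding the products in (3.2.10). The sketch below does this for arbitrary illustrative parameter values; the helper names are ours.

```python
def poly_mul(a, b):
    """Multiply two lag polynomials given as coefficient lists (ascending powers of L)."""
    out = [0.0] * (len(a) + len(b) - 1)
    for i, ai in enumerate(a):
        for j, bj in enumerate(b):
            out[i + j] += ai * bj
    return out


def expand_3_2_10(phi, w1, w4, d0, d1):
    """Expand (3.2.10) and return ([alpha_1..alpha_6], [beta_0..beta_6]) of (3.2.11)."""
    omega = poly_mul([1.0, -w1], [1.0, 0.0, 0.0, 0.0, -w4])
    lhs = poly_mul(omega, [1.0, -phi])
    # Moving the lagged Y terms to the right-hand side flips their signs.
    alpha = [-c for c in lhs[1:]]
    beta = poly_mul(omega, [d0, d1])
    return alpha, beta


def restrictions_3_2_12(phi, w1, w4, d0, d1):
    """Closed-form coefficients as listed in (3.2.12), excluding alpha_0."""
    alpha = [w1 + phi, -w1 * phi, 0.0, w4, -w4 * (w1 + phi), w1 * w4 * phi]
    beta = [d0, d1 - w1 * d0, -w1 * d1, 0.0, -w4 * d0,
            w4 * (w1 * d0 - d1), w1 * w4 * d1]
    return alpha, beta


params = dict(phi=0.5, w1=0.3, w4=0.2, d0=2.0, d1=1.0)  # illustrative values
alpha_exp, beta_exp = expand_3_2_10(**params)
alpha_cf, beta_cf = restrictions_3_2_12(**params)
print(alpha_exp)
print(beta_exp)
```

With five structural parameters generating thirteen slope coefficients, two of which are forced to zero, the degree of over identification is easy to see.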
However, Garcia-Ferrer et al (1987), Zellner and Hong (1989), Hong (1989) and Zellner et al (1991) have shown that AR(3) models give poor forecasts of turning points, which can be improved by converting them to distributed lag models including lagged exogenous variables as leading indicators. We show in this example how a further extension to an ARDLAR is useful.

Let $Y_t$ be the first difference of the log of real GDP. We begin by building univariate AR and ARAR models for $Y_t$ to further illustrate the ARAR technique and to provide a basis for comparison with the subsequent ARDLAR model. We then introduce two leading indicators and use them as the exogenous variables in an ARDLAR model.

We follow earlier literature in specifying the first of our univariate models as an unrestricted AR(3). A priori we believe that $Y_t$ follows a damped cycle, so the first step in building our ARAR model was to specify $p = 2$. Then setting $r = 1$ led to an $\alpha(L)$ of degree three, as in the unrestricted AR(3):

$$(1 - \omega_1 L)(1 - \phi_1 L - \phi_2 L^2)Y_t = \alpha_0 + \varepsilon_t. \tag{3.3.1}$$

$Y_t$ was constructed from quarterly observations on U.S. GDP in millions of 1987 dollars, seasonally adjusted at annual rates, from the fourth quarter of 1949 to the fourth quarter of 1990; 165 observations. Observations from the first quarter of 1991 to the third quarter of 1995 were held back for use in calculating forecast errors. The results of trend and unit roots analyses using three lags (the same as in (3.3.1)) appear in the top panel of Table 8, which uses the same notation as Table 3. We concluded from these results that $Y_t$ is free of unit roots and trends.
Table 8: Unit Roots and Trends; Real GDP, M2 and Stock Returns

| | No Trend | Linear Trend |
|---|---|---|
| Rate of Growth of Real GDP | | |
| $\hat\alpha_0$ | .04604 | .006394 |
| Std Dev | .001045 | .001776 |
| $\hat\beta_1$ | | -.003146 |
| Std Dev | | .002529 |
| $\hat\rho$ | .3894 | .36375 |
| Std Dev | .09417 | .09600 |
| $P_D(\rho \geq 1)$ | .00000 | .00000 |
| $P_J(\rho \geq 1)$ | .00000 | .00000 |
| Rate of Growth of Real M2 | | |
| $\hat\alpha_0$ | .002594 | .0032332 |
| Std Dev | .0008632 | .001585 |
| $\hat\beta_1$ | | -.001253 |
| Std Dev | | .002611 |
| $\hat\rho$ | .5922 | .5910 |
| Std Dev | .06351 | .06352 |
| $P_D(\rho \geq 1)$ | .00000 | .00000 |
| $P_J(\rho \geq 1)$ | .00000 | .00000 |
| Rate of Growth of Real Stock Returns | | |
| $\hat\alpha_0$ | .006503 | .01626 |
| Std Dev | .005782 | .01163 |
| $\hat\beta_1$ | | -.01928 |
| Std Dev | | .01996 |
| $\hat\rho$ | .1447 | .1370 |
| Std Dev | .07708 | .07726 |
| $P_D(\rho \geq 1)$ | .00000 | .00000 |
| $P_J(\rho \geq 1)$ | .00000 | .00000 |

OLS results for the unrestricted AR(3) model are in the second column of Table 9. The roots of $\hat\alpha(L)$, their moduli and periods are in the top panel of Table 10. They support our a priori belief in a damped cycle. Because the ARAR(2,1) model is just identified, estimates of its parameters could have been obtained from the OLS results or directly by NLS: see column three of Table 9. Of course, the ln likelihoods, residual diagnostics, estimated roots, moduli and periods are the same for the two methods. The value of $\hat\alpha_3$ obtained by OLS is small compared to its standard deviation but the values of both $\hat\phi_2$ and $\hat\omega_1$ are large compared to their standard deviations. Thus setting $\alpha_3$ to 0 would be a specification error. Table 11 uses the same notation as Table 5 to present posterior results for the dynamic properties of the estimated $\phi(L)$ from Table 9. The asymptotic posterior probability of the presence of a cycle is .72.

We now introduce two leading indicator variables which have been used in the past by Garcia-Ferrer et al (1987), Zellner and Hong (1989), Hong (1989) and Zellner et al (1991): the rate of growth of real M2 and the real rate of return on stocks. Monthly data on M2 in billions of 1987 dollars and on an index of the prices of 500 common stocks were taken from U.S. Department of Commerce, Bureau of Economic Analysis, Survey of Current Business, October 1995 and January 1996. To convert these monthly series to quarterly series we used the value for the third month in each quarter as the value for that quarter. The rate of growth of real M2, $x_{1,t}$, is the first difference of the log of M2 and the real rate of return on stocks, $x_{2,t}$, is the first difference of the log of common stock prices, which were deflated by the consumer price index. The results of trend and unit roots analyses for $x_{1,t}$ and $x_{2,t}$ are in the second and third panels of Table 8. The lag length was chosen to minimize the BIC for an unrestricted AR model: it was a lag of one in both cases. Here too, all the posterior probabilities of stochastic nonstationarity were zero to eight significant digits.

To obtain an ARDLAR model we added distributed lags in $x_{1,t}$ and $x_{2,t}$ to (3.3.1). In doing so we specified the lag polynomials so as to: reflect the character of $x_{1,t}$ and $x_{2,t}$ as leading indicators; account for the fact that the reporting lag before data on the growth of M2 are publicly available is much longer than the reporting lag for real stock returns; and take advantage of the way in which monthly values of $x_{1,t}$ and $x_{2,t}$ were converted to quarterly values. This produced a generalization of the restricted reduced form (3.2.4) which we label ARDLAR($p, m_1, m_2, r$):

$$\omega(L)\phi(L)Y_t = \alpha_0 + \omega(L)\delta_1(L)x_{1,t} + \omega(L)\delta_2(L)x_{2,t} + \varepsilon_t \tag{3.3.2}$$

where $\omega(L)$ and $\phi(L)$ are specified as above, $\delta_1(L) = \delta_{1,2}L^2$ (so $m_1 = 2$ with $\delta_{1,0} = \delta_{1,1} = 0$) and $\delta_2(L) = \delta_{2,1}L$ (so $m_2 = 1$ with $\delta_{2,0} = 0$). Thus, there is a delay of three months before $x_{1,t}$ has an impact on $Y_t$ and a delay of only one month before $x_{2,t}$ has an impact.
We can also write (3.3.2) as the partially restricted reduced form ARDL(3,3,2) model

$$Y_t = \alpha_0 + \alpha_1 Y_{t-1} + \alpha_2 Y_{t-2} + \alpha_3 Y_{t-3} + \beta_{1,2}x_{1,t-2} + \beta_{1,3}x_{1,t-3} + \beta_{2,1}x_{2,t-1} + \beta_{2,2}x_{2,t-2} + \varepsilon_t, \tag{3.3.3}$$

where the restrictions, which over identify the model, are

$$\begin{aligned}
\alpha_0 &= \mu\phi(1)\omega(1) & \beta_{1,2} &= \delta_{1,2} \\
\alpha_1 &= \omega_1 + \phi_1 & \beta_{1,3} &= -\omega_1\delta_{1,2} \\
\alpha_2 &= \phi_2 - \omega_1\phi_1 & \beta_{2,1} &= \delta_{2,1} \\
\alpha_3 &= -\omega_1\phi_2 & \beta_{2,2} &= -\omega_1\delta_{2,1}.
\end{aligned}$$

Table 9: GDP Growth; Asymptotic Posterior Moments

| | AR(3), Eqn (2.2.5) | ARAR(2,1), Eqn (3.3.1) | ARDLAR(2,2,1,1), Eqn (3.3.2) | Restricted ARDL(3,3,2), Eqn (3.3.3) | ARDL(3,3,2) by OLS on Eqn (3.3.3) |
|---|---|---|---|---|---|
| $\hat\alpha_0$ | .004884 | .004884 | .004049 | .004049 | .004023 |
| Std Dev | .001103 | .001103 | .001056 | .001056 | .001056 |
| $\hat\alpha_1$ | .3403 | | | .2091 | .1993 |
| Std Dev | .07883 | | | .07766 | .07826 |
| $\hat\alpha_2$ | .1257 | | | .1357 | .1158 |
| Std Dev | .08267 | | | .07206 | .07693 |
| $\hat\alpha_3$ | -.08642 | | | -.05417 | -.01090 |
| Std Dev | .07841 | | | .06628 | .07346 |
| $\hat\beta_{1,2}$ | | | | .1100 | .1328 |
| Std Dev | | | | .05283 | .07869 |
| $\hat\beta_{1,3}$ | | | | .04623 | .003707 |
| Std Dev | | | | .02255 | .07698 |
| $\hat\beta_{2,1}$ | | | | .04137 | .03639 |
| Std Dev | | | | .008879 | .009529 |
| $\hat\beta_{2,2}$ | | | | .01739 | .02818 |
| Std Dev | | | | .005981 | .01047 |
| $\hat\phi_1$ | | .7676 | .6295 | | |
| Std Dev | | .1443 | .1450 | | |
| $\hat\phi_2$ | | -.2023 | -.1289 | | |
| Std Dev | | .1279 | .1208 | | |
| $\hat\omega_1$ | | -.4273 | -.4203 | | |
| Std Dev | | .1364 | .1410 | | |
| $\hat\delta_{1,2}$ | | | .1100 | | |
| Std Dev | | | .05283 | | |
| $\hat\delta_{2,1}$ | | | .04137 | | |
| Std Dev | | | .008879 | | |
| $\hat\sigma$ | .09761 | .009761 | .008921 | .008921 | .08919 |
| ln(L) | 531.7 | 531.7 | 547.3 | 547.3 | 548.7 |
| $Q_5$ | .459 | .459 | .803 | .803 | .537 |
| $Q_{10}$ | 6.78 | 6.78 | 9.41 | 9.41 | 7.96 |

NLS results for this model are in the fourth column of Table 9. They show that the addition of the distributed lags in $x_{1,t}$ and $x_{2,t}$ was useful because the posterior means $\hat\delta_{1,2}$ and $\hat\delta_{2,1}$ are large compared to their standard deviations and because the residual variance was reduced. Also the approximate Bayes factor is 36100 in favor of the ARDLAR(2,2,1,1) over the ARAR(2,1). The fifth column of Table 9 shows the results for the reduced form ARDL(3,3,2) implied by the restrictions shown below (3.3.3), while those in the sixth column were obtained by unrestricted OLS on (3.3.3).
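The restrictions below (3.3.3) can be checked against the reported estimates. The sketch below maps the structural posterior means in Table 9 into the implied reduced form coefficients, and also computes the complex pair of inverse roots of $\phi(L)$ with its modulus and period for comparison with Table 10. The function names are ours, and agreement is only expected up to the rounding of the published figures.

```python
import cmath
import math


def reduced_form(phi1, phi2, omega1, delta12=0.0, delta21=0.0):
    """Reduced form coefficients implied by the restrictions below (3.3.3)."""
    return {
        "alpha1": omega1 + phi1,
        "alpha2": phi2 - omega1 * phi1,
        "alpha3": -omega1 * phi2,
        "beta12": delta12,
        "beta13": -omega1 * delta12,
        "beta21": delta21,
        "beta22": -omega1 * delta21,
    }


def complex_pair(phi1, phi2):
    """Root of z**2 - phi1*z - phi2 = 0 with positive imaginary part, its modulus and period."""
    disc = phi1 ** 2 + 4.0 * phi2
    if disc >= 0.0:
        raise ValueError("phi(L) has real roots: no cycle")
    root = (phi1 + cmath.sqrt(disc)) / 2.0
    period = 2.0 * math.pi / math.atan2(root.imag, root.real)
    return root, abs(root), period


# Posterior means from columns three and four of Table 9.
arar = reduced_form(0.7676, -0.2023, -0.4273)
ardlar = reduced_form(0.6295, -0.1289, -0.4203, delta12=0.1100, delta21=0.04137)
root_arar, mod_arar, per_arar = complex_pair(0.7676, -0.2023)
root_ardlar, mod_ardlar, per_ardlar = complex_pair(0.6295, -0.1289)

print(arar)
print(ardlar)
print(root_arar, mod_arar, per_arar)
print(root_ardlar, mod_ardlar, per_ardlar)
```

The third root of $\hat\alpha(L)$ in each case is simply $\hat\omega_1$, the real root contributed by the error polynomial.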
A researcher who focused on these OLS results might be tempted to set $\alpha_3$ and $\beta_{1,3}$ to zero. But the results in columns four and five of Table 9 suggest that these restrictions would be inappropriate. Also the approximate Bayes factor in favor of the ARDLAR(2,2,1,1) model over the partially restricted ARDL(3,3,2) is 40.69. The estimated roots of $\hat\alpha(L)$ implied by the ARDLAR(2,2,1,1) model, plus their modulus and period, are given in the third panel of Table 10. They agree closely with those from the AR(3) and ARAR(2,1) models but are quite different from those from the partially restricted ARDL(3,3,2) model in the fourth panel.

As in the housing example, we approximated the posterior distributions of the dynamic properties of $\phi(L)$ from the ARAR(2,1) and ARDLAR(2,2,1,1) models in two ways: by a normal distribution using the first two terms in a Taylor series expansion of the asymptotic NLS distributions or by simulating the nonlinear functions 5000 times. The moments of these two approximations are in Table 11 and their relative frequency curves are in Figures 15 to 20. From the simulations the estimated posterior probability of there being a cycle is .73 for the ARAR(2,1) model and .62 for the ARDLAR(2,2,1,1) model. Conditional on there being a cycle, the estimated probability of it being damped was 1.0 for both models. As with the housing example, the two approximations were quite close to one another for the functions showing the existence of a cycle and its modulus. However, the simulations of the period were skewed right and leptokurtic, especially for the ARAR(2,1) model. For both models the estimated probability of the period being between six quarters and 30 quarters was greater than .96.

Next we obtained forecasts of the level of GDP for 16 periods beyond the end of the sample used in estimation. For this purpose the ARAR(2,1) model was written in its restricted AR(3) reduced form and the ARDLAR(2,2,1,1) in its restricted ARDL(3,3,2) reduced form.
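The two approximate Bayes factors quoted for the GDP models can be reproduced from the ln likelihoods in Table 9 if one assumes they are computed with the Schwarz (1978) large-sample approximation, which penalizes each extra parameter by a factor of $T^{-1/2}$. That formula, the parameter counts and the use of $T = 165$ are our assumptions for this sketch; this section does not restate the definition.

```python
import math


def approx_bayes_factor(lnL_a, k_a, lnL_b, k_b, T):
    """Schwarz-type approximation to the Bayes factor for model A over model B.

    lnL_*: maximized log likelihoods; k_*: numbers of estimated coefficients;
    T: sample size. Assumed form: exp(lnL_a - lnL_b) * T ** (-(k_a - k_b) / 2).
    """
    return math.exp((lnL_a - lnL_b) - 0.5 * (k_a - k_b) * math.log(T))


T = 165
# ARDLAR(2,2,1,1) (6 coefficients) against ARAR(2,1) (4 coefficients).
bf_vs_arar = approx_bayes_factor(547.3, 6, 531.7, 4, T)
# ARDLAR(2,2,1,1) (6 coefficients) against the ARDL(3,3,2) fitted by OLS (8 coefficients).
bf_vs_ardl = approx_bayes_factor(547.3, 6, 548.7, 8, T)

print(round(bf_vs_arar), round(bf_vs_ardl, 2))
```

Under these assumptions the ARDL(3,3,2) has the higher likelihood but loses to the ARDLAR(2,2,1,1) because of its two additional coefficients.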
Then forecasts were calculated from these two models and from the partially restricted ARDL(3,3,2) and converted to forecasts of the level of GDP. Summary statistics for the percentage forecast errors are shown in Table 12.

Table 10: GDP Growth; Roots of Estimated $\hat\alpha(L)$

| Model | Roots | Modulus | Period |
|---|---|---|---|
| Unrestricted AR(3) by OLS | .3838 ± .2344i | .4497 | 11.46 |
| | -.4273 | .4273 | |
| $\hat\alpha(L)$ from ARAR(2,1) by NLS | .3838 ± .2343i | .4497 | 11.46 |
| | -.4273 | .4273 | |
| $\hat\alpha(L)$ from ARDLAR(2,2,1,1) by NLS | .3147 ± .1727i | .3590 | 12.52 |
| | -.4203 | .4203 | |
| $\hat\alpha(L)$ from ARDL(3,3,2) by OLS | .4151 | .4151 | |
| | -.3026 | .3026 | |
| | .08681 | .08681 | |

Table 11: GDP Growth; Approx. Posterior Moments, Functions of $\phi_1$ and $\phi_2$

| Dynamic property | Method of approx. | ARAR(2,1): Mean | Std. Dev. | Skew. | Kurt. | ARDLAR(2,2,1,1): Mean | Std. Dev. | Skew. | Kurt. |
|---|---|---|---|---|---|---|---|---|---|
| cycle, $\phi_1^2 + 4\phi_2$ | Normal | -.220 | .334 | 0.0 | 3.0 | -.119 | .334 | 0.0 | 3.0 |
| | Simulated | -.199 | .329 | .138 | 2.95 | -.0928 | .336 | .256 | 3.15 |
| modulus, $\sqrt{-\phi_2}$ | Normal | .450 | .142 | 0.0 | 3.0 | .359 | .168 | 0.0 | 3.0 |
| | Simulated | .500 | .0925 | .179 | 2.75 | .438 | .0922 | .194 | 2.66 |
| period, $2\pi/\arctan(\vartheta)$ | Normal | 11.5 | 6.02 | 0.0 | 3.0 | 12.5 | 13.1 | 0.0 | 3.0 |
| | Simulated | 13.2 | 15.7 | 16.5 | 362 | 12.8 | 13.2 | 8.59 | 103 |

Table 12: GDP Growth; Percentage Forecast Error Summary Statistics

| | ARAR(2,1) | ARDLAR(2,2,1,1) | Partially Restricted ARDL(3,3,2) |
|---|---|---|---|
| Mean | -.0434 | -.180 | -.170 |
| Std Dev | 1.65 | 1.74 | 1.74 |
| RMSE | 1.66 | 1.75 | 1.75 |

The forecasting performance of the ARAR model was the best of the three models, in spite of its lack of exogenous variables. On RMSE grounds the ARDLAR and ARDL models were nearly equal.

To calculate exact posterior results for the ARDLAR(2,2,1,1) model, let $x_t' = [x_{1,t-2}, x_{2,t-1}]$ and $\gamma' = [\mu_0, \phi_1, \phi_2, \delta_{1,2}, \delta_{2,1}]$. In contrast to the housing model we used a uniform, diffuse prior for $\omega_1$, $\gamma$ and $\log\sigma$. Let $s = \max(p + r, m_1 + r, m_2 + r)$, and write the initial values of $Y_t$ and $x_t$ as $y_0' = [y_1, \ldots, y_s]$ and $x_0' = [x_1', \ldots, x_s']$. Write the $T$ remaining observations as $y' = [y_{s+1}, \ldots, y_{s+T}]$ and $x' = [x_{s+1}, \ldots, x_{s+T}]$. In this example $s = 3$ and $T = 165$.
All inferences were conditional on $x_0$ and $y_0$. Define $y(\omega)$ as the $T \times 1$ vector with elements $y_t(\omega) = y_t - \omega_1 y_{t-1}$ and $x(\omega)$ as the $T \times 2$ matrix with rows $x_t'(\omega) = x_t' - \omega_1 x_{t-1}'$. Then let $z(\omega)$ be the $T \times k$ matrix with rows $z_t'(\omega) = [\omega(1), y_{t-1}(\omega), y_{t-2}(\omega), x_t'(\omega)]$, where $k = 1 + p + 2 = 5$. Assume $\varepsilon_t \sim IN(0, \sigma^2)$. Then the joint posterior, after completing the square on $\gamma$, is

$$p(\gamma, \omega, \sigma | y, z, y_0, x_0) \propto \sigma^{-(T+2)} \exp\left\{ -\frac{v\,\tilde s^2(\omega) + [\gamma - \tilde\gamma(\omega)]'\,[z'(\omega)z(\omega)]\,[\gamma - \tilde\gamma(\omega)]}{2\sigma^2} \right\}$$

where

$$v = T - k; \qquad \tilde\gamma(\omega) = [z'(\omega)z(\omega)]^{-1}z'(\omega)y(\omega)$$

and

$$v\,\tilde s^2(\omega) = y'(\omega)y(\omega) - \tilde\gamma(\omega)'[z'(\omega)z(\omega)]\tilde\gamma(\omega).$$

Integrating out $\sigma^2$ gives

$$p(\gamma, \omega | y, z, y_0, x_0) \propto \left[\tilde s^2(\omega)\right]^{-(v+k)/2} \left\{ v + [\gamma - \tilde\gamma(\omega)]'\,H(\omega)\,[\gamma - \tilde\gamma(\omega)] \right\}^{-(v+k)/2} \tag{3.3.4}$$

where $H(\omega) = \left[\tilde s^2(\omega)\right]^{-1}[z'(\omega)z(\omega)]$. Integrating (3.3.4) over $\gamma$ gives the marginal posterior density for $\omega_1$:

$$p(\omega_1 | y, z, y_0, x_0) \propto \left[v\,\tilde s^2(\omega)\right]^{-v/2}\,|z'(\omega)z(\omega)|^{-1/2}. \tag{3.3.5}$$

This density is plotted, together with the large-sample approximation, in Figure 21. Posterior moments for $\omega_1$ are in Table 13. Since a diffuse prior was used, the difference between the two densities is due entirely to the approximation error resulting from the small sample size. Conditional on $\omega_1$, (3.3.4) is a multivariate Student-t density so the conditional posterior densities of the $\gamma_i$ are univariate Student-t. To obtain the marginal posterior density for an individual $\gamma_i$ we numerically integrated $\omega_1$ out of the product of the conditional Student-t density and the marginal density for $\omega_1$ given in (3.3.5). The resulting posterior densities are plotted in Figures 22 to 25 and their moments are in Table 13. Clearly, the asymptotic approximations are not nearly so close to the exact densities as was the case in the housing example above.

Table 13: GDP Growth; ARDLAR(2,2,1,1) Model, Exact Posterior Moments

| Coeff. | $\phi_1$ | $\phi_2$ | $\omega_1$ | $\delta_{1,2}$ | $\delta_{2,1}$ |
|---|---|---|---|---|---|
| Mean | .4678 | -.02723 | -.4203 | .1472 | .03836 |
| Std. Dev. | .1842 | .1190 | .1898 | .06145 | .008749 |
| Skewness | -.4976 | -.02367 | .5911 | .3166 | -.02477 |
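To show how a marginal posterior of the form (3.3.5) can be evaluated numerically, the sketch below uses a deliberately simplified model, $y_t = \gamma x_t + U_t$ with $U_t = \omega U_{t-1} + \varepsilon_t$, so that $k = 1$ and the determinant is a scalar. It works with the log of the density to avoid overflow and underflow and normalizes with the trapezoid rule on a grid restricted to $(-1, 1)$. The simulated data, the grid, the trapezoid rule (used here in place of Gaussian quadrature) and all names are assumptions of this illustration, not the calculations behind Table 13.

```python
import math
import random


def simulate(T, gamma, omega, seed=0):
    """Simulate y_t = gamma*x_t + U_t with U_t = omega*U_{t-1} + e_t."""
    rng = random.Random(seed)
    y, x, u = [], [], 0.0
    for _ in range(T):
        x_t = rng.gauss(0.0, 1.0)
        u = omega * u + rng.gauss(0.0, 1.0)
        x.append(x_t)
        y.append(gamma * x_t + u)
    return y, x


def log_marginal(y, x, omega):
    """Log of [v*s2(omega)]**(-v/2) * |z'z|**(-1/2), the kernel in (3.3.5), with k = 1."""
    yf = [y[t] - omega * y[t - 1] for t in range(1, len(y))]
    zf = [x[t] - omega * x[t - 1] for t in range(1, len(x))]
    zz = sum(z * z for z in zf)
    zy = sum(z * w for z, w in zip(zf, yf))
    v = len(yf) - 1                                  # v = T - k
    vs2 = sum(w * w for w in yf) - zy * zy / zz      # y'y - gamma~' (z'z) gamma~
    return -0.5 * v * math.log(vs2) - 0.5 * math.log(zz)


def trapezoid(values, step):
    """Trapezoid rule on an equally spaced grid."""
    return step * (sum(values) - 0.5 * (values[0] + values[-1]))


def marginal_on_grid(y, x, n_points=399):
    """Normalized marginal posterior of omega on a grid, and its posterior mean."""
    grid = [-0.995 + 1.99 * i / (n_points - 1) for i in range(n_points)]
    logp = [log_marginal(y, x, w) for w in grid]
    top = max(logp)                                  # subtract the max before exponentiating
    dens = [math.exp(lp - top) for lp in logp]
    step = grid[1] - grid[0]
    area = trapezoid(dens, step)
    dens = [d / area for d in dens]
    mean = trapezoid([w * d for w, d in zip(grid, dens)], step)
    return grid, dens, mean


y, x = simulate(T=400, gamma=2.0, omega=-0.4, seed=3)
grid, dens, post_mean = marginal_on_grid(y, x)
print(post_mean)
```

The same grid can be reused to integrate $\omega$ out of the product of a conditional Student-t density and `dens`, which is the step described above for the $\gamma_i$.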
4 Conclusions

We have shown in this paper that a useful model for the error in univariate and single equation distributed lag models is a finite, stationary AR. In the past researchers have often used a white noise or MA model for the error without much prior or other information to support their choice. The ARAR and ARDLAR models allow for very rich behavior of the error process and yet are usually easier to implement empirically than models with MA errors. Other appealing features of our ARAR model are its parsimony and the fact that all its components obey the Wold decomposition. We have shown theoretically and empirically how a researcher's prior beliefs about the autoregressive structure of the observable variable can be used to solve the identification problem inherent in the model. In cases with restrictions on the parameters of the lag polynomials, or if exogenous variables are present, the models become over identified. The extension of these ideas to dynamic multivariate time series, simultaneous equations models and nonlinear models displaying chaos is the subject of our current research.
Table 14: Examples of Other ARDLAR Models

Simple Error Correction Model: Banerjee et al (1993), Hendry et al (1986)

$\phi(L) = 1 - \phi L, \quad \omega(L) = 1 - \omega L, \quad \delta(L) = \delta_0 + \delta_1 L$

$\Delta Y_t = \alpha_0 + (\omega + \phi - 1)(Y_{t-1} - x_{t-1}) - \omega\phi(Y_{t-2} - x_{t-2}) + \delta_0 \Delta x_t + [\omega + \phi + \delta_1 + (1 - \omega)\delta_0 - 1]x_{t-1} - \omega(\phi + \delta_1)x_{t-2} + \varepsilon_t$

Partial Adjustment Model: Harberger (1960), Nerlove (1958), Pagan (1985)

$\Delta Y_t = \mu^* + \phi^*(y_t^* - Y_{t-1}) + U_t, \quad 0 \le \phi^* < 1$

$y_t^* = \psi_0 + \psi_1 x_t$

$\phi(L) = 1 - \phi L, \quad \delta(L) = \delta_0, \quad \omega(L) = 1$

$\phi = 1 - \phi^*, \quad \delta_0 = \phi^*\psi_1, \quad \mu_0 = \mu^* + \phi^*\psi_0$

$(1 - \phi L)Y_t = \mu_0 + \delta_0 x_t + \varepsilon_t$

Adaptive Expectations Model: Zellner and Geisel (1970), Zellner (1971)

$Y_t = \mu + \psi x^*_{t+1} + V_t, \quad x^*_{t+1} = \phi x^*_t + (1 - \phi)x_t, \quad 0 \le \phi \le 1$

$\delta_0 = \psi(1 - \phi)$

$\omega(L)(1 - \phi L)V_t = \varepsilon_t$

$\omega(L)(1 - \phi L)Y_t = \delta_0\,\omega(L)x_t + \varepsilon_t$

Finite Distributed Lag Model

$\phi(L) \equiv 1.0$

$\omega(L)Y_t = \omega(L)\delta(L)x_t + \varepsilon_t$

Acknowledgements

Carter acknowledges the generous hospitality of the Graduate School of Business, The University of Chicago during the conduct of this research. Zellner’s research was financed in part by the National Science Foundation and by the H.G.B. Alexander Endowment Fund, Graduate School of Business, University of Chicago.

References

Banerjee, A., J. Dolado, J. W. Galbraith and D. F. Hendry (1993): Co-Integration, Error-Correction, and the Econometric Analysis of Non-Stationary Data, Oxford, Oxford University Press.

Box, G. E. and G. Jenkins (1976): Time Series Analysis: Forecasting and Control, 2nd ed., San Francisco, Holden Day.

Burns, A. F. and W. C. Mitchell (1946): Measuring Business Cycles, New York, NBER.

Caplin, A. and J. Leahy (1999): “Durable Goods Cycles,” NBER Working Paper 6987.

Cooper, R. and A. Johri (1999): “Learning by doing and aggregate fluctuations,” NBER Working Paper 6898.

Fuller, W.A. and J.E. Martin (1961): “The effects of autocorrelated errors on the statistical estimation of distributed lag models,” Journal of Farm Economics, 43, 71-82.

Gandolfo, G. (1996): Economic Dynamics, 3rd ed., Berlin, Springer-Verlag.

Garcia-Ferrer, A., R.A. Highfield, F. Palm and A. Zellner (1987): “Macroeconomic forecasting using pooled international data,” Journal of Business and Economic Statistics, 5, 53-67.

Geweke, J. (1988): “The secular and cyclical behavior of real GDP in 19 OECD countries, 1957-1983,” Journal of Business and Economic Statistics, 6, 479-486.

Goodwin, R.M. (1947): “Dynamical coupling with especial reference to markets having production lags,” Econometrica, 15, 181-204.

Green, E. and W. Strawderman (1996): “A Bayesian Growth and Yield Model for Slash Pine Plantations,” Journal of Applied Statistics, 23, 285-299.

Harberger, A.C. (1960): The Demand for Durable Goods, Chicago, University of Chicago Press.

Hendry, D.F., A.R. Pagan and J.D. Sargan (1986): “Dynamic specification,” in Griliches, Z. and M.D. Intriligator, eds., Handbook of Econometrics, 3, Amsterdam, North-Holland.

Hong, C. (1989): Forecasting Real Output Rates and Cyclical Properties of Models, A Bayesian Approach, PhD Thesis, Department of Economics, University of Chicago.

Jorgenson, D. (1966): “Rational distributed lag functions,” Econometrica, 34, 135-139.

Judge, G.G. and M.E. Bock (1978): The Statistical Implications of Pre-Testing and Stein-Rule Estimators in Econometrics, Amsterdam, North-Holland.

Ljung, G.M. and G.E.P. Box (1978): “On a measure of lack of fit in time series models,” Biometrika, 65, 297-303.

Metzler, L.A. (1941): “The nature and stability of inventory cycles,” The Review of Economics and Statistics, 23, 113-129.

Monahan, J.F. (1983): “Fully Bayesian analysis of ARMA time series models,” Journal of Econometrics, 21, 307-331.

Nerlove, M. (1958): The Dynamics of Supply: Estimation of Farmers’ Response to Price, Baltimore, Johns Hopkins Press.

Nicholls, D.F., A.R. Pagan and R.D. Terrell (1975): “The estimation and use of models with moving average disturbance terms: a survey,” International Economic Review, 16, 112-134.

Pagan, A. (1985): “Time series behavior and dynamic specification,” Oxford Bulletin of Economics and Statistics, 47, 199-211.
Pankratz, A. (1983): Forecasting With Univariate Box-Jenkins Models, New York, Wiley.

Pantula, S.G., G. Gonzalez-Farias and W. Fuller (1994): “A comparison of unit-root test criteria,” Journal of Business and Economic Statistics, 12, 449-459.

Parzen, E. (1982): “ARARMA models for time series analysis and forecasting,” Journal of Forecasting, 1, 67-82.

Phillips, P.C.B. and P. Perron (1988): “Testing for a unit root in time series regression,” Biometrika, 75, 335-346.

Phillips, P.C.B. (1991): “To criticize the critics: an objective Bayesian analysis of stochastic trends,” Journal of Applied Econometrics, 6, 333-364.

Said, S.E. and D.A. Dickey (1984): “Testing for unit roots in autoregressive-moving average models of unknown order,” Biometrika, 71, 599-607.

Samuelson, P.A. (1939a): “Interactions between the multiplier analysis and the principle of acceleration,” Review of Economics and Statistics, 21, 75-78.

Samuelson, P.A. (1939b): “A synthesis of the principle of acceleration and the multiplier,” Journal of Political Economy, 47, 786-797.

Sargent, T. J. (1987): Macroeconomic Theory, 2nd ed., San Diego, Academic Press.

Schotman, P.C. and H.K. Van Dijk (1991): “On Bayesian routes to unit roots,” Journal of Applied Econometrics, 6, 387-401.

Schwarz, G. (1978): “Estimating the dimension of a model,” Annals of Statistics, 6, 461-464.

Shiller, R. J. (1973): “A distributed lag estimator derived from smoothness priors,” Econometrica, 41, 775-788.

Wold, H. (1938): A Study in the Analysis of Stationary Time Series, Uppsala, Almqvist and Wiksell.

Wrinch, D.H. and H. Jeffreys (1921): “On certain fundamental principles of scientific inquiry,” Philosophical Magazine Series 6, 42, 369-390.

Zellner, A. (1971): An Introduction to Bayesian Inference in Econometrics, New York, John Wiley & Sons. Reprinted by Wiley in 1996.

Zellner, A. (1996): “Bayesian method of moments/instrumental variables (BMOM): analysis of mean and regression models,” in J.C. Lee, A. Zellner and W.O. Johnson, eds., Modelling and Prediction Honoring Seymour Geisser, New York, Springer-Verlag, and in Zellner, A. (1997): Bayesian Analysis in Econometrics and Statistics: The Zellner View and Papers, Cheltenham, U.K., Edward Elgar Publishers, 291-304.

Zellner, A. (1997): “The Bayesian method of moments (BMOM): theory and applications,” in T. Fomby and R.C. Hill, eds., Advances in Econometrics, Vol 12, 85-105.

Zellner, A. and B. Chen (2001): “Bayesian modeling of economies and data requirements,” Macroeconomic Dynamics, 5, 673-700.

Zellner, A. and M. S. Geisel (1970): “Analysis of distributed lag models with applications to consumption function estimation,” Econometrica, 38, 865-888.

Zellner, A. and C. Hong (1989): “Forecasting international growth rates using Bayesian shrinkage and other procedures,” Journal of Econometrics, 40, 183-202.

Zellner, A., C. Hong and C.-K. Min (1991): “Forecasting turning points in international growth rates using Bayesian exponentially weighted autoregression, time-varying parameters and pooling techniques,” Journal of Econometrics, 49, 275-304.

Zellner, A., D.S. Huang and L.C. Chau (1965): “Further analysis of the short-run consumption function with emphasis on the role of liquid assets,” Econometrica, 33, 571-581.

Zellner, A. and J. Tobias (2001): “Further results on Bayesian method of moments analysis of the multiple regression model,” International Economic Review, 42, 121-132.

Zivot, E. (1994): “A Bayesian analysis of the unit root hypothesis within an unobserved components model,” Econometric Theory, 10, 552-578.