Uncertainty Quantification Techniques for Sensor Calibration

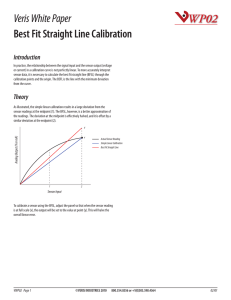
