§1.8: (20.) Let x = [ x1 x2 ] , v1 = [ −3 5 ] , and v2 = [ 7 −2 ] , and let T

advertisement

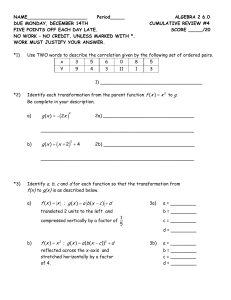

![§1.8: (20.) Let x = [ x1 x2 ] , v1 = [ −3 5 ] , and v2 = [ 7 −2 ] , and let T](http://s2.studylib.net/store/data/018432174_1-ef209ca2da9dd71b813260c507c0e36e-768x994.png)

§1.8:

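(20.) Let $\vec{x} = \begin{bmatrix} x_1 \\ x_2 \end{bmatrix}$, $\vec{v}_1 = \begin{bmatrix} -3 \\ 5 \end{bmatrix}$, and $\vec{v}_2 = \begin{bmatrix} 7 \\ -2 \end{bmatrix}$, and let $T : \mathbb{R}^2 \to \mathbb{R}^2$ be a linear transformation that maps $\vec{x}$ into $x_1\vec{v}_1 + x_2\vec{v}_2$. Find a matrix $A$ such that $T(\vec{x}) = A\vec{x}$ for each $\vec{x} \in \mathbb{R}^2$.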

SOL’N: Without using the theorem from the next section, we can see exactly what the matrix will be from what is given. We are given that $T$ is defined by the vector equation

$$T(\vec{x}) = x_1\vec{v}_1 + x_2\vec{v}_2 = x_1 \begin{bmatrix} -3 \\ 5 \end{bmatrix} + x_2 \begin{bmatrix} 7 \\ -2 \end{bmatrix}.$$

But we know that a vector equation like this can be rewritten as a matrix equation $A\vec{x}$ where the columns of $A$ are just $\vec{v}_1$ and $\vec{v}_2$, i.e.

$$A = [\vec{v}_1 \ \ \vec{v}_2] = \begin{bmatrix} -3 & 7 \\ 5 & -2 \end{bmatrix}.$$
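As a quick sanity check on problem 20, the following minimal sketch compares $x_1\vec{v}_1 + x_2\vec{v}_2$ with $A\vec{x}$ on a few sample vectors. The helper names `T` and `matvec` and the sample list are illustrative choices, not part of the original solution, and agreement on samples is a spot check rather than a proof.

```python
# Vectors from problem 20, stored as plain Python lists.
v1 = [-3, 5]
v2 = [7, -2]

def T(x):
    """T(x) = x1*v1 + x2*v2, as in the problem statement."""
    x1, x2 = x
    return [x1 * a + x2 * b for a, b in zip(v1, v2)]

# A has v1 and v2 as its columns, so row i of A is [v1[i], v2[i]].
A = [[v1[i], v2[i]] for i in range(2)]

def matvec(M, x):
    """Row-by-row matrix-vector product (hypothetical helper)."""
    return [sum(m * xi for m, xi in zip(row, x)) for row in M]

# Arbitrary sample inputs chosen for illustration.
samples = [[1, 0], [0, 1], [2, -3], [-4, 5]]
all_agree = all(T(x) == matvec(A, x) for x in samples)
print(A, all_agree)
```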

(24.) An affine transformation $T : \mathbb{R}^n \to \mathbb{R}^m$ has the form $T(\vec{x}) = A\vec{x} + \vec{b}$, with $A$ an $m \times n$ matrix and $\vec{b} \in \mathbb{R}^m$. Show that $T$ is not a linear transformation when $\vec{b} \neq \vec{0}$.

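SOL’N: Remember that $T$ being linear means that it satisfies two properties, namely $T(\vec{u} + \vec{v}) = T(\vec{u}) + T(\vec{v})$ and $T(c\vec{u}) = cT(\vec{u})$ for all $\vec{u}, \vec{v} \in \mathbb{R}^n$ and $c \in \mathbb{R}$. So to show that an affine transformation is not linear, we just have to show that one of these properties does not hold.

So let $T : \mathbb{R}^n \to \mathbb{R}^m$ be defined by $T(\vec{x}) = A\vec{x} + \vec{b}$ with $\vec{b} \neq \vec{0}$. Then by the properties of the product of a matrix and a vector we have

$$T(c\vec{u}) = A(c\vec{u}) + \vec{b} = cA\vec{u} + \vec{b},$$

whereas

$$cT(\vec{u}) = c(A\vec{u} + \vec{b}) = cA\vec{u} + c\vec{b}.$$

The two expressions differ by $(1 - c)\vec{b}$, so $T(c\vec{u}) \neq cT(\vec{u})$ whenever $\vec{b} \neq \vec{0}$ and $c \neq 1$. For example, taking $c = 0$ gives $T(\vec{0}) = \vec{b} \neq \vec{0} = 0 \cdot T(\vec{u})$, so $T$ is not linear.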
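A concrete instance can make the failure of the scaling property visible. The sketch below uses an arbitrary $2 \times 2$ matrix and a nonzero $\vec{b}$ chosen purely for illustration (neither comes from the problem), and compares $T(c\vec{u})$ with $cT(\vec{u})$ for $c = 2$.

```python
# Illustrative values only: any matrix A and any nonzero b would do.
A = [[1, 2], [3, 4]]
b = [1, -1]

def T(x):
    """Affine map T(x) = A x + b."""
    return [sum(a * xi for a, xi in zip(row, x)) + bi for row, bi in zip(A, b)]

def scale(c, v):
    """Scalar multiple c * v."""
    return [c * vi for vi in v]

u = [1, 1]
c = 2
lhs = T(scale(c, u))   # T(cu) = cAu + b
rhs = scale(c, T(u))   # cT(u) = cAu + cb
print(lhs, rhs, T([0, 0]))
```

The two results differ by $(1 - c)\vec{b}$, and $T(\vec{0}) = \vec{b}$ is nonzero, which a linear map could never produce.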

(30.) Suppose vectors $\vec{v}_1, \ldots, \vec{v}_p$ span $\mathbb{R}^n$, and let $T : \mathbb{R}^n \to \mathbb{R}^m$ be a linear transformation. Suppose $T(\vec{v}_i) = \vec{0}$ for $i = 1, \ldots, p$. Show that $T$ is the zero transformation. That is, show that if $\vec{x}$ is any vector in $\mathbb{R}^n$, then $T(\vec{x}) = \vec{0}$.

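SOL’N: Again we are going to use the properties of linearity for this problem. Let $\vec{x} \in \mathbb{R}^n$. Since the vectors $\vec{v}_1, \ldots, \vec{v}_p$ span $\mathbb{R}^n$, we know that there exist weights $c_1, \ldots, c_p \in \mathbb{R}$ such that

$$\vec{x} = c_1\vec{v}_1 + \cdots + c_p\vec{v}_p.$$

Therefore, by the linearity of the transformation $T$ we have

$$\begin{aligned}
T(\vec{x}) &= T(c_1\vec{v}_1 + \cdots + c_p\vec{v}_p) \\
&= T(c_1\vec{v}_1) + \cdots + T(c_p\vec{v}_p) \\
&= c_1 T(\vec{v}_1) + \cdots + c_p T(\vec{v}_p) \\
&= c_1\vec{0} + \cdots + c_p\vec{0} \\
&= \vec{0},
\end{aligned}$$

where the fourth equality holds by assumption.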

§1.9:

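(26.) Let $T : \mathbb{R}^3 \to \mathbb{R}^2$ be defined so that $T(\vec{e}_1) = \begin{bmatrix} 1 \\ 4 \end{bmatrix}$, $T(\vec{e}_2) = \begin{bmatrix} -2 \\ 9 \end{bmatrix}$, and $T(\vec{e}_3) = \begin{bmatrix} 3 \\ -8 \end{bmatrix}$. Determine if the transformation is (a) one-to-one and (b) onto. Justify your answer.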

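SOL’N: By Thm 10 from §1.9 we know that this transformation is a matrix transformation with standard matrix

$$A = \begin{bmatrix} 1 & -2 & 3 \\ 4 & 9 & -8 \end{bmatrix}.$$

Straight away, we know that we should turn this matrix into reduced echelon form (you should check my arithmetic here).

$$\begin{bmatrix} 1 & -2 & 3 \\ 4 & 9 & -8 \end{bmatrix} \sim \cdots \sim \begin{bmatrix} 1 & 0 & \frac{11}{17} \\ 0 & 1 & -\frac{20}{17} \end{bmatrix}$$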
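One way to check the arithmetic in the row reduction is to repeat it with exact rational numbers. The sketch below is a generic Gauss-Jordan routine written for this check (the function name `rref` is an illustrative choice, and it is not the book's algorithm verbatim); it uses Python's standard `fractions.Fraction` so that entries such as 11/17 are not rounded.

```python
from fractions import Fraction

def rref(matrix):
    """Return (reduced echelon form, pivot column indices) using exact fractions."""
    M = [[Fraction(entry) for entry in row] for row in matrix]
    rows, cols = len(M), len(M[0])
    pivot_cols = []
    r = 0  # index of the row that will receive the next pivot
    for c in range(cols):
        if r == rows:
            break
        # Look for a row at or below r with a nonzero entry in column c.
        pivot = next((i for i in range(r, rows) if M[i][c] != 0), None)
        if pivot is None:
            continue  # no pivot in this column
        M[r], M[pivot] = M[pivot], M[r]
        pivot_value = M[r][c]
        M[r] = [entry / pivot_value for entry in M[r]]  # scale the pivot to 1
        # Clear every other entry in the pivot column.
        for i in range(rows):
            if i != r and M[i][c] != 0:
                factor = M[i][c]
                M[i] = [a - factor * b for a, b in zip(M[i], M[r])]
        pivot_cols.append(c)
        r += 1
    return M, pivot_cols

A = [[1, -2, 3], [4, 9, -8]]
R, pivots = rref(A)
print(R, pivots)
```

For this matrix the routine finds pivots in the first two columns (indices 0 and 1) and the entries 11/17 and -20/17 in the third column.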

We also have from Thm 11 from this section that $T$ is one-to-one if and only if the equation $T(\vec{x}) = A\vec{x} = \vec{0}$ has only the trivial solution. This happens if and only if there are no free variables. We see from the reduced echelon form of $A$ that the third column has no pivot, so $x_3$ is a free variable and $T$ is NOT one-to-one.

As for whether $T$ maps onto $\mathbb{R}^2$, this happens if and only if the columns of $A$ span $\mathbb{R}^2$, which in turn happens if and only if there is a pivot in every row. Here there is a pivot in every row, so the linear transformation $T$ must be onto.

(32.) Let $T : \mathbb{R}^n \to \mathbb{R}^m$ be a linear transformation, with $A$ its standard matrix. Complete the following statement to make it true: “$T$ maps $\mathbb{R}^n$ onto $\mathbb{R}^m$ if and only if $A$ has ____ pivot columns.” Find some theorems that explain why the statement is true.

SOL’N: We actually talked about this one in class because I forgot that I assigned it for your homework. The statement becomes true when we fill in the blank with $m$. This is because, again, $T$ is onto if and only if the columns of $A$ span $\mathbb{R}^m$, which happens if and only if there is a pivot in every row. As there are $m$ rows and each pivot sits in its own column, there must be exactly $m$ pivot columns for the statement to be true.
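The pivot-counting criterion can be sketched in code: count the pivots by forward elimination, then compare the count with the number of rows $m$ (onto) or the number of columns $n$ (one-to-one). The function names and the second test matrix `B` are illustrative assumptions, not taken from the homework.

```python
from fractions import Fraction

def pivot_count(matrix):
    """Count pivot positions by forward elimination with exact fractions."""
    M = [[Fraction(e) for e in row] for row in matrix]
    rows, cols = len(M), len(M[0])
    r = 0  # number of pivots found so far
    for c in range(cols):
        if r == rows:
            break
        pivot = next((i for i in range(r, rows) if M[i][c] != 0), None)
        if pivot is None:
            continue  # column c has no pivot
        M[r], M[pivot] = M[pivot], M[r]
        # Zero out the entries below the pivot.
        for i in range(r + 1, rows):
            factor = M[i][c] / M[r][c]
            M[i] = [a - factor * b for a, b in zip(M[i], M[r])]
        r += 1
    return r

def is_onto(matrix):
    """Onto R^m exactly when there are m pivots (one in every row)."""
    return pivot_count(matrix) == len(matrix)

def is_one_to_one(matrix):
    """One-to-one exactly when there are n pivots (one in every column)."""
    return pivot_count(matrix) == len(matrix[0])

A = [[1, -2, 3], [4, 9, -8]]       # standard matrix from problem 26
B = [[1, 2], [2, 4], [0, 1]]       # hypothetical 3x2 example
print(is_onto(A), is_one_to_one(A), is_onto(B), is_one_to_one(B))
```

A $2 \times 3$ matrix can have at most two pivots, so it may be onto but never one-to-one; the $3 \times 2$ example shows the reverse situation.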

(34.) Let $S : \mathbb{R}^p \to \mathbb{R}^n$ and $T : \mathbb{R}^n \to \mathbb{R}^m$ be linear transformations. Show that the mapping $\vec{x} \mapsto T(S(\vec{x}))$ is a linear transformation (from $\mathbb{R}^p$ to $\mathbb{R}^m$).

SOL’N: From the yellow-ish box on pg. 66 in the book and the discussion thereafter, we know that a transformation $T$ is linear if and only if

$$T(c\vec{u} + d\vec{v}) = cT(\vec{u}) + dT(\vec{v}) \quad \text{for all vectors } \vec{u}, \vec{v} \text{ and scalars } c, d.$$

So let $\vec{u}, \vec{v} \in \mathbb{R}^p$ and $c, d \in \mathbb{R}$. Then

$$T(S(\underbrace{c\vec{u} + d\vec{v}}_{\in \mathbb{R}^p})) = T(\underbrace{cS(\vec{u}) + dS(\vec{v})}_{\in \mathbb{R}^n})$$

by the linearity of $S$. Note at this point that both $cS(\vec{u})$ and $dS(\vec{v})$ are vectors living in $\mathbb{R}^n$, so it makes sense to evaluate $T$, whose domain is $\mathbb{R}^n$, at their sum. Furthermore, because we also know $T$ is linear we get

$$T(cS(\vec{u}) + dS(\vec{v})) = \underbrace{cT(S(\vec{u})) + dT(S(\vec{v}))}_{\in \mathbb{R}^m},$$

which is exactly what we needed to show that the composition $T \circ S$ is linear.
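The identity just proved can be spot-checked numerically. In the sketch below, $S$ and $T$ are given by two small matrices made up for illustration (with $p = 2$, $n = 3$, $m = 2$); the check compares $T(S(c\vec{u} + d\vec{v}))$ with $cT(S(\vec{u})) + dT(S(\vec{v}))$ for one choice of vectors and scalars. This illustrates the statement and does not replace the proof.

```python
def matvec(M, x):
    """Row-by-row matrix-vector product."""
    return [sum(m * xi for m, xi in zip(row, x)) for row in M]

# Hypothetical matrices: S maps R^2 to R^3, T maps R^3 to R^2.
S_matrix = [[1, 0], [2, -1], [0, 3]]
T_matrix = [[1, 1, 0], [0, 2, -1]]

def S(x):
    return matvec(S_matrix, x)

def T(y):
    return matvec(T_matrix, y)

def compose(x):
    """The mapping x -> T(S(x))."""
    return T(S(x))

def combo(c, u, d, v):
    """Linear combination c*u + d*v."""
    return [c * ui + d * vi for ui, vi in zip(u, v)]

u, v, c, d = [1, 2], [-3, 4], 5, -2
lhs = compose(combo(c, u, d, v))            # T(S(cu + dv))
rhs = combo(c, compose(u), d, compose(v))   # cT(S(u)) + dT(S(v))
print(lhs, rhs)
```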