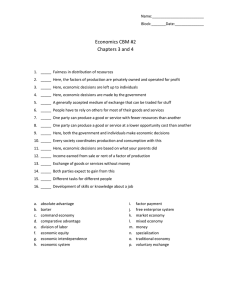

Using CBM for Progress Monitoring
