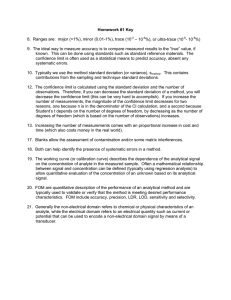

AOAC Guidelines for Single Laboratory
