EECS 491: Artificial Intelligence - Fall 2013

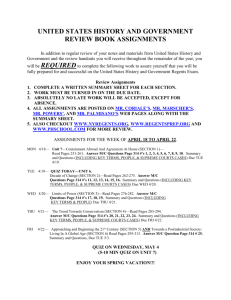


Instructor
Dr. Michael Lewicki
Associate Professor, Electrical Engineering and Computer Science Dept.
Case Western Reserve University
email: michael.lewicki@case.edu
Office: Olin 508
Office Hours: Mon/Fri 9:30-10:30 or by appointment

Class meeting times
Tuesdays and Thursdays, 4:15-5:30 PM, in Sears 356

Web page
The course has a Blackboard site (https://blackboard.case.edu). Check there periodically for the latest announcements, homework assignments, lecture slides, handouts, etc.

Course Description
This course is a graduate-level introduction to Artificial Intelligence (AI), the discipline of designing intelligent systems. It focuses on the fundamental theories, algorithms, and techniques required to design adaptive, intelligent systems and devices that make optimal use of available information and time. The course covers probabilistic modeling, inference, and learning in both discrete and continuous problem spaces. Practical applications are covered throughout the course.

Textbooks
Our main textbook for the course is:
• Bayesian Reasoning and Machine Learning by Barber, Cambridge, 2012.
A PDF ebook is available online: http://www.cs.ucl.ac.uk/staff/d.barber/brml/.
I also recommend these books:
• Machine Learning: A Probabilistic Perspective by Murphy, MIT Press, 2012.
• Pattern Recognition and Machine Learning by Bishop, Springer, 2006.
The schedule topics refer primarily to Barber and Murphy because I think it's highly instructive to have two points of view. The course will more closely follow the organization of Barber.

Prerequisites
It is helpful but not strictly necessary to be familiar with basic concepts in Artificial Intelligence and Machine Learning (e.g. EECS 391). The assignments will have a programming component that involves implementing and using algorithms, almost always in Matlab, so I also recommend that you have taken a basic course in algorithms and data structures (e.g. EECS 233).
The mathematical basis of this course is probability theory, so I also recommend an introductory course on statistics and probability theory (e.g. STAT 312 or 325). Both probability theory and the topics in this course draw heavily on univariate and multivariate calculus (e.g. MATH 121, 122, and 223) and linear algebra (e.g. MATH 201). Having all these courses would make you very well prepared for this course. It is certainly possible to do well without all the recommended background, but be prepared to spend more time in areas where your background is less solid. Come see me if you have questions.

Requirements and Grading
Students are required to attend lectures and to read the assigned material in the textbooks prior to class, and are expected to master all the material covered in class. Classes missed for reasons other than medical conditions cannot be made up. The course will include six combined theory and programming assignments, a course project, and a presentation. There are no exams. The number of assignments might be adjusted depending on the flow of the course. Project presentations will be given during the final exam period (see schedule). Grades will be weighted as follows:
• Assignments: 75% total, 12.5% each
• Project report: 15%
• Project presentation: 10%

Assignments
Assignments are the primary means of learning the mathematical material presented in class and will be coordinated with the lectures. Some of the advanced methods discussed in class are too complex to cover practically in a homework. If you would like to study a particular topic in greater detail, it would be well worth considering designing a class project around that topic. Some assignments will depend on material completed in earlier assignments, so complete each assignment in full and stay current. The programming assignments will be in Matlab. It is possible to use other languages, e.g. numerical Python, but it is usually more work, and you will not be able to take advantage of the Matlab code that will be provided with some of the assignments.

Each assignment must be turned in as a single PDF file. This makes grading far easier and avoids the formatting and version problems that often arise with MS Word files. The best way is to use LaTeX (for equations) and include code and figures as needed. Once you know how to do it, LaTeX is faster and far more flexible than a word processor: if you need to update a figure, you can simply regenerate the PDF. Equations are also faster to specify and yield much more professional formatting. Students in previous years have also used the Matlab report generator, but you must then join the resulting PDF files into a single PDF for the assignment.

Assignment Drafts
In this class, assignments have two due dates: draft and final. On the draft due date, you hand in a draft of the assignment, and I provide quick feedback that you can use to prepare the final version. Drafts must be complete; you will not receive credit for problems that are unfinished in the draft. If you have questions about specific problems, see me before the draft due date. The final version of the assignment is due on the final due date. I've found that this arrangement greatly facilitates the learning experience and avoids losing credit to misunderstandings.

Class Projects
The class project is a project of your own design. I will send out a request for project proposals in early October and will arrange meetings with you at that time. You are required to submit a project proposal, turn in a project report, and give a presentation during the final exam period. Presentation time will be allotted evenly, but expect to have about 20-30 minutes.
Collaborations on joint projects are acceptable, but each person must make a unique contribution to the project, and each person must write up a report and give a presentation that describes their contribution. Collaborations must be approved in advance and have a clear plan for the role of each student.

Collaboration and Cheating
Collaboration is encouraged, but students must turn in their own assignments. Any problem completed collaboratively should contain a statement that the students contributed equally toward its completion. Students are expected to understand all the material presented in the assignment. I reuse some problems from previous years because they are good problems and have been refined and improved over many years. Referring to previous years' assignments is cheating. It takes a lot of time and effort to develop good homework assignments, and we want you and future students to be able to continue to use them. We also welcome feedback for improvement. It is your responsibility to help protect the educational value of these assignments. Violations in any of the areas above will be handled in accordance with the University Policy on Cheating and Plagiarism.
Class Schedule (subject to revision)

In readings, B.x.y refers to Barber chapter x, section y; M.x.y refers to Murphy.

| # | Date | Topics | Readings |
|---|------|--------|----------|
| 1 | Tue, Aug 27 | Introduction and Overview - course topics overview, applications, examples of probabilistic modeling and inference | chapter introductions in Barber, M.1 |
| 2 | Thu, Aug 29 | Probabilistic Reasoning - basic probability review, reasoning with Bayes' rule | B.1.1-2, M.2.1-2 |
| 3 | Tue, Sep 3 | Reasoning with Continuous Variables - probability distribution functions, prior, likelihood, and posterior, model-based inference | B.1.3, background B.8, B.9.1, M.2.1-4 |
| 4 | Thu, Sep 5 | Belief Networks - representing probabilistic relations with graphs, basic graph concepts, independence relationships, examples and limitations of BNs | B.2, B.3, M.10.1-2 |
| 5 | Tue, Sep 10 | Graphical Models - Markov networks, Ising model and Hopfield nets, Boltzmann machines, chain graphs, factor graphs, expressiveness of graphical models | B.4, M.19.1-4 |
| 6 | Thu, Sep 12 | Inference in Belief Nets - variable elimination, sampling methods, Markov Chain Monte Carlo (MCMC), Gibbs sampling | B.5.1.1, M.10.3, M.20.3, B.27.1-3 |
| 7 | Tue, Sep 17 | Inference in Graphical Models 1 - message passing | B.5.1-2, M.20, Bishop Ch. 8 |
| 8 | Thu, Sep 19 | Inference in Graphical Models 2 - sum-product algorithm, belief propagation | B.5.1-2, M.20, Bishop Ch. 8 |
| 9 | Tue, Sep 24 | Learning in Probabilistic Models - representing data, statistics for learning, common probability distributions, learning distributions, maximum likelihood | B.8.1-3, 6-7, M.3.1 |
| 10 | Thu, Sep 26 | Learning as Inference - probabilistic models as belief networks, continuous parameters, training belief networks | B.9.1-4 |
| 11 | Tue, Oct 1 | Generative Models for Data - Bayesian concept learning, Dirichlet-multinomial model, bag of words model | M.3.1-4 |
| 12 | Thu, Oct 3 | Naïve Bayes Classifiers - MLE for NBC, text classification, Bayesian NB, tree-augmented NB | B.10, M.3.5 |
| 13 | Tue, Oct 8 | Learning with Hidden Variables - hierarchical models, missing data, expectation maximization (EM), EM for belief nets, variational Bayes, gradient methods, deep belief nets | B.11.1-2, M.10.4 |
| 14 | Thu, Oct 10 | Gaussian Models - univariate and multivariate Gaussian (Normal) distributions, linear Gaussian systems, MLE for MVN | B.8.4, M.4.1 |
| 15 | Tue, Oct 15 | Principal Component Analysis - dimensionality reduction, optimal linear reconstruction, whitening | B.15.1-3, M.12.2 |
| 16 | Thu, Oct 17 | Discrete Latent Variable Models 1 - latent semantic analysis, latent topics, information retrieval, bag of words | B.15.4, M.27.1-2 |
| | Tue, Oct 22 | Fall break - no class | |
| 17 | Thu, Oct 24 | Discrete Latent Variable Models 2 - probabilistic LSA, latent Dirichlet allocation (LDA), non-negative matrix factorization, kernel PCA, topic models | B.15.6, M.27.3-4 |
| 18 | Tue, Oct 29 | Gaussian Mixture Models - clustering, nearest neighbor classification, k-means, latent variable models, mixture models, EM for MMs | B.14, M.11 |
| 19 | Thu, Oct 31 | Bayesian Model Selection - Occam's razor, Bayesian complexity penalization, Laplace approximation, Bayes information criterion, Bayes factors | B.12.1-5, M.5.3, M.11.5 |
| 20 | Tue, Nov 5 | Latent Linear Models - factor analysis (FA), probabilistic PCA, MLE and EM for FA, canonical correlation analysis (CCA) | B.21.1-5, M.12 |
| 21 | Thu, Nov 7 | Sparse Linear Models - sparse representation, independent component analysis (ICA) and blind source separation (BSS), sparse coding, compressed sensing | B.21.6, M.13 |
| 22 | Tue, Nov 12 | Non-Linear Dimensionality Reduction - ISOMAP, LLE, deep belief networks | handouts |
| 23 | Thu, Nov 14 | Dynamical Models - discrete state Markov models, transition matrix, language modeling, MLE, mixture of Markov models, applications: Google PageRank algorithm, gene clustering | B.23.1, M.17.1-2 |
| 24 | Tue, Nov 19 | Hidden Markov Models (HMMs) - classical inference problems, forward algorithm, forward-backward algorithm, Viterbi algorithm, sampling, natural language models | B.23.2, M.17.3-4 |
| 25 | Thu, Nov 21 | Learning HMMs - EM for HMMs (Baum-Welch algorithm), GMM emission model, discriminative training, related models and generalizations, dynamic Bayes nets, applications: object tracking, speech recognition, bioinformatics, part-of-speech tagging | B.23.3-5, M.17.5-6 |
| 26 | Tue, Nov 26 | TBD | |
| | Thu, Nov 28 | Thanksgiving break - no class | |
| 27 | Tue, Dec 3 | Continuous-state Markov Models 1 - linear dynamical systems (LDS), stationary distributions, autoregressive models, latent linear dynamical systems, Kalman filtering, robotic SLAM | B.24.1-3, M.18.1-3 |
| 28 | Thu, Dec 5 | Continuous-state Markov Models 2 - inference in CSMMs, filtering, smoothing, trajectory analysis, learning LDS, EM for LDS, approximate online inference | B.24.4-7, M.18.4-5 |
| | Wed, Dec 11 | Student Project Presentations - during final exam period: 12:30-3:30, room TBA | |

Each of the six assignments (A1-A6) has an out date, a draft due date, and a final due date. A1 goes out on Thu, Aug 29.