Coherence: Credence I

Branden Fitelson*

Department of Philosophy, Rutgers University
&
Munich Center for Mathematical Philosophy, Ludwig-Maximilians-Universität München

branden@fitelson.org
http://fitelson.org/

* These seminar notes include joint work with Daniel Berntson (Princeton), Rachael Briggs (ANU), Fabrizio Cariani (NU), Kenny Easwaran (USC), and David McCarthy (HKU). Please do not cite or quote without permission.

Re-cap & Plan
Ramsey & Hájek
Step 1
Steps 2 & 3
Accuracy v. Evidence
Refs

Re-cap & Plan

We’ve completed our discussion of coherence requirements for full belief. [The notes on that should now be complete and correct.] Time for numerical confidence (i.e., credence).

As always, the application of our framework will involve our three steps. In the case of sets of credences b, this means:

Step 1: Define “the vindicated credal set at w” (b̊w). There will be greater controversy about b̊w than B̊w.

Step 2: Define “the distance between b and b̊w” [δ(b, b̊w)]. Much of the extant literature involves this (choice of δ) step.

Step 3: Choose a fundamental principle that uses δ(b, b̊w) to ground a coherence requirement for credal sets b. This is philosophically fundamental & merits more scrutiny.

Before diving into the three steps for credal CR’s (and how I think they should be handled), I want to give a bit of historical/philosophical background on credence vs belief.

Coherence, Lecture #6: Credence I

Ramsey & Hájek

Consider the following analogy:

$$\text{(A)}\qquad \frac{p \text{ is true}}{B(p)} \;::\; \frac{??}{b(p) = r}$$

The “??” asks whether there is a (local) accuracy requirement for credence that is akin to the truth norm (TB).

Ramsey [47] rejected some versions of A. Specifically, he rejected Keynes’s suggestion that “??” could be filled-in with “the a priori/logical probability of p equals r”.

Ramsey thought that “a priori/logical probabilities” (in the sense of Keynes, Carnap, and others) do not exist.

Others have endorsed different renditions of A. For instance, Hájek [19] recommends that the “??” can be filled-in with “the objective chance of p equals r.”

Note: if “??” gets filled-in with a probability function (of any kind), then A trivially yields probabilism. This is analogous to the trivial entailment of B-consistency via (TB).

One might have Ramsey-style worries about Hájek’s proposal. That is, one might worry that chances are not probabilities [23] or that they do not exist for all p [37].

There are independent (and deeper) epistemic problems with his proposal. Hájek himself discusses this example:

“the coin that I am about to toss is either two-headed or two-tailed, but you do not know which. What is the probability that it lands heads? . . . reasonably, you assign a probability of 1/2, even though you know that the chance of heads is either 1 or 0. So it is rational to assign a credence that you know does not match the . . . chance.”

This is disanalogous to rational belief, since it is never rational to believe something that you know is not true.

So, this seems to be a counterexample to Hájek’s proposal for a “truth norm” analogy between full belief and credence.

At this point, you may think that the prospects for filling-in A are rather dim. But, remember that the role of such (narrow, alethic) norms for us is merely to fix the ideal state.

Step 1

In the full belief setting, the background assumption was something to the effect that “belief aims at truth” [53].

In the case of B, we used the truth norm (TB) to guide our definition of the perfectly accurate or vindicated set B̊w. The analogous (local) alethic norm for b would seem to be:

(Tb) S ought to be certain that p (¬p) iff p is true (false).

If the slogan for (TB) was “belief aims at truth”, then I suppose the slogan for (Tb) should be something like “credence aims at certainty of truth.” Is that plausible?

Maybe not for actual agents. But, for us, norms such as (TB)/(Tb) are used only to characterize the ideal state.

In this sense, (Tb) is much more plausible. I think there is a more fundamental, comparative idea that underlies (Tb):

(T) Ideally, one should be strictly more confident in truths than falsehoods (i.e., if p is true and q is false, then p ≻ q).

This will sound implausible if it is interpreted as a norm that actual agents are required to follow. But, so does (TB).

I will return to (T) when we look at CR’s for comparative confidence. I suspect that (Tb) is a generalization of (T).

Suppose agents are opinionated and they assign credence on a [0, 1] scale, with b(p) = 0 corresponding to certainty that p is false, and b(p) = 1 being certainty that p is true.

Given this setup, if we accept (T), then it seems natural to take the ideal/perfect/vindicated set b̊w to be the one that includes b(p) = 1 [b(p) = 0] just in case p is true [false].

After all, why would one stop short of including only extremal credences in b̊w? Strictly speaking, it would be compatible with (T) to have non-extremal credences in b̊w.

But, why would one do that? Would there be some threshold t < 1 such that b̊w should contain b(p) = s iff s ≥ t and p is true at w? If so, why wouldn’t making t closer to 1 always make b̊w a more apt quantitative precisification of (T)?

I’ll come back to this later (with ≻). Moving on with b. . .

Step 1: define “the vindicated credal set at w” (b̊w). Joyce [25] presupposes the following definition of b̊w:

$$\mathring{b}_w \;\stackrel{\text{def}}{=}\; \{\, b(p) = r \mid \text{either } b(p) = 1 \text{ and } p \text{ is true at } w, \text{ or } b(p) = 0 \text{ and } p \text{ is false at } w \,\}$$

Let vw(·) be the 0/1-truth-value-assignment associated with w. That is, vw(p) = 1 iff p is T at w and vw(p) = 0 iff p is F at w. A simpler way to state Joyce’s definition of b̊w is:

$$\mathring{b}_w \;\stackrel{\text{def}}{=}\; \{\, b(p) = r \mid v_w(p) = r \,\}$$

So, on the Joycean approach, b̊w is the set of extremal credence assignments corresponding to the 0/1-truth-value assignments associated with world w. This suggests:

$$\frac{p \text{ is true}}{B(p)} \;::\; \frac{v_w(p) = r}{b(p) = r}$$

Steps 2 & 3

Step 2: define “the distance from b to b̊w” [δ(b, b̊w)].

As in the case of full belief, this second step is fraught with potential danger/objections. Many δ’s are possible here.

Moreover, unlike the case of full belief, there is strong disagreement here, even between naïve candidate δ’s.

The norms we end-up with (assuming analogous choices of fundamental principles in Step 3, below) will depend sensitively on which distance measure δ is chosen.

Let’s start by thinking about what sorts of mathematical representations of b’s are most natural. [In the case of opinionated B, binary vectors were the natural choice.]

In this case, it is natural to represent b’s as vectors in Rⁿ, where n is the number of propositions in the underlying B.

So, the natural things to consider are measures of distance between vectors in Rⁿ. For a nice survey, see: [11, Ch. 5].
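The vector representation just described can be sketched in a few lines of Python. This is only an illustrative sketch: the helper names (`worlds`, `vindicated`) and the encoding of a proposition as a (label, truth-condition) pair are assumptions made here, not part of the notes. It builds, for each world w of the toy agenda {P, ¬P}, the vector of values vw(p), which is the vindicated credal set b̊w on the Joycean definition.

```python
from itertools import product

def worlds(atoms):
    """Yield every truth-value assignment (world) over the given atomic sentences."""
    for values in product([True, False], repeat=len(atoms)):
        yield dict(zip(atoms, values))

def vindicated(agenda, world):
    """Return the vindicated credal set at `world` as a vector:
    entry i is v_w(p_i), i.e. 1 if p_i is true at the world and 0 otherwise."""
    return [1 if holds(world) else 0 for _, holds in agenda]

# Hypothetical encoding of the toy agenda {P, not-P}:
# each proposition is a (label, truth-condition) pair.
agenda = [
    ("P", lambda w: w["P"]),
    ("not-P", lambda w: not w["P"]),
]

# One vindicated vector per world: first the world where P is true, then where P is false.
vindicated_sets = [vindicated(agenda, w) for w in worlds(["P"])]
print(vindicated_sets)
```

On this encoding a credal set b over the same agenda is just another length-2 vector with entries in [0, 1], so any distance between vectors in Rⁿ can serve as a candidate δ.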

Re-cap & Plan

Ramsey & Hájek

Step 1

Steps 2 & 3

Accuracy v. Evidence

Refs

Re-cap & Plan

I’ll focus on two natural (lp –metric [11, Ch. 5]) choices for δ

p

sX b(p) − vw (p)2

δ2 (b, b̊w ) Ö

δ1 (the l1 –metric) is also called Manhattan distance, and δ2

(the l2 –metric) is, of course, the Euclidean distance.

And, it often seems clear to us — even in very simple,

non-paradoxical examples — that having extremal credences

would be unreasonable (viz., unsupported by our evidence).

h

i

(PV) (∃w) δ(b, b̊w ) = 0 . [This is the analogue of B-consistency.]

h

i

(SADA) b0 such that: (∀w) δ(b0 , b̊w ) < δ(b, b̊w ) .

It is for this reason that fundamental principles weaker

than (PV) are even more attractive in the credence case.

h

i

h

i

b0 s.t.: (∀w) δ(b0 , b̊w ) ≤ δ(b, b̊w ) & (∃w) δ(b0 , b̊w ) < δ(b, b̊w ) .

Ramsey & Hájek

Step 1

Steps 2 & 3

9

Accuracy v. Evidence

Refs

Branden Fitelson

Re-cap & Plan

Ramsey & Hájek

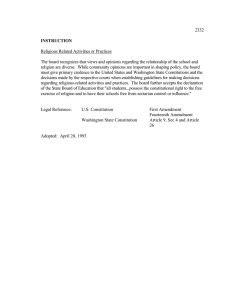

example — non-probabilistic b (red) vs probabilistic b (green).

0.8

0.6

0.6

0.4

0.4

0.2

0.2

0.0

Branden Fitelson

0.2

0.4

0.6

0.8

1.0

Accuracy v. Evidence

Refs

In R3 , the story about δ1 becomes even more interesting.

h0, 0, 21 i weakly (but not strictly) δ1 -dominates h 14 , 14 , 12 i.

+

3

h0, 0, 21 i strictly δ1 -dominates h 16

,

3 5

, i.

16 8

Therefore, in the general case, neither direction of de

Finetti’s theorem carries over from δ2 to δ1 !

Notice how there is a difference between weak and strict

δ1 -dominance. This is not so for δ2 . Generally, δ’s that make

de Finetti’s theorem true imply no such distinction [50].

0.0

0.0

Steps 2 & 3

It is still true, in R2 , that probabilistic credal sets will also be

non-dominated (even weakly), assuming measure δ1 . [This

is trivial in the R2 -case, since no R2 -vector from [0, 1] is

weakly δ1 -dominated by any other R2 -vector from [0, 1]!]

We can visualize its simplest instance, using our toy {P , ¬P }

0.8

Step 1

10

There are non-probabilistic credence sets b that are not even

weakly dominated in δ1 -distance from vindication. In our

toy example, suppose b contains b(P ) = b(¬P ) = 0. This

credal set b is not weakly δ1 -dominated by any R2 -vector.

Theorem (de Finetti). Assuming δ2 as our measure of

distance from vindication, b violates (SADA) [and/or

(WADA)] iff b is a non-probabilistic set of credences.

1.0

Coherence, Lecture #6: Credence I

The story changes drastically if we move from δ2 to δ1 .

(SADA) & (WADA) imply the same coherence requirement

for b, assuming δ2 . This was shown by de Finetti [6].

1.0

Refs

In the credence case, (PV) seems patently too strong. For it

would require one’s credences to be extremal.

Step 3: Choose a fundamental principle which uses δ(b, b̊w )

to ground a CR for b. We have the usual options here.

Re-cap & Plan

Accuracy v. Evidence

In such cases, it seems unreasonable to require consistency,

as this is in tension with the beliefs our evidence supports.

Interestingly, these two natural choices of δ will lead to

drastically different CR’s for b in our framework [39].

Coherence, Lecture #6: Credence I

Steps 2 & 3

In the full belief context, (PV) proves to be too strong. But,

this can only be seen clearly by considering “paradoxical”

cases involving large-ish, minimal-inconsistent belief sets.

p

Branden Fitelson

Step 1

Before looking at the CR’s generated by (SADA)/(WADA) for

δ1 and δ2 (which will include probabilism [7]), I will first

discuss (PV) in the contexts of full belief vs. credence.

X

b(p) − vw (p)

δ1 (b, b̊w ) Ö

(WADA)

Ramsey & Hájek

0.0

0.2

Coherence, Lecture #6: Credence I

0.4

0.6

0.8

1.0

11

Branden Fitelson

Coherence, Lecture #6: Credence I

12
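The dominance claims above lend themselves to a direct numerical check. The following Python sketch is an illustration rather than anything from the notes. It assumes that the worlds for the R² example are the two truth-value vectors for {P, ¬P} and that the worlds for the R³ example are the three cells of a partition, and the function names are hypothetical. Exact fractions are used for δ1 so that weak vs strict dominance is not blurred by rounding.

```python
from fractions import Fraction as F
from math import sqrt

def delta1(b, v):
    """Manhattan (l1) distance between a credence vector b and a truth-value vector v."""
    return sum(abs(x - y) for x, y in zip(b, v))

def delta2(b, v):
    """Euclidean (l2) distance between b and v."""
    return sqrt(sum((x - y) ** 2 for x, y in zip(b, v)))

def weakly_dominates(b_new, b, delta, worlds):
    """b_new is never farther from vindication than b, and is strictly closer at some world."""
    pairs = [(delta(b_new, v), delta(b, v)) for v in worlds]
    return all(n <= o for n, o in pairs) and any(n < o for n, o in pairs)

def strictly_dominates(b_new, b, delta, worlds):
    """b_new is strictly closer to vindication than b at every world."""
    return all(delta(b_new, v) < delta(b, v) for v in worlds)

# Assumed world sets: truth-value vectors for {P, not-P} and for a 3-cell partition.
W2 = [(1, 0), (0, 1)]
W3 = [(1, 0, 0), (0, 1, 0), (0, 0, 1)]

# R^2: on a grid, nothing weakly delta1-dominates the non-probabilistic b = <0, 0> ...
grid = [F(i, 20) for i in range(21)]
zero_is_undominated = not any(
    weakly_dominates((x, y), (0, 0), delta1, W2) for x in grid for y in grid
)
# ... although under delta2 the probabilistic <1/2, 1/2> strictly dominates it.
half_half_beats_zero = strictly_dominates((F(1, 2), F(1, 2)), (0, 0), delta2, W2)

# R^3: the two delta1 examples from the text.
b_new = (0, 0, F(1, 2))
weak_only = (
    weakly_dominates(b_new, (F(1, 4), F(1, 4), F(1, 2)), delta1, W3)
    and not strictly_dominates(b_new, (F(1, 4), F(1, 4), F(1, 2)), delta1, W3)
)
strict = strictly_dominates(b_new, (F(3, 16), F(3, 16), F(5, 8)), delta1, W3)

print(zero_is_undominated, half_half_beats_zero, weak_only, strict)
```

The grid search is of course not a proof. In R² the result is guaranteed because the δ1-distances of any vector to the two worlds always sum to 2, so no vector can be at least as close at both worlds and strictly closer at one.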

As far as I know, there is no characterization of which distance measures δ yield probabilism (via δ-dominance).

However, it is known that de Finetti’s theorem can be generalized to any proper measure of distance [46].

A distance measure δ is proper iff (it is continuous and): the following quantity (where δ̂ is δ’s point-wise distance function, e.g., δ̂1(a, b) = |a − b|, and δ̂2(a, b) = |a − b|²):

$$(\star)\qquad b \cdot \hat{\delta}(1, x) + (1 - b) \cdot \hat{\delta}(0, x)$$

has a unique minimum at x = b, for all b ∈ [0, 1].

It’s easy to check that δ2 is proper, but δ1 is improper.

Many have argued that propriety should be satisfied by distance measures (in this context). The main argument for this conclusion is based on what is called immodesty [35].

Suppose there is some b ∈ [0, 1] such that setting x = b does not minimize (⋆). This implies that the pair ⟨b, δ⟩ is modest. Here, modesty is supposed to be bad. But, why?

Accuracy v. Evidence

Let’s go back to our toy agent S. Suppose they have a credence function b, and they adopt δ to measure distance from vindication. Finally, suppose ⟨b, δ⟩ is modest.

Specifically, suppose that b(P) = b ∈ [0, 1] does not minimize (⋆). This means that S’s credences fail to minimize expected distance from vindication, by their own lights.

In other words, such an agent would be in the position that they would think there is another credence function b′ that has lower expected distance from vindication than their b.

This may seem like bad news for the agent. But, is it?

First, notice that there is no reason that a non-probabilistic agent should worry about whether their b minimizes (⋆).

After all, (⋆) assumes the standard, probabilistic definition of “expectation”. If our toy agent S is non-probabilistic, then b(¬p) ≠ 1 − b(p). Why should S care about minimizing (⋆)?

As such, modesty (per se) of the pair ⟨b, δ⟩ only seems bad for agents that already have probabilistic credences b.

Joyce [24, pp. 277–80] agrees. But, he maintains impropriety is nonetheless undesirable in a measure of distance from vindication, because of its implications for probabilistic S’s.

He claims that some probabilistic agents have the “correct” credences (in a given context). Example: P_i ≝ a fair, 3-sided die comes up “i”. And, S is such that: b = ⟨1/3, 1/3, 1/3⟩.

Joyce claims that such an S clearly has the “correct” credences. So, for this (probabilistic and “correct”) agent, considerations of immodesty would seem to be probative.

Moreover, if S were to use an improper measure (e.g., δ1), then S would think there is another credence b′ that has lower expected distance from vindication than their own.

Specifically, if S adopts δ1, then it turns out that the (“crazy”) credal set b′ = ⟨0, 0, 0⟩ minimizes (⋆).

There is something odd about Joyce’s argument here. First, he needs to presuppose a substantive epistemic notion of “correctness” of credence that goes beyond coherence.

Joyce has in mind a (narrow/local) evidential requirement: the Principal Principle [34], which (roughly) implies that S should apportion her credences to the known objective chance of p, if this is all the evidence S has regarding p.

We can imagine a context in which our agent above knows only that the die is fair (S has no other P_i-relevant evidence).

In such a case, having the credal set b = ⟨1/3, 1/3, 1/3⟩ seems to be among her (narrow/local) evidential requirements.

For her, there does seem to be something uncomfortable about adopting δ1. Her evidential requirements imply that she should have credences which, by her own lights, do not minimize expected distance from vindication.

In fact, there is even a conflict with (WADA)/(SADA) here.
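The earlier claim that δ2 is proper while δ1 is improper can be illustrated numerically. The sketch below is an assumption-laden illustration, not the notes' own method: it evaluates the expected-distance quantity b · δ̂(1, x) + (1 − b) · δ̂(0, x) on a grid of candidate values x (step 1/100, a choice made here) and reports where the minimum falls. The function names are hypothetical.

```python
from fractions import Fraction as F

def expected_distance(b, x, pointwise):
    """The expected-distance quantity: b * d(1, x) + (1 - b) * d(0, x)."""
    return b * pointwise(1, x) + (1 - b) * pointwise(0, x)

def d1(a, x):
    """Point-wise distance underlying delta1."""
    return abs(a - x)

def d2(a, x):
    """Point-wise distance underlying delta2."""
    return abs(a - x) ** 2

# Candidate credences x on an exact grid (the step 1/100 is an arbitrary choice).
GRID = [F(i, 100) for i in range(101)]

def minimizers(b, pointwise):
    """All grid points x at which the expected distance is minimal, for credence b."""
    values = [expected_distance(b, x, pointwise) for x in GRID]
    best = min(values)
    return [x for x, value in zip(GRID, values) if value == best]

b = F(3, 10)
print(minimizers(b, d2))          # squared distance: the minimum sits at x = b
print(minimizers(b, d1))          # absolute distance: the minimum sits at an extreme
print(len(minimizers(F(1, 2), d1)))  # at b = 1/2 every grid point ties
```

With δ̂1 the quantity is linear in x, namely b + x(1 − 2b), so it is minimized at x = 0 when b < 1/2, at x = 1 when b > 1/2, and everywhere when b = 1/2. That is why x = b is not the unique minimizer, which is what impropriety amounts to here.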

b′ = ⟨0, 0, 0⟩ not only minimizes the expected-distance quantity (⋆), it strictly δ1-dominates b = ⟨1/3, 1/3, 1/3⟩. Thus, S faces a conflict between an evidential requirement [(PP)] and the non-δ-dominance requirement [(SADA)]. Joyce thinks the evidential norm trumps here.
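Both claims about the fair three-sided die can be checked with a short Python sketch. The setup is an assumption made for illustration: the three worlds are the cells of the partition {P_1, P_2, P_3}, the expected δ1-distance of a candidate credal set weights world i by b(P_i), and candidates are searched on a grid of step 1/10. None of these names or choices come from the notes.

```python
from fractions import Fraction as F
from itertools import product

# Assumed worlds: exactly one of P_1, P_2, P_3 is true.
WORLDS = [(1, 0, 0), (0, 1, 0), (0, 0, 1)]
b = (F(1, 3), F(1, 3), F(1, 3))

def delta1(x, v):
    """Manhattan distance between credal set x and truth-value vector v."""
    return sum(abs(a - c) for a, c in zip(x, v))

def expected_delta1(x, credence):
    """Expected delta1-distance of x from vindication, weighting world i by credence[i].
    This presupposes that the credences are probabilistic over the partition."""
    return sum(p * delta1(x, v) for p, v in zip(credence, WORLDS))

# Search all candidate credal sets on a coarse grid for the expected-distance minimizer.
grid = [F(i, 10) for i in range(11)]
candidates = list(product(grid, repeat=3))
best = min(candidates, key=lambda x: expected_delta1(x, b))

# Does <0, 0, 0> beat b at every world?
zero = (0, 0, 0)
zero_strictly_dominates_b = all(delta1(zero, v) < delta1(b, v) for v in WORLDS)

print(best, expected_delta1(best, b), expected_delta1(b, b))
print(zero_strictly_dominates_b)
```

Under these assumptions the expected δ1-distance of a candidate x works out to 1 + (x_1 + x_2 + x_3)/3, so it is smallest when all three entries are 0, and the δ1-distance of ⟨0, 0, 0⟩ is 1 at every world compared with 4/3 for b.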

We’re inclined to agree. But, we [10] think this sets Joyce up for an “evidentialist” objection. Now, Joyce needs to argue for the following asymmetric normative regularity:

(†) If S adopts a proper measure (e.g., δ2), then S’s (local) evidential requirements cannot conflict with S’s (global) non-δ-dominance requirements. [But, this can happen if S adopts an improper measure (e.g., δ1), as in the case above.]

To see why Joyce needs an argument for (†), consider a toy incoherent agent S, such that: b(P) = 0.2 and b(¬P) = 0.7.

Suppose S adopts δ2. So, S is (strictly) δ-dominated by each member b′ of a set of (probabilistic) credence functions. [Note that no member b′ of that set can be such that b′(P) ≤ 0.2.]

Now, what if S’s evidence requires (exactly) that b(P) ≤ 0.2?

Refs

[1] F. Baulieu, A classification of presence/absence based dissimilarity coefficients, Journal of Classification, 1989.

[2] L. Bonjour, The Coherence Theory of Empirical Knowledge, Phil. Studies, 1975.

[3] R. Carnap, Logical Foundations of Probability, U. of Chicago, 2nd ed., 1962.

[4] D. Christensen, Putting Logic in its Place, OUP, 2007.

[5] S. Cohen, Justification and Truth, Philosophical Studies, 1984.

[6] B. de Finetti, Foresight: Its Logical Laws, Its Subjective Sources, in H. Kyburg and H. Smokler (eds.), Studies in Subjective Probability, Wiley, 1964.

[7] B. de Finetti, The Theory of Probability, Wiley, 1974.

[8] I. Douven and T. Williamson, Generalizing the Lottery Paradox, BJPS, 2006.

[9] K. Easwaran, Dr. Truthlove or: How I Learned to Stop Worrying and Love Bayesian Probability, manuscript, 2012.

[10] K. Easwaran and B. Fitelson, An “Evidentialist” Worry about Joyce’s Argument for Probabilism, Dialectica, to appear, 2012.

[11] M. Deza and E. Deza, Encyclopedia of Distances, Springer, 2009.

[12] T. Fine, Theories of Probability, Academic Press, 1973.

[13] P. Fishburn, Utility Theory for Decision Making, 1970.

[14] P. Fishburn, The Axioms of Subjective Probability, Statistical Science, 1986.

[15] B. Fitelson, A Decision Procedure for Probability Calculus with Applications, Review of Symbolic Logic, 2008.

[16] M. Forster and E. Sober, How to Tell when Simpler, More Unified, or Less Ad Hoc Theories will Provide More Accurate Predictions, BJPS, 1994.

[17] R. Fumerton, Metaepistemology and Skepticism, Rowman & Littlefield, 1995.

[18] R. Grandy and D. Osherson, Sentential Logic for Psychologists, free online textbook, 2010, http://www.princeton.edu/~osherson/primer.pdf.

[19] A. Hájek, Arguments for - or Against - Probabilism?, BJPS, 2008.

[20] R. Hamming, Error detecting and error correcting codes, Bell System Technical Journal, 1950.

[21] J. Hawthorne, The Lockean Thesis and the Logic of Belief, in Degrees of Belief, Synthese Library, 2009.

[22] C. Hempel, Deductive-Nomological vs. Statistical Explanation, in Minnesota Studies in the Philosophy of Science, Vol. III, Minnesota, 1962.

[23] P. Humphreys, Why Propensities Cannot be Probabilities, Phil. Review, 1985.

[24] J. Joyce, Accuracy and Coherence: Prospects for an Alethic Epistemology of Partial Belief, in F. Huber and C. Schmidt-Petri (eds.), Degrees of Belief, 2009.

[25] J. Joyce, A Nonpragmatic Vindication of Probabilism, Philosophy of Science, 1998.

[26] M. Kaplan, Decision Theory as Philosophy, OUP, 1996.

[27] J.M. Keynes, A Treatise on Probability, MacMillan, 1921.

[28] N. Kolodny, How Does Coherence Matter?, Proc. of the Aristotelian Society, 2007.

[29] B. Koopman, The axioms and algebra of intuitive probability, Annals of Mathematics, 1940.

[30] H. Kyburg, Probability and the Logic of Belief, Wesleyan, 1961.

[31] H. Kyburg, Conjunctivitis, in Induction, Acceptance & Rational Belief, Reidel, 1970.

[32] L. Laudan, A Confutation of Convergent Realism, in Scientific Realism, UCP, 1984.

[33] H. Leitgeb, Reducing Belief Simpliciter to Degrees of Belief, manuscript, 2011.

[34] D. Lewis, A Subjectivist’s Guide to Objective Chance, in Studies in Inductive Logic and Probability, Vol II., UCP, 1980.

[35] D. Lewis, Immodest Inductive Methods, Philosophy of Science, 1971.

[36] C. List, The theory of judgment aggregation: An introductory review, Synthese, forthcoming.

[37] B. Loewer, David Lewis’s Humean Theory of Objective Chance, Phil. Sci, 2004.

[38] P. Maher, Betting on Theories, CUP, 1993.

[39] P. Maher, Joyce’s Argument for Probabilism, Philosophy of Science, 2002.

[40] D. Makinson, The Paradox of the Preface, Analysis, 1965.
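As a supplement to the toy incoherent agent discussed above (b(P) = 0.2, b(¬P) = 0.7), the following Python sketch locates the probabilistic credal sets that strictly δ2-dominate S. It is an illustration under stated assumptions: candidates have the form ⟨t, 1 − t⟩ with t on a grid of step 1/1000, and squared Euclidean distance is used in place of δ2 because it orders credal sets in the same way while keeping the arithmetic exact. The names are hypothetical.

```python
from fractions import Fraction as F

# The toy incoherent agent S: b(P) = 0.2, b(not-P) = 0.7.
b = (F(2, 10), F(7, 10))
WORLDS = [(1, 0), (0, 1)]  # P true, P false

def sq_dist(x, v):
    """Squared Euclidean distance; it ranks credal sets exactly as delta2 does."""
    return sum((a - c) ** 2 for a, c in zip(x, v))

def strictly_dominates(x, y):
    """x is strictly closer to vindication than y at every world."""
    return all(sq_dist(x, v) < sq_dist(y, v) for v in WORLDS)

# Probabilistic candidates <t, 1 - t>, with t on a grid of step 1/1000 (an arbitrary choice).
dominators = [
    F(i, 1000)
    for i in range(1001)
    if strictly_dominates((F(i, 1000), 1 - F(i, 1000)), b)
]

print(float(min(dominators)), float(max(dominators)))
print(all(t > F(2, 10) for t in dominators))
```

On this grid every dominating candidate assigns P a credence strictly above 0.2, which matches the bracketed note in the text: an agent whose evidence requires b(P) ≤ 0.2 cannot move to any of the probabilistic credal sets that δ2-dominate her.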