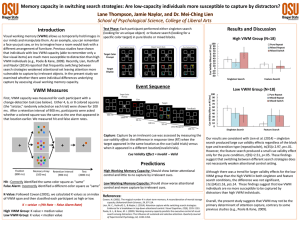

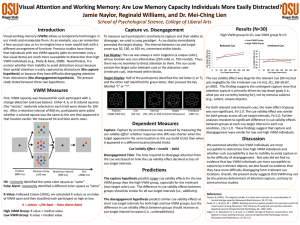

2 Visual memory for features, conjunctions, objects, and locations
